We just shipped our 150th public release of curl. On December 2, 2015.

curl 7.46.0

One hundred and fifty public releases done during almost 18 years makes a little more than 8 releases per year on average. In mid November 2015 we also surpassed 20,000 commits in the git source code repository.

With the constant and never-ending release train concept of just another release every 8 weeks that we’re using, no release is ever the grand big next release with lots of bells and whistles. Instead we just add a bunch of things, fix a bunch of bugs, release and then loop. With no fanfare and without any press-stopping marketing events.

So, instead of just looking at what went into this last release, which you can check out yourself in our changelog, I wanted to look back at the last two years and take a moment to show you what we have done in this period. curl and libcurl are the sort of tool and library that people use for a long time, and a large number of users have versions installed that are far older than two years. So now I’d like to tease you and tell you what can be yours if you take the step straight into modern-day curl or libcurl.

Thanks

Before we dive into the real contents, let’s not fool ourselves and think that we managed these years and all these changes without the tireless efforts and contributions from hundreds of awesome hackers. Thank you everyone! I keep calling myself lead developer of curl but it truly would not exist without all the help I get.

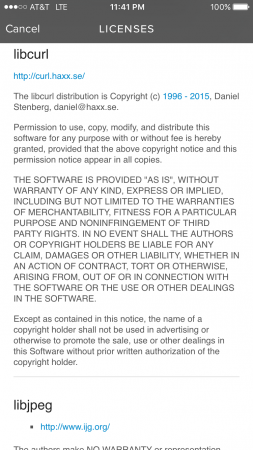

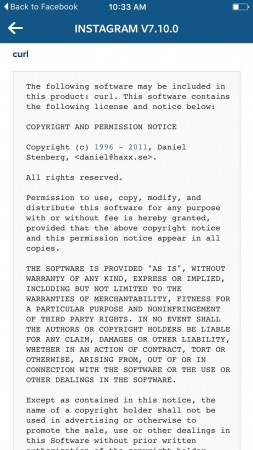

We keep getting a steady stream of new contributors and quality patches. Our problem is rather to receive and review the contributions in a timely manner. On a personal note, I would also like to add that during these last two years I’ve had support from my awesome employer Mozilla, which allows me to spend a part of my work hours on curl.

What happened the last 2 years in curl?

We released curl and libcurl 7.34.0 on December 17th 2013 (12 releases ago). What did we do since then that could be worth mentioning? Well, a lot, even though I’m going to mostly skip the almost 900 bug fixes we did in this time.

Many security fixes

Almost half (18 out of 37) of the security vulnerabilities reported for our project were reported during the last two years. It may suggest a greater focus and more attention put on those details by users and developers. Security reports are a good thing: they mean that we find and address problems. Yes, it unfortunately also shows that we introduce security issues at times, but I consider that secondary, even if we of course also work on ways to make sure we’ll do this less in the future.

URL specific options: --next

A pretty major feature that was added to the command line tool without many bells or whistles. You can now add --next as a separator on the command line to “group” options for specific URLs. This allows you to run multiple different requests on URLs that still can re-use the same connection and so on. It opens up for lots more fun and creative uses of curl and has in fact been requested on and off for the project’s entire lifetime!
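
As a minimal sketch of how a script might put such a command line together, the Python helper below joins per-URL option groups with `--next`. The function name and the example.com URLs are made up for illustration, and the code only builds the argument list; it does not run curl.

```python
def build_curl_args(groups):
    """Join per-URL option groups into one curl argument list.

    Each group is a list of options plus a URL. A `--next` separator is
    placed between groups so that options apply only to their own URL.
    """
    args = ["curl"]
    for index, group in enumerate(groups):
        if index > 0:
            args.append("--next")  # start a fresh option group
        args.extend(group)
    return args


# Hypothetical example: a HEAD request to one URL, then a POST to another.
cmd = build_curl_args([
    ["--head", "https://example.com/"],
    ["--data", "name=curl", "https://example.com/form"],
])
print(" ".join(cmd))
```

The resulting list could be handed to something like `subprocess.run(cmd)` on a machine with a curl new enough to know `--next`.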

HTTP/2

There’s a new protocol version in town and during the last two years it was finalized and its RFC went public. curl and libcurl support HTTP/2, although you still need to explicitly ask for it to be used.

HTTP/2 is binary, multiplexed, uses compressed headers and offers server push. Since the command line tool is still serially sending and receiving data, the multiplexing and server push features can right now only get fully utilized by applications that use libcurl directly.

HTTP/2 in curl is powered by the nghttp2 library and it requires a fairly new TLS library that supports the ALPN extension to be fully usable for HTTPS. Since the browsers only support HTTP/2 over HTTPS, most HTTP/2 in the wild so far is done over HTTPS.

We’ve gradually implemented and provided more and more HTTP/2 features.

Separate proxy headers

For a very long time, there was no way to tell curl which custom headers to use when talking to a proxy and which to use when talking to the server. You’d just add a custom header to the request and it would be used for both. This was never good and we eventually made it possible to specify them separately, and then, after the security alert on the same thing, we made it the default behavior.
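
For illustration, here is a hedged sketch of what that separation can look like from a script that builds a curl command line. It assumes the command line tool's `--proxy-header` option for headers meant for the proxy, next to the usual `--header`; the helper name, proxy address, header values and URL are all invented.

```python
def build_proxied_request(url, proxy, server_headers, proxy_headers):
    """Build a curl argument list that keeps proxy and server headers apart."""
    args = ["curl", "--proxy", proxy]
    for header in proxy_headers:
        # Header intended for the proxy
        args += ["--proxy-header", header]
    for header in server_headers:
        # Header intended for the destination server
        args += ["--header", header]
    args.append(url)
    return args


# Hypothetical values, for illustration only.
proxied = build_proxied_request(
    "https://example.com/",
    "http://proxy.example.com:3128",
    server_headers=["X-App-Token: secret"],
    proxy_headers=["X-Proxy-Team: build"],
)
```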

Option man pages

We’ve had two user surveys, as we now try to make it an annual spring tradition for the project, to learn what people use, what people think, what people miss etc. Both surveys have told us users think our documentation needs improvement and there has since been an extra push towards improving the documentation to make it more accessible and more readable.

One way to do that has been to introduce separate, stand-alone versions of man pages for each and every libcurl option, that is, for the options to the functions curl_easy_setopt, curl_multi_setopt and curl_easy_getinfo. Right now, that means 278 new man pages that are easier to link directly to and easier to search for with Google etc, and they are now written with more text and more details for each individual option. In total, we now host and maintain 351 individual man pages.

The boringssl / libressl saga

The Heartbleed incident of April 2014 was a direct reason for libressl being created as a new fork of OpenSSL and I believe it also helped BoringSSL to find even more motivation for its existence.

Subsequently, libcurl can be built to use any one of these three libraries that share the same origin. This is however not accomplished without some amount of agony.

SSLv3 is also disabled by default

The continued stream of problems detected in SSLv3 finally got it disabled by default in curl (together with SSLv2, which has been disabled by default for a while already). Now users need to explicitly ask for it in case they need it, and in some cases the TLS libraries do not even support these versions anymore. You may need to build your own binary to get the support back.

Everyone should move up to TLS 1.2 as soon as possible. HTTP/2 also requires TLS 1.2 or later when used over HTTPS.

support for the SMB/CIFS protocol

For the first time in many years we’ve introduced support for a new protocol, using the SMB:// and SMBS:// schemes. Maybe not the most requested feature out there, but it is another network protocol for transfers…

code of conduct

Triggered by several bad examples in other projects, we merged a code of conduct document into our source tree without much of a discussion, because this is the way this project has always worked. This just makes it clear to newbies and outsiders in case there would ever be any doubt. Plus it offers a clear text saying what’s acceptable or not in case we’d ever come to a point where that’s needed. We’ve never needed it so far in the project’s very long history.

--data-raw

Just a tiny change, but more a symbol of the many small changes and advances we continue doing. The --data option that is used to specify what to POST to a server can take a leading ‘@’ symbol and then a file name, but that also makes it tricky to actually send a literal ‘@’, and it forces scripts etc to make sure one doesn’t slip in.

--data-raw was introduced to only accept a string to send, without any ability to read from a file and not using ‘@’ for anything. If you include a ‘@’ in that string, it will be sent verbatim.
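
To make the difference concrete, here is a small sketch in Python that models the rule described above and picks the option a script would want for arbitrary text. The helper names and the URL are hypothetical, and the model only covers the leading ‘@’ case mentioned here.

```python
def reads_from_file(option, value):
    """Model of the rule above: only --data treats a leading '@' as a file name."""
    return option == "--data" and value.startswith("@")


def post_literal(body, url):
    """Build curl arguments that send `body` exactly as given."""
    # --data-raw does not use '@' for anything, so no checking is needed
    return ["curl", "--data-raw", body, url]


# Hypothetical body and URL, for illustration only.
post_cmd = post_literal("@daniel says hi", "https://example.com/post")
```

With plain --data, a script would first have to check `reads_from_file("--data", body)` and work around a leading ‘@’ itself.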

attempting VTLS as a lib

We support eleven different TLS libraries in the curl project – that is probably more than all other transfer libraries in existence do. The way we do this is by providing an internal API for TLS backends, and we call that ‘vtls’.

In 2015 we made an effort to turn that into its own sub project to allow other open source projects and tools to use it. We managed to find a few hackers from the wget project who were also interested in participating. Unfortunately I didn’t feel I could put enough effort or time into it to drive it forward much, and while there was some initial work done by others, it soon was obvious it wouldn’t go anywhere and we pulled the plug.

The internal vtls glue remains fine though!

pull-requests on github

Not really a change in the code itself but still a change within the project. In March 2015 we changed our policy regarding pull-requests done on github. The effect has been a huge increase in the number of pull-requests and a slight shift in activity away from the mailing list and over to github. I think it has made it easier for casual contributors to send enhancements to the project but I don’t have any hard facts backing this up (and I wouldn’t know how to measure this).

… as mentioned in the beginning, there have also been hundreds of smaller changes and bug fixes. What fun will you help us make reality in the next two years?
