Readers of my blog and friends in general know that I’m not really a Windows guy. I never use it and I never develop things explicitly for windows – but I do my best in making sure my portable code also builds and runs on windows. This blog post is about a new detail that I’ve just learned and that I think I could help shed the light on, to help my fellow hackers. The other day I was contacted by a user of libcurl because he was using it on Windows and he noticed that when wanting to transfer data from the loopback device (where he had a service of his own), and he accessed it using “localhost” in the URL passed to libcurl, he would spot a DNS request for the address of that host name while when he used regular windows tools he would not see that! After some mails back and forth, the details got clear:

Windows has a default /etc/hosts version (conveniently instead put at “c:\WINDOWS\system32\drivers\etc\hosts”) and that default  /etc/hosts alternative used to have an entry for “localhost” in it that would point to 127.0.0.1.

When Windows 7 was released, Microsoft had removed the localhost entry from the /etc/hosts file. Reading sources on the net, it might be related to them supporting IPv6 for real but it’s not at all clear what the connection between those two actions would be.

getaddrinfo() in Windows has since then, and it is unclear exactly at which point in time it started to do this, been made to know about the specific string “localhost” and is documented to always return “all loopback addresses on the local computer”.

So, a custom resolver such as c-ares that doesn’t use Windows’ functions to resolve names but does it all by itself, that has been made to look in the /etc/host file etc now suddenly no longer finds “localhost” in a local file but ends up asking the DNS server for info about it… A case that is far from ideal. Most servers won’t have an entry for it and others might simply provide the wrong address.

I think we’ll have to give in and provide this hack in c-ares as well, just the way Windows itself does.

Oh, and as a bonus there’s even an additional hack mentioned in the getaddrinfo docs: On Windows Server 2003 and later if the pNodeName parameter points to a string equal to “..localmachine”, all registered addresses on the local computer are returned.

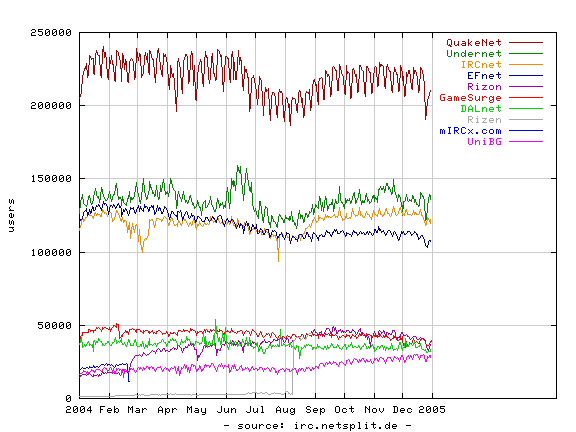

Thirteen years ago I released the first version of

Thirteen years ago I released the first version of