Okay, so I’ll delve a bit deeper into the libcurl internals than usual here. Beware of low-level talk!

There’s a never-ending stream of things to polish and improve in a software project, and curl is no exception. Let me tell you what I stumbled over and worked on the other day.

Smaller than what holds Linux

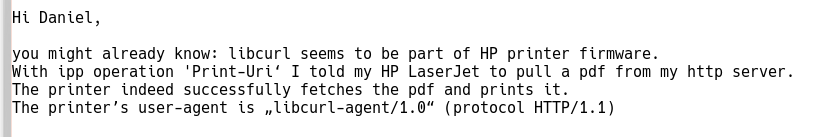

We have users who are running curl on tiny devices, often put under the label of Internet of Things, IoT. These small systems typically have maybe a megabyte or two of RAM and flash and are often too small to even run Linux. They typically run one of the many different RTOS flavors instead.

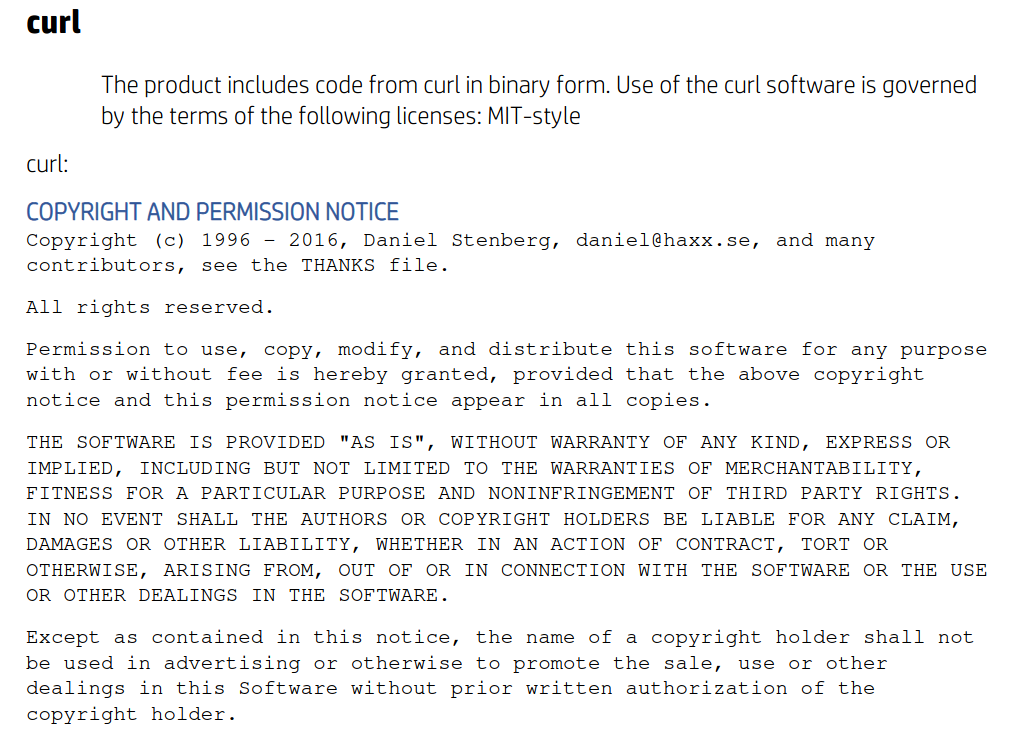

It is with these users in mind that I’ve worked on the tiny-curl effort: to make curl a viable alternative even there. And believe me, the world of RTOSes and IoT is literally filled with really low quality and half-baked HTTP client implementations. They are often very small, but equally often they contain really horrible shortcuts or protocol misunderstandings.

Going with curl in your IoT device means going with decades of experience and reliability. But for libcurl to be an option for many IoT devices, a libcurl build has to be able to get really small, both in its footprint on storage and in the amount of dynamic memory it needs while executing.

Being feature-packed and attractive for the high-end users and yet at the same time being able to get really small for the low-end is a challenge. And who doesn’t like a good challenge?

Reduce reduce reduce

I’ve set myself on a quest to make it possible to build libcurl smaller than before and to use less dynamic memory. The first tiny-curl releases were only the beginning, and even then I aimed for libcurl plus a TLS library to fit within 100K of storage. I believe that goal was met, but I also think there’s more to gain.

I will make tiny-curl smaller and use less memory by making sure that when we disable parts of the library, or specific features and protocols, at build-time, they no longer affect storage or dynamic memory sizes, as far as possible. Tiny-curl is a good step in this direction but the job isn’t done yet: there’s more “dead meat” to carve off.

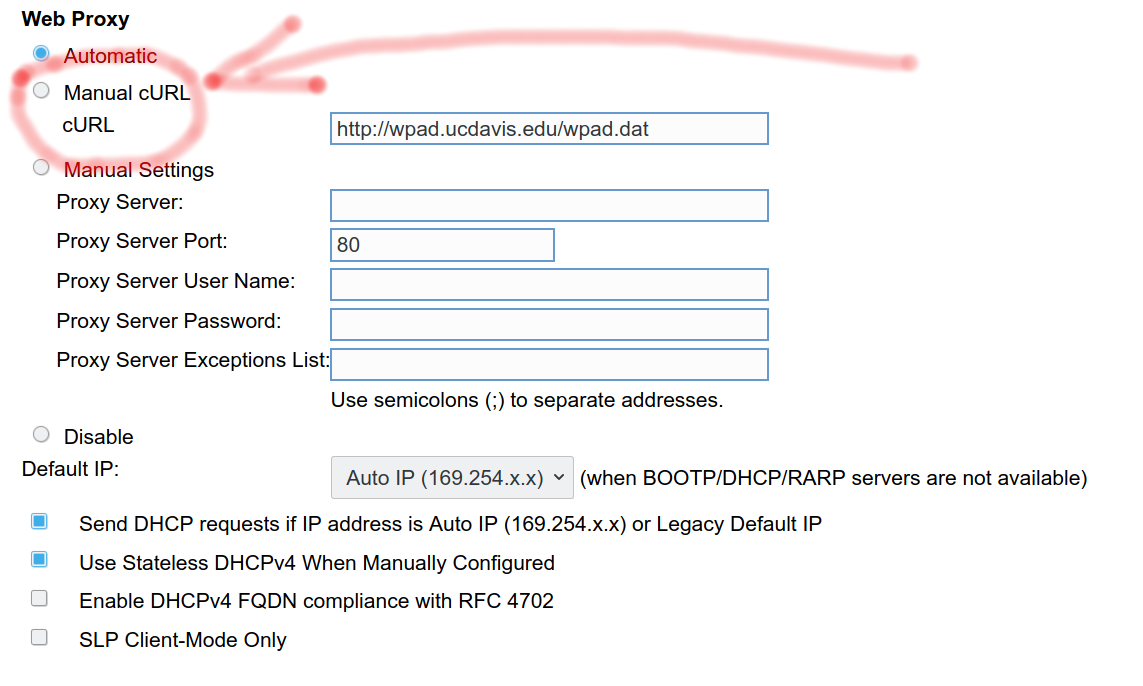

One example is my current work (PR #5466) on making sure far fewer proxy remnants are left when libcurl is built without proxy support. This makes it smaller on disk and also makes it use less dynamic memory.

To decrease the maximum amount of allocated memory for a typical transfer, and in fact for all kinds of transfers, we’ve just switched to a model with on-demand download buffer allocations (PR #5472). Previously, the download buffer for a transfer was allocated at the same time as the handle (in the curl_easy_init call) and kept allocated until the handle was cleaned up again (with curl_easy_cleanup). Now, we instead lazy-allocate it only when the transfer starts, and we free it again immediately when the transfer is over.

It has several benefits. For starters, the previous initial allocation would always first allocate the buffer using the default size, and the user could then set a smaller size that would realloc a new smaller buffer. That double allocation was of course unfortunate, especially on systems that really do want to avoid mallocs and want a minimum buffer size.

The “price” of handling many handles drastically went down, as only transfers that are actively in progress will actually have a receive buffer allocated.
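To make the lazy-allocation idea concrete, here is a minimal sketch in C. It is not libcurl’s actual code: `struct handle`, `handle_init`, `handle_set_buffer_size`, `transfer_start`, `transfer_done` and `handle_cleanup` are all hypothetical names made up for illustration. The point is only that creating a handle and changing its buffer size are pure bookkeeping, and that the single allocation happens when a transfer starts.

```c
#include <assert.h>
#include <stddef.h>
#include <stdlib.h>

/* Hypothetical stand-in for a transfer handle; not libcurl's real struct. */
struct handle {
  size_t buffer_size; /* configured receive buffer size */
  char *buffer;       /* NULL unless a transfer is in progress */
};

#define DEFAULT_BUFFER_SIZE (16 * 1024)

/* Creating a handle records the default size but allocates no buffer. */
static struct handle *handle_init(void)
{
  struct handle *h = calloc(1, sizeof(*h));
  if(h)
    h->buffer_size = DEFAULT_BUFFER_SIZE;
  return h;
}

/* Changing the size is just bookkeeping while no transfer is running. */
static void handle_set_buffer_size(struct handle *h, size_t size)
{
  assert(!h->buffer); /* this sketch only resizes between transfers */
  h->buffer_size = size;
}

/* Allocate once, with the final size. Returns 0 on success, -1 on failure. */
static int transfer_start(struct handle *h)
{
  assert(!h->buffer);
  h->buffer = malloc(h->buffer_size);
  return h->buffer ? 0 : -1;
}

/* Release the buffer as soon as the transfer is over. */
static void transfer_done(struct handle *h)
{
  free(h->buffer);
  h->buffer = NULL;
}

static void handle_cleanup(struct handle *h)
{
  if(h) {
    free(h->buffer);
    free(h);
  }
}
```

In this sketch a handle that is created, given a 1K buffer size and then left idle never holds a receive buffer at all, and a handle that does run a transfer allocates the buffer exactly once at the size it ends up using.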

A positive side-effect of this refactor is that we could also make sure the internal “closure handle” doesn’t use any buffer allocation at all. That’s the “spare” handle we create internally to associate certain connections with when there are no user-provided handles left but we still need to, for example, close down an FTP connection, since that involves a command/response procedure.

Downsides? It means a slight increase in the number of allocations and frees of dynamic memory when doing new transfers. We do however deem this a sensible trade-off.

Numbers

I always hesitate to bring up numbers since they vary so much depending on your particular setup, build, platform and more. But okay, with that said, let’s take a look at the numbers I could generate on my dev machine, a now rather dated x86-64 machine running Linux.

For measurement, I perform a standard single transfer getting an 8GB file from http://localhost, with the output written to /dev/null:

curl -s http://localhost/8GB -o /dev/null

With all the memory calls instrumented, my script counts the number of memory alloc/realloc/free/etc calls made as well as the maximum total memory allocation used.
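The instrumentation itself isn’t shown here, so the following is only a sketch of the general technique, not the script or hooks actually used for these measurements. It wraps malloc and free so that every call is counted and the peak number of live bytes is tracked. The names `mem_stats`, `counted_malloc` and `counted_free` are made up for this example. libcurl does let an application supply its own memory callbacks through curl_global_init_mem, and the two functions below have the shape of its malloc and free callbacks, but that is offered as context rather than as a description of how the numbers were produced.

```c
#include <assert.h>
#include <stddef.h>
#include <stdlib.h>

/* Hypothetical counters; the names are illustrative only. */
struct mem_stats {
  size_t calls;   /* number of malloc/free calls seen */
  size_t current; /* bytes currently allocated */
  size_t peak;    /* highest value 'current' has reached */
};

static struct mem_stats stats;

/* Each block is prefixed with its size so the free wrapper can subtract it.
   The extra union members pad the header to a conservative alignment. */
union header {
  size_t size;
  long double align_ld;
  void *align_ptr;
};

static void *counted_malloc(size_t size)
{
  union header *hdr = malloc(sizeof(*hdr) + size);
  stats.calls++;
  if(!hdr)
    return NULL;
  hdr->size = size;
  stats.current += size;
  if(stats.current > stats.peak)
    stats.peak = stats.current;
  return hdr + 1; /* the caller's memory starts right after the header */
}

static void counted_free(void *ptr)
{
  stats.calls++;
  if(ptr) {
    union header *hdr = (union header *)ptr - 1;
    assert(stats.current >= hdr->size);
    stats.current -= hdr->size;
    free(hdr);
  }
}
```

After a run, `stats.peak` corresponds to the kind of “maximum total memory allocation” figure discussed here, and `stats.calls` to the call count. A fuller version would wrap realloc, calloc and strdup in the same way.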

The curl tool itself sets the download buffer size to a “whopping” 100K buffer (as it actually makes a difference to users doing for example transfers from localhost or other really high bandwidth setups or when doing SFTP over high-latency links). libcurl is more conservative and defaults it to 16K.

This command line of course creates a single easy handle and makes a single HTTP transfer without any redirects.

Before the lazy-alloc change, this operation would peak at 168978 bytes allocated. As you can see, the 100K receive buffer is a significant share of the memory used.

After the alloc work, the exact same transfer instead ended up using 136188 bytes.

102,400 bytes of that is the receive buffer, meaning we reduced the amount of “extra” allocated data from 66578 bytes to roughly 33,800, a reduction of 49%.
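The arithmetic can be redone from the two peak figures and the 102,400-byte buffer. The small C helpers below are a sketch for checking it. The function names are made up for this example, and the only inputs are the numbers quoted in the text.

```c
#include <assert.h>
#include <stddef.h>

/* Bytes allocated beyond the receive buffer at the peak. */
static size_t overhead(size_t peak, size_t buffer)
{
  assert(peak >= buffer);
  return peak - buffer;
}

/* Percentage reduction from 'before' to 'after', rounded to nearest. */
static unsigned reduction_percent(size_t before, size_t after)
{
  assert(before > 0 && after <= before);
  return (unsigned)(((before - after) * 100 + before / 2) / before);
}
```

With the peaks above, `overhead(168978, 102400)` is 66578 and `overhead(136188, 102400)` is 33788, and `reduction_percent` on those two values gives 49.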

Even tinier tiny-curl: in a feature-stripped tiny-curl build that does HTTPS GET only with a mere 1K receive buffer, the total maximum amount of dynamically allocated memory is now below 25K.

Caveats

The numbers mentioned above only count allocations done by curl code. They do not include memory used by system calls or, when used, third-party libraries.

Landed

The changes mentioned in this blog post have landed in the master branch and will ship in the next release: curl 7.71.0.