The thing about me being a BDFL for curl is that it has the D in there. I have the means and ability to push for or veto just about anything I like or don’t like in the project, should I decide to. In my public presentations about curl I emphasize that I truly try to be a benevolent dictator, but I presume quite a few dictators would say and believe the same about themselves, whether or not it looks that way to the outside world.

I think we can say with some certainty that dictatorships are not the ideal way of running a country, and the same might go for Open Source projects.

In curl we keep using this model because it works, and changing it to something else would be a large and complicated process that we have not wanted to start because we have not had any strong reason to. There is anecdotal evidence that this way of running the project works somewhat.

A significant difference between being a dictator of an Open Source project and of a country, however, is the ease with which every citizen could just leave one day and start a new clone country, with all the same citizens and the same layout, just without the dictator. I’m easily replaced and made into past tense if I abuse my role.

So there is this inherent force to push me to do good for the project even if I am a “dictator”.

As a BDFL of curl…

This is what I think the curl project should focus on. What I want the curl project to be. These are the ten commandments I think should remain our guiding principles. I think this is what makes curl. My benevolent guidelines.

My ten guiding principles for curl

- Be open and friendly

- Ship rock-solid products

- Be a leader in Open Source

- Maintain a security first focus

- Provide top-notch documentation

- Remain independent

- Respond timely

- Keep up with the world

- Stay on the bleeding edge

- Respect feedback

The ten areas on this list are perhaps things every open source project wants to focus on and excel in, but I think that is irrelevant here. These are the ten key and core focus points for me when I work on curl. We should be best-in-class in each and every one of them.

Let me elaborate

1. Be open and friendly to all contributors, new and old

I think open source projects have a lot to gain by making an effort to be friendly and approachable. We were all newcomers to a project once. We gain more contributors, and better ones, by remaining a friendly and open project.

This does not mean that we should tolerate abuse or trolling.

I try to lead this by example. I do not always succeed.

2. Ship rock-solid products for the universe to depend upon

Reviews, tests, analyzers, fuzzers, audits, bug bounty programs and so on are means to make sure the code runs smoothly everywhere. Studying protocol specs and inter-operating with servers and other clients on the Internet ensures that the products we make work as expected by billions of end users. On this planet and beyond. If our products cannot carry the world on their shoulders, we fail.

Rock-solid also means we are reliable over time – we do not break users’ scripts and applications. We maintain ABI/API compatibility. The command line options the curl tool introduces are supported until the end of time.

3. Be a leader in Open Source, follow every best practice

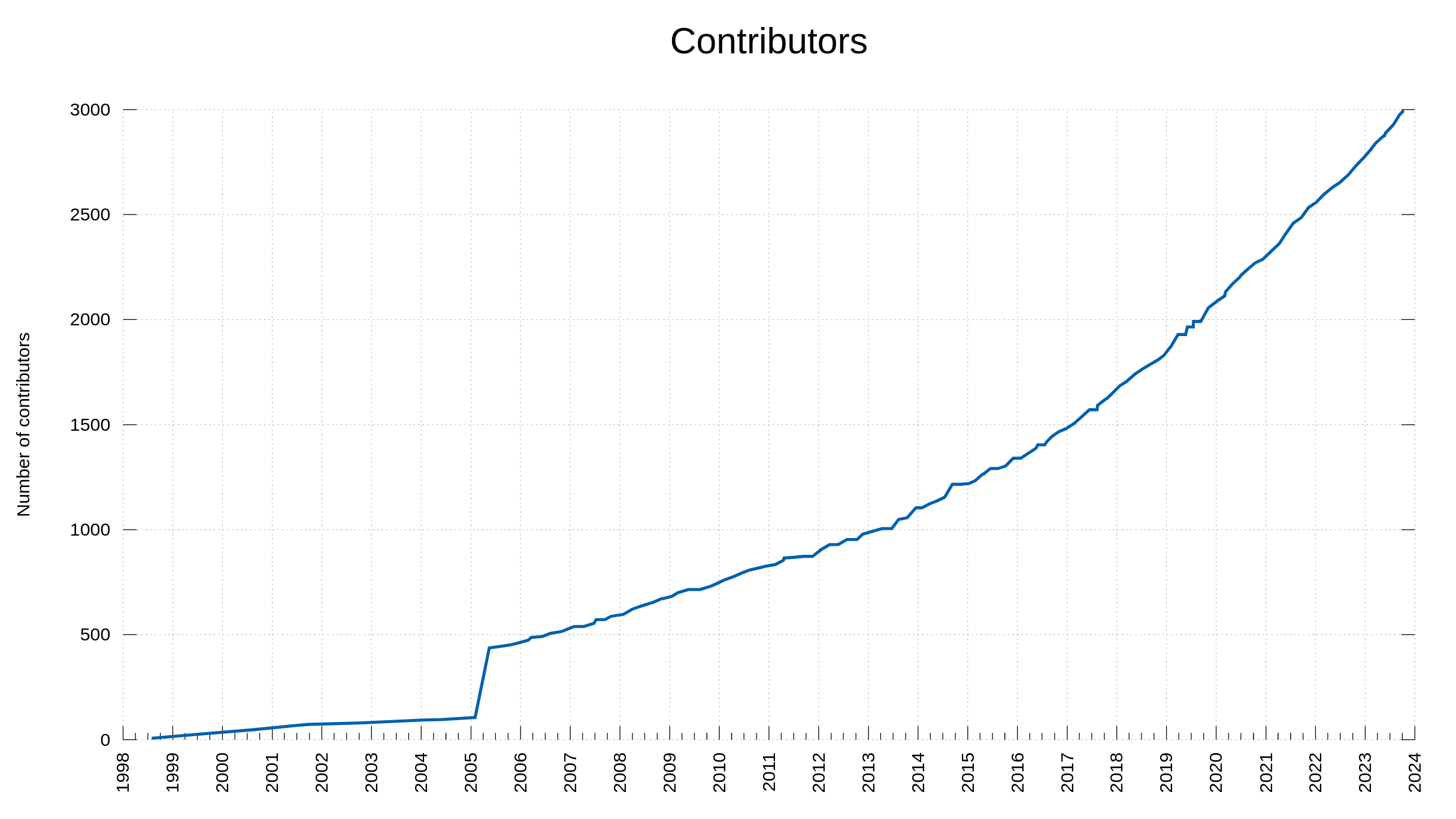

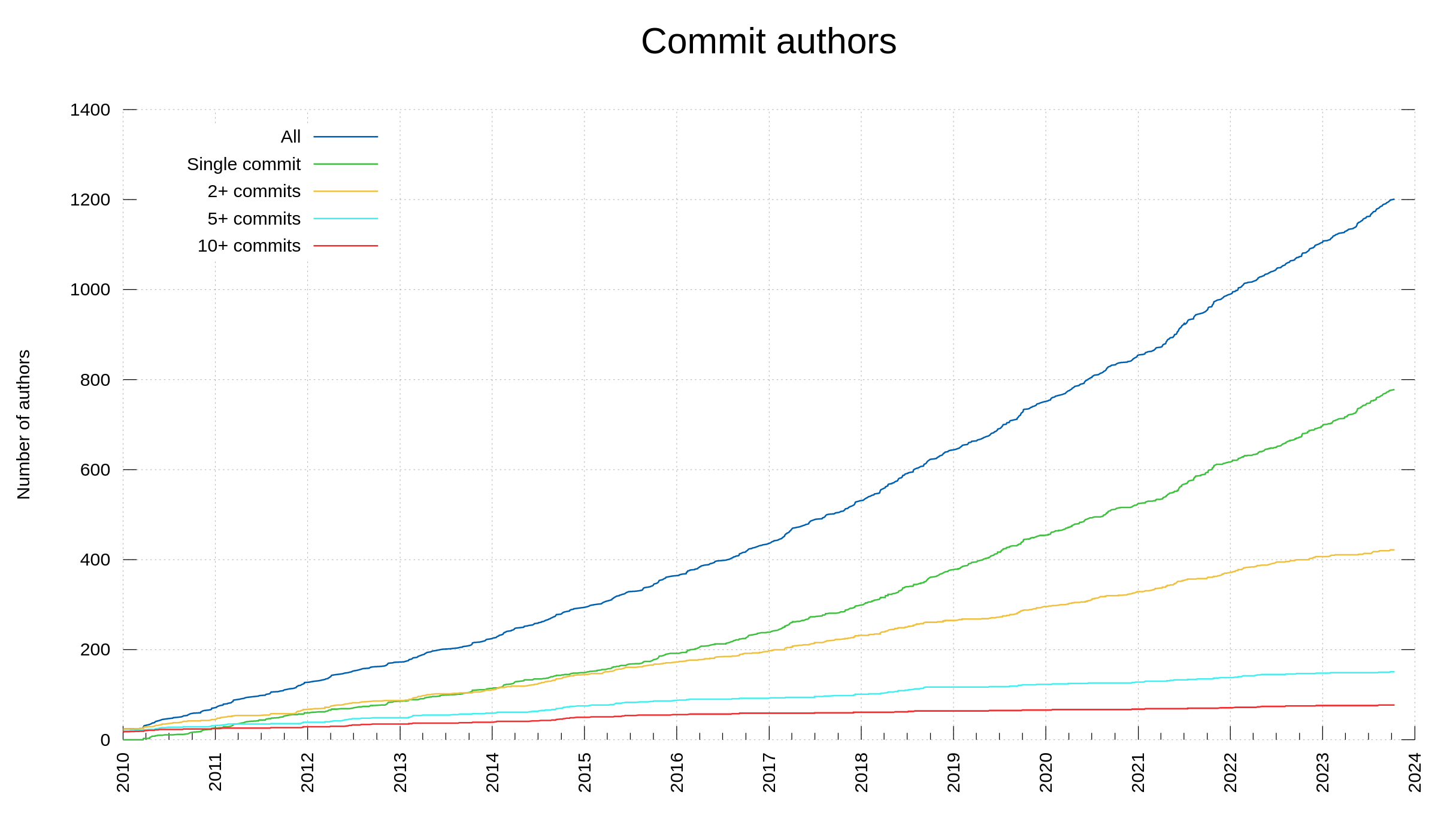

As true believers in the powers of Open Source we lead by example. We are here to show that you not only can do all development and everything in the open and using open source practices, but that it also makes the project thrive and deliver state of the art outcomes.

4. Always keep users secure, maintain a security first focus

Provide features and functionality with user and protocol security in focus. Address security concerns and reports without delays. Document every past mistake in thorough detail. Help users do secure and safe Internet transfers – by default.

5. Provide industry-leading quality documentation

Documentation is key to successful usage of our products. To give users the means and ability to use our project fully, we need to document how it works and how to use it. Everything needs to be documented with enough clarity and detail that users understand and are empowered.

No comparable software project, open or proprietary, can compete with the quality and amount of documentation we provide.

6. Roam free, independent from all companies and organizations

curl shall forever remain independent. curl is not part of any umbrella organization and it is not owned or controlled by any company. This makes us entirely independent and free to do what we think is best for the community, for our users and for Internet transfers in general. Its license shall remain set, which ensures that curl remains free. Copyright holders are individuals; there is no assignment or licensing of copyrights involved.

7. Respond timely on issues and questions

We shall strive to respond to issues, reports and questions sent to the project within a reasonable time. To show that we care and to help users solve their problems. We want users to solve their problems sooner rather than later.

It does not mean that we always can fix the problems or give a good answer immediately. Sometimes we just have to say that we can’t fix it for now.

8. Remain the internet transfer choice, keep up with the world

In the curl project we should keep up with protocol development, updates and changes. The way we do Internet transfers changes over time and curl needs to keep up to remain relevant.

We also need to write our protocol implementations sensibly, knowing that we are being watched and that our way of doing things is often copied, referred to and relied upon by other Internet clients.

This also implies that we are never done and that we can always improve. In every aspect of the project.

9. Offer bleeding edge protocol support to aid early adopters

When new protocols or ways to do protocols are introduced to the world, curl can play a great role in offering early support for them. Through the years this has helped countless other implementers, and even the authors of these protocols, and in doing so we help improve the world around us. It also helps us get early feedback on our implementation and thus ship better code earlier.

10. Listen to and respect community feedback

I might be a dictator, but this dictatorship would not work if I and the rest of the curl maintainers did not listen to what the users and the greater curl community have to say. We need to stay agile and have a sense of what people want our products to do and to not do. Now and in the future.

An open source project can always get forked the second it makes a bad turn and somehow gives up on, sells out or betrays its users. Me being a dictator does not protect us from that. We need to stay responsive, listening and caring. We are here for our users.

Flag?

If curl was an evil empire, I figure we would sport this flag: