Welcome to one of the more feature-packed curl releases we have had in a while. It ships exactly eight weeks after 8.15.0.

Release presentation

Numbers

the 270th release

17 changes

56 days (total: 10,036)

260 bugfixes (total: 12,538)

453 commits (total: 36,025)

2 new public libcurl functions (total: 98)

0 new curl_easy_setopt() options (total: 308)

3 new curl command line options (total: 272)

76 contributors, 39 new (total: 3,499)

32 authors, 17 new (total: 1,410)

2 security fixes (total: 169)

Security

We publish two severity-low vulnerabilities in sync with this release:

- CVE-2025-9086 identifies a bug in the cookie path handler that can make curl get confused and override a secure cookie with a non-secure one using the same name, if the planets all happen to align correctly.

- CVE-2025-10148 points out a mistake in the WebSocket implementation that prevents curl from updating the frame mask for each new outgoing frame, as it is supposed to do.

Changes

We have a long range of changes this time:

- curl gets a --follow option

- curl gets an --out-null option

- curl gets a --parallel-max-host option to limit concurrent connections per host

- --retry-delay and --retry-max-time accept decimal seconds

- curl gets support for --longopt=value

- curl -w now supports %time{}

- now libcurl caches negative name resolves

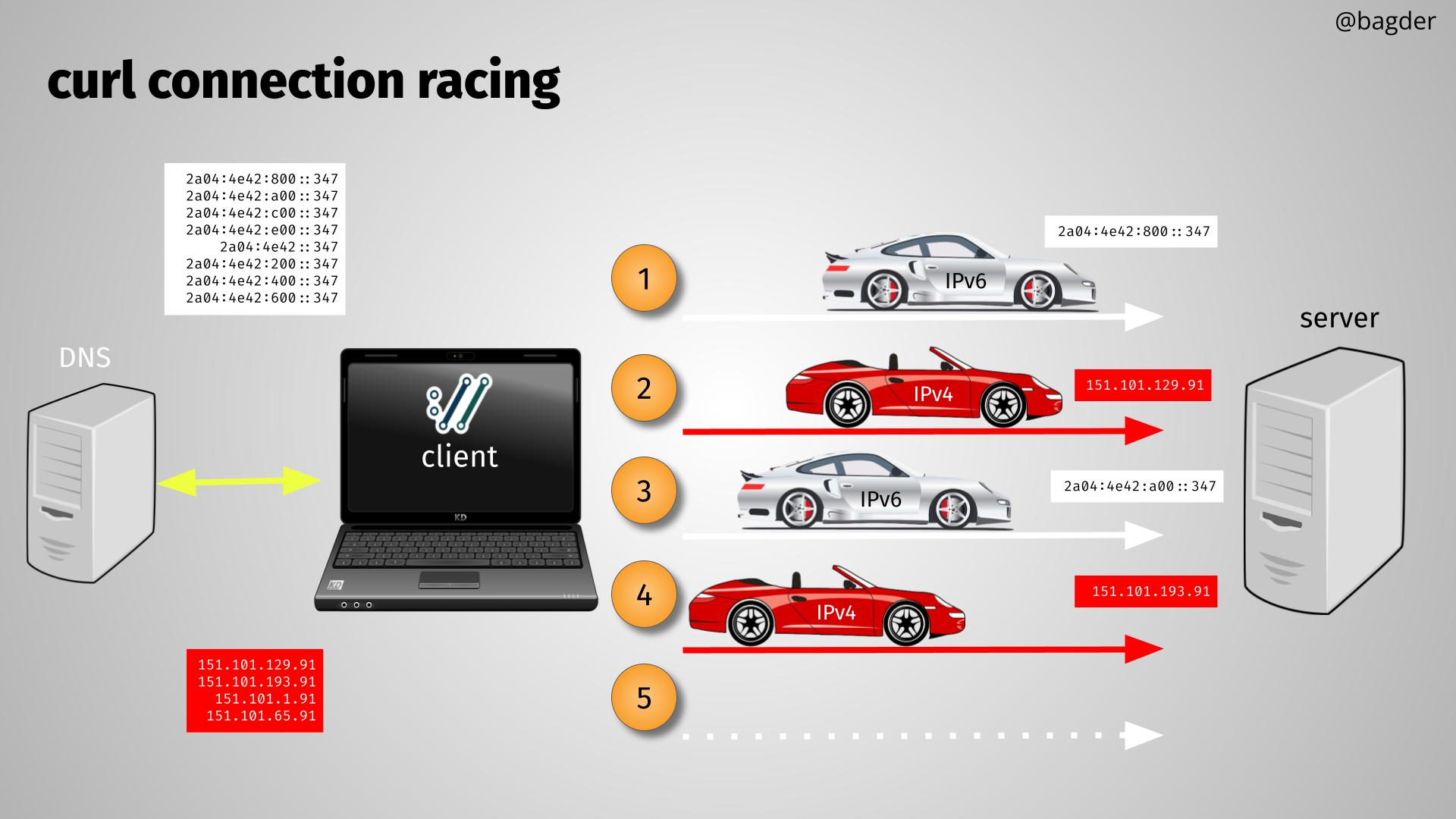

- ip happy eyeballing: keep attempts running

- bump minimum mbedtls version required to 3.2.0

- add curl_multi_get_offt() for getting multi related information

- add CURLMOPT_NETWORK_CHANGED to signal network changed to libcurl

- use the NETRC environment variable (first) if set

- bump minimum required mingw-w64 to v3.0 (from v1.0)

- smtp: allow suffix behind a mail address for RFC 3461

- make default TLS version be minimum 1.2

- drop support for msh3

- support CURLOPT_READFUNCTION for WebSocket

Bugfixes

The official bugfix count surpassed 250 this cycle, and we have documented them all in the changelog, including links to most of the issues or pull requests where they originated.

See the release presentation for a walk-through of some of the more interesting ones.