On March 20th, 1998, curl 4 was released. It was the first curl release ever, even though it was already at version 4, since we kept the version numbering from the earlier incarnations of the project, which went under other names. It all started with the tool named httpget (an existing small tool written by Rafael Sagula), which soon changed its name to urlget and finally ended up as curl – all the renames happening due to shifting features and focus.

Like many other projects, this started because of an itch. I wanted to get currency rates off the internet so that an IRC bot could provide an “exchange service” for users with accurate, up-to-date rates. I thought the existing projects I found all did too much or did the wrong thing. That bot and its service are long gone now.

curl has been a truly portable project from day 1, and the first Windows build was made as early as urlget 2.1 (pre-curl). Support for autoconf in the build process was added in October 1998.

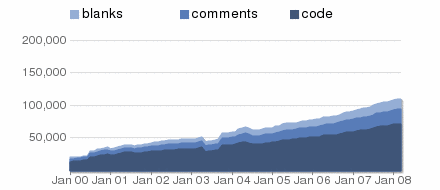

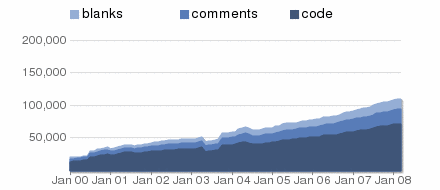

Unfortunately, I no longer have the original release 4 tarball; the closest one I have is curl 4.8 (dated August 31, 1998). curl 4.8 is about 3400 lines of code. Today we total well over 100K source lines, so it has grown more than 30 times!

I had no big plans for curl, nor did I think very much about the future of the project. I just added the features that I and my fellow contributors wanted at the moment. That’s actually pretty much how the project has continued to work. We don’t have many long-term plans for what to do with it; we mostly look just inches ahead of our noses and act accordingly.

During the version 6 period (Sep 1999 – Mar 2000) we learned that curl was getting popular, was useful and worked rather well, so the work on providing a library, libcurl, started. We wanted to offer other applications the ability to use curl’s file transfer powers. Version 7.1 was released in August 2000 and thus libcurl was officially born.

curl and libcurl have remained a rather low-key project. I just work on it in my spare time, and there are no full-time developers paid to work on this project – apart from occasional sub-projects that have been sponsored by companies and organizations. (See later on for an example.)

Slowly but surely, more and more people started using libcurl and contributed bug reports and patches. When the project turned five years old in 2003, I collected the names of all contributors so far and reached the number 270. I found the number very high, and I was mostly kidding when I said I hoped we would double it by the time we celebrated our tenth anniversary. Of course, we’ve more than doubled that number today: we have more than 620 named contributors so far, and we keep adding new ones with every release.

During this journey of a decade, I’ve remained the lead developer and project leader, but we’re now some 10 developers with commit access (who also use it). I try to be open and responsive in order to attract more developers to come aboard, to listen to their advice and ideas, and to be sensitive to what our users want from us.

In 2005 I was lucky enough to get a grant from the Swedish IIS organization for the purpose of developing a new event-based API for libcurl to better deal with very large numbers of connections, the problem so nicely called c10k.

In the days when our humble project turns 10, I spend about two hours of spare time per day on the project and it is my primary hobby. We make 5-6 releases per year, we get about 7000 unique visitors to the web site on a normal day, and about one million curl packages are downloaded per year from our servers.

Today, libcurl is feature-rich, portable, very widely used, very fast and well supported, and there are no signs of stagnation in release or development pace. In fact, looking at the source code over the last couple of years, we can see pretty stable and continuous growth.

Just as I never looked ahead and planned much for the future in the past, I don’t do that now either, so I really don’t know and can’t tell what the future will hold for us. We’ll just continue to develop the world’s best client-side file transfer library, to make it even more solid for the foreseeable future, and to make it do the things users and developers out there think it should do. Possibly that involves adding support for more protocols, removing some of the less popular ones or simply enhancing how we support the existing ones.

Join the mailing lists and join us for the next ten years to come!