I did a 25-minute talk in Swedish on the topic yesterday at foss-sthlm meetup #5, and the slides from my presentation are available below.

All posts by Daniel Stenberg

localhost hack on Windows

Readers of my blog and friends in general know that I’m not really a Windows guy. I never use it and I never develop things explicitly for Windows, but I do my best to make sure my portable code also builds and runs on Windows. This blog post is about a new detail that I’ve just learned and that I think I can help shed some light on, to help my fellow hackers.

The other day I was contacted by a libcurl user on Windows. He was transferring data from a service of his own on the loopback device, using “localhost” in the URL passed to libcurl, and he spotted a DNS request for the address of that host name, while regular Windows tools sent no such request! After some mails back and forth, the details became clear:

Windows has a default /etc/hosts equivalent (conveniently placed instead at “c:\WINDOWS\system32\drivers\etc\hosts”), and that file used to have an entry for “localhost” pointing to 127.0.0.1.

With Windows 7, Microsoft removed the localhost entry from the hosts file. Sources on the net suggest it might be related to them supporting IPv6 for real, but the connection between those two actions is not at all clear.
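A resolver that reads the hosts file by itself essentially scans it line by line: an address followed by one or more names, with `#` starting a comment. Below is a minimal Python sketch of such a lookup, assuming the usual hosts-file syntax; `lookup_hosts` is a hypothetical helper, not c-ares code. It shows why the missing entry matters: without a `localhost` line the lookup simply comes back empty, and a resolver that has no other source must then ask DNS.

```python
def lookup_hosts(hosts_text: str, name: str) -> list[str]:
    """Return every address that hosts-file text lists for `name`."""
    wanted = name.lower()  # host names compare case-insensitively
    addresses = []
    for line in hosts_text.splitlines():
        line = line.split("#", 1)[0].strip()  # drop comments and surrounding blanks
        fields = line.split()
        if len(fields) < 2:
            continue  # blank/comment-only line, or an address without names
        address, *names = fields
        if wanted in (n.lower() for n in names):
            addresses.append(address)
    return addresses


# An old-style file with a localhost entry, and one where it is commented out.
old_style = "127.0.0.1       localhost\n"
without_localhost = "# 127.0.0.1       localhost\n# ::1             localhost\n"

print(lookup_hosts(old_style, "localhost"))          # ['127.0.0.1']
print(lookup_hosts(without_localhost, "localhost"))  # []
```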

Since then (exactly when this started is unclear), getaddrinfo() in Windows has been made to know about the specific string “localhost”, and it is documented to always return “all loopback addresses on the local computer”.

So a custom resolver such as c-ares, which doesn’t use Windows’ functions to resolve names but does it all by itself, including looking in the hosts file, now suddenly no longer finds “localhost” in a local file and ends up asking the DNS server about it. That is far from ideal: most servers won’t have an entry for it, and others might simply provide the wrong address.

I think we’ll have to give in and provide this hack in c-ares as well, just the way Windows itself does.
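In rough terms, the hack means answering “localhost” before the hosts file or DNS is ever consulted. Here is a minimal Python sketch of that lookup order, not actual c-ares code: `resolve`, the `hosts` dictionary and the `dns_query` callable are hypothetical stand-ins, and the exact set and order of loopback addresses returned is an assumption.

```python
# Addresses returned for "localhost". Windows documents "all loopback
# addresses"; this particular pair and order is an assumption.
LOOPBACK = ["::1", "127.0.0.1"]


def resolve(name, hosts, dns_query):
    """Resolve `name`: the localhost hack first, then hosts entries, then DNS.

    `hosts` maps lower-cased names to address lists and `dns_query` is a
    callable standing in for a real DNS lookup; both are hypothetical.
    """
    key = name.lower()
    if key == "localhost":
        return list(LOOPBACK)  # answer locally, never ask a DNS server
    if key in hosts:
        return list(hosts[key])  # a hosts-file match also skips DNS
    return dns_query(name)  # only now does the name leave the machine


queried = []


def fake_dns(name):
    queried.append(name)  # record every name that would have gone out as a DNS query
    return []


print(resolve("localhost", {}, fake_dns))  # ['::1', '127.0.0.1']
print(queried)                              # []
```

The point of the ordering is that “localhost” never produces a query, even when the hosts file lacks the entry.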

Oh, and as a bonus, there’s even an additional hack mentioned in the getaddrinfo docs: on Windows Server 2003 and later, if the pNodeName parameter points to a string equal to “..localmachine”, all registered addresses on the local computer are returned.

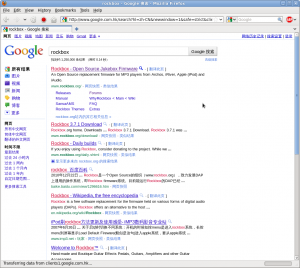

Today I am Chinese

I’m going about my merry life and I use google every day.

Today Google decided I’m in China, so it redirects me to google.com.hk and shows me all text in Chinese. It’s yet another proof of how silly it is to use the IP address to figure out location (or, even worse, to guess language based on IP address).

I haven’t changed anything locally, but it seems Google has updated (broken) their database somehow.

Just to be perfectly sure my browser isn’t playing any tricks behind my back, I captured the headers sent in the HTTP request, and there’s nothing notable:

    GET /complete/search?output=firefox&client=firefox&hl=en-US&q=rockbox HTTP/1.1
    Host: suggestqueries.google.com
    User-Agent: Mozilla/5.0 (X11; U; Linux x86_64; en-US; rv:1.9.2.13) Gecko/20101209 Fedora/3.6.13-1.fc13 Firefox/3.6.13
    Accept: text/html,application/xhtml+xml,application/xml;q=0.9,*/*;q=0.8
    Accept-Language: en-us,en;q=0.5
    Accept-Encoding: gzip,deflate
    Accept-Charset: ISO-8859-1,utf-8;q=0.7,*;q=0.7
    Keep-Alive: 115
    Connection: keep-alive
    Cookie: PREF=ID=dc410 [truncated]
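The request carries `Accept-Language: en-us,en;q=0.5`, so the browser states outright which languages it wants, no IP guessing needed. A minimal Python sketch of ranking those language tags by their `q` weights, as the HTTP specification describes for this header; `preferred_languages` is a hypothetical helper, and it skips finer points such as matching the `*` wildcard.

```python
def preferred_languages(header: str) -> list[str]:
    """Order the language tags in an Accept-Language value by q-value."""
    ranked = []
    for position, item in enumerate(header.split(",")):
        tag, *params = [part.strip() for part in item.split(";")]
        if not tag:
            continue  # empty element, e.g. from an empty header
        quality = 1.0  # the default weight when no q parameter is given
        for param in params:
            key, _, value = param.partition("=")
            if key.strip().lower() == "q":
                try:
                    quality = float(value)
                except ValueError:
                    quality = 0.0  # treat a malformed weight as "not acceptable"
        if quality > 0:  # q=0 means the language is not acceptable
            # Sort by descending weight; earlier position breaks ties.
            ranked.append((-quality, position, tag.lower()))
    return [tag for _, _, tag in sorted(ranked)]


print(preferred_languages("en-us,en;q=0.5"))  # ['en-us', 'en']
```

A server honoring this header would presumably keep answering in English, whatever the client's IP address suggests.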

Luckily, I know about the URL “google.com/ncr” (No Country Redirect) so I can still use it, but not through my browser’s search box…

Fosdem 2011: my libcurl talk on video

Kai Engert was good enough to capture all the talks in the security devroom at Fosdem 2011, and while I’m seeding the full torrent I’ve made my own talk available as a direct download from here:

Fosdem 2011: security-room at 14:15 by Daniel Stenberg

The file is about 107 MB, 640×480 resolution and roughly 26 minutes of playing time, in WebM format.

libcurl, seven SSL libs and one SSH lib

I did a talk with this title at Fosdem today. The room had only 48 seats and it was completely packed, with people standing wherever they could around those seated.

The English slides from my talk are below. It was also recorded on video, so I hope to post it once it becomes available online.

News flash! Tech terms used almost correctly!

Ok, The Social Network isn’t a new movie by any means at this point, but I happened to see it the other day. I’ll leave aside the story itself and whether or not it portrayed the facts correctly.

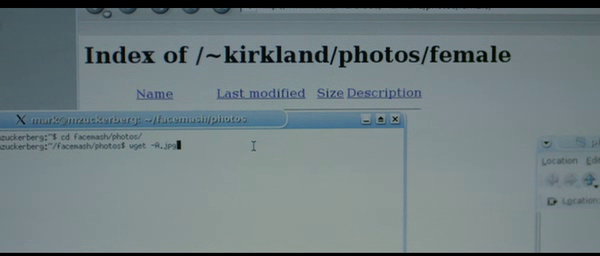

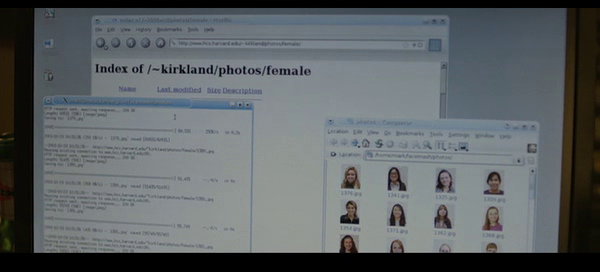

But I did spot several technical terms, at least basic ones, used amazingly correctly near the beginning! The movie character Mark actually used wget to download images (at about 10:05 into the movie), and as you can see in my first screenshot, the initial keystrokes we get to see on the command line actually resemble a correct wget command line. I’m sorry I couldn’t get any higher-quality screenshots, but I figure the point is still the same!

After invoking wget, he gets lots of pictures downloaded, as explained in the movie, and what do you know, the screen output actually looks like it could’ve come from a wget run that downloaded a couple of files:

He also mentioned the terms ‘Apache’, ‘emacs’ and ‘perl scripts’ in complete and correct sentences.

Where is the world heading?!

Update: Hrvoje Niksic, the founder of wget, helped out with some additional observations:

The options looked right to me, something like -r -A.jpg …

I was wondering about the historical accuracy of the progress bar, but it checks out. The movie takes place about a year and a half after the release of Wget 1.8, which added the feature. The department that takes care of these things did a good job. 🙂

Cookies and Websockets and HTTP headers

So yesterday we held a little HTTP-related event in Stockholm, arranged by OWASP Sweden. We talked a bit about cookies, websockets and recent HTTP headers.

Below are all the slides from the presentations given by me, Martin Holst Swende and John Wilander. (The entire event was held in Swedish.)

Martin Holst Swende’s talk:

John Wilander’s slides from his talk are here:

Foss-sthlm number five!

In the series of Free Software and Open Source talks and seminars we’ve been doing in the Foss-sthlm community, we are about to hold the fifth meetup on February 24, 2011 in Kista, Stockholm, Sweden. This time we will offer six talks by six different speakers, all skilled and knowledgeable in their respective topics.

The event will be held entirely in Swedish, and the planned talks are as follows:

- Foss in developing nations – Pernilla Näsfors

- Open Source Framework Agreements in the Swedish Government – Daniel Melin

- From telecommunications to computer networks – security for real-time communications over IP – Olle E Johansson

- Maintaining – how to manage your FOSS project – Daniel Stenberg (yes, that’s me)

- Peek in HTTP and HTTP traffic – Henrik Nordström

- Cease and desist, stories from threat mails received in the Rockbox project – Björn Stenberg

As usual, admission is free, and our sponsor (CAG this time) will provide something to eat and drink. After all the scheduled talks (around 20:00) we’ll continue the evening at a pub somewhere and discuss open source and free software over a beer or two.

See you there!

Rockbox on Maemo

Thomas Jarosch has been quite busy working on the Rockbox port for Maemo, which is a direct result of the previous work on making it possible to run Rockbox as an app on top of operating systems. It is still early and there are things missing, but it seems to be approaching usable really fast.

The work on the app for Android has also been progressing over time and even though it is still not available to download from the Android Market, the apk is updated regularly and pretty functional.

Back in the printing game

After my printer died, I immediately ordered a new one online, and not long afterwards I could pick it up from my local post office. Since I use both the scanner and the printer features quite a lot, I went with another “all-in-one” model, and I chose HP again, basically because I’ve been happy with how my previous one worked (before its death). “HP Officejet Pro 8500 A910” seems to be the full name. And yeah, it really is as black as the picture here shows it.

This model is less “photo-focused” than my previous one, but I never print my own photos so that’s no loss. What did annoy me, however, is that this model uses 4 ink cartridges instead of the 6 in my previous one, and of a completely different design, so I can’t even reuse my half-full ink cartridges from the corpse!

My new printer has some fancy features. One of them is that I can give it an email address and then print on it by sending email to that address. The address is a really long one with lots of seemingly random letters in the hp.com domain, and I can set up a whitelist of people (From: addresses) who are allowed to print on it via email.
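How HP implements that whitelist isn’t something I know, but the basic idea can be sketched: extract the address from the From: header and compare it against the allowed list. A minimal Python sketch using the standard `email` package; `may_print` and the example addresses are hypothetical.

```python
from email import message_from_string
from email.utils import parseaddr


def may_print(raw_message: str, allowed: set[str]) -> bool:
    """Accept a print job only if its From: address is on the whitelist."""
    message = message_from_string(raw_message)
    _, address = parseaddr(message.get("From", ""))  # "Name <a@b>" -> "a@b"
    # Compare case-insensitively; a missing or empty From: is never allowed.
    return bool(address) and address.lower() in {a.lower() for a in allowed}


allowed = {"daniel@example.com"}
job = (
    "From: Daniel <Daniel@Example.com>\n"
    "To: printer@example.org\n"
    "Subject: invoice\n"
    "\n"
    "please print\n"
)
print(may_print(job, allowed))  # True
```

Note that a From: header is trivially forged, so a check like this works as a convenience filter rather than real access control.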

It also has full internet access of its own, so it could fetch a firmware upgrade file and install it all by itself without the use of a computer. (Which made me wonder if they use libcurl, but I realize there’s no way for me to tell and of course there are many alternatives they might use.)

Driver-wise, it seems to use a completely different set of drivers on Windows (hopefully these won’t uninstall themselves), and on Linux I could install it fine for printing, but xsane just won’t find it for scanning. I intend to try the printer’s web service for scanning instead; hopefully that will be roughly equivalent for my limited use, as I mostly scan documents, bills and invoices for my work.