On September 10th, I sent out the invite to the foss-sthlm community for an embedded hacking event just before lunch. In just four hours, the 40 available tickets had been claimed and the waiting list started to get filled up as well… I later increased the amount to 46, we had some cancellations and I handed out more tickets and we had 46 people signed up at the day of the event (I believe 3 of these didn’t show up). At the day the event started, we still had another 20 people in the waiting list with hopes of getting a spot!

On September 10th, I sent out the invite to the foss-sthlm community for an embedded hacking event just before lunch. In just four hours, the 40 available tickets had been claimed and the waiting list started to get filled up as well… I later increased the amount to 46, we had some cancellations and I handed out more tickets and we had 46 people signed up at the day of the event (I believe 3 of these didn’t show up). At the day the event started, we still had another 20 people in the waiting list with hopes of getting a spot!

(All photos in this post are scaled down versions, click the picture to see a slightly higher resolution version!)

In Enea we had found an excellent sponsor for this event. They provided the place, the food, the raspberry pis, the coffe, the tshirts, the infrastructure and everything else that had to be there to make it an awesome day.

We started off the event at 10:00 on October 20 in the Enea offices in Kista, Stockholm Sweden. People dropped in one by one and were handed their welcome present containing a raspberry pi board, a 2GB SD card and a USB-to-serial cable to interface/power the board with. People then found their seats in the room.

We started off the event at 10:00 on October 20 in the Enea offices in Kista, Stockholm Sweden. People dropped in one by one and were handed their welcome present containing a raspberry pi board, a 2GB SD card and a USB-to-serial cable to interface/power the board with. People then found their seats in the room.

There were fruit, candy, water and coffee to start off and keep the mood high. We experienced some initial wifi and internet access problems but luckily we had no less than two dedicated Enea IT support people present and they could swiftly fix the little hiccups that occurred.

Once everyone seemed to have landed, I welcomed everyone and just gave a short overview of what to expect from the day, where the toilets are and so on.

In order to try to please everyone who couldn’t be with us at this event, due to plans or due to simply not having got one of the attractive 40 “tickets”,  Enea helped us arrange a video camera which we used during the entire day to film all talks and the contest. I can’t promise any delivery time for them but I’ll work on getting them made public as soon as possible. I’ll make a separate blog post when there’s something to see. (All talks were in Swedish!)

Enea helped us arrange a video camera which we used during the entire day to film all talks and the contest. I can’t promise any delivery time for them but I’ll work on getting them made public as soon as possible. I’ll make a separate blog post when there’s something to see. (All talks were in Swedish!)

At 11:30 I started off the day for real by holding the first presentation. We used one of the conference rooms for this, just next to the big room where everyone say hacking. This day we had removed all tables and only had chairs in the room movie theater style and it turned out we could fit just about all attendees in the room this way. I think that was good as I think almost everyone sat down to hear and see me:

Open Source in Embedded Systems

I did a rather non-technical talk about a couple of trends in the embedded operating systems market and how I see the upcoming future and then some additional numbers etc. The full presentation (with most of the text in Swedish) can be found on slideshare.

I did a rather non-technical talk about a couple of trends in the embedded operating systems market and how I see the upcoming future and then some additional numbers etc. The full presentation (with most of the text in Swedish) can be found on slideshare.

I got good questions and I think it turned out an interesting discussion on how things run and work these days.

After my talk (which I of course did longer than planned) we served lunch. Three different sallads, bread and stuff were brought out. Several people approached me to say how they appreciated the food so I must say that Enea managed really well on that account too!

Development and trends in multicore CPUs

Jonas Svennebring from Freescale was up next and talked about current multicore CPU development trends and what the challenges are for the manufacturers are today. It was a very good and very technical talk and he topped it off by showing off his board with T4240 running, Freecale’s latest flagship chip that is just now about to become available for companies outside of Freescale.

On this photo on the left you see the power supply in the foreground and the ATX board with a huge fan and cooler on top of the actual T4240 chip.

On this photo on the left you see the power supply in the foreground and the ATX board with a huge fan and cooler on top of the actual T4240 chip.

T4240 is claimed to have a new world record in coremark performance, features 12 hyper-threaded ppc cores in up to 1.8GHz.

There were some good questions to Jonas and he delivered good and well thought out answers. Then people walked out in the big room again to continue getting some actual hacking done.

We then took the opportunity to hand out the very nice-looking tshirts to all attendees, again kindly done so by Enea.

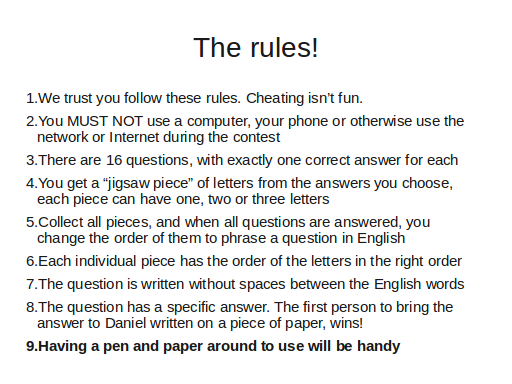

The Contest

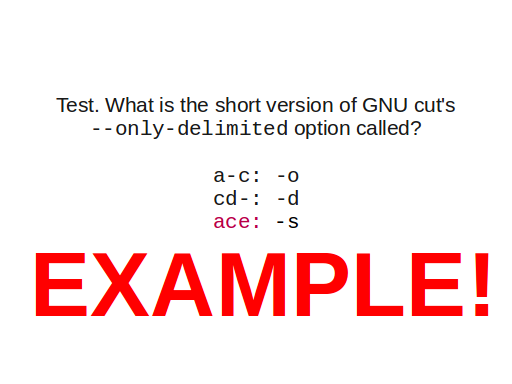

The next interruption was the contest. Designed entirely by me to allow everyone to participate, even my friends and Enea employees etc. On the photo on the right you can see I now wear the tshirt of the day.

The contest was hard. I knew it was hard as I wanted it really make it a race that was only for the ones who really get embedded linux and have their brain laid out properly!

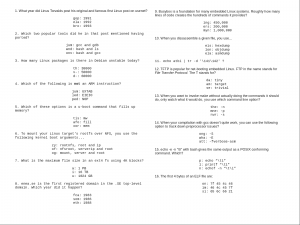

I posted the entire contest in separate blog post, but the gist of it was that I presented 16 questions with 3 answer alternatives. Each alternative had a sequence of letters. So after 16 questions you had 16 letter sequences you had to put in the right order to get a 17th question. The first one to give a correct answer to that 17th question would win.

A whole bunch of people gave up immediately but there was a core group who really fought hard, long and bravely and in the end we got a winner. The winner had paired up so the bottle of champagne went jointly to Klas and Jonas. It was a very close call as others were within seconds of figuring it out too.

I think the competition was harder than I thought. Possibly a little too hard…

Your own code on others’ hardware

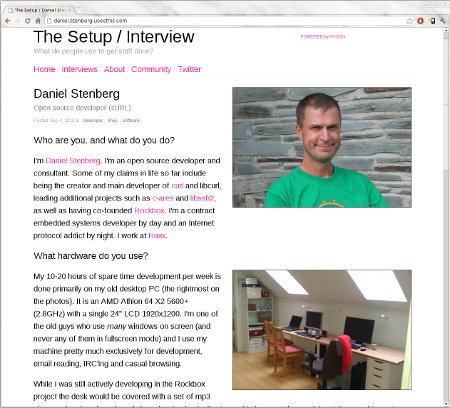

Linus from Haxx (who shouldn’t be much of a stranger to readers of this blog) then gave some insights on how he reversed engineered mp3 players for the Rockbox project. Reverse engineering is a subject that attracts many people and I believe it has some sort of magic aura around it. Again many good questions and interested people in the room.

Linus from Haxx (who shouldn’t be much of a stranger to readers of this blog) then gave some insights on how he reversed engineered mp3 players for the Rockbox project. Reverse engineering is a subject that attracts many people and I believe it has some sort of magic aura around it. Again many good questions and interested people in the room.

On the photo on the right you can see Linus’ stripped down hardware which he explained he had ripped off all components from in order to properly hunt down how things were connected on the PCB.

On the photo on the right you can see Linus’ stripped down hardware which he explained he had ripped off all components from in order to properly hunt down how things were connected on the PCB.

Coffee

We did not keep the time schedule so we had to get the coffee break in after Linus, and there were buns and so on.

Yocto

Björn from Haxx then educated the room on the Yocto Project. What it is, why it is, who it is and a little about how it is designed and how it works etc.

I think perhaps people started to get a little soft in their brain as we had now blasted through all but one of the talks, and as a speaker finale we had Henrik…

u-boot on Allwinner A10

Henrik Nordström did a walk-through explaining some u-boot basics and then explained what he had done for the Allwinner targets and related info.

I believe the talks were kind of the glue that made people stick around. Once Henrik was done and there was no more talks planned for the day, it was obvious that it was sort of the signal for people to start calling it a day even though there was still over one hour left until the official end time (20:00).

Of course I don’t blame anyone for that. I had hardly had any time myself to sit down or do anything relaxing during the day so I was kind of exhausted myself…

Summary

I got a lot of very positive comments from people when they left the facilities with big smiles on their faces, asking for more of these sorts of events in the future.

I am very happy with the overly positive response, with the massive interest from our community to come to such an event and again, Enea was an awesome sponsor for this.

I am very happy with the overly positive response, with the massive interest from our community to come to such an event and again, Enea was an awesome sponsor for this.

I didn’t get anything done on the raspberry pi during this day. As a matter of fact I never even got around to booting my board, but I figure that wasn’t a top priority for me this day.

I didn’t get anything done on the raspberry pi during this day. As a matter of fact I never even got around to booting my board, but I figure that wasn’t a top priority for me this day.

The crowd size felt really perfect for these facilities and 40 something also still keeps the spirit of familiarity and it doesn’t feel like a “big” event or so.

Will I work on making another event similar to this again? Sure. It might not happen immediately, but I don’t see why it can’t be made again under similar circumstances.

Credits

All photos on this page were taken by me, Björn Stenberg, Kjell Ericson, Mats Lidell and Mia Åkerström.

All photos on this page were taken by me, Björn Stenberg, Kjell Ericson, Mats Lidell and Mia Åkerström.

Thanks to Jonas, Björn, Linus and Henrik for awesome talks.

Thanks to Enea for sponsoring this event, and Mia then in particular for being a good organizer.

My take away from this contest is that it was harder than I anticipated and took a longer time to crack than I thought. I gave away a few additional clues and hints as the time went by, but in the end I believe there were several persons who were very close to breaking it at almost the same time. In the end, Klas and Jonas presented the correct answer first and won the bottle of Champagne. I’m sure you appreciate their efforts after having tried this yourself!

My take away from this contest is that it was harder than I anticipated and took a longer time to crack than I thought. I gave away a few additional clues and hints as the time went by, but in the end I believe there were several persons who were very close to breaking it at almost the same time. In the end, Klas and Jonas presented the correct answer first and won the bottle of Champagne. I’m sure you appreciate their efforts after having tried this yourself!

I did a rather non-technical talk about a couple of trends in the embedded operating systems market and how I see the upcoming future and then some additional numbers etc. The full presentation (with most of the text in Swedish) can be found on

I did a rather non-technical talk about a couple of trends in the embedded operating systems market and how I see the upcoming future and then some additional numbers etc. The full presentation (with most of the text in Swedish) can be found on

I got

I got  Back in 2002 I realized that having libcurl not do SSL server verification by default basically meant that everyone writing

Back in 2002 I realized that having libcurl not do SSL server verification by default basically meant that everyone writing

Much thanks to autoconf and automake we have an established more or less standardized way to build and install tools, libraries and other software. We build them with ‘make’ and we install them with ‘

Much thanks to autoconf and automake we have an established more or less standardized way to build and install tools, libraries and other software. We build them with ‘make’ and we install them with ‘ Introducing ptest

Introducing ptest