(I am not a lawyer, this is not legal advice and these are not legal analyses, just my personal observations and ramblings. Please correct me where I’m wrong or add info if you have any!)

At 3:45 pm on March 18th 2011, the company Content Delivery Solutions LLC filed a complaint in a court in Texas, USA. The defendants are several bigwigs and the list includes several big and known names of the Internet:

- Akamai

- AOL

- AT&T

- CD Networks

- Globalscape

- Limelight Networks

- Peer 1 Network

- Research In Motion

- Savvis

- Verizon

- Yahoo!

The complaint was later amended with an additional patent (filed on April 18th), making it list three patents that these companies are claimed to violate (I can’t find the amended version online though). Two of the patents ( 6,393,471 and 6,058,418) are for marketing data and how to use client info to present ads basically. The third is about file transfer resumes.

I was contacted by a person involved in the case at one of the defendants’. This unspecified company makes one or more products that use “curl“. I don’t actually know if they use the command line tool or the library – but I figure that’s not too important here. curl gets all its superpowers from libcurl anyway.

This Patent Troll thus basically claims that curl violates a patent on resumed file transfers!

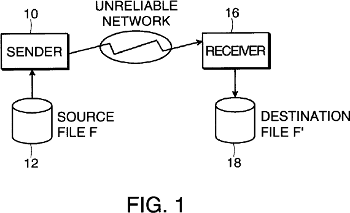

The patent in question that would be one that curl would violate is the US patent 6,098,180 which basically claims to protect this idea:

A system is provided for the safe transfer of large data files over an unreliable network link in which the connection can be interrupted for a long period of time.

The patent describes several ways in how it may detect how it should continue the transfer from such a break. As curl only does transfer resumes based on file name and an offset, as told by the user/application, that could be the only method that they can say curl would violate of their patent.

The patent goes into detail in how a client first sends a “signature” and after an interruption when the file transfer is about to continue, the client would ask the server about details of what to send in the continuation. With a very vivid imagination, that could possibly equal the response to a FTP SIZE command or the Content-Length: response in a HTTP GET or HEAD request.

A more normal reader would rather say that no modern file transfer protocol works as described in that patent and we should go with “defendant is not infringing, move on nothing to see here”.

But for the sake of the argument, let’s pretend that the patent actually describes a method of file transfer resuming that curl uses.

The ‘180 (it is referred to with that name within the court documents) patent was filed at February 18th 1997 (and issued on August 1, 2000). Apparently we need to find prior art that was around no later than February 17th 1996, that is to say one year before the filing of the stupid thing. (This I’ve been told, I had no idea it could work like this and it seems shockingly weird to me.)

What existing tools and protocols did resumed transfers in February 1996 based on a file name and a file offset?

Lots!

Thank you all friends for the pointers and references you’ve brought to me.

- The FTP spec RFC 959 was published in October 1985. FTP has a REST command that tells at what offset to “restart” the transfer at. This was being used by FTP clients long before 1996, and an example is the known Kermit FTP client that did offset-based file resumed transfer in 1995.

- The HTTP header Range: introduces this kind of offset-based resumed transfer, although with a slightly fancier twist. The Range: header was discussed before the magic date, as also can be seen on the internet already in this old mailing list post from December 1995.

- One of the protocols from the old days that those of us who used modems and BBSes in the old days remember is zmodem. Zmodem was developed in 1986 and there’s this zmodem spec from 1988 describing how to do file transfer resumes.

- A slightly more modern protocol that I’ve unfortunately found no history for before our cut-off date is rsync, as I could only find the release mail for rsync 1.0 from June 1996. Still long before the patent was filed obviously, and also clearly showing that the one year margin is silly as for all we know they could’ve come up with the patent idea after reading the rsync releases notes and still rsync can’t be counted as prior art.

- Someone suggested GetRight as a client doing this, but GetRight wasn’t released in 1.0 until Febrary 1997 so unfortunately that didn’t help our case even if it seems to have done it at the time.

- curl itself does not pre-date the patent filing. curl was first released in March 1998, and the predecessor was started around summer-time 1997. I don’t have any remaining proofs of that, and it still wasn’t before “the date” so I don’t think it matters much now.

At the time of this writing I don’t know where this will end up or what’s going to happen. Time will tell.

This Software patent obviously is a concern mostly to US-based companies and those selling products in the US. I am neither a US citizen nor do I have or run any companies based in the US. However, since curl and libcurl are widely used products that are being used by several hundred companies already, I want to help bring out as much light as possible onto this problem.

The patent itself is of course utterly stupid and silly and it should never have been accepted as it describes trivially thought out ideas and concepts that have been thought of and implemented already decades before this patent was filed or granted although I claim that the exact way explained in the patent is not frequently used. Possibly the protocol using a method that is closed to the description of the patent is zmodem.

I guess I don’t have to mention what I think about software patents.

I’m convinced that most or all download tools and browsers these days know how to resume a previously interrupted transfer this way. Why wouldn’t these guys also approach one of the big guys (with thick wallets) who also use this procedure? Surely we can think of a few additional major players with file tools that can resume file transfers and who weren’t targeted in this suit!

I don’t know why. Clearly they’ve not backed down from attacking some of the biggest tech and software companies.

(Illustration from the ‘180 patent.)