Let me tell you a story about how Windows users are deleting files from their installation and as a consequence end up in tears.

Background

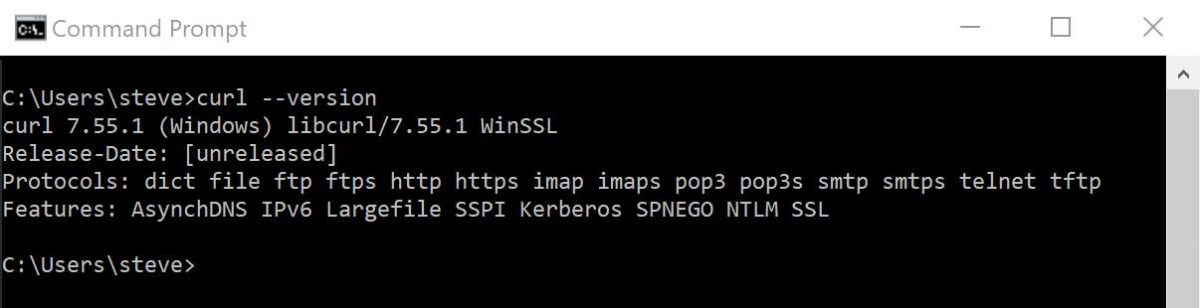

The real and actual curl tool has been shipped as part of Windows 10 and Windows 11 for many years already. It is called curl.exe and is located in the System32 directory.

Microsoft ships this bundled with its Operating system. They get the code from the curl project but Microsoft builds, tests, ships and are in all ways responsible for their operating system.

NVD inflation

As I have blogged about separately earlier, the next brick in the creation of this story is the fact that National Vulnerability Database deliberately inflates the severity levels of security flaws in its vast database. They believe scaremongering serves their audience.

In one particular case, CVE-2022-43552 was reported by the curl project in December 2022. It is a use-after-free flaw that we determined to be severity low and not higher mostly because of the very limited time window you need to make something happen for it to be exploited or abused. NVD set it to medium which admittedly was just one notch higher (this time).

This is not helpful.

“Security scanners”

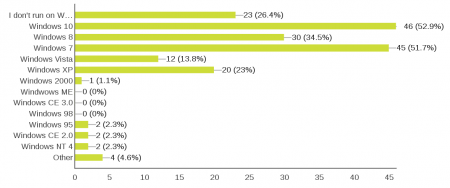

Lots of Windows users everywhere runs security scanners on their systems with regular intervals in order to verify that their systems are fine. At some point after December 21, 2022, some of these scanners started to detect installations of curl that included the above mentioned CVE. Nessus apparently started this on February 23.

This is not helpful.

Panic

Lots of Windows users everywhere then started to panic when these security applications warned them about their vulnerable curl.exe. Many Windows users are even contractually “forced” to fix (all) such security warnings within a certain time period or risk bad consequences and penalties.

How do you fix this?

I have been asked numerous times about how to fix this problem. I have stressed at every opportunity that it is a horrible idea to remove the system curl or to replace it with another executable. It is very easy to download a fresh curl install for Windows from the curl site – but we still strongly discourage everyone from replacing system files.

But of course, far from everyone asked us. A seemingly large enough crowd has proceeded and done exactly what we would stress they should not: they deleted or replaced their C:\Windows\System32\curl.exe.

The real fix is of course to let Microsoft ship an update and make sure to update then. The exact update that upgrades curl to version 8.0.1 is called KB5025221 and shipped on April 11. (And yes, this is the first time you get the very latest curl release shipped in a Windows update)

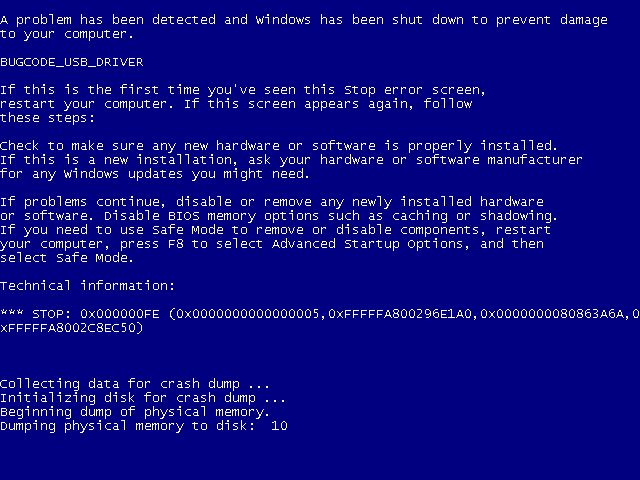

The people who deleted or replaced the curl executable noticed that they cannot upgrade because the Windows update procedure detects that the Windows install has been tampered with and it refuses to continue.

I do not know how to restore this to a state that Windows update is happy with. Presumably if you bring back curl.exe to the exact state from before it could work, but I do not know exactly what tricks people have tested and ruled out.

Bad advice

I have been pointed to responses on the Microsoft site answers.microsoft.com done by “helpful volunteers” that specifically recommend removing the curl.exe executable as a fix.

This is not helpful.

I don’t want to help spreading that idea so I will not link to any such post. I have reported this to Microsoft contacts and I hope they can maybe edit or comment those posts soon.

We are not responsible

I just want to emphasize that if you install and run Windows, your friendly provider is Microsoft. You need to contact Microsoft for support and help with Windows related issues. The curl.exe you have in System32 is only provided indirectly by the curl project and we cannot fix this problem for you. We in fact fixed the problem in the source code already back in December 2022.

If you have removed curl.exe or otherwise tampered with your Windows installation, the curl project cannot help you.