For us who don’t really like Java and who get annoyed by the fanboys who claim Java is as fast as C, Shawn O. Pearce’s recent write-up on the git mailing list on why the Java implementation (JGit) doesn’t run nearly as fast as the C implementation is minty fresh and with lots of details…

Mine is More than Yours

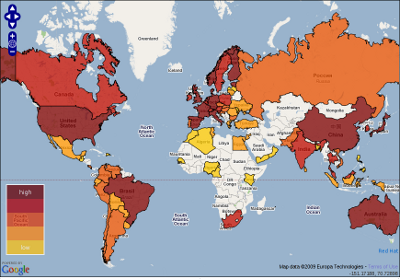

So Red Hat created this very interesting Open Source Activity Map and made it available. It rates 75 countries’ open sourceness based on “Government, Industry, and Community” and how good the countries are at open source. Sort of. The numbers are based on research done by Georgia Institute of Technology.

What does it give?

I’m a Swede who lives in Sweden and I can see that we’re not generally that much into open source, but we’re also a very small population compared to lots of other countries. But no, I cannot see how Finland or Norway are any further than we. What also puzzles me is how they even rate China before Sweden. The numbers that are provided don’t appear to take population into account, or even participation level in open source projects.

Of course I realize I have but one view, and my view is deeply skewed by the projects I work in and by the people I meet. I have never even tried to compare different countries’ governments against each other with regard to open source, so I figure I can’t make a good comment on these results. What is weird is that there are almost no participants in open source projects from China (and several other Asian countries), and I’ve always thought that’s primarily due to language barriers. Is this map then suggesting that those missing people make up for it in projects within their own language regions?

Or isn’t it so that this map is more a map comparing legislation and governments against each other, and not so much what actual people from these countries do in various projects? I would otherwise assume that we people in the western world have a small benefit from being close to the English language. Not to mention how those speaking native English can easily jump into most projects without thinking twice about language problems.

I think however that this is a very good idea. It brings issues to the open. What makes a country good for open source? What’s needed to make my country better?

bittorrent vs HTTP

A while ago I put together my document FTP vs HTTP that compares data transfers done using those two protocols. Similarities and differences.

Today I’m taking the next step in this little series and I offer you Bittorrent vs HTTP! This document discusses differences in areas such as:

- Transfer Speed

- Streaming

- Uplink

- Firewalls

- Redundancy

- Server Load

- Encryption

- Protocol Standards

As usual, I’m all ears for your valuable input and help on making it more accurate and more detailed than I manage to myself. Point out my mistakes, my weird use of words or whatever. Post a comment here or email me.

HTTP Status Report

Mark Nottingham held a very interesting one hour talk on the status of HTTP and the work on HTTPbis at a QCon conference recently, and luckily for us HTTP geeks there’s this great video/presentation from that.


curl is mentioned at least twice in the slides. Unfortunately the second mention gets a fact wrong: it says curl uses “Pragma: no-cache”, which isn’t true anymore. curl used to do that, but we stopped doing it a while ago.

I’m a subscriber to the httpbis mailing list and a casual contributor, but nonetheless his summary and overview of the state was refreshing as I’ve not been able to keep up with all the details and I haven’t been tracking that working group from its start either.

Rockbox gsoc2009

So finally it went public that this year Rockbox will be mentoring five students to reach their individual goals and get their projects turned into realities.

The projects are new codecs, one is a new port, one is USB HID work and finally there’s this “make Rockbox an instrument” project.

Personally I’m the admin for the Rockbox gsoc effort for the third year, and this year I’m also co-mentoring a student (Robert Keevil) in his project to bring Rockbox to the Sansa View.

Let’s make this a great gsoc year!

Not social enough

There’s this concept that’s very popular these days. Social networking web sites. I’ve always been intrigued by the six degrees of separation idea so I joined Facebook and I’ve given it a try. Result: yawn.

Of course I realize everything depends on who you are, how your social network works and so on, but for me the Facebook experiment has only proven what I already suspected: I’m not “social enough” to care about all my friends’ teeny weeny little issues and expressions. I don’t have many friends added (35 at this particular moment) but already at this low number I get terribly uncomfortable after reading too much about personal goings-on. And I’m not interested in everyone’s top-lists, what IKEA furniture they would be or which of the characters in the Muppet Show they resemble the most. I’m not going to use Facebook much until something changes.

Twitter is another one of the more trendy sites and services. This is very chaotic and most of the stuff posted there is utter crap. But there are some interesting people to follow and I do my best at following the tradition and contribute with my junk: My Twitter feed. More seriously I kind of use and view twitter as chatter around the coffee machine at a virtual office. You can select who to listen to. You can say whatever you feel like and the ones who might care could be reading it… The good part – for me of course – being that I can stay all geeky and techy and avoid that facebookish stuff I don’t like. Oh, and if you’re a friend in this manner, do tell me so that I can follow you!

LinkedIn is different. Here’s a site with a different goal and perspective, and keeping in touch with people I’ve been involved with professionally is a totally different matter. This makes a lot of sense to me, and it’s actually proven to pay off, several times. I believe that being a contract developer also makes me value having a large network to reach out to, so that I keep getting myself interesting assignments on a regular basis! My LinkedIn page.

USB converter woes

USB to RS232 converters are just never sold with proper information about what chip is inside, and right now I want to know if the UART I’m working with perhaps doesn’t play nicely with my existing converter cable.

I have this XScale PXA270 on a board, and it has only one full-featured RS232 (FFUART) and I’m about to move things over to the lesser featured BTUART.

A theory is that my current USB converter that is based on a “Prolific PL2303” doesn’t play nicely on the serial port that isn’t a full RS232.

So I ran off and bought a new cable. I grabbed the only model I found in my local Kjell & Company store. It looks quite different from my existing one, but there’s no hint anywhere on the package or inside of it that says what chipset powers it.

After a quick drive back home (I’m working from home in this assignment), I plugged it in and I got to see this depressingly familiar dmesg output:

    usbcore: registered new interface driver usbserial
    usbserial: USB Serial support registered for generic
    usbcore: registered new interface driver usbserial_generic
    usbserial: USB Serial Driver core
    usbserial: USB Serial support registered for pl2303
    pl2303 2-2.4:1.0: pl2303 converter detected
    usb 2-2.4: pl2303 converter now attached to ttyUSB0
    usbcore: registered new interface driver pl2303
    pl2303: Prolific PL2303 USB to serial adaptor driver

So what now? I hate how (my) computers these days don’t have serial ports while the entire embedded world still very much uses them. I think I’ll go searching in my closet to see if I can find an old crap computer with a serial port to try.

Another theory is that the port simply is broken hw-wise on the dev board but that’s harder to check for me right now.

Update: it was (as usual) only my stupidity that prevented this from working. If I switch it over to the correct baud rate the USB converter does fine. But before I found that out, I did find a computer with a serial port and I did see it working on that too…

User data probably for sale

It’s time for a little “doomsday prophecy”.

Already seen happen

As was reported last year in Sweden, mobile operators here sell customer data (Swedish article) to companies who are willing to pay. Even though this might be illegal (Swedish article), all the major Swedish mobile phone operators do this. This second article mentions that the operators think this practice is allowed according to the contract every customer has signed, but that’s far from obvious in everybody else’s eyes and may in fact not be legal.

For the non-Swedes: one mobile phone user found himself surfing to a web site that would display his phone number embedded on the site! This was only possible due to the site buying this info from the operator.

While the focus on what data they sell has been on the phone number itself, and I do find that a pretty serious privacy breach in itself, there’s so much more that imaginative operators will very likely soon offer to companies who pay enough.

Legislations going the wrong way

There’s this EU “directive” from a few years back:

Directive 2006/24/EC of the European Parliament and of the Council of 15 March 2006 on the retention of data generated or processed in connection with the provision of publicly available electronic communications services or of public communications networks and amending Directive 2002/58/EC

It basically says that Internet operators must store information of users’ connections made on the net and keep them around for a certain period. Sweden hasn’t yet ratified this but I hear other EU member states already have it implemented…

(The US also has some similar legislation being suggested.)

It certainly doesn’t help us who believe in maintaining a level of privacy!

What soon could happen

It’s hardly a secret that operators run network supervision equipment on their customer networks and thus analyze and snoop on network data sent and received by each and every customer. They do this for network management reasons and to comply with legislation like what I mentioned above. (Disclaimer: I’ve worked and developed code for a client that makes and sells products for exactly this purpose.)

Anyway, it is thus easy for the operators to for example spot common URLs their users visit. They can spot what services (bittorrent, video sites, Internet radio, banks, porn etc) a user frequents. Given a particular company’s interest, it could certainly be easy to check for specific competitors in users’ visitor logs or whatever and sell that info.

If operators can sell the phone numbers of their individual users, what stops them from selling all this other info, given a proper stash of money from the ones who want to know? I’m convinced this will happen sooner or later, unless we get proper legislation that forbids the operators from doing this… In Sweden this sale of info is most likely to be done by the mobile network operators and not the regular Internet providers, simply because the mobile ones have this end user contract to lean on that they claim gives them this right. That same style of contract and terminology is not used for regular Internet subscriptions (I believe).

So here’s my suggestion for Think Geek to expand somewhat on their great shirt:

(yeah, I have one of those boring ones with only the first line on it…)

C Code Commandments

I’m an old school C programmer and I stay true to some of the older and commonly used rules present in many open source and similar projects. Since I sometimes rant about this to people, I thought I’d amuse my surroundings by stating them here for public use/ridicule. Of course heavily inspired by the great and superior The Ten Commandments for C Programmers. My commandments are not necessarily in any priority order.

Thy Code Shall Be Narrow

Only in very rare situations should code be allowed to be wider than 80 columns. I want my two or three windows next to each other horizontally and still see the code fine. Not to mention the occasional loading up in an editor in an 80-column terminal, and that it should be possible to print it nicely (for reviews etc). Wide code is also harder to read I think, quite similarly to how very wide texts in web pages etc aren’t kind to your eyes either.

Thou Shalt Not Use Long Symbol Names

To be able to keep the code easily readable by human eyes so that you quickly get an overview and understand things, you simply need to keep the function and variable names fairly short. Not to mention that the code gets harder to keep within 80 columns if you use ridiculously long names.

Comments Shall Be Plenty

Yes, this is something we know everyone says and few live up to. In statistical analyses of my own C code I usually reach around 25-27% comments and I’m usually happy with that amount. Comments should explain what is otherwise not obvious in the code.

No Hiding What’s Really Happening

I’m not a fan of overloaded operators or snazzy macros that do fancy stuff without it being noticeable in the code. It should be clear when reading the code what it does. That’s also one of the reasons you don’t catch me doing a lot of C++ work…

Thou Shalt Hunt Down and Kill Compiler Warnings

Compiler warnings may be significant and in some cases they are not. Either way, it is our duty to silence them at all times. Firstly because it is often simpler to fix the code to not warn than to figure out if the warning is indeed right or not, but perhaps primarily because it makes it harder to see new warnings appearing if the old ones have been left there.

Write Portable Code Unless Forced by Evil

You may first believe that your code will live on forever on this single platform with this single compiler, but soon, very soon, you will learn otherwise. Then you will cheer this rule as it makes you consider unaligned memory accesses, assumptions about the byte order of binary data and the size of your ‘long’ variable type.
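To make the byte order and alignment points a bit more concrete, here is a minimal sketch of how a 32-bit value in a binary buffer can be handled without assuming anything about the host. The helper names `read_be32` and `write_be32` are made up for this illustration, and it assumes a compiler that provides the C99 `<stdint.h>` header so that the integer size is fixed rather than left to whatever ‘long’ happens to be.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

/* Hypothetical helper: read a 32-bit big-endian value from any address.
   It goes byte by byte, so neither alignment nor the byte order of the
   host matters. */
uint32_t read_be32(const unsigned char *p)
{
  assert(p != NULL);
  return ((uint32_t)p[0] << 24) |
         ((uint32_t)p[1] << 16) |
         ((uint32_t)p[2] << 8) |
         (uint32_t)p[3];
}

/* Hypothetical helper: store a 32-bit value in big-endian order. */
void write_be32(unsigned char *p, uint32_t v)
{
  assert(p != NULL);
  p[0] = (unsigned char)((v >> 24) & 0xff);
  p[1] = (unsigned char)((v >> 16) & 0xff);
  p[2] = (unsigned char)((v >> 8) & 0xff);
  p[3] = (unsigned char)(v & 0xff);
}
```

The tempting shortcut is to cast the buffer pointer to an integer pointer and dereference it. That may crash on CPUs that don’t allow unaligned accesses and gives different results on little and big endian machines, which is exactly what this rule is meant to catch.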

Repeat Not, Use Functions

I see a lot of “copy and paste” programming in my daily life and I’ve learned that sooner or later such practices lead to sorrow. If you paste the same code in multiple places it not only makes it repetitive and boring to update when an API or something changes, more seriously it increases the risk that you address bugs in only one out of many places or that the fixes differ etc. It also makes the code larger and thus harder to follow and understand.

Thou Shalt Not Typedef Away Pointers

A really nasty habit to be seen in some source code is when people use typedefs to define their own types that are simply pointers to something. Like with ‘typedef struct whatever * whatever_t’. While I’m in general against excessive typedefing, I’m fine with typedefs in many cases but not when used to hide pointers to look like “ordinary” types. It makes code harder to follow.
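A small sketch of what this looks like in practice. The struct contents and the two function names are invented for the illustration; only the typedef itself is the one quoted above.

```c
#include <assert.h>
#include <stddef.h>

struct whatever {
  int count;
};

/* The habit argued against: the '*' is hidden inside the type name */
typedef struct whatever *whatever_t;

/* Reads as if it took a value, yet it modifies the caller's data */
void bump_hidden(whatever_t w)
{
  assert(w != NULL);
  w->count++;
}

/* Same function, but the pointer is visible right in the prototype */
void bump_visible(struct whatever *w)
{
  assert(w != NULL);
  w->count++;
}
```

Both functions do the same thing, but only the second prototype tells the reader that the argument can be NULL and that the caller’s struct may change. The hidden pointer also bites with const: ‘const whatever_t w’ declares a constant pointer, not a pointer to constant data.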

Defines, No Fixed Numbers

Code that relies on zero and non-zero can get away without this, but as soon as you start relying on more numbers in the code you must start using #defines or possibly enums to make them appear with names in the code. Using names is smarter than hardcoded numbers since you can avoid having to explain the number in a comment, and of course it’ll be easier to change the actual number in the code at a later point without it being a painful search-and-replace operation.
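As a rough illustration, here is one #define and one enum doing the job that bare numbers would otherwise do. All the names (`MAX_RETRIES`, `conn_state` and the two functions) are made up for this sketch and don’t come from any real project.

```c
#include <assert.h>
#include <stddef.h>

#define MAX_RETRIES 3 /* hypothetical limit, changed in one place only */

/* Hypothetical states, instead of sprinkling 0, 1, 2 and 3 around */
enum conn_state {
  CONN_IDLE,
  CONN_CONNECTING,
  CONN_DONE,
  CONN_FAILED
};

/* Returns non-zero if another attempt is allowed */
int should_retry(int attempts)
{
  assert(attempts >= 0);
  return attempts < MAX_RETRIES;
}

/* Maps a state to a printable name, handy for debug output */
const char *state_name(enum conn_state s)
{
  switch(s) {
  case CONN_IDLE:
    return "idle";
  case CONN_CONNECTING:
    return "connecting";
  case CONN_DONE:
    return "done";
  case CONN_FAILED:
    return "failed";
  }
  return "unknown";
}
```

Compare ‘if(attempts < 3)’ with ‘if(attempts < MAX_RETRIES)’: the second needs no comment, and raising the limit later means touching a single line.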

Blabbing on curl

It’s now been confirmed and set in stone that I will do a 20 minute talk (in Swedish!) on curl and libcurl on April 27th at an event arranged by Dataföreningen Stockholm’s open source network.

My guess is that if you’re interested and involved enough to read this here on my blog, you already know just about everything I can fill my short talk with…!