Welcome to this patch release of curl, shipped only 14 days after the previous version. We decided to cut the release cycle short because of several security vulnerabilities that were reported. See below for details. There are no new features added in this release.

It burns. Mostly in our egos.

Numbers

the 208th release

0 changes

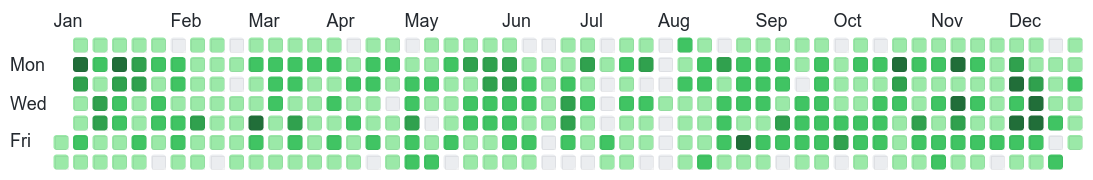

14 days (total: 8,818)

41 bug-fixes (total: 7,857)

65 commits (total: 28,573)

0 new public libcurl function (total: 88)

0 new curl_easy_setopt() option (total: 295)

0 new curl command line option (total: 247)

20 contributors, 6 new (total: 2,632)

13 authors, 3 new (total: 1,030)

6 security fixes (total: 121)

Bug Bounties total: 22,660 USD

Security

Axel Chong reported three issues, Harry Sintonen two and Florian Kohnhäuser one. An avalanche of security reports. Let’s have a look.

curl removes wrong file on error

CVE-2022-27778 describes how the brand new command line options --remove-on-error and --no-clobber, when used together, could make curl remove the wrong file: the very file that curl was told not to clobber.

cookie for trailing dot TLD

CVE-2022-27779 is the first of two issues this time that identified a problem with how curl has handled trailing dots since version 7.82.0. This flaw lets a site set a cookie for a TLD with a trailing dot, which curl might then send back to all sites under that TLD.

percent-encoded path separator in URL host

In CVE-2022-27780 the reporter figured out how to abuse curl's URL parser and its recently added decoding of percent-encoded host names.
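
To illustrate the class of problem, here is a minimal sketch of a host name decoder that refuses decoded bytes that have no business in a host name. It is not curl's actual parser or its fix: the decode_host() name, the buffer interface and the exact set of rejected bytes are all assumptions made for this example.

```c
#include <ctype.h>
#include <stddef.h>

/* Convert one hex digit to its value; the caller guarantees isxdigit(). */
static int hexval(char c)
{
  if(c >= '0' && c <= '9')
    return c - '0';
  return tolower((unsigned char)c) - 'a' + 10;
}

/*
 * Hypothetical helper, not taken from curl: percent-decode 'host' into
 * 'out' (of size 'outlen') and reject the result if a decoded byte is a
 * control character, a space or a URL delimiter.
 * Returns 0 on success and -1 on rejection.
 */
int decode_host(const char *host, char *out, size_t outlen)
{
  size_t o = 0;
  while(*host) {
    unsigned char c = (unsigned char)*host;
    if(c == '%') {
      /* a percent sign must be followed by exactly two hex digits */
      if(!isxdigit((unsigned char)host[1]) ||
         !isxdigit((unsigned char)host[2]))
        return -1;
      c = (unsigned char)(hexval(host[1]) * 16 + hexval(host[2]));
      host += 3;
    }
    else
      host++;
    /* the check runs on the decoded byte, so "%2F" is caught like "/" */
    if(c <= ' ' || c == 0x7f || c == '/' || c == '\\' ||
       c == '?' || c == '#')
      return -1;
    if(o + 1 >= outlen)
      return -1; /* output buffer too small */
    out[o++] = (char)c;
  }
  out[o] = '\0';
  return 0;
}
```

With this sketch, a host such as ex%61mple.com decodes to example.com, while an illustrative input like example.com%2F127.0.0.1 is rejected because the decoded byte is a path separator.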

CERTINFO never-ending busy-loop

CVE-2022-27781 details how a malicious server can trick curl built with NSS to get stuck in a busy-loop when returning a carefully crafted certificate.

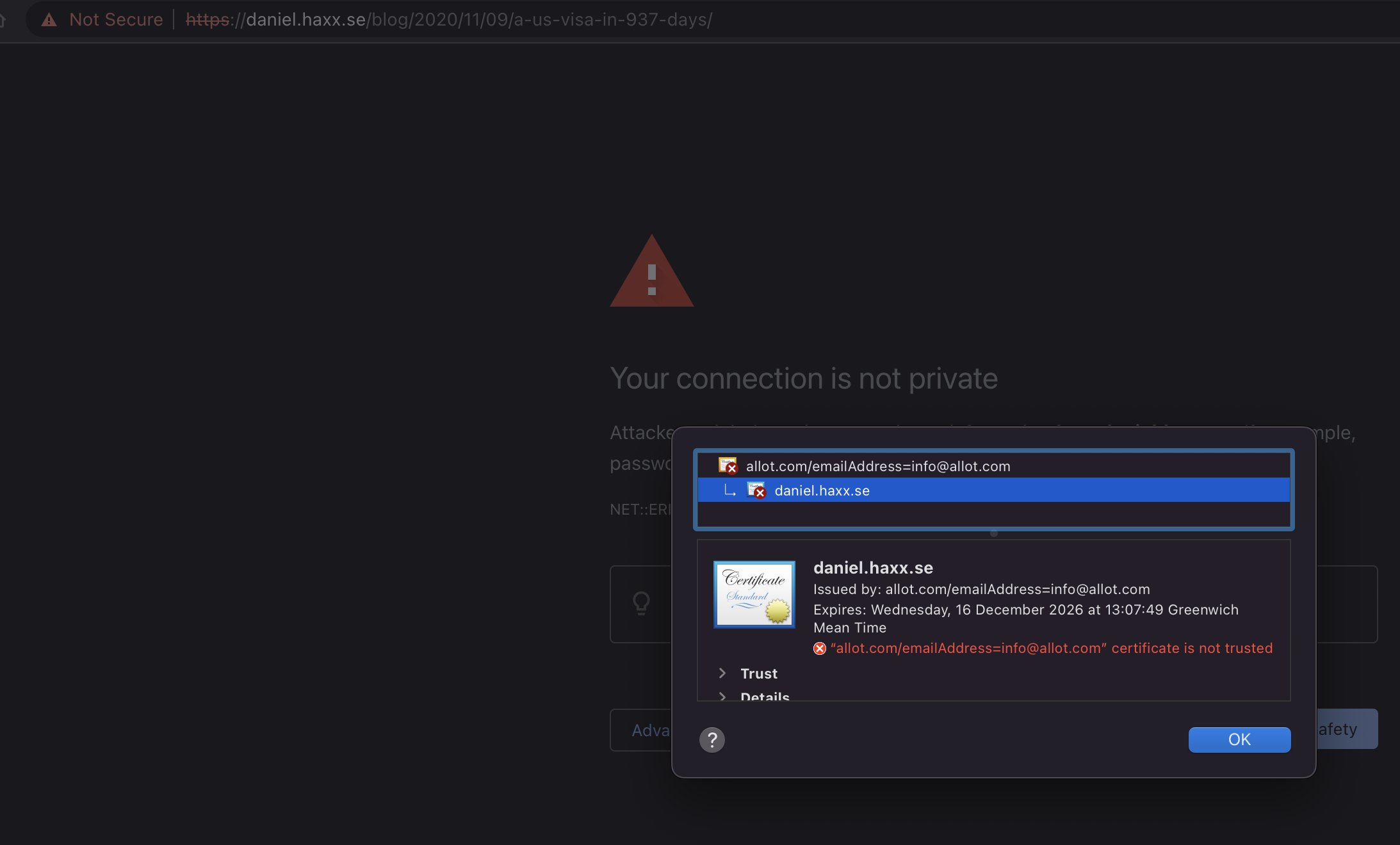

TLS and SSH connection too eager reuse

CVE-2022-27782 identifies a set of TLS and SSH config parameters that curl did not consider when reusing a connection, which could end up with an application getting a reused connection for a transfer where it really did not expect one.
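
The general idea behind a stricter reuse check can be sketched like this. The struct, its field names and can_reuse() are hypothetical and do not mirror libcurl's internals; the point is only that every security-relevant setting has to match, not just host and port.

```c
#include <stdbool.h>
#include <string.h>

/* Hypothetical subset of per-connection settings. The field names are
   illustrative and are not libcurl's internal structs. */
struct conn_config {
  const char *host;
  int port;
  const char *tls_ca_file;     /* CA bundle used to verify the peer */
  const char *tls_client_cert; /* client certificate, or NULL */
  const char *ssh_private_key; /* SSH key file, or NULL */
};

/* Compare two optional strings; two NULL pointers count as equal. */
static bool same_str(const char *a, const char *b)
{
  if(!a || !b)
    return a == b;
  return strcmp(a, b) == 0;
}

/* A cached connection is only a candidate for reuse when all of the
   settings above are identical to what the new transfer asks for. */
bool can_reuse(const struct conn_config *cached,
               const struct conn_config *wanted)
{
  return same_str(cached->host, wanted->host) &&
         cached->port == wanted->port &&
         same_str(cached->tls_ca_file, wanted->tls_ca_file) &&
         same_str(cached->tls_client_cert, wanted->tls_client_cert) &&
         same_str(cached->ssh_private_key, wanted->ssh_private_key);
}
```

Leaving a single field out of such a comparison is enough for a transfer with, say, a different client certificate to end up on a connection that was set up without it.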

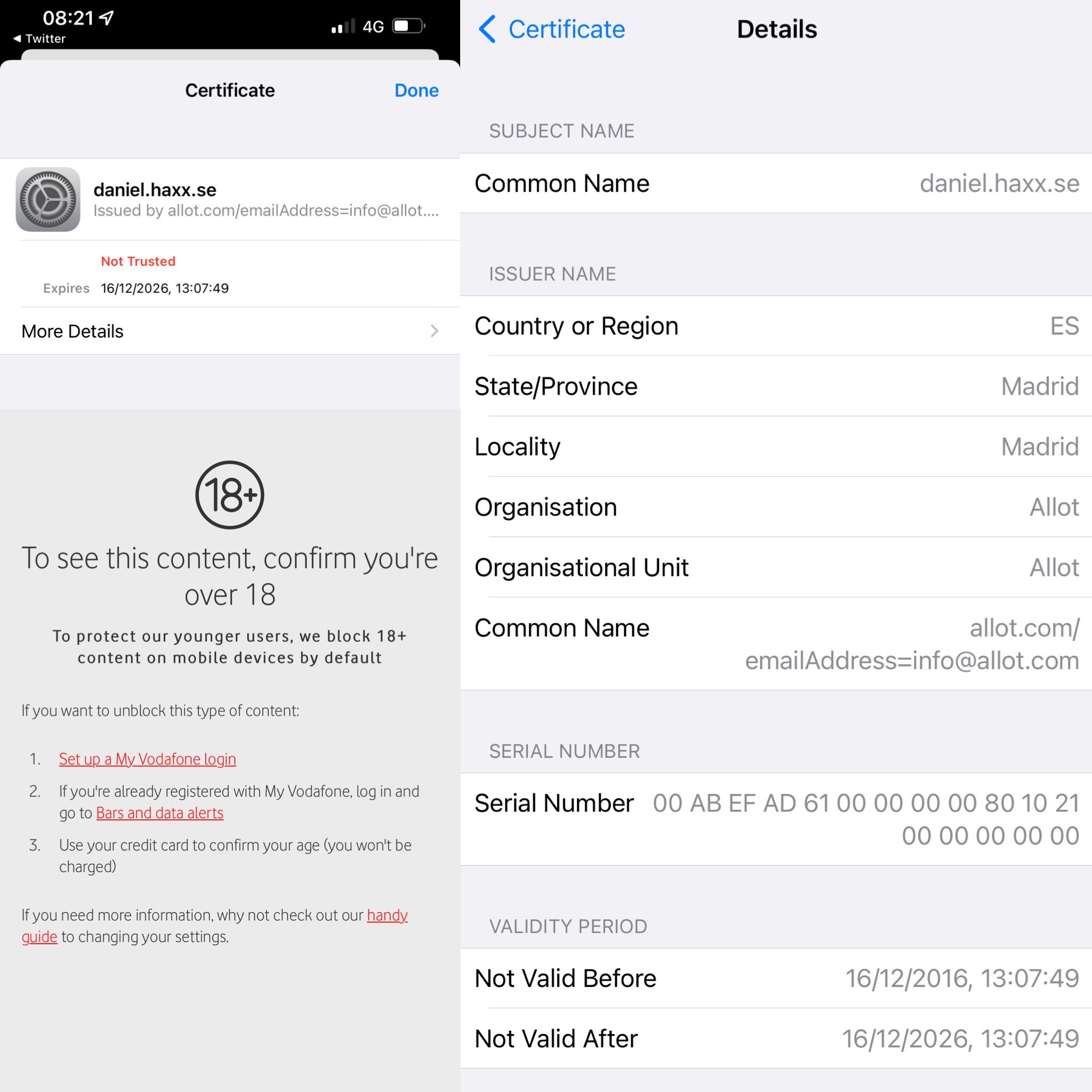

HSTS bypass via trailing dot

CVE-2022-30115 is very similar to the cookie TLD one, CVE-2022-27779. A user can make curl first store HSTS info for a host name without a trailing dot, and then in subsequent requests bypass the HSTS treatment by adding the trailing dot to the host name in the URL.
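
One way to avoid this kind of mismatch is to compare host names with a single trailing dot ignored, so that both spellings hit the same stored entry. The following is a minimal sketch of that idea, not the actual fix in curl; host_len() and same_host() are names made up for this example.

```c
#include <ctype.h>
#include <stdbool.h>
#include <stddef.h>
#include <string.h>

/* Length of 'host' with one trailing dot, if present, left out. */
static size_t host_len(const char *host)
{
  size_t len = strlen(host);
  if(len && host[len - 1] == '.')
    len--;
  return len;
}

/* Case-insensitive host name comparison that treats "example.com" and
   "example.com." as the same name. Hypothetical helper, not from curl. */
bool same_host(const char *a, const char *b)
{
  size_t alen = host_len(a);
  size_t i;
  if(alen != host_len(b))
    return false;
  for(i = 0; i < alen; i++) {
    if(tolower((unsigned char)a[i]) != tolower((unsigned char)b[i]))
      return false;
  }
  return true;
}
```

A lookup built on a comparison like this finds the HSTS entry stored for example.com when the URL says example.com. as well.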

Bug-fixes

The security fixes above took a lot of my effort this cycle, but there were a few additional fixes worth mentioning.

urlapi: address (harmless) UndefinedBehavior sanitizer warning

In our regular attempts to remove warnings and errors, we fixed this warning that was on the border of a false positive. We want to be able to run with sanitizers warning-free so that every real warning we get can be treated accordingly.

gskit: fixed bogus setsockopt calls

A set of setsockopt() calls in the gskit.c backend was found to be defective and has not worked since its introduction several years ago.

define HAVE_SSL_CTX_SET_EC_CURVES for libressl

Users of the libressl backend can now set curves correctly as well. OpenSSL and BoringSSL users already could.

x509asn1: make do_pubkey handle EC public keys

The libcurl private asn1 parser (used for some TLS backends) did not have support for these before.