Over in our podcast Fossified, we did an entire episode dedicated to curl’s 25th birthday.

Enjoy!

curl turns 25 years old and what better way to celebrate this than to join fellow curl friends, developers and fans online on the exact birthday?

At 17:00 UTC on March 20, 2023, we run an online birthday party open for everyone to join.

Consider muting yourself when you join, but feel encouraged to leave the camera on. Click the link above to get the time for your location. It falls within this weird period when the US has switched to daylight saving time while Europe has not yet switched.

If it works out, I will do a presentation walking over the bigger changes done over the years while sipping on the 25 year old single malt I have arranged for the occasion. With the ability for everyone to ask questions or otherwise contribute.

The meeting might be recorded and made available for watching after the fact.

The actual links needed to join or watch the celebrations will be added to this blog post closer to the event start.

Date: March 20 2023

Time: 17:00 UTC March 20, 2023

Where: Online

The event will be live-streamed.

Zoom meeting link

Please express whatever you feel like in regards to curl’s 25th birthday in this discussion thread set up for this purpose.

When a security vulnerability has been found and confirmed in curl, we request a CVE Id for the issue. This is a globally unique identifier for this specific problem. We request the ID from our CVE Numbering Authority (CNA), Hackerone, which, once we make the issue public, publishes all details about it to MITRE, which hosts the central database.

In the curl project we have until today requested CVE Ids for and provided information about 135 vulnerabilities spread out over twenty-five years.

A CVE identifier affects a specific product (or set of products), and the problem affects the product from a version until a fixed version. And then there is a severity. How bad is the problem?

The Common Vulnerability Scoring System (CVSS) is a way to grade severity on a scale from zero to ten. You typically use a CVSS calculator, fill in the info as well as you can and voilà, out comes a score.

The ranges have corresponding names:

| Name | Range |
|---|---|
| Low | below 4.0 |
| Medium | 4.0-6.9 |
| High | 7.0-8.9 |
| Critical | 9.0 or higher |
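As a minimal sketch of how those ranges turn a number into a name, the function below maps a score to the labels in the table. The function name is made up for this illustration. Note that the official CVSS scale also has a "None" rating for a score of exactly 0.0; this sketch follows the table above and treats everything below 4 as Low.

```python
def severity_name(score: float) -> str:
    """Map a CVSS score (0.0-10.0) to the severity name from the table above."""
    if not 0.0 <= score <= 10.0:
        raise ValueError(f"CVSS scores range from 0 to 10, got {score}")
    if score >= 9.0:
        return "Critical"
    if score >= 7.0:
        return "High"
    if score >= 4.0:
        return "Medium"
    # Assumption: everything below 4 is Low, as in the table above.
    return "Low"


if __name__ == "__main__":
    for score in (3.9, 5.3, 7.0, 9.8):
        print(score, severity_name(score))
```

The mapping itself is the trivial part. The subjective part is everything that happens before the number exists.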

Anyone who ever gets a problem reported for their project and tries to assess and set a CVSS score will immediately realize what an imperfect, simplified and one-dimensional concept this is.

The CVSS score leaves out several very important factors, like how widespread the affected platform is and how common the affected configuration is, and yet it is still very subjective as you need to assess and mark different things as None, Low, Medium or High.

The same bug is therefore likely to end up with different CVSS scores depending on who fills in the form – even when the persons are familiar with the product and the error in question.

In the curl project we decided to abandon CVSS years ago because of its inherent problems. Instead we use only the four severity names: Low, Medium, High, and Critical and we work out the severity together in the curl security team as we work on the vulnerability. We make sure we understand the problem, the risks, its prevalence and more. We take all factors into account and then we set a severity level we think helps the world understand it.

All security vulnerabilities are vulnerabilities and therefore security risks, even the ones set to severity Low, but having the correct severity is still important in messaging and for the rest of the world to get a better picture of how serious the issue is. Getting the right severity is important.

Let me introduce yet another player in this game. The National Vulnerability Database (NVD). (And no, it’s not “national” really).

NVD hosts a database of vulnerabilities. All CVEs that are submitted to MITRE are sucked into NVD’s database. NVD says it “performs analysis on CVEs that have been published to the CVE Dictionary“.

That last sentence is probably important.

NVD imports CVEs into their database and they in turn offer other databases to import vulnerabilities from them. One large and known user of the NVD database is the one I mentioned in a recent blog post: the GitHub Security Advisory Database (GHSA DB).

This GitHub thing is an ambitious database that subsequently hosts a lot of vulnerabilities that people and projects reported themselves, in addition to importing information about all vulnerabilities ever published with CVE Ids.

This creates a huge database that in theory should contain just about every software vulnerability ever reported in the public. Pretty cool.

NVD, in their great wisdom, rescores the CVSS score for CVE Ids they import into their database! (It’s not clear how or why, but they seem to not do it for all issues).

NVD decides they know better than the project that set the severity level for the issue, enters their own answers in the CVSS calculator and eventually sets that new score on the CVEs they import.

NVD clearly thinks they need to do this and that they improve the state of the CVEs by this practice, but the end result is close to scaremongering.

Because NVD sets their own severity level and they have some sort of “worst case” approach, virtually all issues that NVD sets severity for are graded worse or much worse when they do it than how we set the severity levels.

Let’s take an example: CVE-2022-42915: HTTP proxy double-free. We deemed this a medium severity. It was not made higher partly because of the very limited time-window between the two frees, making it harder to take advantage of.

What did NVD say? Severity 9.8: critical. See the same issue on GitHub.

Yes, it makes you wonder what magic insights and knowledge the person/bots on NVD possessed when they did this.

The different severity levels should not matter too much but people find those inflated ones and they believe them. Users also find the discrepancies, get confused and won’t know what to believe or whom to trust. After all, NVD is a trust-inducing brand. People think they know their stuff and if they say critical and the curl project says medium, what are we expected to think?

I claim that NVD overstates their severity levels and thereby unnecessarily scares readers and makes them think issues are worse and more dangerous than they actually are.

The fact that GitHub now imports all CVE data from NVD makes these severity levels get transported, shown and believed as they are now also shown in the GHSA DB.

Look how many critical issues there are!

This NVD habit of re-scoring is an old existing habit and I just recently learned about it. GitHub’s displaying the severity levels highlighted it for me, especially since users out there seem to trust and use this GitHub database.

I have talked to humans on the GitHub database team and I push for them to ignore or filter out the severity levels as set by NVD, if possible. But me being just a single complaining maintainer I do not expect this to have much of an effect. I would urge NVD to stop this insanity if I had any way to.

(Updated after first post). It turns out that some CVEs that we have filed from the curl project using our CNA Hackerone have been submitted to MITRE without any severity level or CVSS score at all. For such issues, I of course understand why someone would put their own score on the issue because then our originally set score/severity is not passed on. Then the “blame” is instead shifted to Hackerone. I have contacted them about it.

NVD provides a way to dispute their rescores, but that’s just an open free-text form. I have used that form to request that NVD stop rescoring all curl issues. Although I honestly think they should rather stop all rescoring and only do that on the rare occasions where the original score or severity is obviously wrong.

I cannot dispute the severity levels at GitHub. They show the NVD levels.

My home office was featured over at Hacker Stations where I also detailed stuff in my workplace and offer a few more photos.

I have been working exclusively from home for nine years straight now.

Recently there has been an interesting debate in the Open Source world where people have objected to being called “Suppliers” as in Supply Chain Security when you are but an Open Source developer offering your code to the world for free and at no cost but also without any warranties. That is not a supplier, that’s just a creator.

A supplier would have some form of relationship or contract with the users of your code.

Terminology is difficult and yet powerful but changing what words we use for certain things is an uphill fight. I suspect we will keep using the term supplier even when we are not under contract.

Over the last few years the Open Source ecosystem has gotten attention when serious security flaws have been found and exploited, like log4shell and similar. It has brought the discussions to a higher level and now we talk about SBOMs and what responsibility “suppliers” and users of software based products have.

Already back when I participated in the meeting with the Cyber Safety Review Board the Open Source people present stressed – in unison – that the security problems are rarely problems in the upstream Open Source projects:

Most popular and widely used Open Source projects fix their security problems really fast, in a responsible manner, and provide information and fixes within a time period few proprietary software vendors match.

The issue is rather that the fixed versions are not being used. Things remain unpatched and running old, stale, versions because upgrading is hard and has a cost attached to it. Many stick to not upgrading their product and rather make the bet that whatever problem that practice might bring in the future, it is cheaper than doing upgrades. Capitalism.

Then there are intermediates. There are suppliers of software that are sitting in-between the producer of the code and the end user of it. They are, for example, Linux distributions. They package Open Source products and provide them to users in a convenient way for users to install what they select. They take the role of the supplier.

Open Source software distribution depends on intermediates: package managers and curators. It would be highly impractical to try to use the universe of existing code without them.

This however puts a lot of power and responsibility in the hands of these package managers.

In the early days of the Internet software was often provided via “download sites”. Websites featuring basically a catalog of software, to which they allowed anyone to upload software and from which everyone could download whatever software they wanted.

Those systems ended up highly criticized because they were too easily used to spread viruses or other malware. Over time we have switched to “package managers” which (usually) work in slightly more intelligent manners with package verification and more.

But not all package managers are sane package managers. Some of them are just download sites under a different name. Intermediates who do not accept their responsibilities as software suppliers.

“NuGet is the package manager for .NET” is the exact quote from their website.

NuGet is run by Microsoft (which gives it an official sounding status and flair), but packages are built and provided by volunteers. It is unclear to me what kind of checks, if any, are done on the packages before they are allowed to get distributed by nuget to end users. I looked through their docs but I found no mention of this.

In early March 2023, I went to the nuget site and I searched for “curl”. I got a match for what is a packaged curl version and detailed instructions of how to install it.

On this curl page, it links to the curl project page and the libcurl landing page. For a casual user it probably looks official enough. It also mentions how users have downloaded curl 137,000 times from there. 3,388 are said to have downloaded curl in the last six weeks – proving that this page still tricks people.

A more experienced curler might spot that it links to the old curl domain name (which we moved away from two plus years ago) and that the links use http:// (not https), which we all collectively stopped doing many years ago.

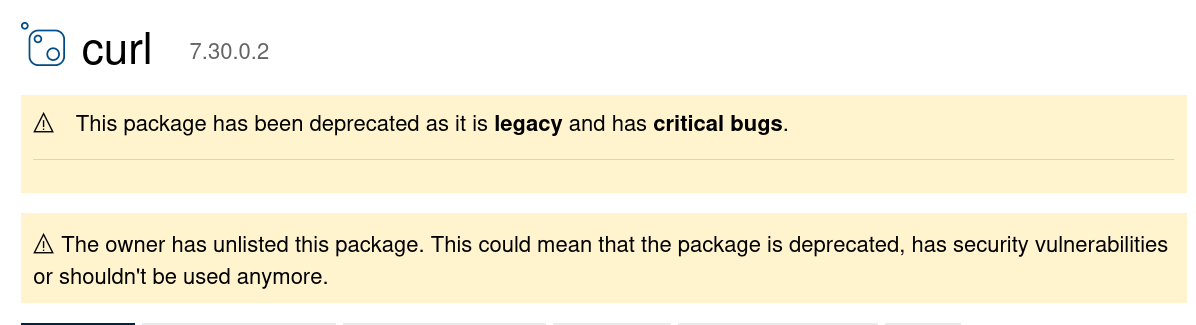

This curl version is almost ten years old. curl 7.30.0 was released in April 2013.

By using this official-sounding package manager to install what sounds like an official package, you get a curl package from a decade ago.

At the moment of this writing, curl 7.30.0 has been reported to have 68 individual security problems. Problems that have all since been fixed in later versions.

I reported this as an issue to NuGet on February 27 and asked them to remove this severely outdated package, especially now that Windows 10 and 11 ship curl bundled already and the curl project offers fresh official Windows builds.

(I would not be able to personally provide an update or “take over” responsibility for this package.)

The Nuget team responded after just six hours:

Thank you for contacting support for the NuGet.org website. We do not support individual NuGet packages. Please contact the package owner directly using the “Contact owners” link on the package details page.

(The response email was also riddled with references to Microsoft. There is no doubt this is an official service.)

I did not ask for support of this package, but okay, I proceeded and contacted the owner of this package via another form. I asked them to either remove the package from nuget or to upgrade it to a modern version as soon as possible. Apparently the nuget admins do not consider this to be a problem worth addressing.

The owner of the nuget curl package is called coapp, and is responsible for a whole series of packages, most of which seem to be packaged in the same style. Their 57 packages have been downloaded 1.8 million times and I could only spot one of them as updated after 2015. Most of them have not been touched since 2013. The curl package is just the one that triggered me. There are probably about 55 other packages that should be updated or removed as well.

Someone pointed out to me that coapp was also the name of some kind of Windows build tool/system, that according to nuget’s own GitHub issue was declared dead already in 2016. They sound related.

When coapp (the owner of the curl package) had not responded after 16 hours, I tried another approach: I could report this package as vulnerable to security problems. I mean, I know for sure it is vulnerable to 68 errors that are well explained (and I wrote every single one of the explanations). But it did not succeed either.

When I tried to report this as a security problem, I could either report a problem with a Microsoft product and get linked over to their site for this purpose, or get informed that if there is a problem with a non-Microsoft package I should just instead contact the owner…

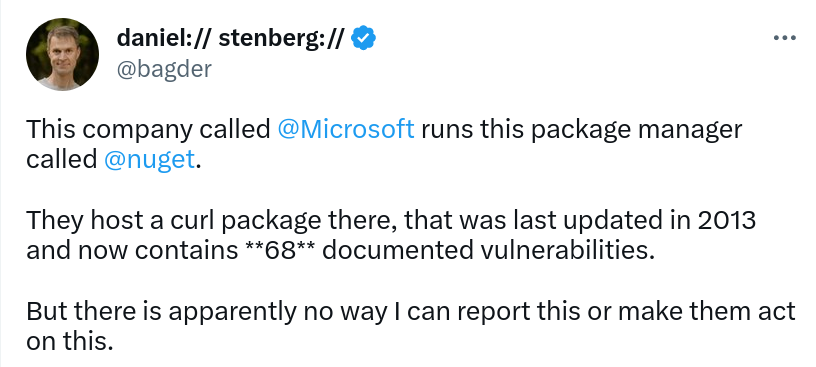

So I took it to Twitter. I posted a four-tweet mini-rant about the situation and got several responses from the right people within minutes.

I was also contacted over email and a conversation started. To their credit, they say that they started the conversation internally already before my Twitter rant based on my initial report, but did not inform me about it.

Not even 24 hours after my Twitter post, the curl package on nuget was “unlisted”. Meaning it is no longer found by search on the site and it was made to feature this big warning message on the top (if you still knew how to go to the URL):

For me, this is certainly good enough. A user can no longer get tricked into believing this is a fine package to install without understanding that there are severe risks involved.

I also specifically told the nuget team that this is not a unique situation for curl. There are numerous other packages in the exact same situation. Like several (most?) of the other packages the same owner published and hasn’t updated since 2013. This is a systemic failure, not a single instance. Not every package can have someone yell on Twitter about its situation.

Why did the nuget team not know about the 68 vulnerabilities that affected the curl version they hosted?

They “blame” the fact that they use the GitHub Security Advisory Database (GHSA DB) to look this up, and yeah, not a single curl issue matched!!

This, because the GHSA DB machine-imports all details about CVEs from MITRE but they apparently cannot set the metadata correctly so there are no issues for a package called “curl”. You can free-text search it to find the curl issues, but they don’t have the metadata fields set correctly, like package name, affected versions or patched versions.
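To illustrate why those metadata fields matter, here is a rough sketch of the kind of lookup a package site could do. Everything in it is an assumption made for illustration: the `Advisory` record, the helper names and the made-up `EXAMPLE-…` entries are not taken from the GHSA DB or from any real CVE.

```python
from __future__ import annotations

from dataclasses import dataclass


def parse_version(text: str) -> tuple[int, ...]:
    """Turn a dotted version such as '7.30.0' into a comparable tuple."""
    return tuple(int(part) for part in text.split("."))


@dataclass(frozen=True)
class Advisory:
    identifier: str      # hypothetical ID, not a real CVE
    package: str         # package name the advisory applies to
    first_affected: str  # first version that has the flaw
    fixed_in: str        # first version that no longer has it

    def affects(self, package: str, version: str) -> bool:
        """True if the named package at this version is inside the affected range."""
        if package != self.package:
            return False
        v = parse_version(version)
        return parse_version(self.first_affected) <= v < parse_version(self.fixed_in)


def matching_advisories(advisories, package, version):
    """Return the advisories whose metadata says this package version is affected."""
    return [a for a in advisories if a.affects(package, version)]


# Made-up records for illustration only.
ADVISORIES = [
    Advisory("EXAMPLE-0001", "curl", "7.1.0", "7.40.0"),
    Advisory("EXAMPLE-0002", "curl", "7.50.0", "7.60.0"),
    Advisory("EXAMPLE-0003", "", "7.1.0", "7.40.0"),  # package name never filled in
]

if __name__ == "__main__":
    for advisory in matching_advisories(ADVISORIES, "curl", "7.30.0"):
        print(advisory.identifier)
```

The third record shows the failure mode: the version range would match, but with the package name left empty the lookup never finds it.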

It really does not scale or work at any level that all the world’s projects would have to go to the GHSA DB to update this information. And how would we even know when and that it is desired?

The GHSA DB allows “community contributions” which in theory could allow us to provide updates. Except that GitHub very explicitly only allows such updates to packages within specific areas. A quote from their site:

Unfortunately, we cannot accept community contributions to advisories outside of our supported ecosystems

The “ecosystems” they talk about are then basically a dozen different package managers. curl is not part of any of those.

In the curl project we highlight every confirmed security issue prominently in release notes and in release video presentations. We send out individual emails about each flaw to let the world know. Every issue is also posted to the oss-security mailing list.

Issues are also sent to MITRE for the official CVE Id database via our CNA: Hackerone. It should be noted however that the MITRE database never has an as complete and detailed description and overview of the problem as curl’s own descriptions have. The MITRE database is for looking up CVEs, not for getting the entire picture.

We have what I consider one of the most complete and detailed overviews of past security vulnerabilities of any software project on our website to help users see exactly which flaws exist in which curl version.

Now we are discussing what more we can do on our end.

Thanks to [anonymous] who highlighted the NuGet situation for us.

Henrik, Johan, Magnus and I are all Swedish “FOSS people” and friends since many years back.

We like open source. We work with Open Source. We have contributed to Open Source since a long time back. We also have slightly different backgrounds and areas of expertise so we don’t all just totally overlap.

We decided we wanted to try putting together a podcast and talk about all things FOSS: from lightweight news down to more deep dives and interviews and discussions with peeps who know more. With our takes and personal views applied of course.

We named it Fossified. We have recorded a first pilot where we test the concept a little, but more importantly this is just the beginning and we have created a GitHub repository where we collect program ideas and proposals.

We certainly need and appreciate your help. With ideas for topics and guests. Perhaps even with a logo or why not an intro song?

We have recorded our first episode. You can find it on our very fancy website fossified.com.

I attended FOSDEM 2023 and over the two days of conference I gave away well over a thousand curl stickers. Every last curl sticker I had in my possession.

What does a curl maintainer do when he runs out of curl stickers? He restocks.

Right now, the only way to get your hands on a set of these fine quality stickers is to meet me. Attend a talk that I do or a conference that I visit.

I might do another attempt at distributing stickers at a later point, but the last time did leave some scars I still haven’t gotten over!

My desktop computer is my trusted work machine that I do the majority of all my (curl) development on. When the 15th computer I’ve owned through the times was ten years old the time was ripe to bump things up a notch.

I don’t do games (as in: never) and I don’t do any other 3D stuff. I just need my two 4K monitors to display my desktops and browser windows fine.

In my ordinary days I compile C code and I run tests. CPU and memory will be used to build and test faster and to be able to run separate VM runtimes in parallel without problems. I rarely even build very large or complicated software projects. (The days of building Firefox are long gone…)

Ideally, this upgrade will last for a long time again so I’ve tried to push it a little to increase those chances.

This new baby is (of course) built from components and I’ve relied heavily on advice, research and help by my brother Björn for this.

I’m a sucker for maximum single-thread performance. Lots of things I do still run single-threaded on a single core so I think this is good for me.

The Intel Core i7-13700K at 3.4 GHz is benchmarked at a CPU Mark that is over 7 times faster than the CPU of my old machine. 16 cores, Socket 1700 Raptor Lake. “13th gen”

On cpubenchmark.net, this model is currently ranked 4th among all current CPUs in single-thread performance.

I think I’m not alone in having past happy experiences with Noctua. This time I use the Noctua NH-U12A, which I have gotten reports does a good job for this CPU.

Something to host the CPU that just does the job. MSI PRO B660M-A DDR4 is a small board, but I don’t need anything more.

Turned out to require a little dance to make it accept my CPU since the BIOS it shipped with did not support it, so we had to insert an older CPU first just in order to upgrade the BIOS to make it boot with the intended CPU!

My plan is to start trying out the built-in Intel video capabilities. Nothing extra. Lots of space in the box!

I don’t think I’ve experienced a situation when I have run out of my memory in my current 32GB setup, so my original plan was to go with 64GB in this new machine. However it turned out that the motherboard does not work with all four slots using my 3600MHz memories at full speed and I decided it is better to start out with 32 really fast gigabytes than 64GB at 2100MHz (which was the alternative)!

Corsair Vengeance RGB PRO SL / 3600MHz / DDR4 / CL18. Two 16GB modules installed makes it 32GB in total. I can go 2x32GB in the future if this turns out to be too limited.

RGB-LEDs on the memory modules is apparently a thing now.

Should be >50% faster than my old memory.

I am not a data hoarder. On the disks in my current machine I use just a few hundred gigabytes. 2 TB will give me sufficient space to play with for a while. My old machine had a 3 TB spinning disk so this is less room than before, but I don’t expect that to be a problem. This storage is specced to do 14 times faster reads than my previous SSD.

The Samsung 980 Pro series SSD 2TB M.2 (MZ-V8P2T0)

The Kolink Enclave / 600W / 80+ Gold is nothing special. A modular and cheap alternative that my preferred supplier happened to offer. Again, I will not run any power hungry graphics cards.

Me and friends have been happy with Fractal Design cases in the past and a friend of mine mentioned that he recently purchased this model and is very happy, so I went with the Fractal Design Define 7 Compact / Solid.

This is a big case for what is otherwise a very small computer (need). Partly because of the recommendation but also partly because my preferred supplier did not offer any smaller Fractal Design case at the moment. At least there will be lots of air in the box.

This case looks almost identical to my old case which will make my machine upgrade at least physically impossible to detect in my home office once installed.

Front interface from left to right: Reset button, Audio I/O, 1x USB 3.1 Gen 2 Type-C, Power button, 2x USB 2.0, 2x USB 3.0.

Here’s how the new beast compares to the old box when doing a few of my regular every day tasks.

On a typical curl debug build of mine, identical setups. Run-time in seconds on the new machine vs the old.

| Task | Old | New |
|---|---|---|
| build curl with make -sj | 13.1 | 2.7 |
| autoreconf -fi | 13.2 | 5.6 |
| configure | 19.3 | 10.1 |
| build in curl’s test directory | 30.1 | 7.6 |
| run the first 200 curl tests with valgrind | 331 | 194 |

Downloading 100 GB from http://localhost to /dev/null with curl. Old: 2248MB/sec. New: 4281MB/sec.
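For those who prefer ratios over raw seconds, a small sketch like this turns the numbers above into speedup factors. The timings and throughput figures are copied from this post; the function and variable names are just made up for the example.

```python
# (task, old seconds, new seconds), copied from the table above
TIMINGS = [
    ("build curl with make -sj", 13.1, 2.7),
    ("autoreconf -fi", 13.2, 5.6),
    ("configure", 19.3, 10.1),
    ("build in curl's test directory", 30.1, 7.6),
    ("run the first 200 curl tests with valgrind", 331, 194),
]

# Localhost download throughput in MB/sec, old and new machine
THROUGHPUT_OLD = 2248
THROUGHPUT_NEW = 4281


def speedup(old_seconds: float, new_seconds: float) -> float:
    """How many times faster the new machine finishes the same task."""
    return old_seconds / new_seconds


def throughput_gain(old_rate: float, new_rate: float) -> float:
    """How many times more data per second the new machine moves."""
    return new_rate / old_rate


if __name__ == "__main__":
    for task, old, new in TIMINGS:
        print(f"{task}: {speedup(old, new):.1f}x")
    print(f"localhost download: {throughput_gain(THROUGHPUT_OLD, THROUGHPUT_NEW):.1f}x")
```

The parallel build gains the most, while the valgrind test run, which is far less parallel, gains the least.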

Things did not work out the way we had planned. The 7.88.0 release that was supposed to be the last curl version 7 release contained a nasty bug that made us decide that we better ship an update once that is fixed. This is the update. The second final version 7 release.

the 214th release

0 changes

5 days (total: 9,103)

25 bug-fixes (total: 8,690)

32 commits (total: 29,853)

0 new public libcurl function (total: 91)

0 new curl_easy_setopt() option (total: 302)

0 new curl command line option (total: 250)

19 contributors, 7 new (total: 2,819)

10 authors, 1 new (total: 1,120)

0 security fixes (total: 135)

As this is a rushed patch-release, there is only a small set of bugfixes merged in this cycle. The following notable bugs were fixed.

The main bug that triggered the patch release. In some circumstances, when data was delivered as an HTTP/2 multiplexed stream, curl would get it wrong and cause the saved data to be corrupt. It would get the wrong data from the internal buffer.

This was not a new bug, but recent changes made it more likely to trigger.

In some cases curl would only allow half the given timeout period when doing connects.

Running the test suite with verbose mode enabled, it would error out with this message. Since a short while back, we consider warnings in the test script fatal so this then aborts all the tests.

The test suite uses gnutls-serv to verify SRP authentication. It will only use this tool if found at startup, but due to recent changes in the GnuTLS project that ships this tool, it now builds with SRP disabled by default and thus can’t be used for this test. Now, the test script also checks that it actually supports SRP before trying to use it.

A regression made it impossible to ask for HTTP/3 if the build did not also support HTTP/2.

The fix in 7.88.0 turned out to cause occasional hiccups (on Windows at least) and this is a follow-up improvement for the verification of the socketpair emulation. When we create the pair and verify that it works, we must make sure that the code handles EWOULDBLOCK correctly.
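The general idea of that check can be sketched roughly like this. It is not curl's code (curl's emulation is written in C for Windows); it is a Python illustration, with a made-up function name, of creating a pair, writing a byte to one end and reading it from the other while treating "would block" as "try again" rather than as a failure.

```python
import select
import socket


def verify_socketpair(timeout: float = 1.0) -> bool:
    """Create a socket pair and check that a byte written to one end arrives at the other."""
    reader, writer = socket.socketpair()
    try:
        reader.setblocking(False)

        # Nothing has been written yet, so a non-blocking read is expected to
        # raise BlockingIOError (EAGAIN/EWOULDBLOCK). That is not an error.
        try:
            reader.recv(1)
            return False  # unexpected data or EOF on a fresh pair
        except BlockingIOError:
            pass

        writer.sendall(b"x")

        while True:
            try:
                return reader.recv(1) == b"x"
            except BlockingIOError:
                # The byte has not arrived yet: wait until the socket is
                # readable (or give up after the timeout), then retry.
                ready, _, _ = select.select([reader], [], [], timeout)
                if not ready:
                    return False
    finally:
        reader.close()
        writer.close()


if __name__ == "__main__":
    print("socket pair works:", verify_socketpair())
```

The important detail is the retry loop: a verification step that gives up on the first would-block result is the kind of thing that produces occasional hiccups.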

Welcome to the final and last release in the series seven. The next release is planned and intended to become version 8.

the 213th release

5 changes

56 days (total: 9,098)

173 bug-fixes (total: 8,665)

250 commits (total: 29,821)

0 new public libcurl function (total: 91)

0 new curl_easy_setopt() option (total: 302)

1 new curl command line option (total: 250)

78 contributors, 41 new (total: 2,812)

42 authors, 18 new (total: 1,119)

3 security fixes (total: 135)

This time we bring you three security fixes. All of them covering cases for which we have had problems reported and fixed before, but these are new subtle variations.

CURL_HTTP_VERSION_3ONLY was added for the library and --http3-only was added to the tool.

CURLU_PUNYCODE was added, which allows an application to get the punycode version of a host name/URL.

-w now offers %{certs} and %{num_certs} which output the server certificate(s).

While we count over 140 individual bugfixes merged for this release, here follows a curated subset of some of the more interesting ones.

When asking for HTTP/3, curl will now also try older HTTP versions with a slight delay so that if HTTP/3 does not work, it might still succeed with and use an older version.
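The pattern described here, start the preferred attempt first and give the alternative a slightly delayed start, can be sketched in a few lines. This is only an illustration of the general idea and not how curl implements it; the function names and the fake connect functions below are stand-ins invented for the example.

```python
import asyncio


async def connect_with_fallback(primary, fallback, delay):
    """Start `primary` at once and `fallback` after `delay` seconds; return the first success."""

    async def delayed_fallback():
        await asyncio.sleep(delay)
        return await fallback()

    tasks = {
        asyncio.ensure_future(primary()),
        asyncio.ensure_future(delayed_fallback()),
    }
    try:
        while tasks:
            done, tasks = await asyncio.wait(tasks, return_when=asyncio.FIRST_COMPLETED)
            # Keep only the attempts that finished without raising.
            winners = [t for t in done if t.exception() is None]
            if winners:
                return winners[0].result()
        raise ConnectionError("all attempts failed")
    finally:
        # Whatever is still running lost the race and is no longer needed.
        for task in tasks:
            task.cancel()


# Stand-ins for real connection attempts (hypothetical, for the demo only).
async def fake_http3_down():
    await asyncio.sleep(0.1)
    raise ConnectionError("HTTP/3 did not work")


async def fake_http3_up():
    await asyncio.sleep(0.01)
    return "HTTP/3"


async def fake_http2():
    await asyncio.sleep(0.01)
    return "HTTP/2"


if __name__ == "__main__":
    print(asyncio.run(connect_with_fallback(fake_http3_down, fake_http2, 0.05)))
    print(asyncio.run(connect_with_fallback(fake_http3_up, fake_http2, 0.05)))
```

The delay is what keeps the preferred version preferred: when it connects quickly, the older version never even gets started.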

An application can now set drastically larger download buffers. For high speed/localhost transfers of some protocols this might sometimes make a difference.

To help users realize when they use a debug build of curl, it now outputs a warning at the top of the --verbose output. We strongly discourage users from shipping or using such builds in production.

WebSocket support remains an experimental feature in curl but it is getting better. Several smaller and bigger bugs were squashed. Please continue to try it and report any problems and we can probably consider removing the experimental label soon.

If you used a DICT URL it would sometimes do the wrong thing as it previously only URL decoded parts of the path when using it. Now it correctly decodes the entire thing.

The libcurl functions for doing these conversions were sped up significantly. In the order of 3x and 7x.

The haproxy details are now properly sent before the TLS handshake takes place.

Fixes a stalling problem when data is being uploaded and downloaded at the same time.

Optimizes outgoing frames for HTTP/2 into doing more in fewer sends.

By changing the order of things, curl is better off spending CPU cycles while waiting for the server’s response and thereby making the entire handshake process complete faster.

A regression in 7.87.0 made this feature completely broken. Now back on track again.

By improving the handling of multiple concurrent streams over a single connection, curl now performs such transfers much faster than before. Sometimes an almost 3x speedup.

The parser that parses the “noproxy” string accepts plain space (without comma) as separators, while hardly any other tool or library does. This matters because it can be set in an environment variable. This accepted space-only separation is now marked as deprecated.
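To make the difference concrete, here is a small sketch of a parser that accepts both styles and reports when the space-only form was used. It is written for this post as an illustration, not copied from curl's parser, and the function name is made up.

```python
def parse_noproxy(value: str):
    """Split a noproxy string into host patterns.

    Returns (patterns, used_space_only_separator).
    """
    patterns = []
    space_only = False
    # Split on commas first: the comma is the separator other tools agree on.
    for chunk in value.split(","):
        parts = chunk.split()
        if len(parts) > 1:
            # Two or more names with nothing but whitespace between them.
            space_only = True
        patterns.extend(parts)
    return patterns, space_only


if __name__ == "__main__":
    for example in ("example.com, localhost", "example.com localhost"):
        patterns, deprecated = parse_noproxy(example)
        note = " (space-only separator, deprecated)" if deprecated else ""
        print(patterns, note)
```

Both strings yield the same two patterns here, which is exactly the problem: another tool reading the same environment variable may see a single odd host name in the second one.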

The NSS backend was improved to work better for cases when the socket has been drained of data and only the NSS internal buffers have it, which could lead to curl getting stalled or losing data. Note: NSS support is marked for removal later in 2023.

curl has an internal socketpair emulation function for Windows. The way it worked did not allow MITM sniffers, but instead returned an error if such a thing was detected. It turns out too many users run tools on Windows that do this, so we have changed the logic to accept their presence and use.

An entirely new line of tests that opens up new ways to test and verify our HTTP implementations in ways we could not do before. It uses pytest and an apache httpd server with special test modules.

A regression.

If you used an invalid URL for upload, curl would erroneously report the problem as “out of memory” which unsurprisingly greatly confused users.