I got into the world of Android for real when I got my HTC Magic in July last year, as my first smartphone. It has served me well for almost 18 months, and now I’ve taken the next step: I got myself an HTC Desire HD to replace it. For your and my own pleasure and amusement, I’m presenting my comparison of the two phones here.

The bump up from a 3.2″ screen at 480×320 to a 4.3″ screen at 800×480 is quite big. The big screen also feels crisper and brighter, though I’m not sure whether the size alone helps give that impression. Even though the 4.3″ screen has the same resolution that several phones already offer at 3.7″, the pixel density is still higher than my old phone’s and, if I may say so, it is quite OK.
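As a rough sanity check of the pixel density claim, here is a minimal Python sketch. It assumes square pixels and uses the nominal diagonal sizes quoted above; the function name `pixels_per_inch` is just something made up for this illustration.

```python
import math


def pixels_per_inch(width_px: int, height_px: int, diagonal_in: float) -> float:
    """Pixel density measured along the screen diagonal (assumes square pixels)."""
    return math.hypot(width_px, height_px) / diagonal_in


# Nominal figures from the text: resolution and diagonal size in inches.
magic_ppi = pixels_per_inch(480, 320, 3.2)
desire_hd_ppi = pixels_per_inch(800, 480, 4.3)
other_37_ppi = pixels_per_inch(800, 480, 3.7)  # a generic 3.7-inch 800x480 phone

print(f"HTC Magic:       {magic_ppi:.0f} ppi")
print(f"HTC Desire HD:   {desire_hd_ppi:.0f} ppi")
print(f"3.7-inch 800x480: {other_37_ppi:.0f} ppi")
```

With these inputs the Desire HD lands between the Magic and the 3.7″ phones, which matches the impression above: denser than the old phone, but not as dense as the same resolution squeezed into a smaller panel.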

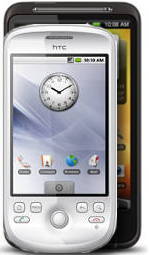

The Desire HD is huge. At 68 mm wide, 11.8 mm thick and 123 mm tall, and a massive 164 grams, it is a monster next to the Magic. The Magic is 55.5 mm wide, 13.6 mm thick and 113 mm tall at 116 grams.

So when the Magic is put on top of the HD with two sides aligned, the HD is roughly 10 mm larger in both directions. All things considered, the bigger size is not a problem. The big screen is lovely to use when browsing the web, reading emails and using the on-screen keyboard. I have no problem sliding the phone into my pocket, and the weight is actually pretty good, as it makes the phone feel solid and reliable in my hand. Also, the HD has much less “margin” outside of the screen than the Magic, so the percentage of the front that is screen is now higher.
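To put a rough number on that “margin” observation, here is a small Python sketch that estimates how much of the front is screen. It is only an approximation: it assumes square pixels and treats the front of each phone as a plain width × height rectangle, ignoring rounded corners. The helper name `screen_share_of_front` is hypothetical.

```python
import math

MM_PER_INCH = 25.4


def screen_share_of_front(diagonal_in: float, width_px: int, height_px: int,
                          body_w_mm: float, body_h_mm: float) -> float:
    """Estimate the fraction of the phone's front face covered by the screen."""
    diagonal_px = math.hypot(width_px, height_px)
    mm_per_px = diagonal_in * MM_PER_INCH / diagonal_px  # assumes square pixels
    screen_area = (width_px * mm_per_px) * (height_px * mm_per_px)
    front_area = body_w_mm * body_h_mm  # rectangle approximation of the front
    return screen_area / front_area


# Screen and body dimensions as quoted in this post (portrait orientation).
magic_share = screen_share_of_front(3.2, 320, 480, 55.5, 113)
desire_hd_share = screen_share_of_front(4.3, 480, 800, 68, 123)

print(f"HTC Magic:     {magic_share:.0%} of the front is screen")
print(f"HTC Desire HD: {desire_hd_share:.0%} of the front is screen")
```

Under those assumptions the Magic comes out at just under half of the front being screen, and the Desire HD at a bit over 60%.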

The big screen makes the keyboard much easier to type on. The Android 2.2 (Sense?) keyboard is also better than the old 1.5 one that shipped on the Magic. The ability to switch language quickly is going to make my life soooo much better. And again, the big screen makes the buttons larger and more separated, and that is good.

The HD has soft buttons at the bottom of the phone, where the Magic has physical ones. I actually like physical ones a bit better, but I’ve found these to work really well and I’ve not had much reason to long for the old ones. I also appreciate that the HD has the four buttons in the same order as the Magic, so I don’t have to retrain my spine for that. The fact that Android phones can have the buttons in other orders is a bit confusing to me, and I think it is entirely pointless for manufacturers not to go with a single unified order!

I never upgraded the Magic. Yes, I know it’s a bit of a tragic reality when a hacker-minded person like myself doesn’t even get around to upgrading the firmware of his phone, and I haven’t yet experienced cyanogenmod other than through hearsay. Thus, the Android 2.2 on the HD feels like a solid upgrade from the Magic’s old and crufty Android 1.5. The availability of a wide range of applications that didn’t work on the older Android is also nice.

The Desire HD is a fast phone. It is clocked at twice the speed of the Magic, I believe the Android version is faster in general, it has more RAM and it has better graphics performance. Everything feels snappy and happens faster than before: getting a web page to render, installing apps from the market, starting things. Everything.

The HTC Magic was the first Android phone to appear in Sweden. It shipped with standard Android, before HTC started to populate everything with their HTC Sense customization. This is therefore also my introduction to HTC Sense, and as I’ve not really used 2.2 before either, I’m not 100% sure exactly which stuff is Sense and which is just a better and newer Android. I don’t mind that very much. I think HTC Sense is a pretty polished thing, and it isn’t far enough from regular Android to annoy me much.

I’ve not yet used the HD in a way similar enough to how I used the Magic to be able to judge how the battery time compares. The Magic’s 1340 mAh battery spec against the HD’s 1230 mAh doesn’t really say much. The HD battery is also physically smaller.

USB micro vs mini. The USB micro plug was designed to handle more insert/unplug rounds, and “every” phone these days uses it. The Magic was of the former generation and came with a mini plug. There’s not much to say about that, other than that the GPS in my car uses a mini plug and thus the cable in the car was conveniently able to charge both my phone and GPS. Now I have to track down a converter so that I don’t have to switch between two cables just for that reason.

The upgrade to a proper earphone plug is a huge gain. The Magic was one of the few early phones that only had a USB plug for charging, earphones and data exchange. The most annoying part of that was that I couldn’t listen with my earphones while charging.

The comparison image on the right side here is a digital mock-up that I’ve created using the correct scale, so it shows the devices’ true relative sizes. I just so failed at making a decent proper photograph…

After my printer died, I immediately ordered a new one online and not long afterwards I could pick it up from my local post office. As I use both the scanner and the printer features quite a lot, I went with another “all-in-one” model, and I chose an HP model (again) basically because I’ve been happy with how my previous one worked (before its death). “HP Officejet Pro 8500 A910” seems to be the whole name. And yeah, it really is as black as the picture here shows it.
