(This text has been updated since first post. It used to say 300 million but then I missed all iOS devices…)

Ok, so here’s a little ego game. The rules are very simple: try to figure out all things I’ve written code in (to any noticeable degree) and count how many users the products that use such code might have. Then estimate the total amount of humans that may in fact use my code from time to time.

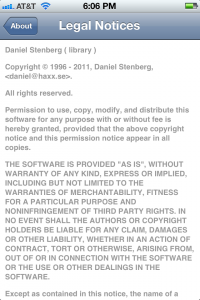

I’ve been doing software both for fun and professionally for over 20 years (my first code I made available to others was written in 1986 on the C64). But as I look back on what I’ve done at my day job for all this time, most of my labor have been hidden into some sort of devices or equipment that never really were distributed to many customers. I don’t think I’ve ever done software professionally for consumer stuff. My open source code however has found its way into all sorts of things so I decided I could limit this count to open source code I’ve done. It is also slightly easier. Or perhaps less hard. And when it comes to open source, none of my other projects is as popular and widely used as curl. Counting curl users will drown all others.

First some basic stats: the curl.haxx.se web site gets more than 12000 unique visitors every weekday. curl packages are downloaded from there at a rate of roughly 1 million times/year. The site sends over 200GB of data every month. We have no idea how large share of users who get curl from the main site, but a guess is that it is far less than half of the user base. But of course the number of downloads says nothing about how many users there are.

Mac OS X ships with curl (and libcurl?) by default. There are perhaps 86 million macs in the world.

libcurl is used in television sets and Bluray players made by at least five major brands (LG, Panasonic, Philips, Sony and Toshiba). I’m convinced they don’t use it in all models but probably just a few of their higher end internet-connected ones. 10% of the total? It seems in 2009 there were 35 million flat panel TVs sold in the US with a forecast of the sales growing slightly over the years. I figure that would mean perhaps 100 million ones sold in the US the three last years possibly made by these brands (and lets assume that includes some Blu-rays too), and lets say that is half the world market for them, it would make libcurl shipping in 20 million something TVs.

curl and libcurl are installed by default in some Linux distributions but not in all. In Debian it is an optional extra and the popcon overview shows perhaps 70% of Debian users install libcurl (and 56% use libssh2). Lets assume that’s a suitable average for all desktop Linux users. How many are we? Let’s for the sake of the argument say that 3% of all computers using the internet run Linux. Some numbers say there are 2.3 billion internet users. It would make 70 million Linux computers and thus 49 million libcurl installations. Roughly.

Open Office and the recent spin-off LibreOffice are both using libcurl. Open Office said they have 100 million users now in May 2012.

Games: Second Life, Warhammer 40000, Ghost Recon, Need for speed world, Game Face and “Saints Row: The third” all use libcurl. The first game alone boasts over 20 million registered users. I couldn’t find any numbers for any other game I know uses libcurl.

Other embedded uses: libcurl and libssh2 are both announced as supported packages of Wind River Linux, the perhaps most dominant provider of embedded Linux and another leading provider is Montavista which also offers curl and libcurl. How many users? I have absolutely no idea. I’d say more than just a few, but how many? Impossible to tell so let’s ignore that possibly huge install base. Spotify uses or at least used libcurl, and early 2012 they had 15 million users.

Phones. libcurl is shipped in iOS and WebOS and it is used by RIM and Apple for some (to me) unknown purposes. Lots of applications on Android still build and use libcurl, c-ares and libssh2 for their apps but it is just impossible to estimate how many users they get. Apple has sold 250 million iOS devices, at least. (This little number was missed by me in the calculation I first posted.)

Infrastructure. libcurl is used in the Tornado web server made by Friendfeed/Facebook and it is used by significant services at Yahoo.com. How many users of said services? Surely many millions. But really, that would be users of just 2 libcurl users so let’s not rush ahead and count those as direct users!

libcurl powers the very popular PHP/CURL extension that a large amount of PHP-running sites have enabled and use. How many? In 2008, 33% of all internet sites run PHP. Let’s say the share has decreased to 30% since then and the total amount of active sites is now 200M. That makes 60M PHP sites, and if there’s 10% of them using PHP/CURL we’re talking 6 million users.

Development. git, darcs, bazaar and Mercurial are all children of the distribution version control systems (some of them very popular) and they all use libcurl. How many users do they have? Since they’re all working on multiple platforms I would estimate the number of users of them collectively to be in the tens of millions range. Let’s say 10 million.

86 + 20 + 49 + 100 + 20 + 15 + 250 + 6 + 10 = 556 million users

And yes, of course a lot of these users will be the same actual human. But I may also just have counted all the numbers completely wrong to start with. I would say I’m probably within the correct magnitude!

550 million users out of the world’s 2.3 billion internet users. 1 out of 4 are using something that runs code I wrote. Kind of cool!

Sweden has a population of less than 10 million. 550 million is almost twice the entire USA, four times the population of Russia or almost eight times the population of Germany… As a comparison to some big browsers, a recent article claims Google Chrome has 200 million users in April 2012 which may be around 25% of the browser market and showing that basically none of the individual browsers have a lot more users than 300 million…

Of course I know that every single person who reads this is a knowing or unknowing user… Can you think of any other major users?