We have a sort of symbiosis between the curl project and the PHP project, at least we in the curl project get a lot of people learning about curl the first time when they hack PHP. This happens to the extent that to a lot of people, curl is but the name of a PHP extension.

We have a sort of symbiosis between the curl project and the PHP project, at least we in the curl project get a lot of people learning about curl the first time when they hack PHP. This happens to the extent that to a lot of people, curl is but the name of a PHP extension.

So while we can thank the PHP project for referring us a bunch of users that might not otherwise have found us, there is also quite some “friction” or perhaps better called “disagreements” between our projects and how we (don’t) interact.

name

CURL vs libcurl vs cURL. We only ever use the funny casing cURL when referring to the cURL project. The cURL project produces curl and libcurl. curl is a command line tool and libcurl is a file transfer library.

The PHP team provides and distributes an extension they call CURL which is a libcurl binding for PHP. This naming causes a great deal of confusion to PHP users who go to the curl site only to find that it isn’t at all devoted to (just) the PHP extension but instead there’s mostly a lot of other curl stuff there!

I’ve discussed this naming issue with the PHP team on several occasions but they don’t agree with me that this causes confusion, and even if it would cause confusion they seem to be of the opinion that it doesn’t matter since the PHP users should find all their info about CURL and related matters on the PHP site and thus it doesn’t matter what the curl site shows or not. (Or something similar to that, I really don’t mean to put words into their mouths so you better ask them about this to get their real and unaltered view – see my link to an old conversation for some info.)

I tend to call it PHP/CURL just to make sure it is clear that we’re talking about the binding. This of course also confuse users since that’s not what it is called in the PHP documentation…

irony

PHP themselves recognize the problem of related projects borrowing the name, so they forbid derivate projects to include “PHP” in their names. Clearly stated in paragraph 4 of their license.

versions

The binary build of PHP for windows have libcurl built in statically with the curl extension code, so people can’t easily replace the libcurl version used by PHP. And in general, Windows people using open source are much less likely to ever build anything on their own in my experience.

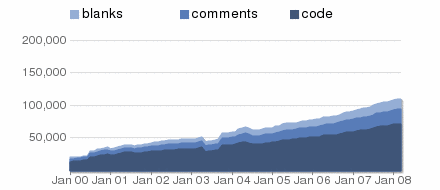

PHP 5.2.6 was released on May 1st 2008 still has libcurl 7.16.0 built into the Windows version. That libcurl version was released in October 2006 and right now we have released eight (8) releases after that one. All of them including many bug fixes. This is more than slightly annoying.

support

This isn’t anyone’s fault but… there really aren’t many PHP people who are involved or care about the libcurl binding so those who have PHP/CURL problems tend to ask questions on the curl-and-php mailing list and in the #curl IRC channel but there aren’t any PHP insiders around in those areas to answer PHP questions…

development

Is it just my imagination or isn’t there a lot of PHP users that have asked for the same features in the PHP libcurl binding for a long time by now, but really very few actually step forward and make a difference? So these features remain unfixed and not added. This is even “just” a binding, nothing of the really hard work is done in the binding itself… It might just be me and my head, but the ratio for doers/plain users in the PHP world seems to be exceptionally low in comparison to many other open source areas I see. Of course this is tainted by me only really seing the PHP/CURL side of the PHP world.

future

I have no reason to expect anything to change, nor do I know how I can make anything of this change on my own so I assume things will just continue working exactly like this in the future as well…