It’s time for a little “doomsday prophesy”.

Already seen happen

As was reported last year in Sweden, mobile operators here sell customer data (Swedish article) to companies who are willing to pay. Even though this might be illegal (Swedish article), all the major Swedish mobile phone operators do this. This second article mentions that the operators think this practice is allowed according to the contract every customer has signed, but that’s far from obvious in everybody else’s eyes and may in fact not be legal.

For the non-Swedes: one mobile phone user found himself surfing to a web site that would display his phone number embedded on the site! This was only possible due to the site buying this info from the operator.

While the focus on what data they sell has been on the phone number itself – and I do find that a pretty good privacy breach in itself – there’s just so much more the imaginative operators just very likely soon will offer companies who just pay enough.

Legislations going the wrong way

There’s this EU “directive” from a few years back:

Directive 2006/24/EC of the European Parliament and of the Council of 15 March 2006 on the retention of data generated or processed in connection with the provision of publicly available electronic communications services or of public communications networks and amending Directive 2002/58/EC

It basically says that Internet operators must store information of users’ connections made on the net and keep them around for a certain period. Sweden hasn’t yet ratified this but I hear other EU member states already have it implemented…

(The US also has some similar legislation being suggested.)

It certainly doesn’t help us who believe in maintaining a level of privacy!

What soon could happen

There’s hardly a secret that operators run network supervision equipments on their customer networks and thus they analyze and snoop on network data sent and received by each and every customer. They do this for network management reasons and for such legislations I mentioned above. (Disclaimer: I’ve worked and developed code for a client that makes and sells products for exactly this purpose.)

Anyway, it is thus easy for the operators to for example spot common URLs their users visit. They can spot what services (bittorrent, video sites, Internet radio, banks, porn etc) a user frequents. Given a particular company’s interest, it could certainly be easy to check for specific competitors in users’ visitor logs or whatever and sell that info.

If operators can sell the phone numbers of their individual users, what stops them from selling all this other info – given a proper stash of money from the ones who want to know? I’m convinced this will happen sooner or later, unless we get proper legislation that forbids the operators from doing this… In Sweden this sell of info is mostly likely to get done by the mobile network operators and not the regular Internet providers simply because the mobile ones have this end user contract to lean on that they claim gives them this right. That same style of contract and terminology, is not used for regular Internet subscriptions (I believe).

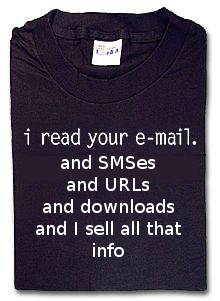

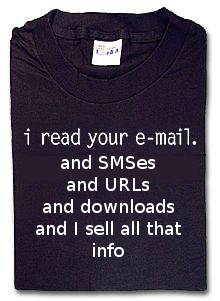

So here’s my suggestion for Think Geek to expand somewhat on their great shirt:

(yeah, I have one of those boring ones with only the first line on it…)