In the afternoon of October 17, 2013 we merged the first config file ever that would use Travis CI for the curl project using the nifty integration at GitHub. This was the actual introduction of the entire concept of building and testing the project on every commit and pull request for the curl project. Before this merge happened, we only had our autobuilds. They are systems run by volunteers that update the code from git maybe once per day, build and run the tests and then upload all the logs.

Don’t take this wrong: the autobuilds are awesome and have helped us make curl what it is. But they rely on individuals to host and admin the machines and to setup the specific configs that are tested.

With the introduction of “proper” CI, the configs that are tested are now also hosted in git and allows the project members to better control and adjust the tests and configs, plus that we can run them on already on pull-requests so that we can verify code before merge instead of having to first merge the code to master before the changes can get verified.

Seriously. Always.

Travis provided a free service with a great promise.

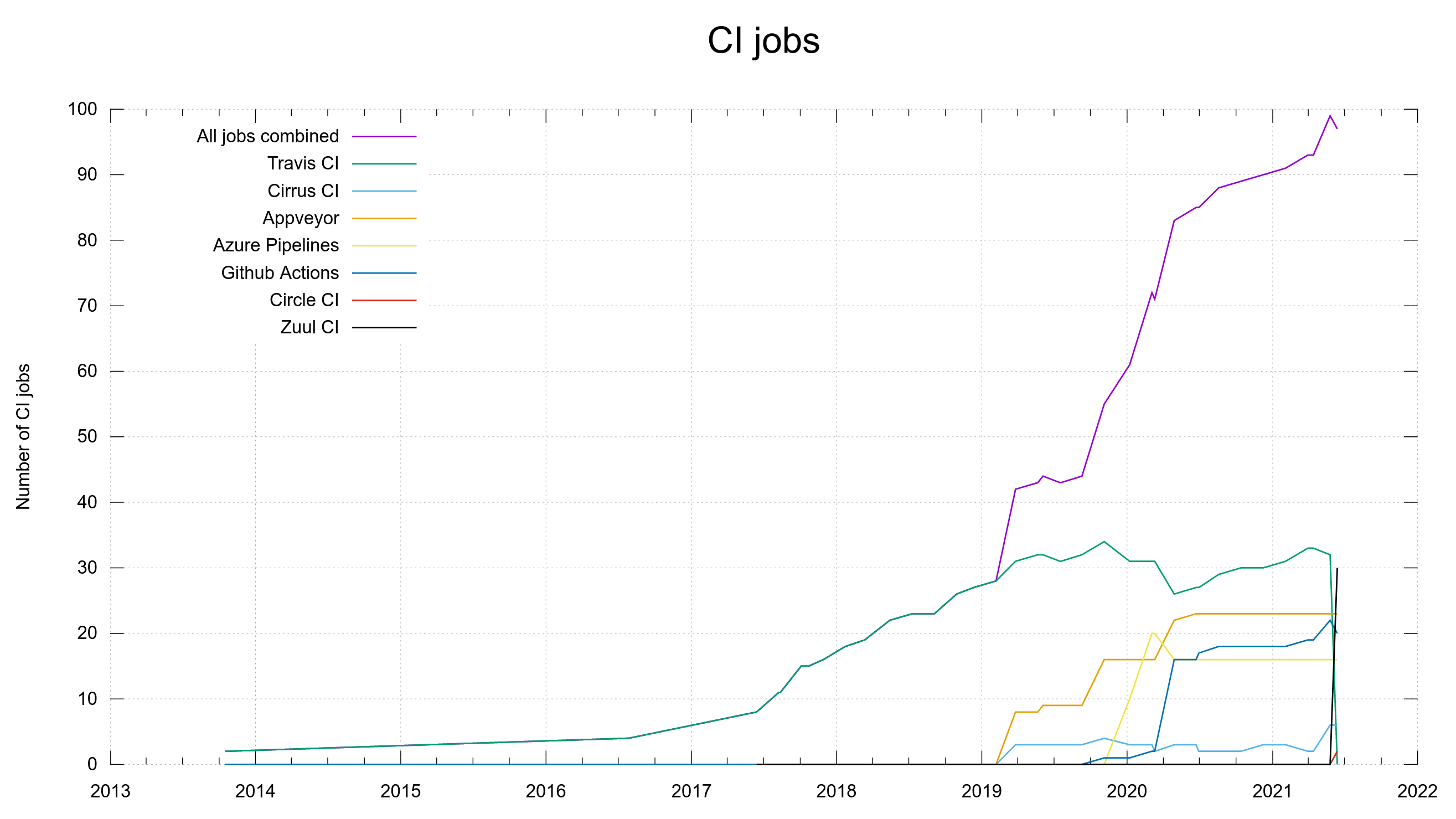

In 2017 we surpassed 10 jobs per commit, all still on Travis.

In early 2019 we reached 30 jobs per commit, and at that time we started to use and spread out the work on more CI services. Travis would still remain as the one we’d lean on the heaviest. It was there and we had custom-written a bunch of jobs for it and it performed well.

Travis even turned some levers for us so that we got more parallel processing powers than on the regular open source tier, and we listed them as sponsors of the curl project for their gracious help. This may or may not be related to the fact that I met Josh Kalderimis (co-founder of travis) in 2019 and we talked about curl’s use of it and they possibly helping us more.

Transition to death

This year, 2021, the curl project runs around 100 CI jobs per commit and PR. 33 of them ran on Travis when we were finally pushed over from travis-ci.org to their new travis-ci.com domain. A transition they’d been advertising for a while but wasn’t very clearly explained or motivated in my eyes.

The new domain also implied new rules and new tiers, we quickly learned. Now we would have to apply to be recognized as an open source project (after 7.5 years of using their services as an open source project). But also, in order to get to take advantage of their free tier being an open source project was no longer enough. Among the new requirements on the project was this:

Project must not be sponsored by a commercial company or

organization (monetary or with employees paid to work on the project)

We’re a small independent open source project, but yes I work on curl full-time thanks to companies paying for curl support. I’m paid to work on curl and therefore we cannot meet that requirement.

Not eligible but still there

I’m not sure why, but apparently we still got free “credits” for running CI on Travis. The CI jobs kept working and I think maybe I sighed a little from relief – of course I did it prematurely as it only took us a few days into the month of June until we had run out of the free credits. There’s no automatic refill but we can apparently ask for more. We asked, but many days after having asked we still had no more credits and no CI jobs could run on Travis anymore. CI on Travis at the same level as before would cost more than 249 USD/month. Maybe not so much “it will always be free”.

The 33 jobs on Travis were there for a purpose. They’re prerequisites for us to develop and ship a quality product. Without the CI jobs running, we risk landing bad code. This was not a sustainable situation.

We looked for alternative services and we quickly got several offers of help and assistance.

New service

Friends from both Zuul CI and Circle CI stepped up and helped us started to get CI jobs transitioned over from Travis over to their new homes.

At June 14th 2021, we officially had no more jobs running on Travis.

Visualized as a graph, we can see the Travis jobs “falling off a cliff” with Zuul rising to the challenge:

Services come and go. There’s no need to get hung up on that fact but instead keep moving forward with our eyes fixed on the horizon.

Thank you Travis CI for all those years of excellent service!

Pay up?

Lots of people have commented and think I’m “whining” about Travis CI charging for something that is useful and that I should rather just pay up. I could probably have gone with that but I dislike their broken promise and that they don’t consider us Open source anymore and I feel I have a responsibility to use the funds we get from gracious donors as wisely and economically as possible, and that includes using no-cost or cheap services rather than services charging thousands of dollars per year.

If there really were no other available and viable options, then paying could’ve been an alternative. Now, moving on to something else was the right choice for us.

Credits

Image by Gerd Altmann from Pixabay