I have been awarded the European Open Source Achievement Award! Proud, happy and humble, I decided to accept it, as well as the associated nomination for president of the new European Open Source Academy for the coming two years.

This information was not made public until the very day of the award ceremony, January 30th 2025, so I was not able to talk about it before. Then FOSDEM kept me occupied in the days immediately following.

Official letter

Dear Mr. Daniel Stenberg,

On behalf of the OSAwards.eu initiative, it is our great honour to invite you to receive the European Open Source Achievement Award, on the occasion of the inaugural ceremony of the European Open Source Awards to be held in Brussels on Thursday, 30 January 2025 at 18:30.

In recognition of your leadership quality, we would also like to extend this invitation for you to join the European Open Source Academy in the quality of Academy President, for a two year tenure. As Academy President you will play a critical role in guiding the establishment and reputability of the European Open Source Academy and its annual European Open Source Awards. You can find more information about the provisional structure of the European Open Source Academy and expected involvement from founding members in the attached project brief.

This inaugural award, corresponding with an invitation to the European Open Source Academy, recognises your exceptional contribution as a European open source leader whose impact has transformed the European and global technological landscape, and whose engagement has highly contributed to a thriving open source community in Europe. Your founding and continuous contribution to cURL has had a tremendous impact on the global and European technological landscape thanks to its innovative nature. We also want to recognise your continuous commitment to open source maintenance and knowledge sharing, positioning you as a leading and respected figure in the European open source community.

The inaugural ceremony will be a formal event followed by a gala cocktail reception. You are welcome to bring a guest with you, please let us know their name for us to add them to the guest list. You can find more information about the programme in the attached concept note. Logistical information for the ceremony will be shared upon confirmation of your attendance.

Thank you for considering our invitation to receive the European Open Source Achievement award and to join the European Open Source Academy. We hope that you will accept this testimony of recognition and we remain available should you have any questions.

On the award

I have worked on and with Open Source for some thirty years. I believe in the model, I like the community, I enjoy the challenges. Some of my work in Open Source has been successful beyond my wildest dreams.

Getting recognition for my work in the wider world outside the inner circle is huge. The many thousands of hours of staring at screens, debugging code, tearing hair and silently yelling at my past self for not writing better comments actually sometimes produce something useful.

There are many awesome people in the European Open Source universe. I can only imagine the struggle the award committee had to select a single awardee.

Thank you!

My wife Anja joined me in Brussels, and we attended the award gala dinner there on Thursday, January 30th, 2025, just before the FOSDEM weekend, where I was handed the actual physical award. I sported an extra large smile on my face throughout the FOSDEM conference that followed.

The actual physical award trophy is shown off in a little video below.

On the academy

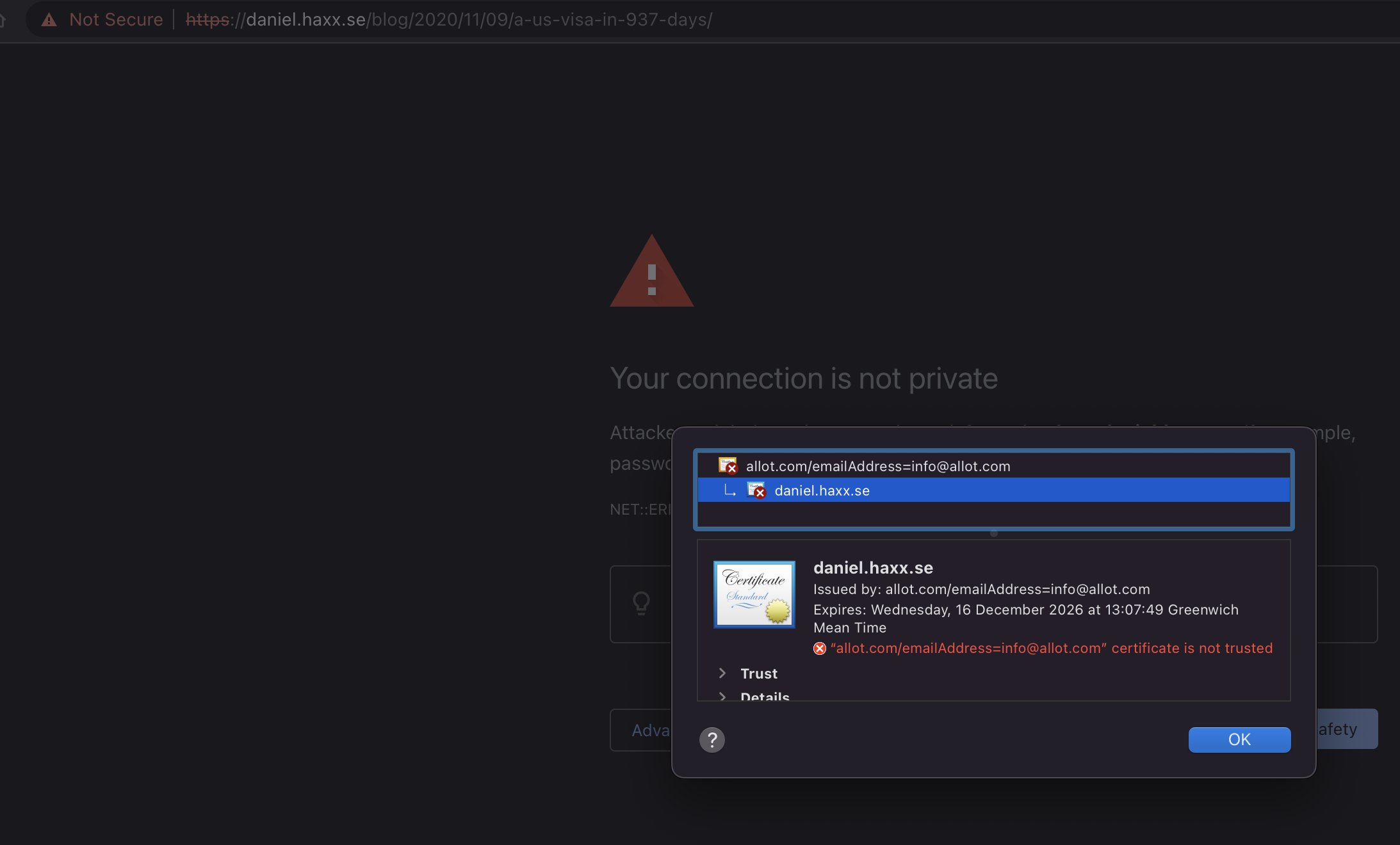

I believe Open Source has been an immense success story over the last three decades and it has become what is essentially the foundation of all current digital infrastructure. I am convinced that Europeans are already well positioned in this ecosystem but we should not lean back and think that anything is done or over. We need to keep on our toes and rather strengthen and enforce Open Source, our participation in and our understanding of it – and we need to fund it. This is where a lot of important software is made and controlled. We need to make sure that the EU leadership understands this.

Those are examples of what I hope this European Open Source Academy can help out with.

My role as president of the academy is not going to be a time-sink. I cannot allow myself that. I have curl work to do and I remain a full-time lead developer of curl. I will be president on the side, for a limited number of hours per month.

Next

It never gets old or boring to get awards, so even if I have been given a whole range of truly fabulous awards by now, every new one still makes me humble and super excited.

Recognition, awards and thank-yous are superb ways to boost energy and motivation – I highly recommend them. I am totally set on continuing my work on curl and other Open Source for many more years to come.

I want to lead by example. I aspire to be the Open Source person I myself looked for and tried to mimic when I was younger.

Showing off

Some photos

Next to me you can also see the three additional awardees: Amandine Le Pape (The Business & Impact Award), Lydia Pintscher (The Advocacy & Awareness Award), and David Cuartielles (The Skills & Education Award). Awesome people.

The person handing me the award, seen in the photos, is Omar Mohsine, open source coordinator at the United Nations Office for Digital and Emerging Technologies.

Update

The seven stars on the OSAwards logo symbolize the core principles and values of open source software. They represent collaboration, transparency, community, innovation, freedom, diversity, and inclusivity.