We may not get a very warm and sunny summer this year in Sweden, but now at least we have the Haxx towels to use during the very brief moments on the beach! Each one measures 140 x 70 centimeters, and if you click on the image you’ll get a higher resolution version to browse.

curl ‘techJobs’ SanFran

I got this awesome curl sighting from US 101 near SFO sent to me a few days ago (click for a slightly larger version) – showing curl on a very large billboard in an ad for Dice.

It is clearly meant to look like a unix style command line that invokes curl. The command line would only be syntactically correct if the user truly has two host names named “techJobs” and “SanFran” and curl would attempt to get their root HTTP document off them on port 80.
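As a rough illustration of why both words end up treated as host names: when an argument carries no scheme, curl defaults to HTTP (its real logic also guesses other protocols from host name prefixes such as "ftp."). The helper below is a hypothetical sketch, not curl code, and `demo_bare_host_to_url` is a made-up name.

```c
#include <stdio.h>
#include <string.h>

/* Hypothetical helper, not part of curl: builds the URL that a bare
   host name argument would roughly correspond to when no scheme is
   given. Returns 1 on success, 0 if the result did not fit. */
int demo_bare_host_to_url(const char *arg, char *url, size_t urlsize)
{
  int n;

  if(strstr(arg, "://"))
    /* the argument already names a scheme, keep it as it is */
    n = snprintf(url, urlsize, "%s", arg);
  else
    /* assume HTTP and ask for the root document */
    n = snprintf(url, urlsize, "http://%s/", arg);

  return (n >= 0 && (size_t)n < urlsize);
}
```

Under that assumption, the billboard's two arguments would turn into requests for `http://techJobs/` and `http://SanFran/`.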

It is followed by a C++/C99-style comment, which really is odd added context.

To me, all the sign shows is a company that desperately tries to look techy but in trying too hard it fails really miserably. I’m really honored my work found its way to such a high profile spot though!

Follow-up, September 11th:

Much to my surprise, today I received an email from Dice responding to my “critique” above. I have to say that this response was much more than I expected and I tip my hat to them for this response:

Hi Daniel,

I’m the marketing director for Dice.com and I wanted to reach out to you to thank you for spotting our billboard error on the 101. We are deeply embarrassed by this mistake to say the least. In a classic coding scenario, our QA failed us. Unfortunately for us, we bought this spot long-term and we are trying to figure out how quickly we can replace the content.

We’ve been working this year to do a better job communicating in a more authentic way in our marketing efforts. Obviously, making a mistake like this makes it look pathetically inauthentic. At the end of the day, getting it wrong is inexcusable, particularly in such a visible way.

(photo by Stan van de Burgt, emphasis added by me)

HTTP2 Expression of Interest: curl

For the readers of my blog, this is a copy of what I posted to the httpbis mailing list on July 12th 2012.

Hi,

This is a response to the httpbis call for expressions of interest.

BACKGROUND

I am the project leader and maintainer of the curl project. We are the open source project that makes libcurl, the transfer library, and curl, the command line tool. It is among many things a client-side implementation of HTTP and HTTPS (and some dozen other application layer protocols). libcurl is very portable and there exist around 40 different bindings to libcurl for virtually all languages/environments imaginable. We estimate we might have upwards of 500 million users or so. We’re entirely volunteer driven without any paid developers or particular company backing.

HTTP/1.1 problems I’d like to see addressed

Pipelining – I can see how something that better deals with increasing bandwidths with stagnated RTT can improve the end users’ experience. It is not easy to implement in a nice manner and provide in a library like ours.

Many connections – to avoid problems with pipelining and queueing on the connections, many connections are used and it seems like a general waste that can be improved.

HTTP/2.0

We’ve implemented HTTP/1.1 and we intend to continue to implement any and all widely deployed transport layer protocols for data transfers that appear on the Internet. This includes HTTP/2.0 and similar related protocols.

curl has not yet implemented SPDY support, but fully intends to do so. The current plan is to provide SPDY support with the help of spindly, a separate SPDY library project that I lead.

We’ve chosen to support SPDY due to the momentum it has and the multiple existing implementations that A) have multi-company backing and B) prove it to be a truly working concept. SPDY seems to address HTTP’s pipelining and many-connections problems in a decent way that appears to work in reality too. I believe SPDY keeps enough HTTP paradigms to be easily upgraded to for most parties, and yet the ones who can’t or won’t can remain with HTTP/1.1 without too much pain. Also, while Spindly is not production-ready, it has still given me the sense that implementing a SPDY protocol engine is not rocket science and that the existing protocol specs are good.

By relying on external libs for protocol and implementation details, my hope is that we should be able to add support for other potentially coming HTTP/2.0-ish protocols that get deployed and used in the wild. In the curl project we’re unfortunately rarely able to be very pro-active due to the nature of our contributors, which tends to make us follow the rest and implement and go with what others have already decided to go with.

I’m not aware of any competitor to SPDY that is deployed or used to any particular and notable extent on the public internet, so no other “HTTP/2.0 protocol” has been considered by us. The two biggest protocol details people keep mentioning that speak against SPDY are the SSL and compression requirements, yet I like both of them. I intend to continue to participate in discussions and technical arguments on the ietf-http-wg mailing list on HTTP details for as long as I have time and energy.

HTTP AUTH

curl currently supports Basic, Digest, NTLM and Negotiate for both host and proxy.

Similar to the HTTP protocol, we intend to support any widely adopted authentication protocols. The HOBA, SCRAM and Mutual auth suggestions all seem perfectly doable and fine in my perspective.

However, if there’s no proper logout mechanism provided for HTTP auth I don’t foresee any particular desire from browser vendors or web site creators to use any of these, just like they don’t use the older ones to any significant extent either. And for automatic (non-browser) uses only, I’m not sure there’s motivation enough to add new auth protocols to HTTP, as at least historically we seem to rarely be able to pull anything through that isn’t pushed for by at least one of the major browsers.

The “updated HTTP auth” work should be kept outside of the HTTP/2.0 work as far as possible, and similar to how RFC2617 is separate from RFC2616 it should be separate this time around too. The auth mechanism should not be too tightly knit to the HTTP protocol.

The day we can cleanse connection-oriented authentications like NTLM from the HTTP world will be a happy day, as its state awareness is a pain to deal with in a generic HTTP library and code.

Three static code analyzers compared

I’m a fan of static code analysis. With the use of fancy scanner tools we can get detailed reports about source code mishaps and quite decently pinpoint which source code is suspicious and may contain bugs. In the old days we used different lint versions but they were all annoying and very often just puked out far too many warnings and errors to be really useful.

Out of coincidence I ended up getting analyses done (by helpful volunteers) on the curl 7.26.0 source base with three different tools. An excellent opportunity for me to compare them all and to share the outcome and my insights of this with you, my friends. Perhaps I should add that the analyzed code base is 100% pure C89 compatible C code.

Some general observations

First out, each of the three tools detected several issues the other two didn’t spot. I would say this indicates that these tools still have a lot to improve and also that it actually is worth it to run multiple tools against the same source code for extra precaution.

Secondly, the libcurl source code has some known peculiarities that admittedly are hard for static analyzers to figure out without raising false positives. For example we have several macros that look like functions, and on several platforms and build combinations they evaluate to nothing, which causes dead code to be generated. Another example is that we have several cases of vararg-style functions and these functions are documented to work in ways that the analyzers don’t always figure out (both clang-analyzer and Coverity show problems with these).
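To make the macro case concrete, here is a minimal sketch that is not taken from the libcurl source. The build switch `DEMO_HAVE_CONN_CHECK` and the names `demo_conn_is_dead` and `demo_send` are invented for this example.

```c
#include <stddef.h>

/* Hypothetical build switch: when it is not defined, the "function"
   below is a macro that always evaluates to 0. */
#ifdef DEMO_HAVE_CONN_CHECK
int demo_conn_is_dead(int fd)
{
  return fd < 0;
}
#else
#define demo_conn_is_dead(fd) ((void)(fd), 0)
#endif

/* Returns -1 if the connection is considered dead, otherwise the
   number of bytes "sent" (nothing is actually sent in this sketch). */
int demo_send(int fd, size_t len)
{
  if(demo_conn_is_dead(fd)) {
    /* Without DEMO_HAVE_CONN_CHECK the condition is always 0, so an
       analyzer sees this branch as unreachable (dead) code. */
    return -1;
  }
  return (int)len;
}
```

The code is correct for both build variants, yet on the build without the check a tool can only see an `if` that never triggers, which is the kind of report that ends up as a false positive.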

Thirdly, the same lesson we knew from the lint days is still true. Tools that generate too many false positives are really hard to work with, since going through hundreds of issues that after analysis turn out to be nothing makes your eyes sore and your head hurt.

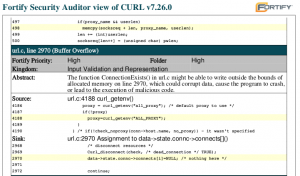

Fortify

The first report I got was done with Fortify. I had heard about this commercial tool before but I had never seen any results from a run but now I did. The report I got was a PDF containing 629 pages listing 1924 possible issues among the 130,000 lines of code in the project.

Fortify claimed 843 possible buffer overflows. I quickly got bored trying to find even one that could lead to a problem. It turns out Fortify has a very short attention span and warns very easily on lots of places where a very quick glance by a human tells us there’s nothing to be worried about. Having hundreds and hundreds of these is really tedious and hard to work with.

If we’re kind we call them all false positives. But sometimes it is more than that: some of the alerts are plain bugs in the tool, like when it warns about a buffer overflow on this line, claiming that it may write beyond the buffer. All variables are ‘int’ and as we know sscanf() writes an integer to the passed in variable for each %d instance.

sscanf(ptr, "%d.%d.%d.%d", &int1, &int2, &int3, &int4);
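The point about sscanf() can be tried out with a small stand-alone sketch. The wrapper `demo_parse_quad` is a hypothetical name, not a function from curl. It relies only on standard behavior: each %d stores one int through the matching pointer, and the return value is the number of successful conversions.

```c
#include <stdio.h>

/* Hypothetical helper: parses "a.b.c.d" into four ints.
   Returns 1 if all four numbers were converted, 0 otherwise. */
int demo_parse_quad(const char *ptr, int out[4])
{
  int int1, int2, int3, int4;

  /* Each %d stores one int through the matching pointer. There is no
     character buffer involved here that a long input could overrun.
     Range checking of the parsed values is a separate matter. */
  if(sscanf(ptr, "%d.%d.%d.%d", &int1, &int2, &int3, &int4) != 4)
    return 0;

  out[0] = int1;
  out[1] = int2;
  out[2] = int3;
  out[3] = int4;
  return 1;
}
```

Checking the return value against 4 is what tells a complete match apart from a partial one such as "1.2.3".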

I ended up finding and correcting two flaws detected with Fortify. Both were cases where memory allocation failures weren’t handled properly.

LLVM, clang-analyzer

Given the exact same code base, clang-analyzer reported 62 potential issues. clang is an awesome and free tool. It really stands out in the way it clearly and very descriptively explains exactly how the code is executed and which code paths are selected when it reaches the passage it thinks might be problematic.

The reports from clang-analyzer are in HTML, with a single file for each issue, and it generates nice looking source code with embedded comments about which flow was followed all the way down to the problem. A little snippet from a genuine issue in the curl code is shown in the screenshot I include above.

Coverity

Given the exact same code base, Coverity detected and reported 118 issues. In this case I got the report from a friend as a text file, which I’m sure is just one output version. Similar to Fortify, this is a proprietary tool.

As you can see in the example screenshot, it does provide a rather fancy and descriptive analysis of the exact code flow that leads to the problem it suggests exists in the code. The function referenced in this shot is a very large function with a state-machine featuring many states.

Out of the 118 issues, many of them were actually the same error but with different code paths leading to them. The report made me fix at least 4 accurate problems but they will probably silence over 20 warnings.

Coverity runs scans on open source code regularly, as I’ve mentioned before, including curl so I’ve appreciated their tool before as well.

Conclusion

From this test of a single source base, I rank them in this order:

- Coverity – very accurate reports and few false positives

- clang-analyzer – awesome reports, missed slightly too many issues and reported slightly too many false positives

- Fortify – the good parts drown in all those hundreds of false positives

curl’s new HTTP cookies docs

Whenever libcurl saves cookies to a file, it saves them in the old “Netscape cookie format”.

Since ages ago libcurl has written a comment at the top of that cookie file (the file we refer to as the cookie jar in curl lingo) that explains that it is a Netscape cookie file, followed by a URL pointing to the original Netscape document describing what cookies are and how they work. To be perfectly honest, we pointed to a local copy of that document since the original has long since been removed and is now only available through archive.org.

The web page pointed to was never a perfect match since the page never explained the file format or its use, only the cookie protocol – as it was originally described to work and not even like cookies work today.

Starting with curl 7.27.0, it will write the header to point to another URL: http://curl.haxx.se/docs/http-cookies.html
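For readers who have not looked inside a cookie jar: each cookie line in the Netscape format consists of seven tab-separated fields (domain, a TRUE/FALSE subdomain flag, path, a TRUE/FALSE secure flag, expiry time in seconds since the epoch, name, value), and lines starting with `#` are comments. Below is a minimal parsing sketch under those assumptions. The struct and function names are made up for this example, it is not libcurl's parser, and it deliberately ignores extensions such as the `#HttpOnly_` prefix that some tools use.

```c
#include <stdlib.h>
#include <string.h>

/* Hypothetical container for one parsed cookie line. */
struct demo_cookie {
  char domain[256];
  int tailmatch;   /* TRUE: also valid for subdomains */
  char path[256];
  int secure;      /* TRUE: only for secure connections */
  long expires;    /* seconds since the epoch */
  char name[256];
  char value[1024];
};

/* Copies src into dst if it fits, returns 1 on success. */
static int demo_copy(char *dst, size_t dstsize, const char *src)
{
  size_t len = strlen(src);
  if(len >= dstsize)
    return 0;
  memcpy(dst, src, len + 1);
  return 1;
}

/* Returns 1 if the line held a cookie, 0 for comments, blank lines
   and lines that do not have seven fields. */
int demo_parse_cookie_line(const char *line, struct demo_cookie *out)
{
  char copy[2048];
  char *field[7];
  char *p = copy;
  int n = 0;
  size_t len = strlen(line);

  if(len == 0 || len >= sizeof(copy) || line[0] == '#')
    return 0;
  memcpy(copy, line, len + 1);
  copy[strcspn(copy, "\r\n")] = '\0'; /* strip the line ending */

  /* split on tabs by hand so that empty fields are preserved */
  while(n < 7) {
    field[n++] = p;
    p = strchr(p, '\t');
    if(!p)
      break;
    *p++ = '\0';
  }
  if(n != 7)
    return 0;

  out->tailmatch = (strcmp(field[1], "TRUE") == 0);
  out->secure = (strcmp(field[3], "TRUE") == 0);
  out->expires = strtol(field[4], NULL, 10);
  return demo_copy(out->domain, sizeof(out->domain), field[0]) &&
         demo_copy(out->path, sizeof(out->path), field[2]) &&
         demo_copy(out->name, sizeof(out->name), field[5]) &&
         demo_copy(out->value, sizeof(out->value), field[6]);
}
```

Splitting by hand instead of using strtok() is a deliberate choice here, since strtok() would silently skip an empty field such as a cookie with an empty value.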

Is there a case for a unified SSL front?

There are many, sorry very many, different SSL libraries today that various programs may want to use. In the open source world at least, it is more and more common that programs using SSL (and other crypto) offer build options to build with at least either OpenSSL or GnuTLS, and very often they also offer optional builds with NSS and possibly a few other SSL libraries.

In the curl project we just added support for library number nine. In the libcurl source code we have an internal API that each SSL library backend must provide, and all the libcurl source code internally uses only that single and fixed API to do SSL and crypto operations without even knowing which backend library is actually providing the functionality. I talked about libcurl’s internal SSL API before, and I asked about this on the libcurl list back in Feb 2011.
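The general shape of such an internal API can be sketched with a table of function pointers that every backend fills in. Everything below is hypothetical: the struct, the two stub backends "alpha" and "beta" and all function names are invented for illustration and do not match libcurl's actual internal functions. The run-time lookup by name is also only there to keep the sketch in one file.

```c
#include <stddef.h>
#include <string.h>

/* Hypothetical internal interface that each backend must provide. */
struct demo_ssl_backend {
  const char *name;
  int (*do_connect)(int sockfd);  /* 0 on success */
  long (*do_send)(int sockfd, const void *buf, size_t len);
  void (*do_close)(int sockfd);
};

/* Stub backend "alpha": pretends everything was sent. */
static int alpha_connect(int sockfd) { (void)sockfd; return 0; }
static long alpha_send(int sockfd, const void *buf, size_t len)
{ (void)sockfd; (void)buf; return (long)len; }
static void alpha_close(int sockfd) { (void)sockfd; }

/* Stub backend "beta": pretends to send at most 16 bytes per call. */
static int beta_connect(int sockfd) { (void)sockfd; return 0; }
static long beta_send(int sockfd, const void *buf, size_t len)
{ (void)sockfd; (void)buf; return (long)(len > 16 ? 16 : len); }
static void beta_close(int sockfd) { (void)sockfd; }

static const struct demo_ssl_backend demo_backends[] = {
  { "alpha", alpha_connect, alpha_send, alpha_close },
  { "beta",  beta_connect,  beta_send,  beta_close }
};

/* Looks up a backend by name, returns NULL if there is no such one. */
const struct demo_ssl_backend *demo_ssl_select(const char *name)
{
  size_t i;
  for(i = 0; i < sizeof(demo_backends) / sizeof(demo_backends[0]); i++) {
    if(strcmp(demo_backends[i].name, name) == 0)
      return &demo_backends[i];
  }
  return NULL;
}

/* The calling code only ever talks to the interface and never needs
   to know which backend sits behind it. */
long demo_secure_send(const struct demo_ssl_backend *ssl, int sockfd,
                      const void *buf, size_t len)
{
  long sent;
  if(ssl->do_connect(sockfd) != 0)
    return -1;
  sent = ssl->do_send(sockfd, buf, len);
  ssl->do_close(sockfd);
  return sent;
}
```

Adding another backend in this sketch means writing one more set of functions and one more table entry, while `demo_secure_send` stays unchanged.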

So, a common problem should be able to find a common solution. What if we fixed this in a way that would be possible for many projects to re-use? What if one project’s ability to select from 9 different provider libraries could be leveraged by others? A single SSL library with a simplified API that still provides the functionality most “simple” SSL-using applications need?

Marc Hörsken and I have discussed this a bit, and pidgin/libpurple came up as a possible contender that could use such a single SSL library. I’ve also talked about it with Claes Jakobsson and Magnus Hagander for postgresql and I know since before that wget certainly could use it. When I’ve given my talks on the seven SSL libraries of libcurl I’ve been approached by several people who have expressed a desire to see such an externalized API, and I remember the guys from cyassl among those. I’m sure there will be a few other interested parties as well if this takes off.

What remains to be answered is if it is possible to make it reality in a decent way.

Upsides:

- will reduce code from the libcurl code base – in the amount of 10K or more lines of C code, and it should be a decreased amount of “own” code for all projects that would decide to use this single SSL library

- will allow other projects to use one out of many SSL libraries, hopefully benefiting end users as a result

- should get more people involved in the code as more projects would use it, hopefully ending up in better tested and polished source code

There are several downsides with a unified library, from the angle of curl/libcurl and myself:

- it will undoubtedly lead to more code being added and implemented that curl/libcurl won’t need or use, thus grow the code base and the final binary

- the API of the unified library won’t be possible to be as tightly integrated with the libcurl internals as it is today, so there will be some added code and logic needed

- it will require that we produce a lot of documentation for all the functions and structs in the API which takes time and effort

- the above-mentioned points will deduct time from my other projects (hopefully to benefit others, but still)

Some problems that would have to be dealt with:

- how to deal with libraries that don’t provide functionality that the single SSL API does

- how to draw the line of what functionality to offer in the API, as the individual libraries will always provide richer and more complete APIs

- What is actually needed to get the work on this started for real? And if we do, what would we call the project…

PS, I know the term is more accurately “TLS” these days but somehow people (me included) seem to have gotten stuck with the word SSL to cover both SSL and TLS…

darwin native SSL for curl

I recently mentioned the new schannel support for libcurl that allows libcurl to do SSL natively without the use of any external libraries on Windows.

This “getting native support” obviously triggered Nick Zitzmann who stepped up and sent in Secure Transport support – the native API for doing SSL on Mac OS X and iOS. This ninth supported SSL library is now called ‘darwinssl’ in the curl code base. There have been some follow-up commits too, to clean things up and to make use of that API for providing the necessary function calls when doing NTLM etc.

This functionality is merged in to curl’s master git repository and will be part of the upcoming curl 7.27.0 release, planned to hit the public at the end of July 2012.

It could be noted that if you for example build curl/libcurl to also support SCP and SFTP, you’d be linking with libssh2 for that and libssh2 still relies on a crypto library that is either OpenSSL or gcrypt, so you may in fact still end up linking with a 3rd party crypto library… Nick mentioned in a separate mail how he has looked into making libssh2 use the Secure Transport API, but that he faced some issues regarding big numbers which made him hesitate and consider how to move forward.

lighting up that fiber

Exactly 10 hours and 34 minutes after Tyfon sent me the mail confirming they had received my order, the connection was up and I received an SMS saying so. Amazingly quick service I’d say. Unfortunately I wasn’t quite as fast to actually try it out…

Once I got home from work and got some time to fiddle, I inserted an RJ45 into port 1 of my media converter and the other end in my wifi router and wham, I was online.

My immediate reaction? First, check ping time to my server. Now it averages at 2.5 ms, down from some 32 ms over my ADSL line. Then check transfer speeds. Massive disappointment. Something is wrong since it goes very slow in both directions, with no more than 5-10KB/sec transfers. I emailed customer service at once, less than 24 hours after I ordered it… bredbandskollen.se says 0.20 mbit downlink and 75 mbit uplink! Weird.

They got back to me early this morning by email, and we communicated back and forth. For them to be able to file a report back to the fiber provider I need to report a MAC and IP address of a direct-connected (no router) computer, which of course had to wait until I got back home from work.

At home, when connecting two different laptops running Windows, they didn’t get an IP address. I suspect this is due to packet loss, which makes it take several DHCP retries to work, and I didn’t have patience enough. I switched back to my ADSL connection again and emailed Tyfon the IP and MAC I believe my router used before…

A network provider for my fiber

In late May I finally got my media converter installed inside my house so now my fiber gets terminated into a 4-port gigabit switch.

Now the quest to find the right provider started. I have a physical 1 gigabit connection to “the station”, and out of the 12 providers (listed on bredbandswebben.se) I can select to get the internet service delivered by, at least two offer 1000 mbit download speeds (with 100mbit upload). I would ideally like a fixed IPv4-address and an IPv6 subnet, and I want my company to subscribe to this service.

The companies are T3 and Alltele. Strangely enough both of them failed to respond in a timely manner, so I went on to probe a few of the other companies that deliver less than 1000mbit services.

The one company that responded fastest and with more details than any other was Tyfon. They informed me that currently nobody can sell a “company subscription” on this service and that on my address I can only get at most a 100/100 mbit service right now. (Amusingly most of these operators also offer 250/25 and 500/50 rates but I would really like to finally get a decent upstream speed so that I for example can backup to a remote site at a decent speed.)

So, I went with 100/100 mbit for 395 SEK/month (~ 44 Euro or 57 USD). I just now submitted my order and their confirmation arrived at 23:00:24. They say it may take a little while to deliver so we’ll see (“normally within 1-2 weeks“). I’ll report back when I have news.

(And I’ve not yet gotten the invoice for the physical installation…)

schannel support in libcurl

schannel is the API Microsoft provides to allow applications to for example implement SSL natively, without needing any third-party library.

On Monday June 11th we merged the 30+ commits Marc Hörsken brought us. This is now the 8th SSL variation supported by libcurl, and I figure this is going to become fairly popular now in the Windows camp coming the next release: curl 7.27.0.

So now my old talk about the seven SSL libraries libcurl supported has become outdated…

It can be worth noting that as long as you build (lib)curl to also support SCP and SFTP, powered by libssh2, that library will still require a separate crypto library, and libssh2 can be built with either OpenSSL or gcrypt. Marc mentioned that he might work on making that one use schannel as well.