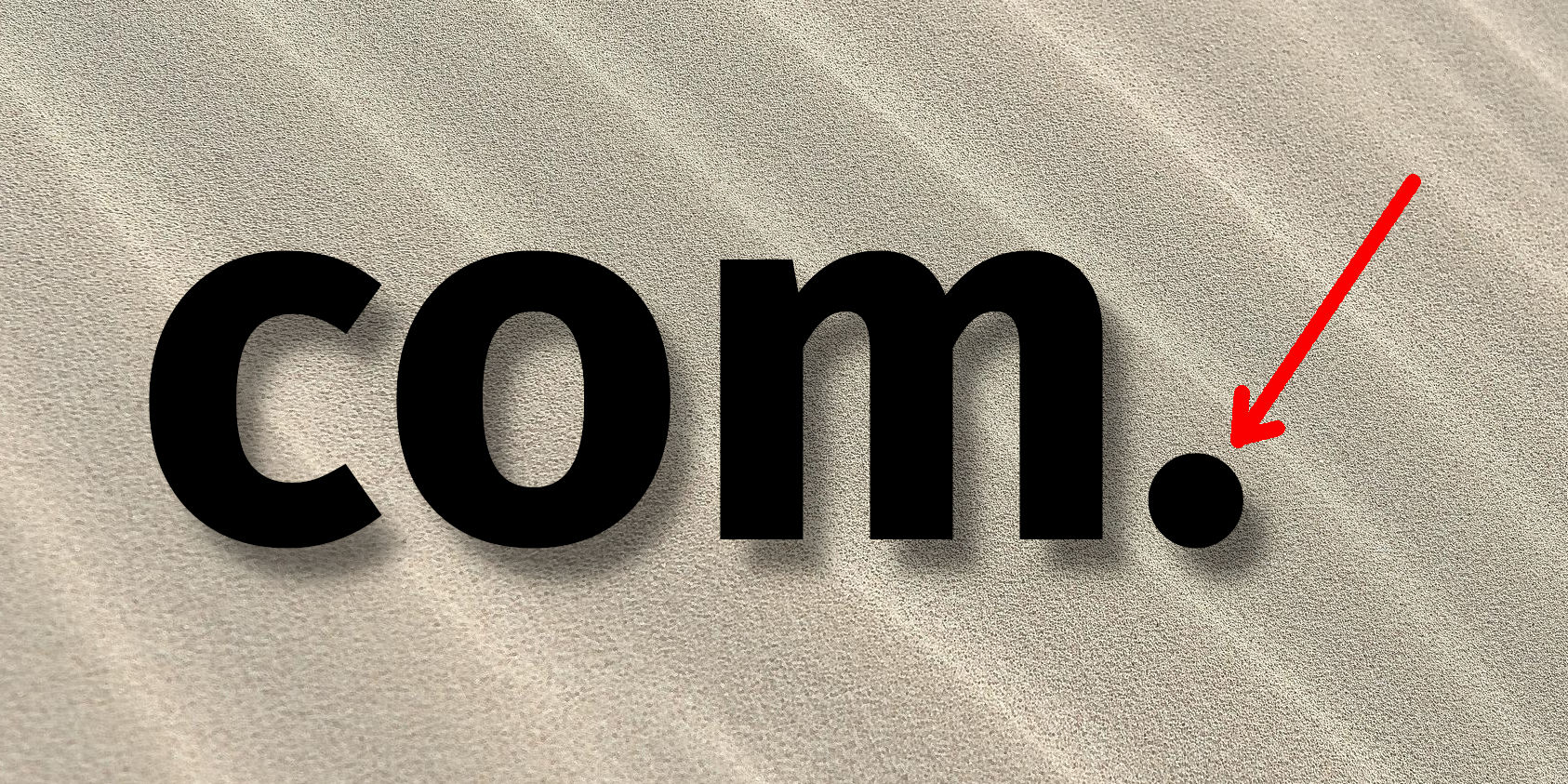

Trailing dots on host names in URLs are the gift that keeps on giving.

Let me take you through a winding story of how the dot is handled differently in different places through the stack of an Internet client. The evil trailing dot.

DNS

When a given host name is to be resolved to an IP address on a networked computer, there are dedicated functions to use. The host name example.com resolves to a number of IP addresses.

If you add a dot to the end of that host name, it does not change what is resolved. “example.com.” resolves to the same set of addresses as “example.com” does. (But putting two dots at the end will make it fail.)

It works like this because DNS names are built from “labels”, which are separated with dots when written as text. A trailing dot just marks the empty final label, the same one that is implied when there is no dot. So, in the DNS protocol there are no trailing dots, so to speak. Two dots at the end put a zero-length label between them, and that is not allowed.
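To make the label reasoning concrete, here is a minimal Python sketch. The function name `dns_labels` is made up for this illustration, and it only looks at empty labels; it does not check label lengths or allowed characters the way a real resolver would.

```python
def dns_labels(name: str) -> list[str]:
    """Split a textual host name into DNS labels, rejecting empty ones."""
    # A single trailing dot only marks the empty final label; drop it.
    if name.endswith("."):
        name = name[:-1]
    labels = name.split(".")
    # Any empty label left at this point (as in "example.com..") is invalid.
    if any(label == "" for label in labels):
        raise ValueError("zero-length label in host name")
    return labels


print(dns_labels("example.com"))   # ['example', 'com']
print(dns_labels("example.com."))  # ['example', 'com']
```

With this sketch, “example.com..” raises a `ValueError`, because one of the two dots is left over after the first one is dropped.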

People accustomed to fiddling with DNS are used to ending Fully Qualified Domain Names (FQDN) with a trailing dot.

Resolving names

In addition to the name actually being resolved (sent to a DNS resolver), native resolver functions usually put a semantic difference between resolving “hello” and “hello.” (with or without the trailing dot). The trailing dot means the name is specified in full and is to be used exactly like that, while the name without a trailing dot can be tried with a domain name appended to it, or even a list of domain names, until one resolves. This makes people want to use a trailing dot at times, to avoid that domain test.
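Roughly, that behavior can be sketched like this in Python. The function `candidate_names` and the search domains are hypothetical, and the order in which candidates are tried is an assumption; real resolvers apply more rules than this when deciding what to try first.

```python
def candidate_names(name: str, search_domains: list[str]) -> list[str]:
    """List the fully qualified names a resolver might try for `name`."""
    # A trailing dot says: this name is complete, use it exactly as given.
    if name.endswith("."):
        return [name]
    # Otherwise try each search domain, then the bare name itself.
    # (The ordering here is an assumption made for this sketch.)
    candidates = [f"{name}.{domain}." for domain in search_domains]
    candidates.append(name + ".")
    return candidates


search = ["lab.example.com", "example.com"]  # made-up search list
print(candidate_names("hello", search))
# ['hello.lab.example.com.', 'hello.example.com.', 'hello.']
print(candidate_names("hello.", search))
# ['hello.']
```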

HTTP names

HTTP clients that want to work with a given URL need to extract the name part from the URL and use that name to:

- resolve the host name to a list of IP addresses to connect to

- pass that name in the Host: or :authority: request headers, so that the HTTP server knows which specific server the client speaks to, as it may run multiple servers on the same IP address

The HTTP spec says the name in the Host header should be used verbatim from the URL; the trailing dot should be included if it was present in the URL. This allows a server to host different content for “example.com” and “example.com.”, even if many servers will by default treat them as the same. Some hosts will just redirect the dot version to the non-dot. Some hosts will return an error.

The HTTP client certainly connects to the same set of addresses for both.

For a lot of HTTP traffic, having the trailing dot there or not makes no difference. But they can be made to make a difference. And boy, they can certainly make a difference internally…

Cookies

Cookies are passed back and forth over HTTP using dedicated request and response headers. When a server wants to pass a cookie to the client, it can specify which particular domain it is valid for, and the client will send cookies back to the server only when the domain it speaks to matches the domain the cookies were set for.

The cookie spec RFC 6265 section 5.1.2 defines the host name in a way that makes it ignore trailing dots. Cookies set for a domain with a dot are valid for the same domain without one and vice versa.
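As a simplified illustration of dot-insensitive matching, here is a Python sketch. The helper names are made up, and this is not a complete implementation of the cookie spec's domain matching: among other things it does not handle IP addresses or internationalized names.

```python
def strip_trailing_dot(host: str) -> str:
    """Remove a single trailing dot, if there is one."""
    return host[:-1] if host.endswith(".") else host


def cookie_domain_matches(request_host: str, cookie_domain: str) -> bool:
    """Tail-match a host against a cookie domain, ignoring trailing dots."""
    host = strip_trailing_dot(request_host.lower())
    # A leading dot in the cookie domain is ignored as well.
    domain = strip_trailing_dot(cookie_domain.lower()).lstrip(".")
    # Match the exact name, or a subdomain ending in ".<domain>".
    return host == domain or host.endswith("." + domain)


print(cookie_domain_matches("www.example.com.", "example.com"))  # True
print(cookie_domain_matches("www.example.com", "example.com."))  # True
print(cookie_domain_matches("badexample.com", "example.com"))    # False
```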

SNI

When speaking to an HTTPS server, a client passes on the name of the remote server during the TLS handshake, in the SNI (Server Name Indication) field, so that the server knows which instance the client wants to speak to. What about the trailing dot, you think?

RFC 6066 section 3 says: “The hostname is represented as a byte string using ASCII encoding without a trailing dot.”

This means that an HTTPS server cannot, in the TLS layer, make a distinction between a server for “example.com.” and “example.com”. Different hosts for HTTP, the same host for HTTPS.

curl’s dotted past

In the curl project, we – as everyone else – have struggled with the trailing dot over time.

As-is

We started out being mostly oblivious about the effects of the trailing dot and most of the code just treated it as part of the host name and it would be in the host name everywhere. Until one day someone pointed out that the SNI field does not approve of it. We fixed that.

Strip it

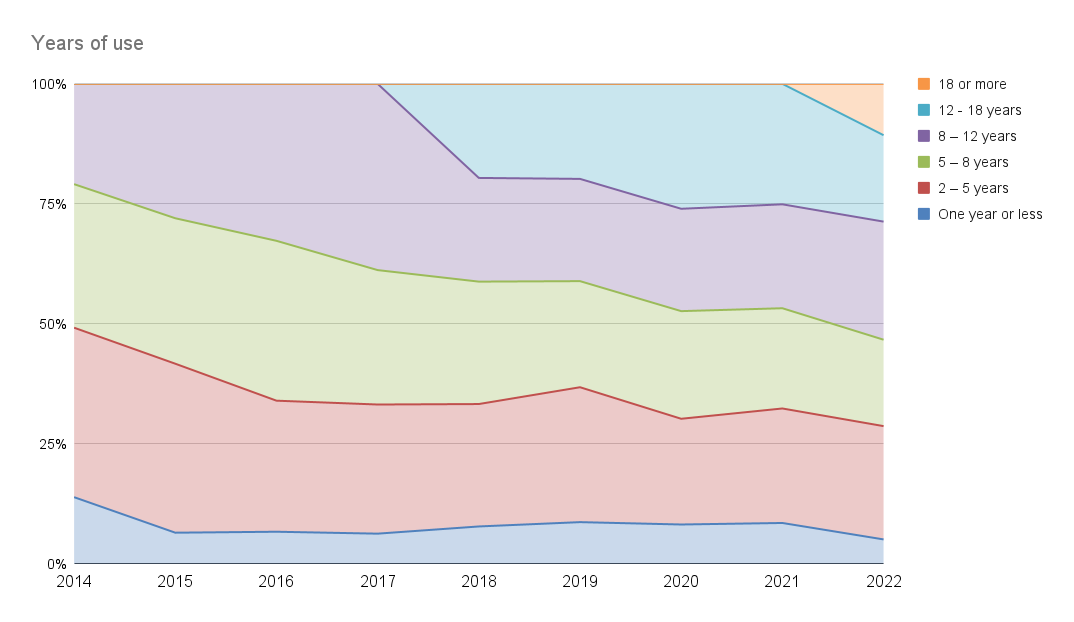

In 2014, curl started to always just cut off the trailing dot internally if one was provided in the URL. The dot rarely makes a difference, cutting it off made the host name work fine with SNI, and for HTTPS it is practically difficult to make a difference between them.

Keep it

In 2022, someone found a web site that actually requires a trailing dot in the Host: header to respond correctly and reported it to the curl project.

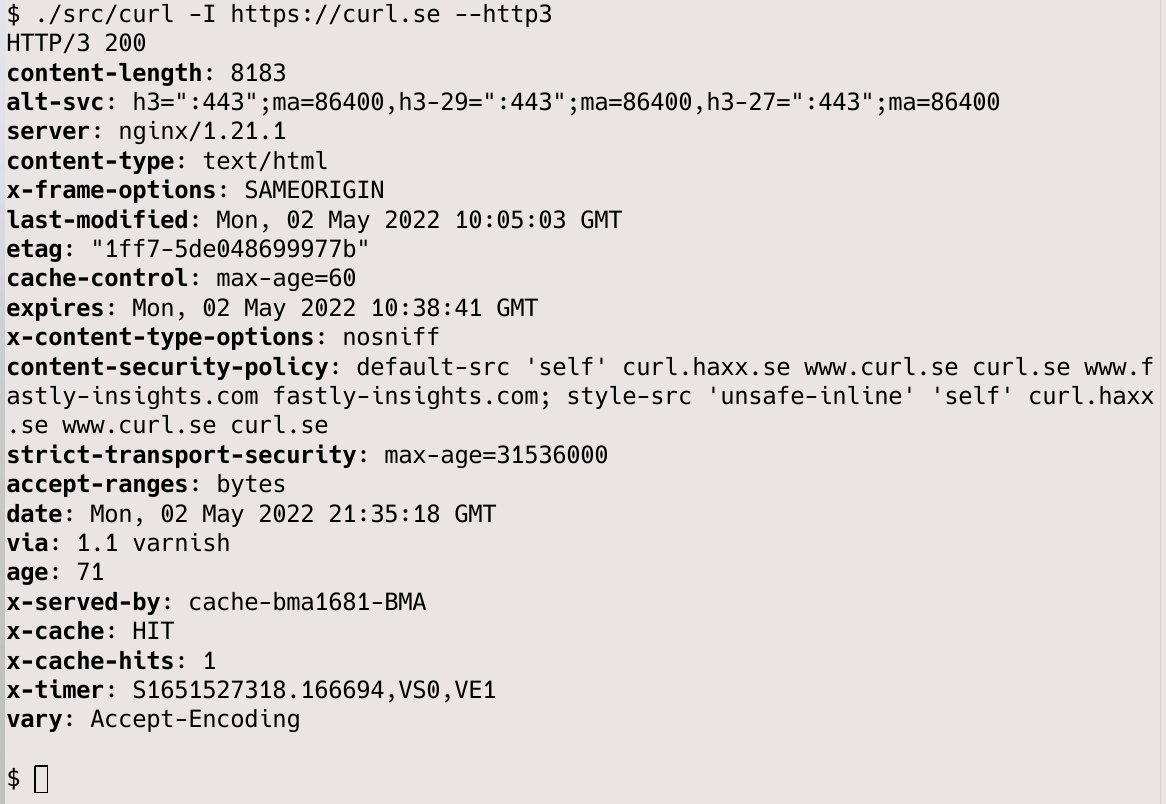

Sigh. We back-pedaled on the eight-year-old decision and decided to internally keep the dot in the name, but strip it for the purpose of the SNI field. This seems to be how the browsers are doing it. We released curl 7.82.0 with this change. That site that needed the trailing dot kept in the Host: header could now be retrieved with curl. Yay.

As a bonus curl also lowercases the SNI name field now, because that is what the browsers do even if the spec says the field is supposed to be used case insensitively. That habit has made sure there are servers on the Internet that won’t work properly if the SNI name is not lowercase…
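The split between the two names can be sketched like this in Python. This is an illustration of the behavior described above, not curl's actual code, and the function names are made up. Port numbers and other URL parsing details are left out.

```python
def host_header_value(url_host: str) -> str:
    """Name for the Host: header: kept as it appears in the URL."""
    return url_host


def sni_name(url_host: str) -> str:
    """Name for the TLS SNI field: no trailing dot, lowercased."""
    name = url_host[:-1] if url_host.endswith(".") else url_host
    return name.lower()


print(host_header_value("Example.COM."))  # Example.COM.
print(sni_name("Example.COM."))           # example.com
```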

In your face

That “back-pedal” for 7.82.0, when we brought the dot back into the host name field, turned out to be incomplete, but that was neither totally obvious nor immediately apparent.

When we brought back the trailing dot into the name field, we accidentally broke several internal name checks.

The checks broke in the cookie handling of domains even though cookies, as mentioned above, are supposed to not care about trailing dots.

To understand this, we have to back up a little bit and talk about how cookies and cookie domains work.

Public Suffixes

Cookies are strange beasts. Because the server can tell the client which domain a cookie applies to, a client needs to check that the server does not try to set cookies too broadly or for other domains. It does not stop there: there is also the concept of the “Public Suffix List” (PSL), a list of known domains for which setting a cookie is not accepted. (This list is also used for limiting other things in browsers but they are out of scope here.) One widely known such domain is “co.uk”. A server should not be allowed to set a cookie for “co.uk”, as it would then basically be sent back to every web site that exists in the UK.

The PSL is a maintained list with a huge number of domains in it. To manage those and to make sure tools like curl can check for them in a convenient way, a dedicated library was made for this several years ago: libpsl. curl has optionally used this since 2015.

I said optional

That public suffix list is huge, which is a primary reason why many users still opt to build curl without support for it. This means that curl needs to provide backup functionality for the builds where libpsl is not present. Typically in a lot of embedded systems.

Without knowledge of the PSL curl will not reject cookies for “co.uk” but it should reject cookies for “.uk” or “.com” as even without PSL knowledge it still knows that setting cookies for top-level domains is not okay.

How did the check that curl uses without PSL verify that a given domain is only a TLD?

It checked – if there is a dot present in the name, then it is not a TLD.

CVE-2022-27779

Axel Chong figured out that for a curl build without PSL knowledge, the server could set a cookie for a TLD if you just made sure to end the name with a dot.

With the 7.82.0 change in place, where curl keeps the trailing dot in the host name, a host name with a trailing dot and a cookie set for a TLD with a trailing dot have matching tail ends. This means that curl would send such cookies to servers that match the criteria. The broken TLD check was benign all those years until we let the trailing dot in. This is security vulnerability CVE-2022-27779.
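The weakness in a “does the name contain a dot?” check is easy to show in a few lines of Python. Both functions below are hypothetical illustrations of the idea, not curl's code or its actual fix, and they assume that any leading dot has already been removed from the domain.

```python
def looks_like_tld_naive(domain: str) -> bool:
    """The check as described above: any dot means 'not a TLD'."""
    return "." not in domain


def looks_like_tld(domain: str) -> bool:
    """Same idea, but ignore a single trailing dot first (a sketch)."""
    name = domain[:-1] if domain.endswith(".") else domain
    return "." not in name


print(looks_like_tld_naive("com"))   # True
print(looks_like_tld_naive("com."))  # False: the trailing dot fools the check
print(looks_like_tld("com."))        # True
print(looks_like_tld("example.com."))  # False
```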

CVE-2022-30115

It did not stop there. Axel did not stop there. Since curl now keeps the trailing dot in the name and did not do it before, there was a second important string comparison that broke in unexpected ways that Axel figured out and reported. A second vulnerability introduced by the same change.

HSTS lets a site declare that it should only be reached over HTTPS. curl can store a “cache” of such host names and keep it around, so that if you want to do a subsequent transfer to one of those host names before they expire, curl will go directly to HTTPS even if HTTP is used in the URL. This avoids the clear-text insecure redirect step some URLs use.

The new treatment of trailing dots, which basically allows users to provide the same host name in two different ways that still resolve to the exact same addresses, exposed that the HSTS code did not handle the trailing dot properly. If you let curl store HSTS info for the host name without a trailing dot, you could then later bypass the HSTS by using the same host name with a trailing dot. Or vice versa. This is security vulnerability CVE-2022-30115.
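One way to avoid this kind of bypass is to normalize the name before it is used as a cache key. Below is a minimal Python sketch of that idea. The class `HstsCache` and its methods are made up for this illustration and are not how curl implements it; subdomain handling and persistence are left out.

```python
import time


class HstsCache:
    """Toy HSTS cache keyed on a normalized host name."""

    def __init__(self):
        self._expires = {}  # normalized host name -> expiry timestamp

    @staticmethod
    def _key(host):
        # Lowercase and drop a single trailing dot, so that
        # "example.com" and "example.com." share one entry.
        host = host.lower()
        return host[:-1] if host.endswith(".") else host

    def store(self, host, max_age, now=None):
        now = time.time() if now is None else now
        self._expires[self._key(host)] = now + max_age

    def should_upgrade(self, host, now=None):
        """Return True if an http:// URL for host should use HTTPS."""
        now = time.time() if now is None else now
        expiry = self._expires.get(self._key(host))
        return expiry is not None and now < expiry


cache = HstsCache()
cache.store("example.com", max_age=3600, now=0)
print(cache.should_upgrade("example.com.", now=10))  # True
```

If `_key()` returned the host name unchanged, the lookup for “example.com.” would miss the stored entry, which is the kind of bypass described above.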

alt-svc

The code for alt-svc also needed adjustment for the dot, but fortunately that was “just” a bug and had no security impact.

All three of these separate areas in which trailing dots caused problems have been fixed in curl 7.83.1, and all of them are now tested and verified with an extended set of tests to make sure they keep handling the dots correctly.

Someone called it a dot release.

Is this the end of dot problems?

I don’t know but it seems unlikely. The trailing dots have kept haunting us for a long time by now, so I would say the chances are big that there are both some more flaws lingering and some future changes pending. That can then make the cycle take another loop or two.

I suppose we will find out. Stay tuned!