Everyone and anyone is of course entitled to fork a project that is released under an open source license. This goes for my projects as well and I don’t mind it. Go ahead.

I think it may be a bit shortsighted and a stupid decision, but open source allows this and it sometimes actually leads to goodness.

libgnurl

Enter libgnurl. A libcurl fork created by Christian Grothoff.

For most applications, the more obscure protocols supported by cURL are close to dead code — mostly harmless, but not useful

<sarcasm>Of course a libcurl newcomer such as Christian knows exactly what “most applications” want and need and thus what’s useful to them….</sarcasm>

cURL supports a bunch of crypto backends. In practice, only the OpenSSL, NSS (RedHat) and GnuTLS (Debian) variants seem to see widespread deployment

Originally he mentioned only OpenSSL and GnuTLS there, until someone pointed out the massive number of NSS users and the page got updated. Quite telling, I think. Lots of Windows users these days use the schannel backend, Mac OS X users use the darwinssl backend, and so on. Again, these are statements based on his own views and opinions, most probably without any closer checks done or even attempted.

As a side note, we could discuss what importance (perceived) “widespread deployment” has when selecting what to support or not, but let’s save that for another blog post on a rainy day.

there exist examples of code that deadlocks on IPC if cURL is linked against OpenSSL while it works fine with GnuTLS

I can’t argue against something I don’t know about. I’m not aware of any bug reports on something like this. libcurl is not fully SSL layer agnostic: the SSL library choice “leaks” through to applications, so yes, an application can very well be written so that it is “forced” to use a libcurl built against a particular backend. That doesn’t seem to be what he’s complaining about here though.
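Since the backend choice does leak through, it can be handy to check which one a given build uses. Here is a minimal sketch, assuming the backend shows up as a `Name/version` token on the first line of `curl -V`. The `tls_backend` helper is hypothetical, the sample line is made up for illustration, and the list of backend names is deliberately incomplete.

```shell
#!/bin/sh
# Hypothetical helper: pick the TLS backend token out of a curl version line.
# Only three backend names are matched here; that list is an assumption,
# not a complete inventory of what libcurl can be built with.
tls_backend() {
    for token in $1; do
        case "$token" in
            OpenSSL/*|GnuTLS/*|NSS/*)
                # Strip the "/version" part and report the name only.
                echo "${token%%/*}"
                return 0
                ;;
        esac
    done
    echo "unknown"
}

# Sample line, made up for illustration. With a real build you would pass
# "$(curl -V | head -n 1)" instead.
sample='curl 7.33.0 (x86_64-pc-linux-gnu) libcurl/7.33.0 GnuTLS/3.2.5 zlib/1.2.8'
tls_backend "$sample"   # prints: GnuTLS
```

An application that really depends on one backend could run a check like this at install time and fail early with a clear message.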

Thus, application developers have to pray that the cURL version deployed by the distribution is compatible with their needs

Application developers that use a library – any library – surely always hope that it is compatible with their needs!?

it is also rather difficult to replace cURL for normal users if cURL is compiled in the wrong way

Is it really? As with most autotools-based projects, you just run configure --prefix=blablalba, install a separate build in a custom directory and then use that for your special-need projects. I suppose he means something else. I don’t know what.

For GNUnet, we need a modern version of GnuTLS. How modern? Well, while I write this, it hasn’t been released yet (update: the release has now happened, the GnuTLS guys are fast). So what happens if one tries to link cURL against this version of GnuTLS?

To verify his claims that building against the most recent gnutls is tricky, I tried:

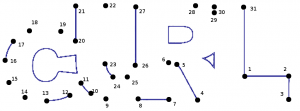

- download 3.2.5 tarball

- unpack it

- configure --prefix=$HOME/build-gnutls-3.2.5

- make

- make install

- cd [curl source tree]

- configure --without-ssl --with-gnutls=$HOME/build-gnutls-3.2.5 [and some more options if wanted]

- make

- invoke “./src/curl -V” to verify that the build is using the latest. Yes it does. Case closed.
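For anyone who wants to repeat this, the steps above can be sketched as a small script. The source directory locations, the `run_in` helper and the `DRY_RUN` switch are my assumptions for illustration. Only the configure options and the install prefix come from the list. By default the script just prints the commands it would run.

```shell
#!/bin/sh
# Sketch of the build steps listed above. The source locations and the
# DRY_RUN switch are illustrative assumptions; adjust them to your setup.
set -e

GNUTLS_SRC="${GNUTLS_SRC:-$HOME/src/gnutls-3.2.5}"  # unpacked GnuTLS tarball (assumed path)
CURL_SRC="${CURL_SRC:-$HOME/src/curl}"              # curl source tree (assumed path)
PREFIX="${PREFIX:-$HOME/build-gnutls-3.2.5}"        # private install prefix from the list
DRY_RUN="${DRY_RUN:-1}"                             # 1 = only print, anything else = run

# Print a command together with its directory, and run it unless DRY_RUN=1.
run_in() {
    dir=$1
    shift
    echo "(cd $dir && $*)"
    if [ "$DRY_RUN" != "1" ]; then
        (cd "$dir" && "$@")
    fi
}

build_curl_with_private_gnutls() {
    # Build and install GnuTLS into the private prefix.
    run_in "$GNUTLS_SRC" ./configure --prefix="$PREFIX"
    run_in "$GNUTLS_SRC" make
    run_in "$GNUTLS_SRC" make install
    # Build curl against that GnuTLS, with OpenSSL explicitly disabled.
    run_in "$CURL_SRC" ./configure --without-ssl --with-gnutls="$PREFIX"
    run_in "$CURL_SRC" make
    # Show which libraries the freshly built curl reports.
    run_in "$CURL_SRC" ./src/curl -V
}

build_curl_with_private_gnutls
```

Run it with `DRY_RUN=0` to actually execute the steps once the two source trees are in place.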

How does forking fix it? Easy. First, we can get rid of all of the compatibility issues

That’s of course hard to argue with. If you introduce a brand new library it won’t have any compatibility issues, since nobody has used it before. It is kind of a shortsighted solution though, since as soon as someone starts to use it, compatibility becomes something to pay attention to.

Also, since Christian is talking about making some changes to accomplish this new grand state, I suspect he will do so by breaking compatibility with libcurl in some aspects. Then gnurl won’t be libcurl compatible, and it will no longer be that easy to switch between them as desired.

Note that this pretty much CANNOT be done without a fork, as renaming is an essential part of the fix.

Is renaming the produced library really that hard to do without forking the project? If I want to produce a renamed output from an open source project out there, I apply a script or hack the makefile of that project and I keep that script or diff on my end. No fork needed. I think I must’ve misunderstood some subtle angle of this…

Now, there might be creative solutions to achieve the same thing within the standard cURL build system, but I’m not happy to wait for a decade for Daniel to review the patches.

Why would he need to send me such patches in the first place? Why would I have to review the patches? Why would we merge them?

That final paragraph is probably the most telling of his entire page. I think he did this entire fork because he is unhappy with the lack of speed in the reviewing of, and responses to, the patches he sent to the libcurl mailing list. He’s publicly complained and whined about it several times. That is a very hostile attitude to take if you actually want help or review.

I want to note that the main motivations for this fork are technical

Yes sure, they are technical, but they are also based on misunderstandings and a plain lack of will.

But I’d like to stress again that I don’t mind the fork. I just mind the misinformation, and the statements made as if they were true facts that represent what we stand for in the actual curl project.

I believe in collaboration. I try to review patches and provide feedback as soon as I can. I wish Christian every success with gnurl.
