Welcome to the 200th curl release. We call it 200 OK. It coincides with us counting more than 900 commit authors and surpassing 2,400 credited contributors in the project. This is also the first release ever in which we thank more than 80 persons in the RELEASE-NOTES for having helped out making it and we’ve set two new record in the bug-bounty program: the largest single payout ever for a single bug (2,000 USD) and the largest total payout during a single release cycle: 3,800 USD.

This release cycle was 42 days only, two weeks shorter than normal due to the previous 7.76.1 patch release.

Release Presentation

Numbers

the 200th release

5 changes

42 days (total: 8,468)

133 bug-fixes (total: 6,966)

192 commits (total: 27,202)

0 new public libcurl function (total: 85)

2 new curl_easy_setopt() option (total: 290)

2 new curl command line option (total: 242)

82 contributors, 44 new (total: 2,410)

47 authors, 23 new (total: 901)

3 security fixes (total: 103)

3,800 USD paid in Bug Bounties (total: 9,000 USD)

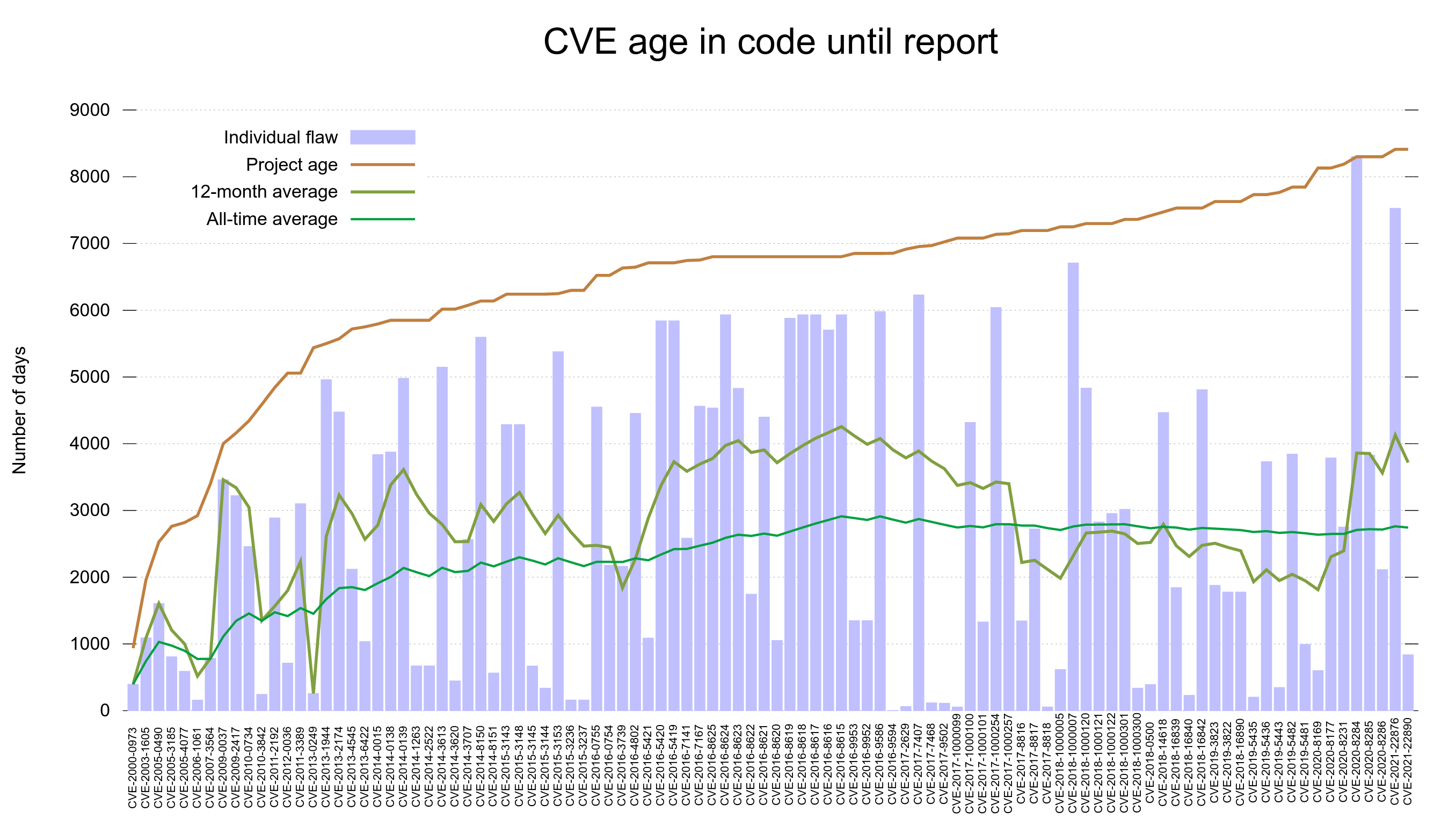

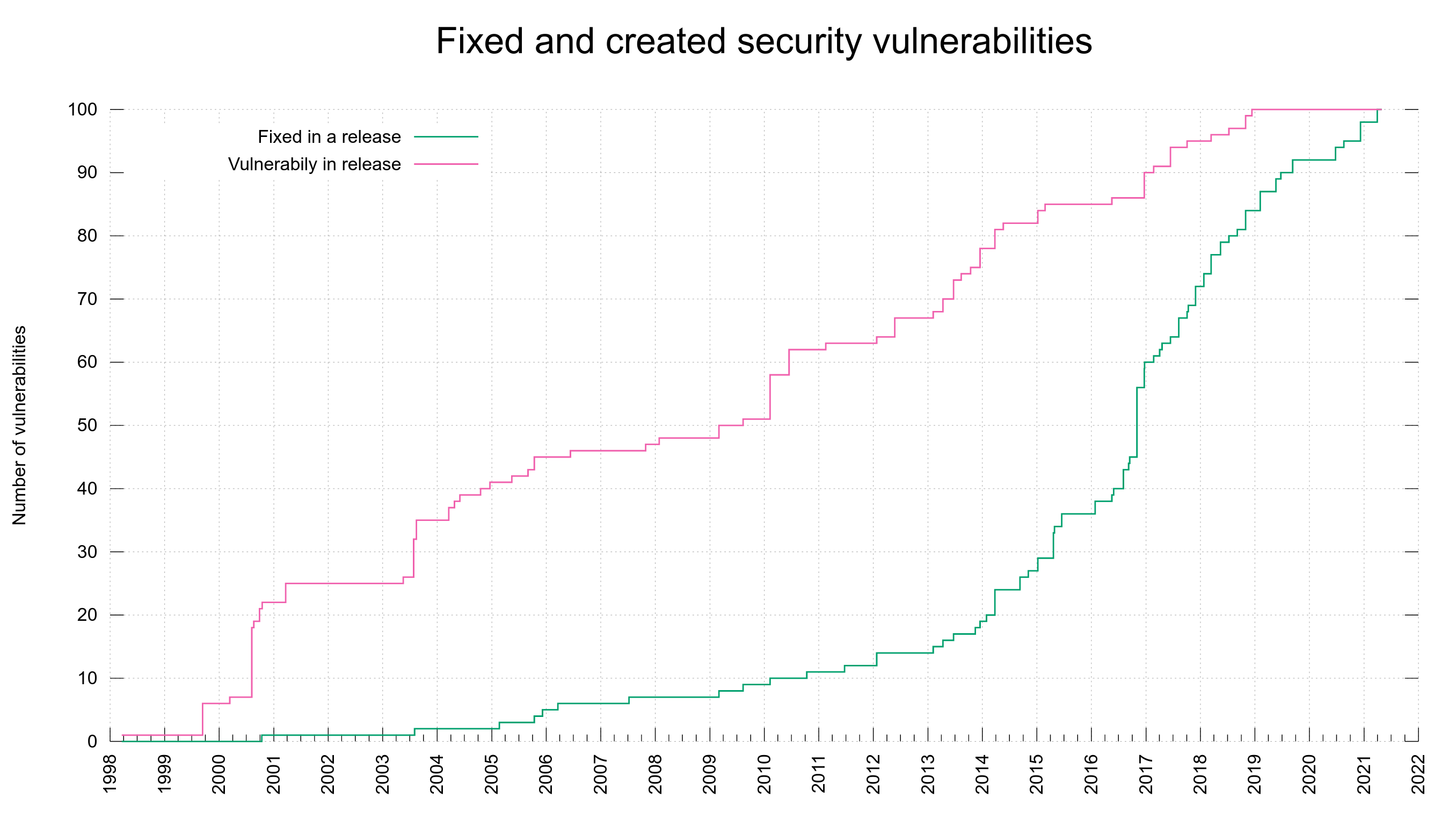

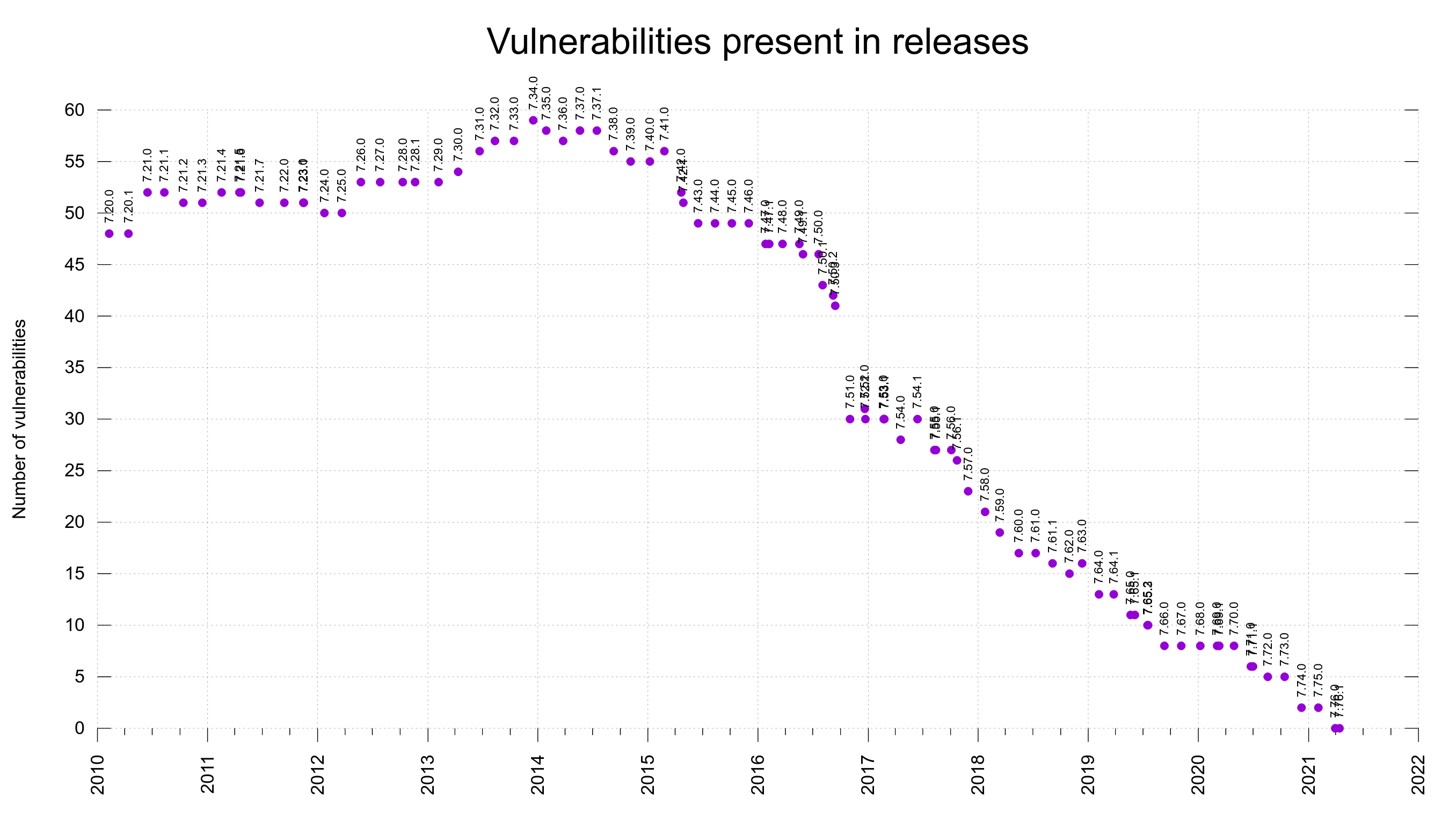

Security

We set two new records in the curl bug-bounty program this time as mentioned above. These are the issues that made them happen.

CVE-2021-22901: TLS session caching disaster

This is a Use-After-Free in the OpenSSL backend code that in the absolutely worst case can lead to an RCE, a Remote Code Execution. The flaw is reasonably recently added and it’s very hard to exploit but you should upgrade or patch immediately.

The issue occurs when TLS session related info is sent from the TLS server when the transfer that previously used it is already done and gone.

The reporter was awarded 2,000 USD for this finding.

CVE-2021-22898: TELNET stack contents disclosure

When libcurl accepts custom TELNET options to send to the server, it the input parser was flawed which could be exploited to have libcurl instead send contents from the stack.

The reporter was awarded 1,000 USD for this finding.

CVE-2021-22897: schannel cipher selection surprise

In the Schannel backend code, the selected cipher for a transfer done with was stored in a static variable. This caused one transfer’s choice to weaken the choice for a single set transfer could unknowingly affect other connections to a lower security grade than intended.

The reporter was awarded 800 USD for this finding.

Changes

In this release we introduce 5 new changes that might be interesting to take a look at!

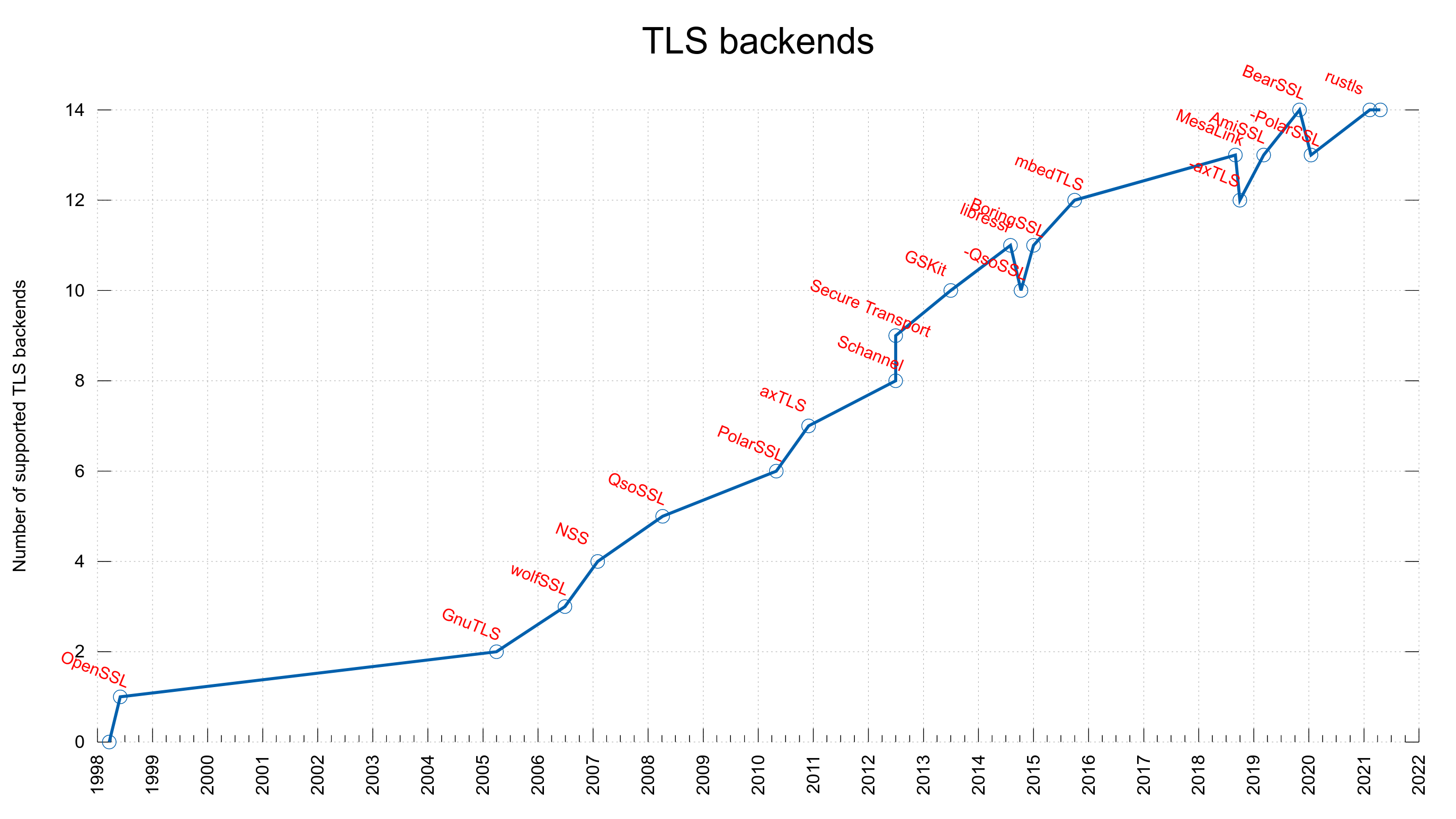

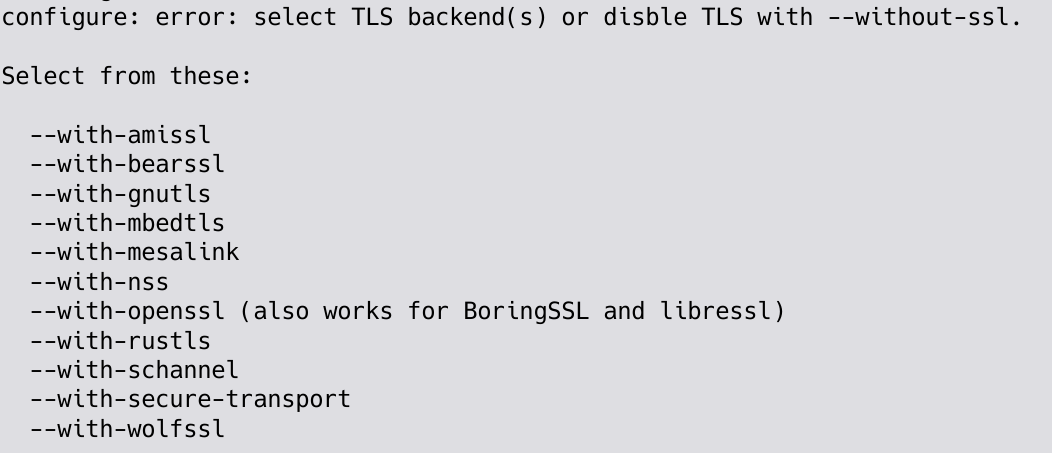

Make TLS flavor explicit

As explained separately, the curl configure script no longer defaults to selecting a particular TLS library. When you build curl with configure now, you need to select which library to use. No special treatment for any of them!

No more SSL

curl now has no more traces of support for SSLv2 or SSLv3. Those ancient and insecure SSL versions were already disabled by default by TLS libraries everywhere, but now it’s also impossible to activate them even in special build. Stripped out from both the curl tool and the library (thus counted as two changes).

HSTS in the build

We brought HSTS support a while ago, but now we finally remove the experimental label and ship it enabled in the build by default for everyone to use it more easily.

In-memory cert API

We introduce API options for libcurl that allow users to specify certificates in-memory instead of using files in the file system. See CURLOPT_CAINFO_BLOB.

Favorite bug-fixes

Again we manage to perform a large amount of fixes in this release, so I’m highlighting a few of the ones I find most interesting!

Version output

The first line of curl -V output got updated: libcurl now includes OpenLDAP and its version of that was used in the build, and then the curl tool can add libmetalink and its version of that was used in the build!

curl_mprintf: add description

We’ve provided the *printf() clone functions in the API since forever, but we’ve tried to discourage users from using them. Still, now we have a first shot at a man page that clearly describes how they work.

This is important as they’re not quite POSIX compliant and users who against our advice decide to rely on them need to be able to know how they work!

CURLOPT_IPRESOLVE: preventing wrong IP version from being used

This option was made a little stricter than before. Previously, it would be lax about existing connections and prefer reuse instead of resolving again, but starting now this option makes sure to only use a connection with the request IP version.

This allows applications to explicitly create two separate connections to the same host using different IP versions when desired, which previously libcurl wouldn’t easily let you do.

Ignore SIGPIPE in curl_easy_send

libcurl makes its best at ignoring SIGPIPE everywhere and here we identified a spot where we had missed it… We also made sure to enable the ignoring logic when built to use wolfSSL.

Several HTTP/2-fixes

There are no less than 6 separate fixes mentioned in the HTTP/2 module in this release. Some potential memory leaks but also some more behavior improving things. Possibly the most important one was the move of the transfer-related error code from the connection struct to the transfers struct since it was vulnerable to a race condition that could make it wrong. Another related fix is that libcurl no longer forcibly disconnects a connection over which a transfer gets HTTP_1_1_REQUIRED returned.

Partial CONNECT requests

When the CONNECT HTTP request sent to a proxy wasn’t all sent in a single send() call, curl would fail. It is baffling that this bug hasn’t been found or reported earlier but was detected this time when the reporter issued a CONNECT request that was larger than 16 kilobytes…

TLS: add USE_HTTP2 define

There was several remaining bad assumptions that HTTP/2 support in curl relies purely on nghttp2. This is no longer true as HTTP/2 support can also be provide by hyper.

normalize numerical IPv4 hosts

The URL parser now knows about the special IPv4 numerical formats and parses and normalizes URLs with numerical IPv4 addresses.

Timeout, timed out libssh2 disconnects too

When libcurl (built with libssh2 support) stopped an SFTP transfer because a timeout was triggered, the following SFTP disconnect procedure was subsequently also stopped because of the same timeout and therefore wasn’t allowed to properly clean up everything, leading to a memory-leak!

IRC network switch

We moved the #curl IRC channel to the new network libera.chat. Come join us there!

Next release

On Jul 21, 2021 we plan to ship the next release. The version number for that is not yet decided but we have changes in the pipeline, making a minor version number bump very likely.

Credits

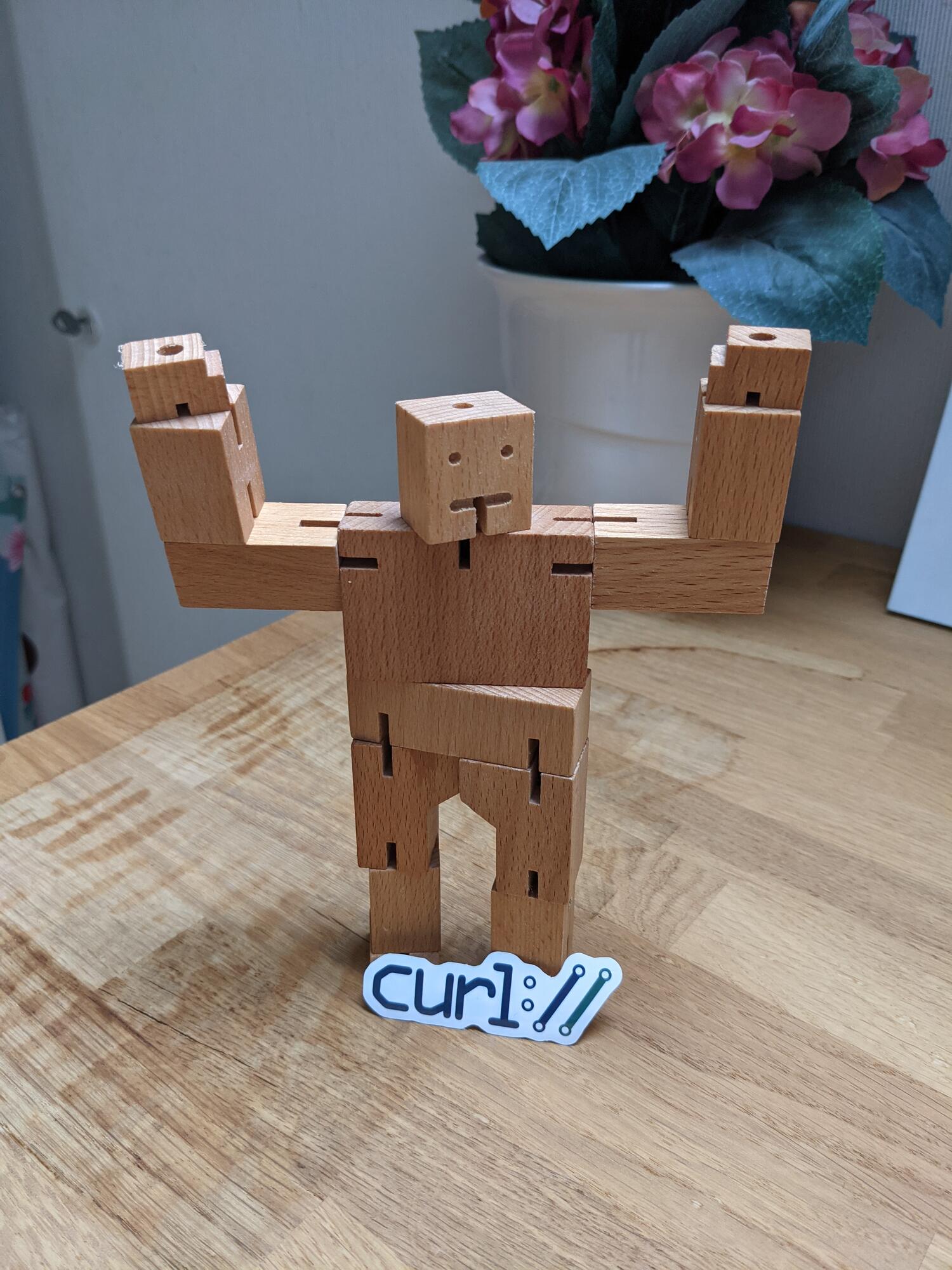

7.77.0 release image by Filip Dimitrovski.