There’s a plan for version 8 being forged! Let me just take you back a bit in time first.

The early days

When we first created libcurl, we bumped the major version number of the project from the previous version 6 to version 7. In late summer of 2000 we shipped curl and libcurl 7.1 as the first ever release that featured a separate library for Internet transfer powers. Everything before version 7 was just the command line tool, curl. That was the moment in time we decided we should leave kindergarten and we were ready to take on some tougher loads.

I had the main approach to the API worked out already from the start. It would be transfer-oriented, it would be built up around URLs and shouldn’t necessarily require that the users are themselves protocol experts to use it. I used inspiration from ioctl and fcntl when I made curl_easy_setopt and curl_easy_getinfo. They’re fixed functions with flexible arguments. The idea was that by doing that, we wouldn’t have to add new functions for new features but we would “just” add options and new options would simply just not work with older libcurls.
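To illustrate the "fixed function, flexible arguments" idea, here is a toy sketch in Python. It is not libcurl's implementation: the `ToyHandle` class, the option names and the boolean return value are all hypothetical and only model the design choice described above, where a new feature becomes a new option rather than a new function, and an older library simply does not accept it.

```python
# Toy model only: class, option names and return values are hypothetical
# and do not reflect libcurl's real option numbering or error codes.
class ToyHandle:
    def __init__(self, supported_options):
        # The set of options this "library version" knows about.
        self._supported = frozenset(supported_options)
        self._values = {}

    def setopt(self, option, value):
        """Single fixed entry point; returns True if the option was accepted."""
        if option not in self._supported:
            return False  # unknown to this version, so it simply does not work
        self._values[option] = value
        return True


# An "older" and a "newer" library expose the same function.
old_library = ToyHandle({"URL", "TIMEOUT"})
new_library = ToyHandle({"URL", "TIMEOUT", "HYPOTHETICAL_NEW_FEATURE"})

for name, handle in (("old", old_library), ("new", new_library)):
    accepted = handle.setopt("HYPOTHETICAL_NEW_FEATURE", 1)
    print(name, "accepts the new option:", accepted)
```

The application code is identical against both versions; only the outcome of setting the newer option differs.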

The first API we shipped also only provided synchronous single transfers. The “easy” interface.

I had no anticipations or particular hopes for the library then. It would be cool if someone found good use for it and it would be even cooler if someone would help out to improve it further.

It grew, I learned

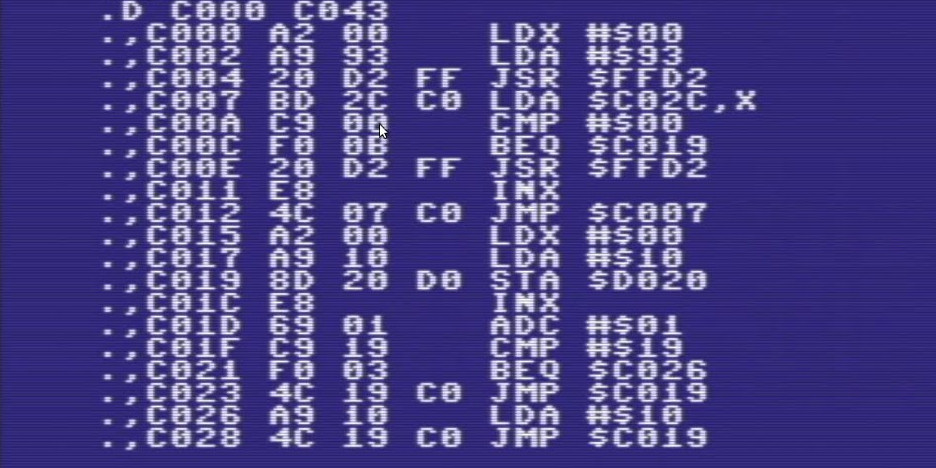

I had never before developed and shipped a library for the world to use. I hadn’t really fully grasped and considered the impact of APIs and ABI stability etc.

We gradually improved the library over time. We bumped the SONAME several times in the first few years as we modified internals. At the same time the library caught on with a bigger and bigger audience, and in September 2006, as I ripped out code for what is commonly referred to as “FTP third party transfers”, I once again bumped the ABI number (to 4) since the older libcurl was no longer compatible with the new release.

People don’t like SONAME bumps

That bump was met with quite a lot of resistance and objections among users. Changing the SONAME of a widely used library, it turns out, causes a lot of pain, agony and squeaking. Possibly this was one of the earlier signs that libcurl had grown up and I decided that we should try to avoid going through this again. We shall not break ABI compatibility again. Ever.

No bumps, no worries

In this world with no SONAME bumps I wanted to keep that solidity visible in the major number of the project, so even though the version numbers of the releases aren’t strictly related to the SONAME, we kept shipping curl version seven. In September 2021, we reach curl 7.79.0.

We’ve managed to stick to our goals and a libcurl-using application binary built after September 2006 can run with the latest libcurl with no modification needed! This is one of the biggest edges and “selling points” we have in libcurl: We take compatibility and unmodified behavior very seriously.

In 2013 I even wrote a blog post emphasizing this and in there I said there won’t be a curl version 8 “in a long time”. But read on, things have changed a little!

An ever-growing minor number

We bump the minor version number every time we “change” something in the project, or add features. If we only do bug-fixes we only bump the patch number. You know, in classic “major.minor.patch” style.
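The bump rule can be sketched in a few lines of Python. The function name `next_version` and the `has_changes` flag are made up for this illustration, and resetting the patch number to zero on a minor bump is an assumption based on the usual convention, not something stated above.

```python
def next_version(version, has_changes):
    """Return the next (major, minor, patch) tuple under the rule above.

    has_changes: True if the release contains changes or new features,
                 False if it only contains bug-fixes.
    """
    major, minor, patch = version
    if has_changes:
        # Assumption: the patch number restarts at 0 when the minor is bumped.
        return (major, minor + 1, 0)
    return (major, minor, patch + 1)


bugfix_release = next_version((7, 79, 0), has_changes=False)
feature_release = next_version(bugfix_release, has_changes=True)
print(bugfix_release)   # (7, 79, 1)
print(feature_release)  # (7, 80, 0)
```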

As we do curl releases at least every 8 weeks and most releases have changes added, we bump the minor number very frequently, up to 6 times a year or so.

We provide version number information for libcurl as a 24-bit number, using 8 bits for each field. This implies that none of the numbers can ever go above 255. We can’t ship a 7.256.0 for this practical reason.
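A minimal Python sketch of such a packing shows where the 255 limit comes from. The helper names `pack_version` and `unpack_version` are hypothetical, and the field order (major in the highest byte, patch in the lowest) is assumed here to match the `0xXXYYZZ` layout that libcurl documents for `LIBCURL_VERSION_NUM`.

```python
def pack_version(major, minor, patch):
    """Pack three 8-bit fields into one 24-bit number laid out as 0xXXYYZZ."""
    for name, value in (("major", major), ("minor", minor), ("patch", patch)):
        if not 0 <= value <= 0xFF:
            raise ValueError(f"{name}={value} does not fit in 8 bits")
    return (major << 16) | (minor << 8) | patch


def unpack_version(number):
    """Split a 24-bit version number back into (major, minor, patch)."""
    return (number >> 16) & 0xFF, (number >> 8) & 0xFF, number & 0xFF


packed = pack_version(7, 79, 0)
print(hex(packed))             # 0x74f00
print(unpack_version(packed))  # (7, 79, 0)

try:
    pack_version(7, 256, 0)
except ValueError as error:
    print("cannot encode 7.256.0:", error)
```

A number packed this way also compares correctly as a plain integer, which is presumably part of the appeal of the format.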

But also, from what I’ve seen people do with and think about version numbers before, I’m concerned that increasing the minor number beyond 99 will cause confusion. Version 7.100.0 risks getting confused and mixed up with 7.1 or 7.10.0, two versions that are terribly old. And frankly that’s a very large minor number and it starts becoming many digits in that release version.
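The same kind of mix-up can happen in software that handles version strings carelessly. The following Python sketch is only an illustration of that risk, not a description of how any particular tool behaves; `parse_version` is a hypothetical helper.

```python
def parse_version(text):
    """Split a dotted version string into a tuple of integers."""
    return tuple(int(part) for part in text.split("."))


# Reading "major.minor" as a decimal number collapses distinct releases.
same_as_float = float("7.100") == float("7.10") == float("7.1")
print(same_as_float)  # True

# Plain string comparison sorts 7.100.0 before 7.79.0.
string_order_wrong = "7.100.0" < "7.79.0"
print(string_order_wrong)  # True

# Comparing the fields as integers gives the intended order.
tuple_order_right = parse_version("7.100.0") > parse_version("7.79.0")
print(tuple_order_right)  # True
```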

There exists a solution to this!

Reset minor, bump major, keep SONAME

The idea is simply to do “a Linux kernel”. We change to version 8.0.0 at a given point in time, but we stick to the same SONAME as before.

We don’t break any compatibility, there will be no API or functionality cleanups. There will just be a version number bump to lower the minor number and let us start over that journey. Reset the counter so to speak.

When do we do this? We have roughly 20 releases left before the minor counter can reach 100, and with a release every 8 weeks, 20 releases take at least 8*20 = 160 weeks. 160 weeks is about 3.1 years.

Another event within this period

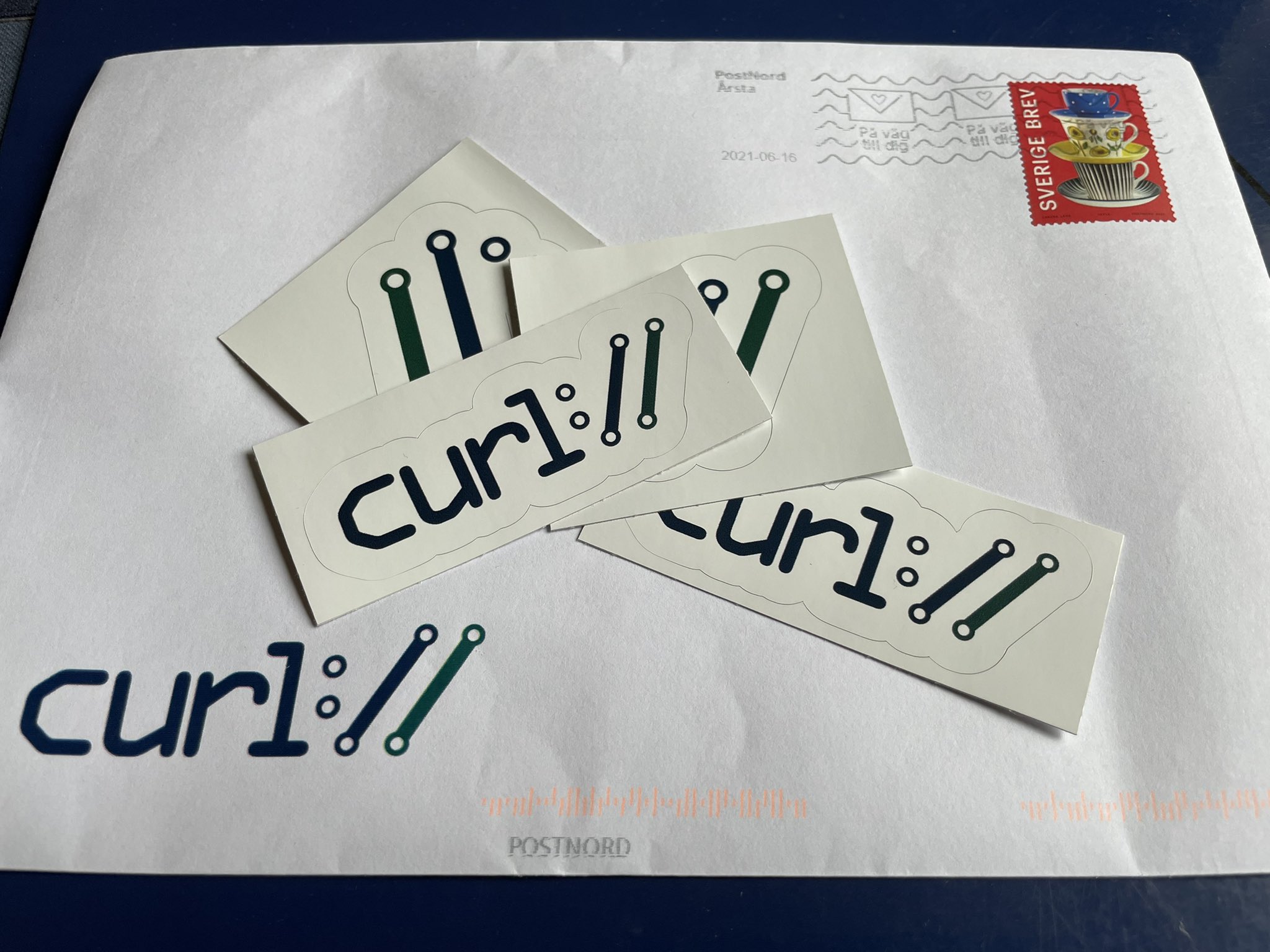

On March 20, 2023, about 18 months into the future, the curl project turns 25 years old. Here’s a golden opportunity! Let’s top off the 25 year celebrations with a major version number bump!

The plan

Independently of what version number we have reached at that point, independently of whether we add features or not in that release, independently of exactly how that date fits within the pre-determined release cycle and without changing any APIs, we ship curl version 8.0.0 on that day.

Turning 25 and bumping the major version number on the same day should be fun.

Version 8

I hope that a small side-effect of bumping the major number will be that users still left on version 7 feel outdated slightly faster and push to get up to 8. It could work as a minor push to get users to catch up a bit.

The minor number will of course immediately start “climbing” again and in a worst-case scenario, we risk reaching minor number 100 again within another 17 years. Maybe we can plan another bump for the 40th birthday?