If you have more or better screenshots, please share!

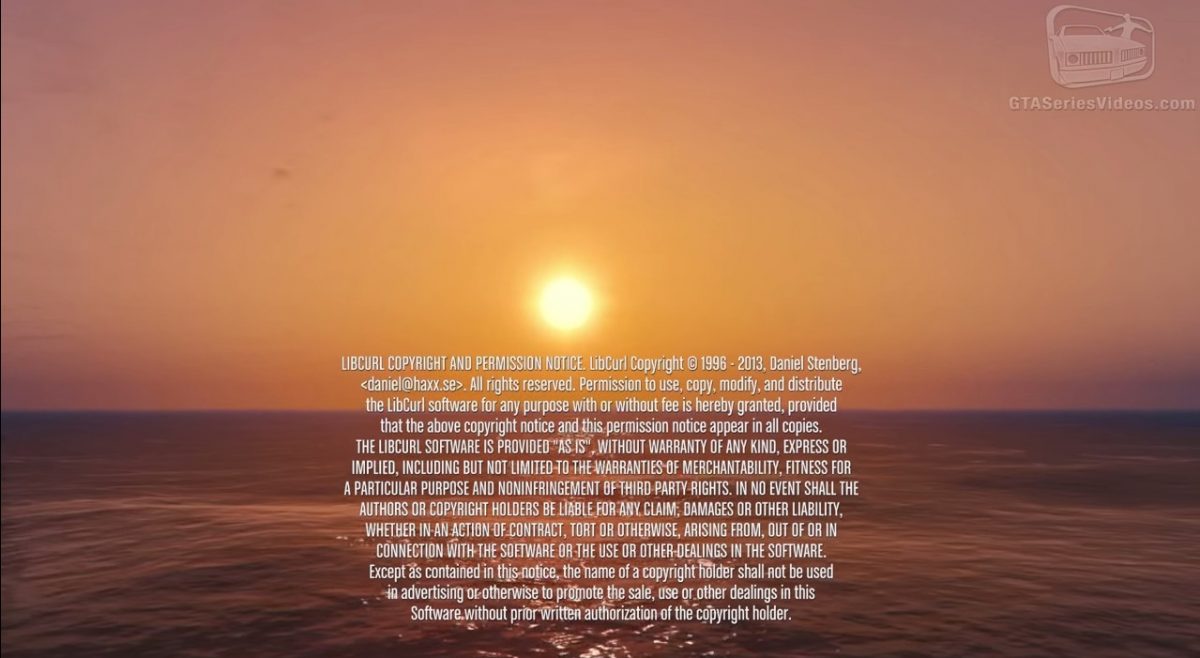

This shot is taken from the ending sequence of the PC version of the game Grand Theft Auto V, 44 minutes in! See the YouTube version.

Sky HD is a satellite TV box.

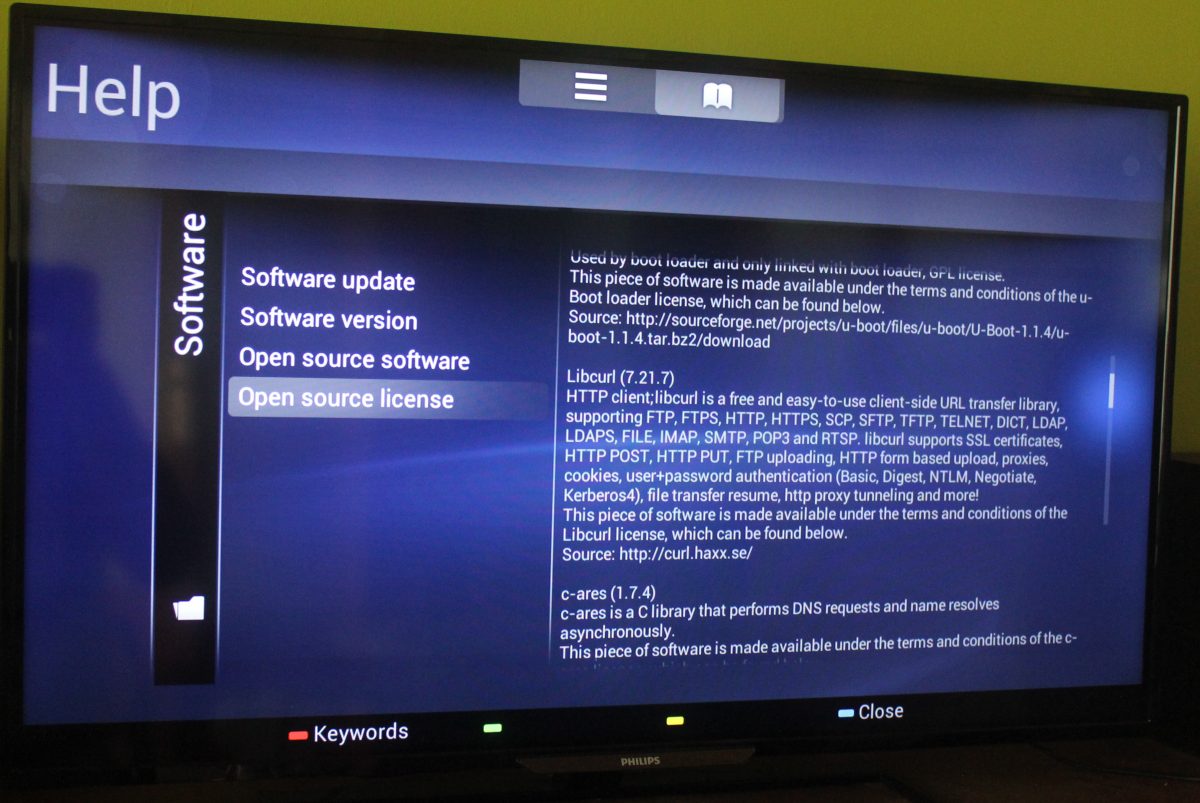

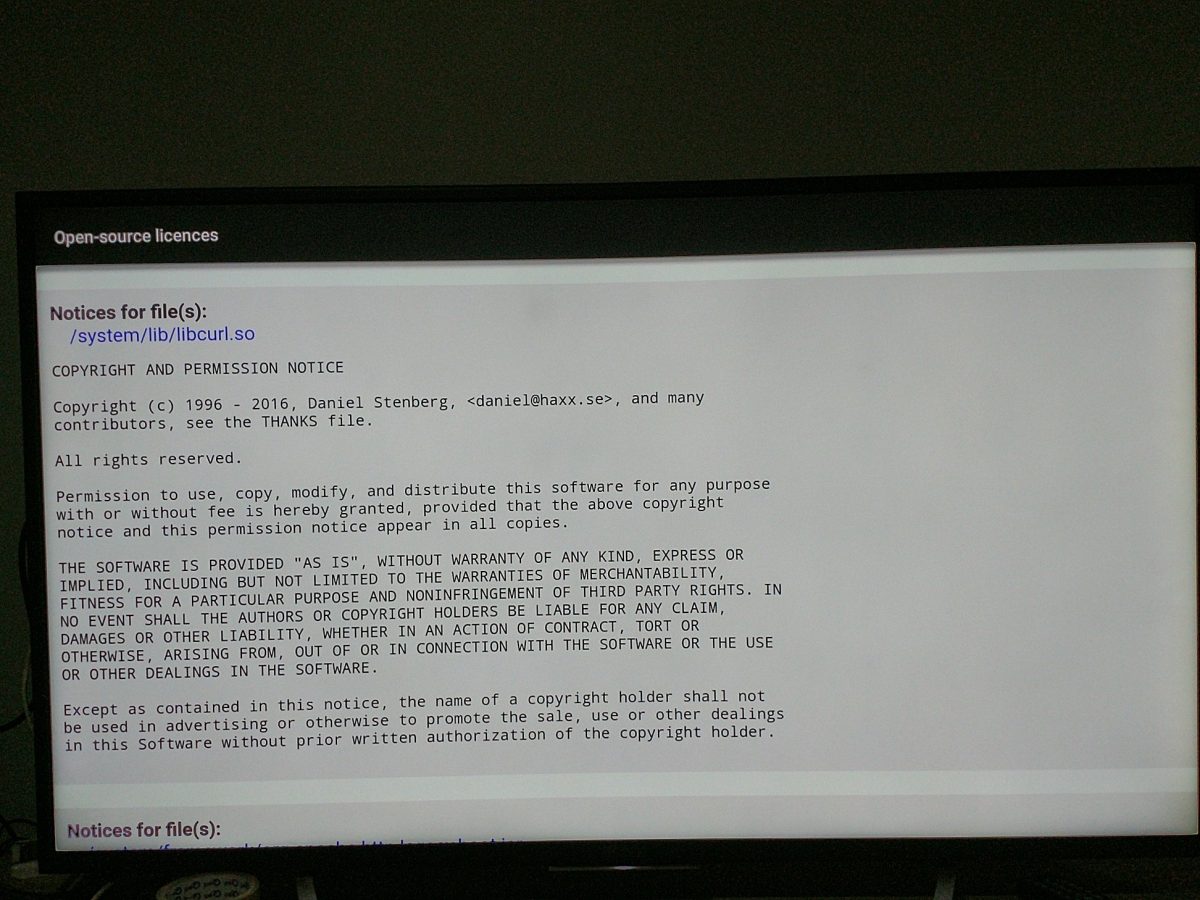

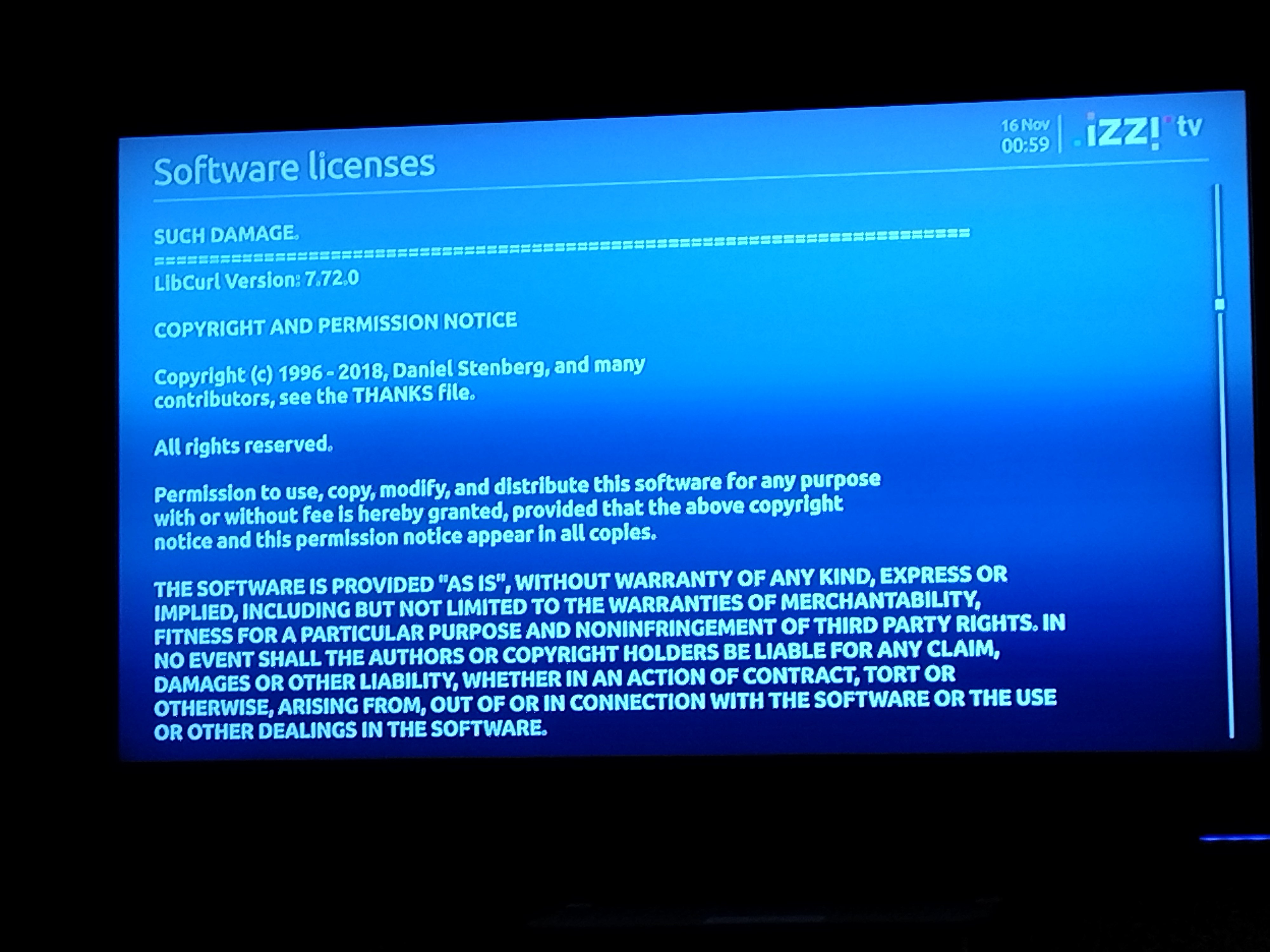

This is a Philips TV. I consider the added use of c-ares a bonus!

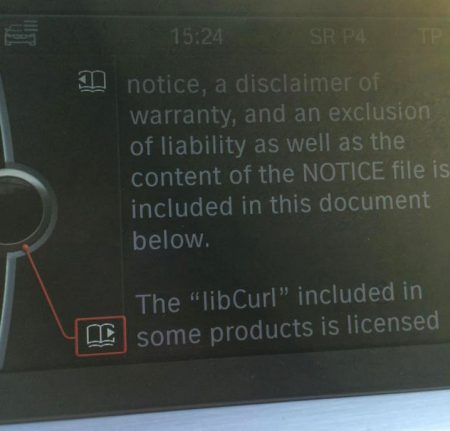

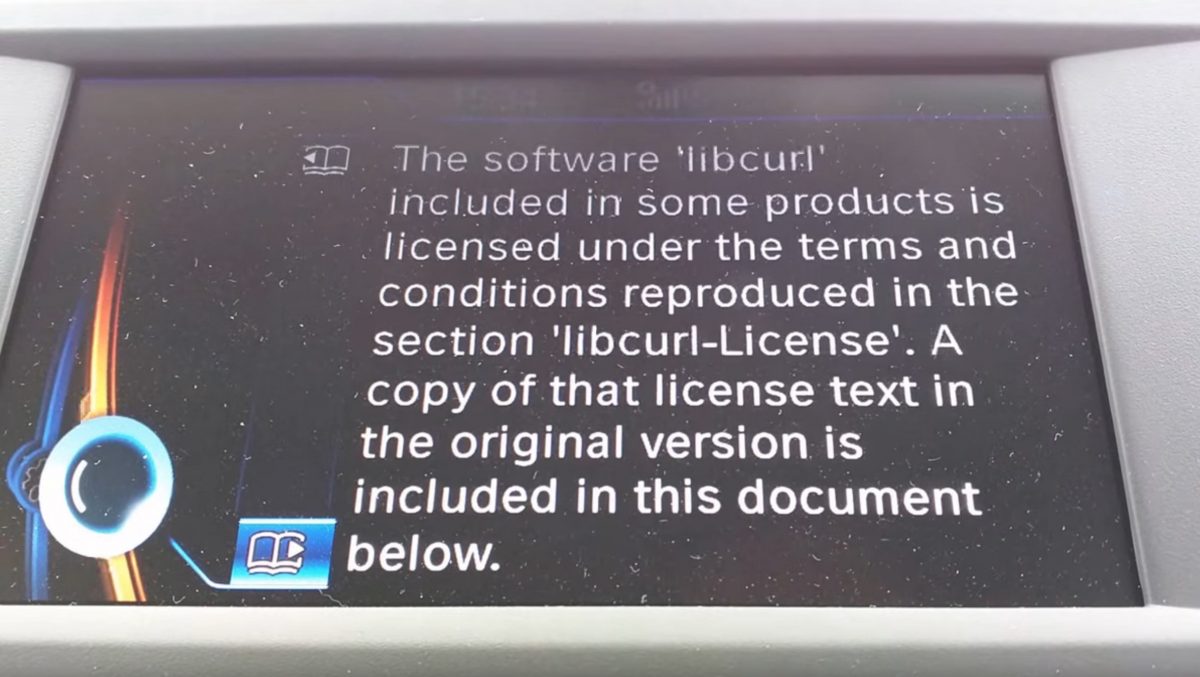

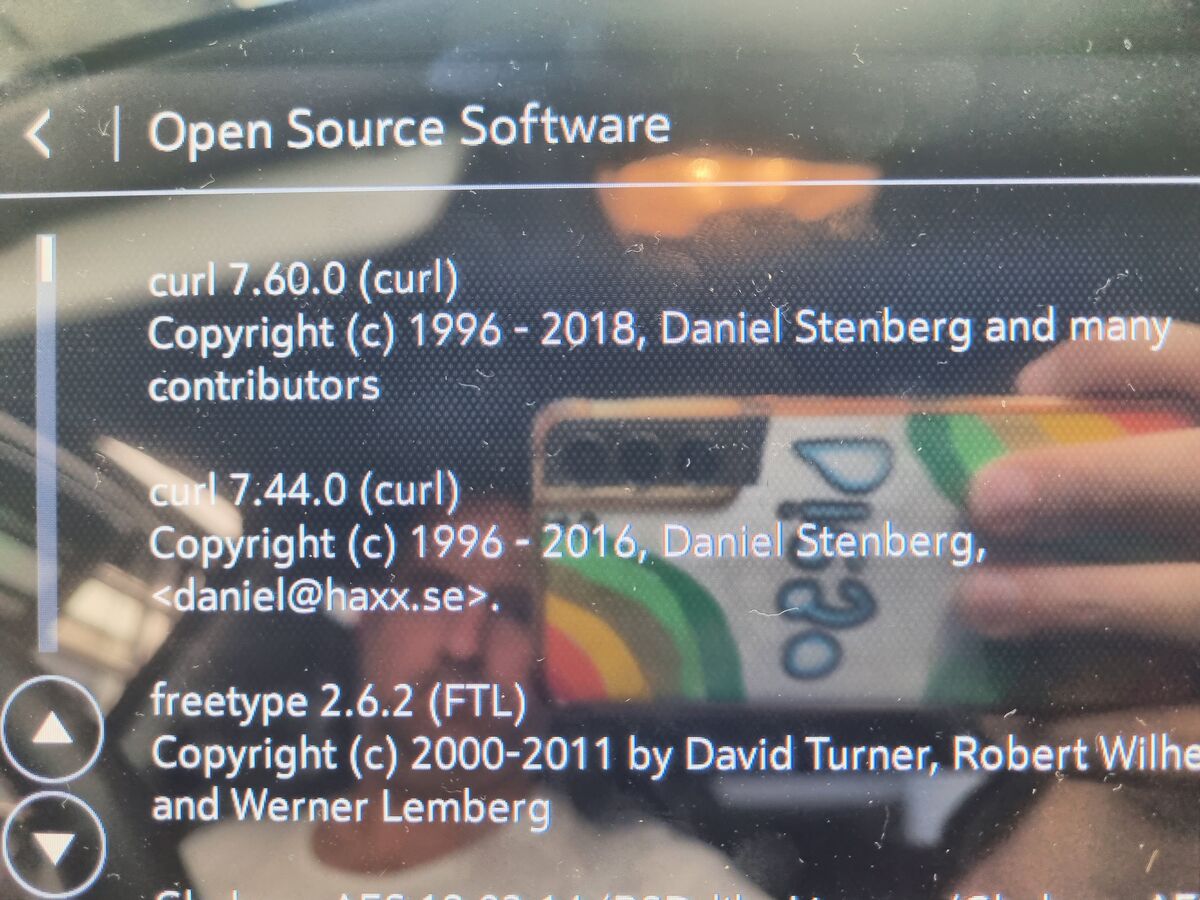

The infotainment display of a BMW car.

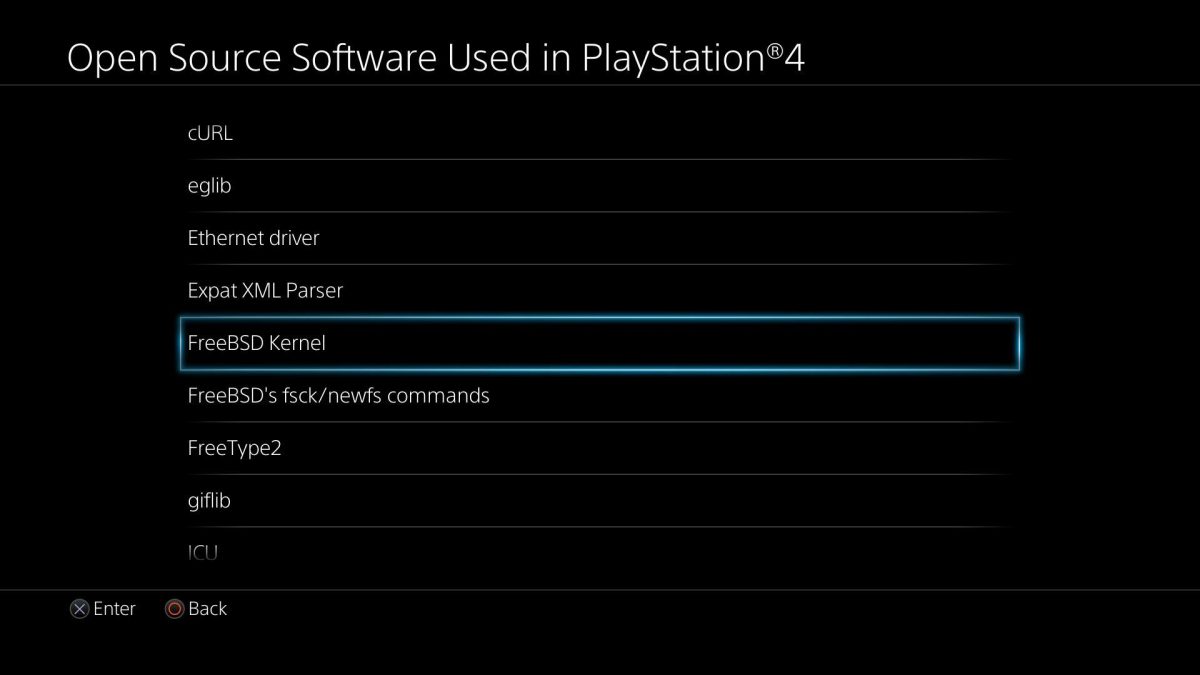

The PlayStation 4 lists the open source products it uses.

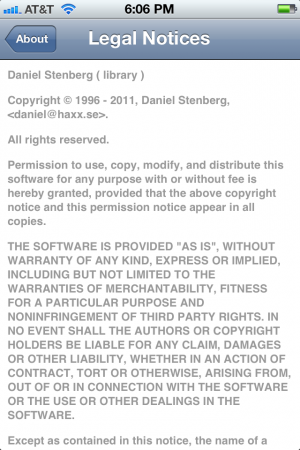

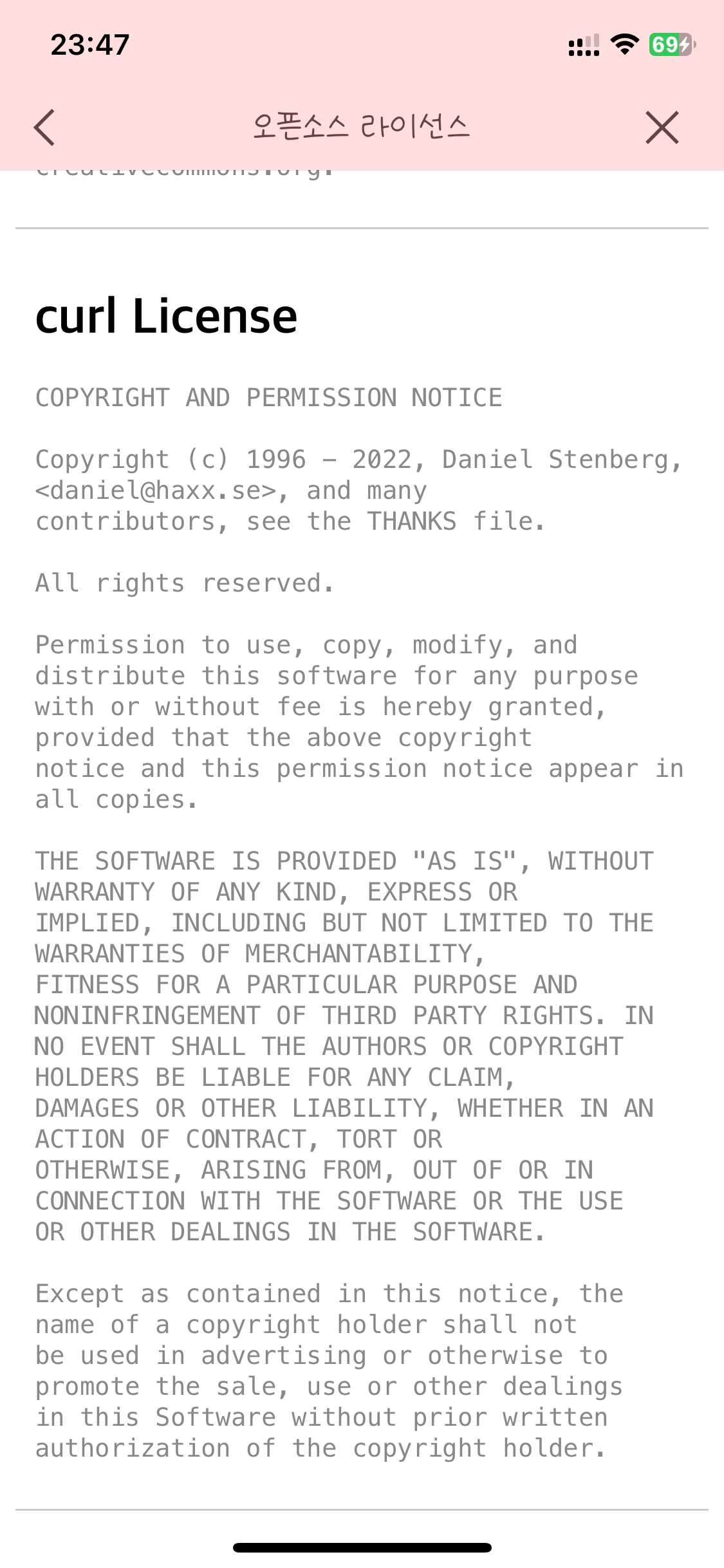

This is a screenshot from an iPhone open source license view. The iOS 10 screen, however, looks like this:

curl in iOS 10 shows an older copyright year span than the much older screenshot?

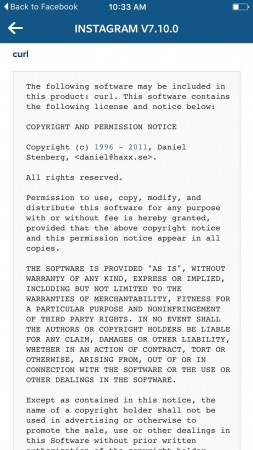

Instagram on an iPhone.

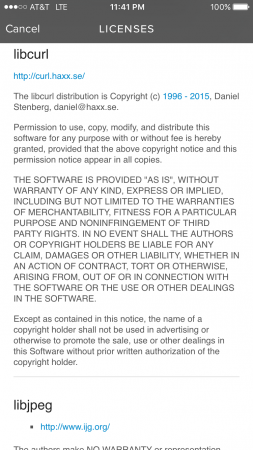

Spotify on an iPhone.

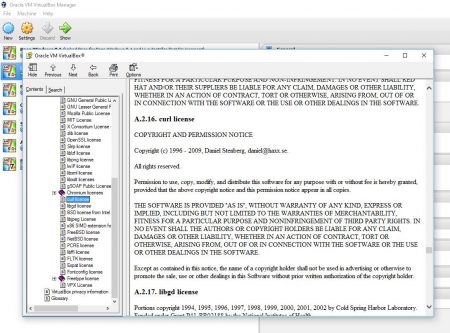

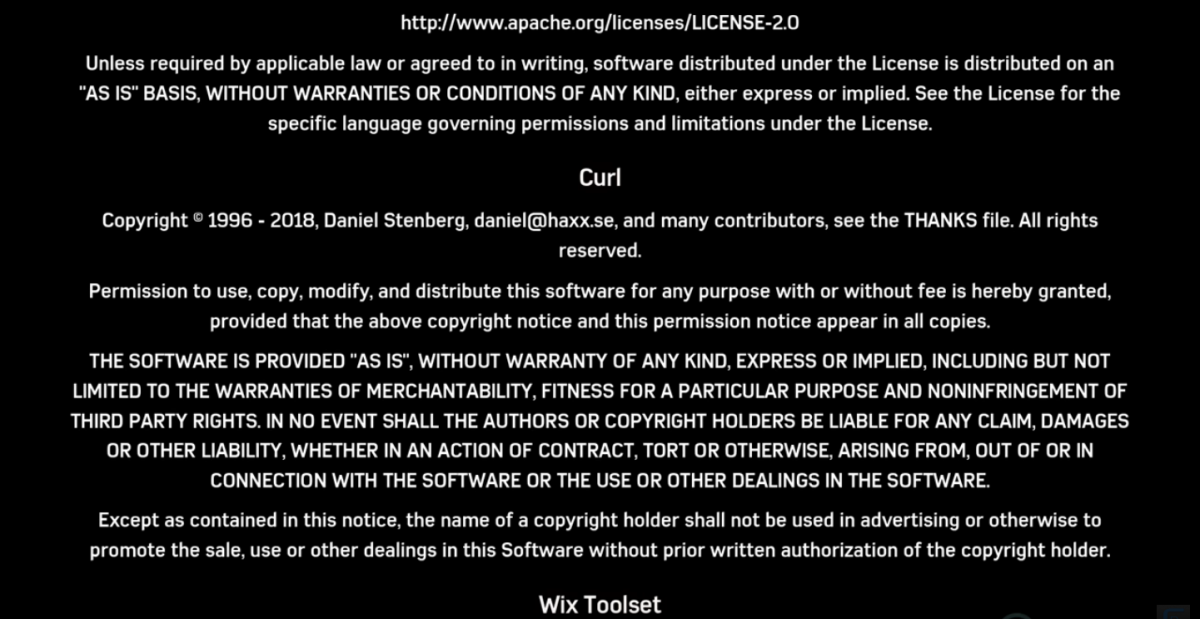

VirtualBox (thanks to Anders Nilsson)

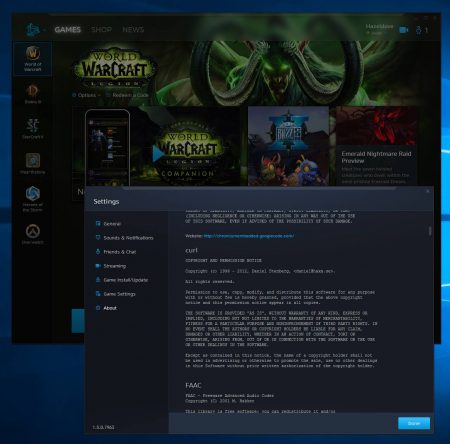

Battle.net (thanks Anders Nilsson)

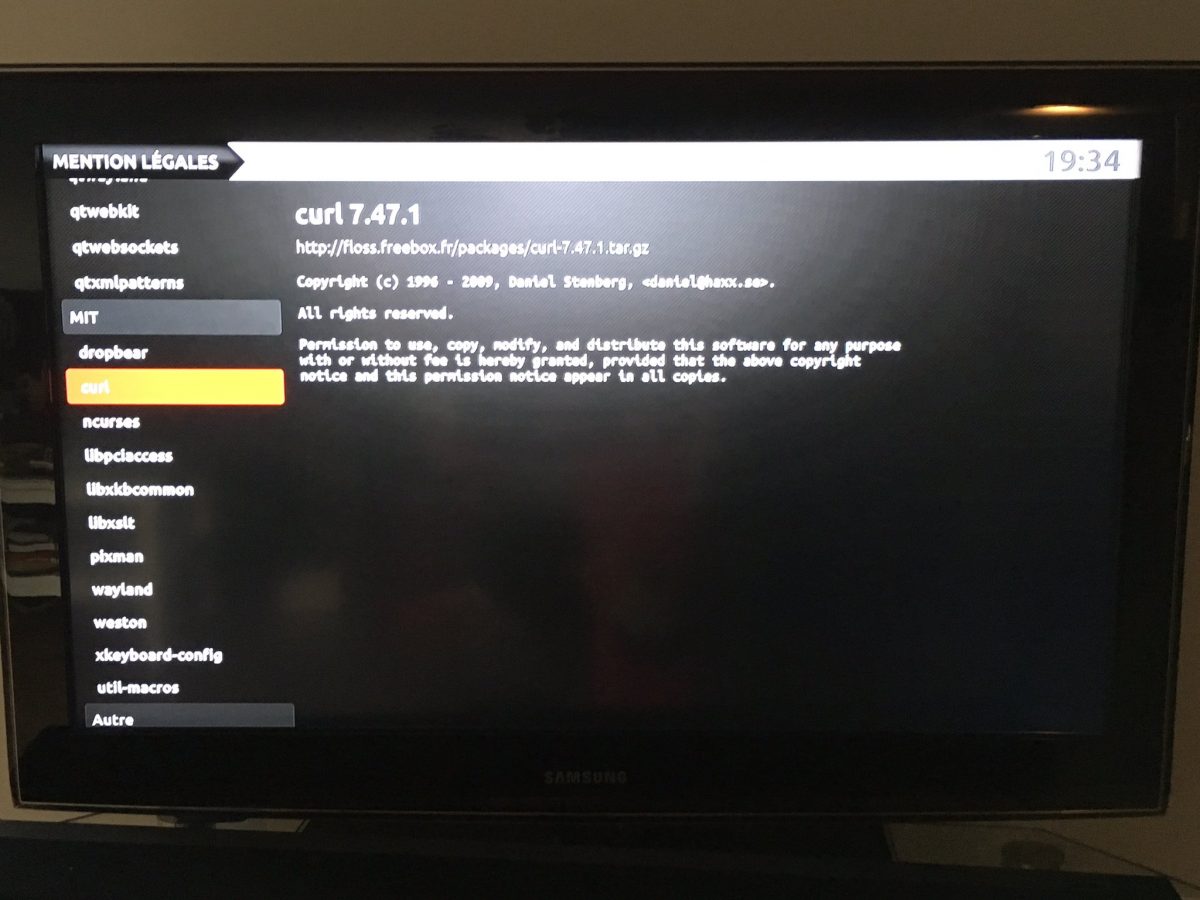

Freebox (thanks Alexis La Goutte)

The YouTube app on Android. (Thanks Ray Satiro)

The YouTube app on iOS (Thanks Anthony Bryan)

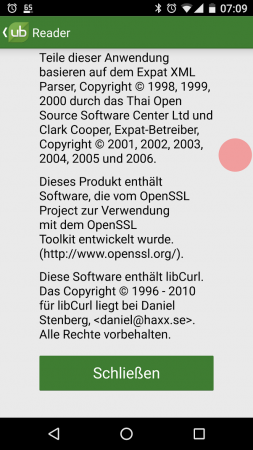

UBReader is an ebook reader app on Android.

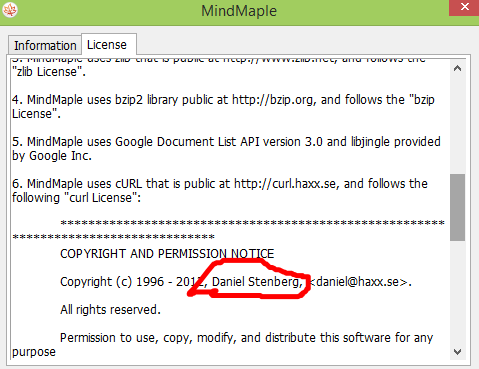

MindMaple uses curl (Thanks to Peter Buyze)

The license screen from a VW Sharan car (Thanks to Jonas Lejon)

Skype on Android

Skype on an iPad

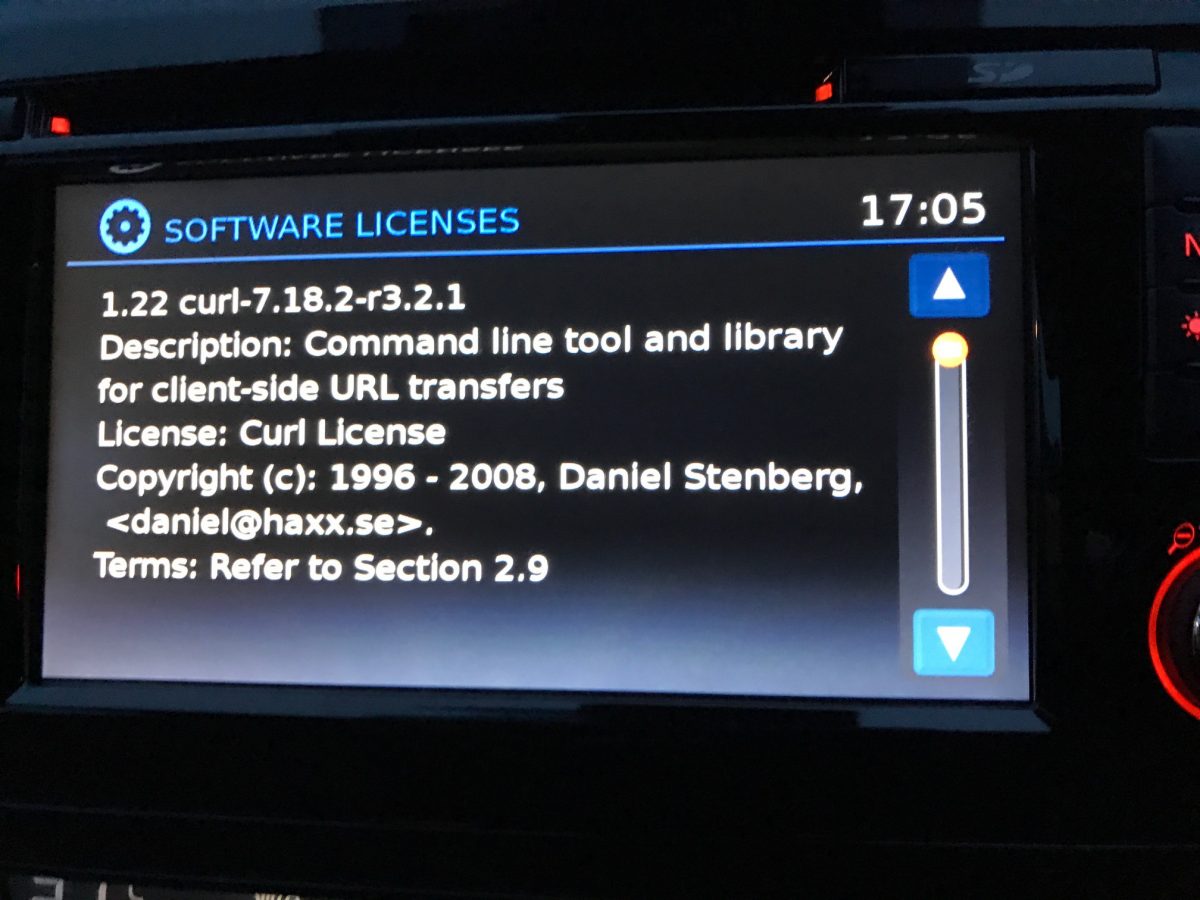

Nissan Qashqai 2016 (thanks to Peteski)

The Mercedes-Benz license agreement from 2015, listing which car models include curl.

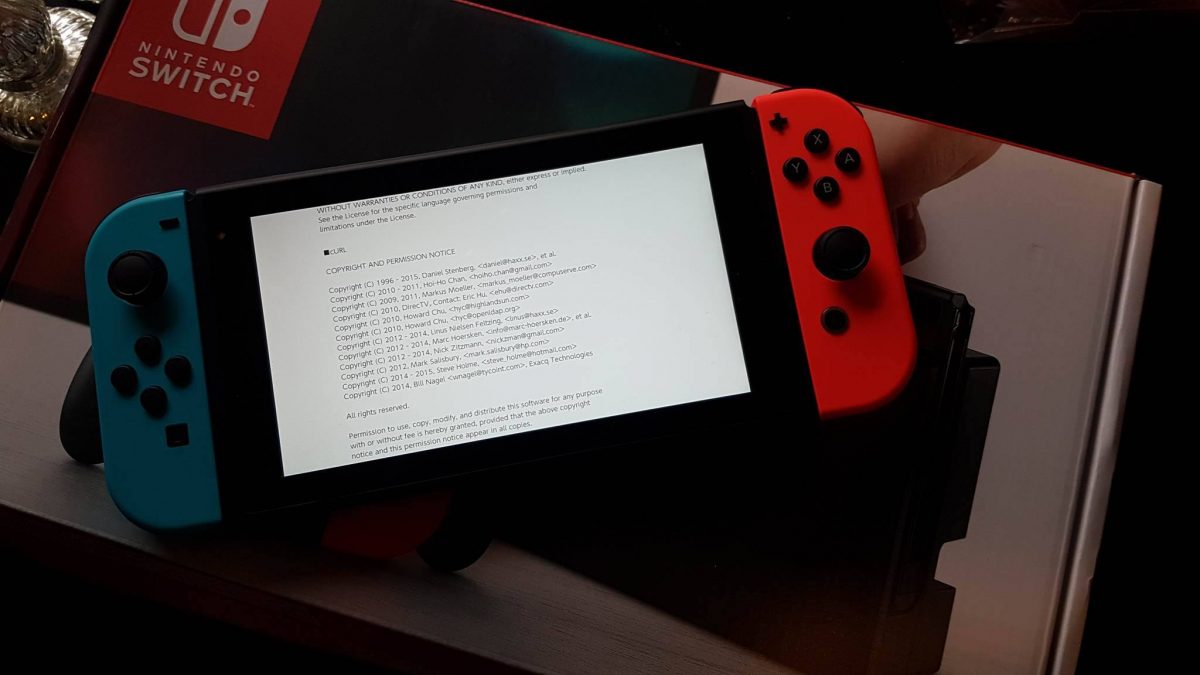

Nintendo Switch uses curl (Thanks to Anders Nilsson)

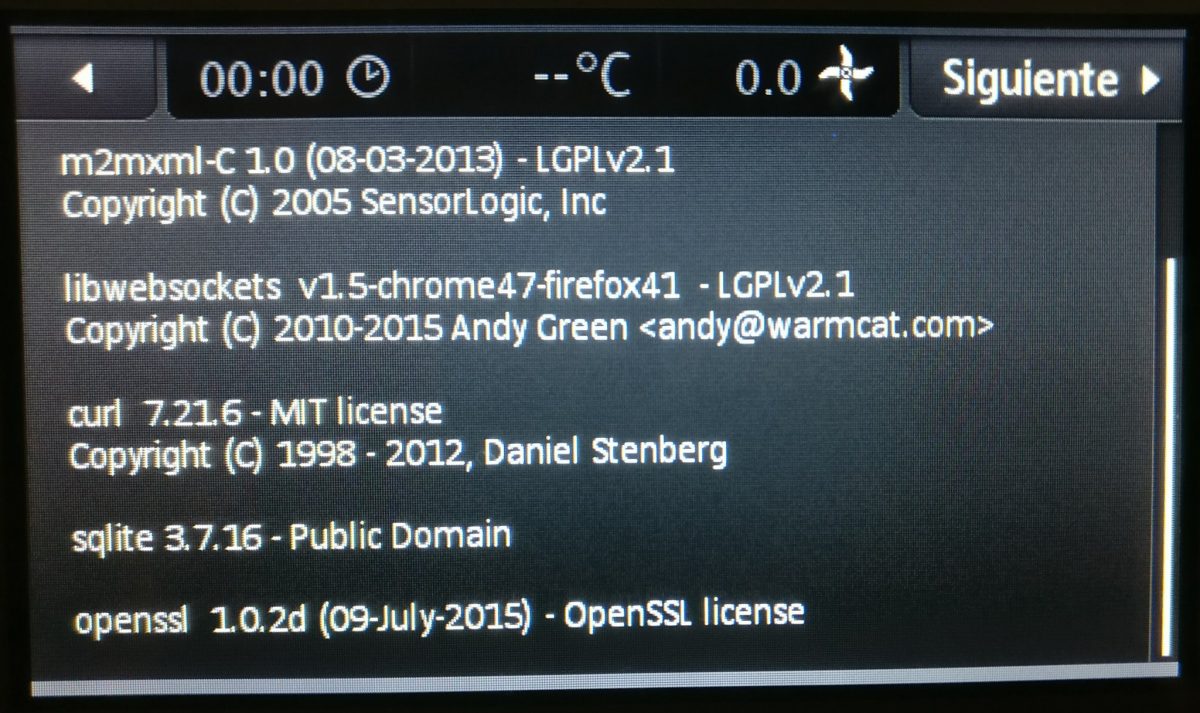

The Thermomix TM 5 kitchen/cooking appliance (Thanks to Sergio Conde)

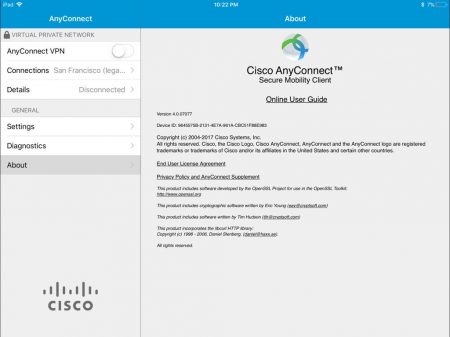

Cisco AnyConnect (Thanks to Dane Knecht). Notice the age of the curl copyright string compared to the main one!

Sony Android TV (Thanks to Sajal Kayan)

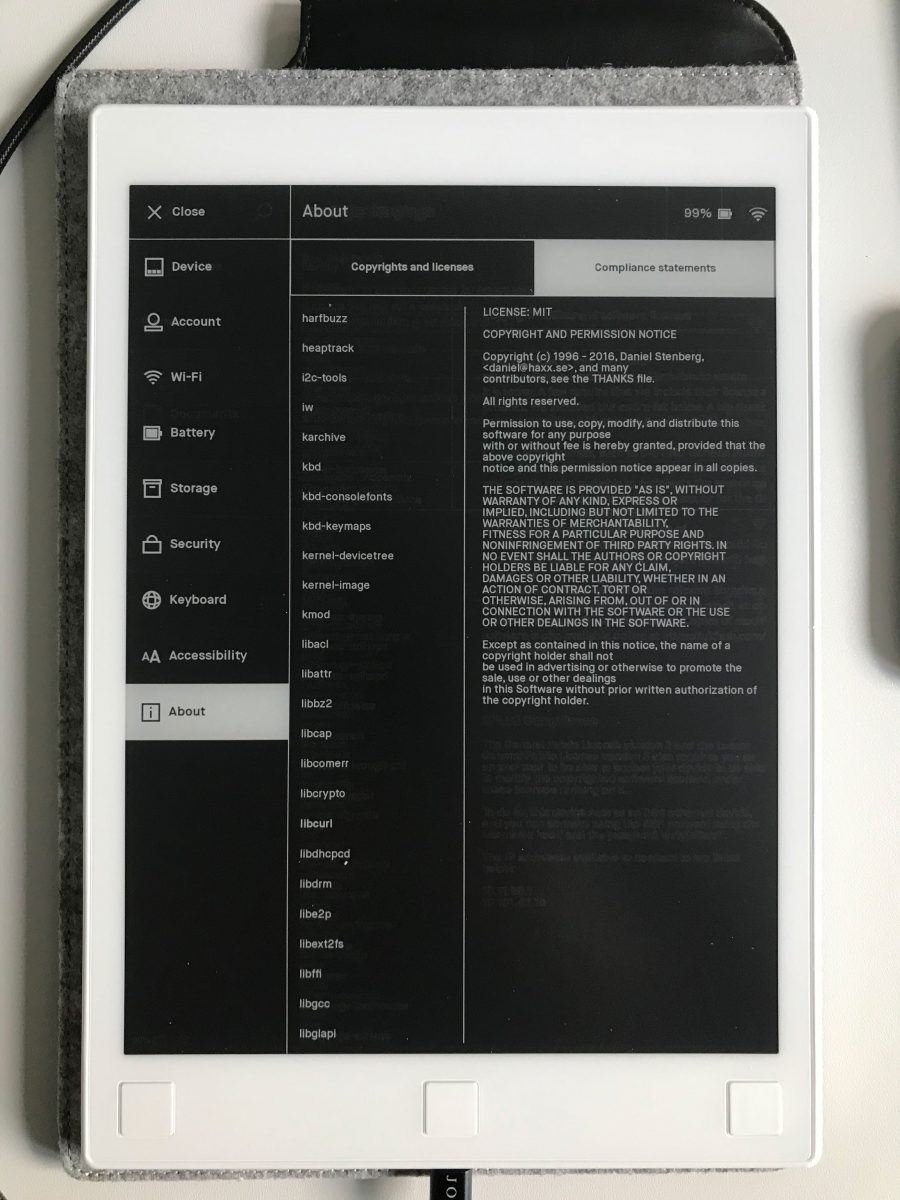

The reMarkable e-paper tablet uses curl. (Thanks to Zakx)

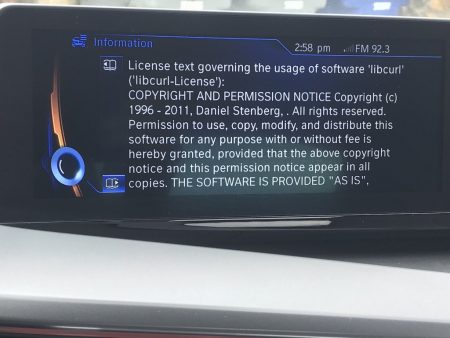

BMW i3, snapshot from this video (Thanks to Terence Eden)

BMW i8. (Thanks to eeeebbbbrrrr)

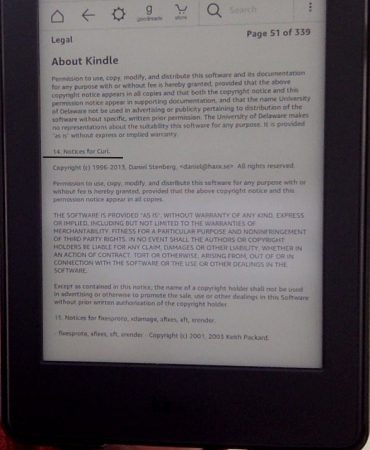

Amazon Kindle Paperwhite 3 (thanks to M Hasbini)

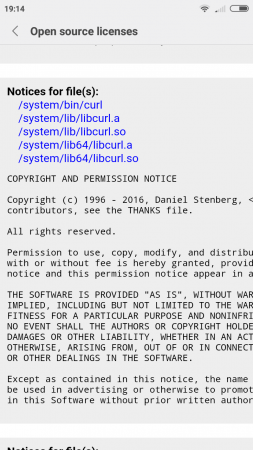

Xiaomi Android uses both curl and libcurl. (Thanks to Björn Stenberg)

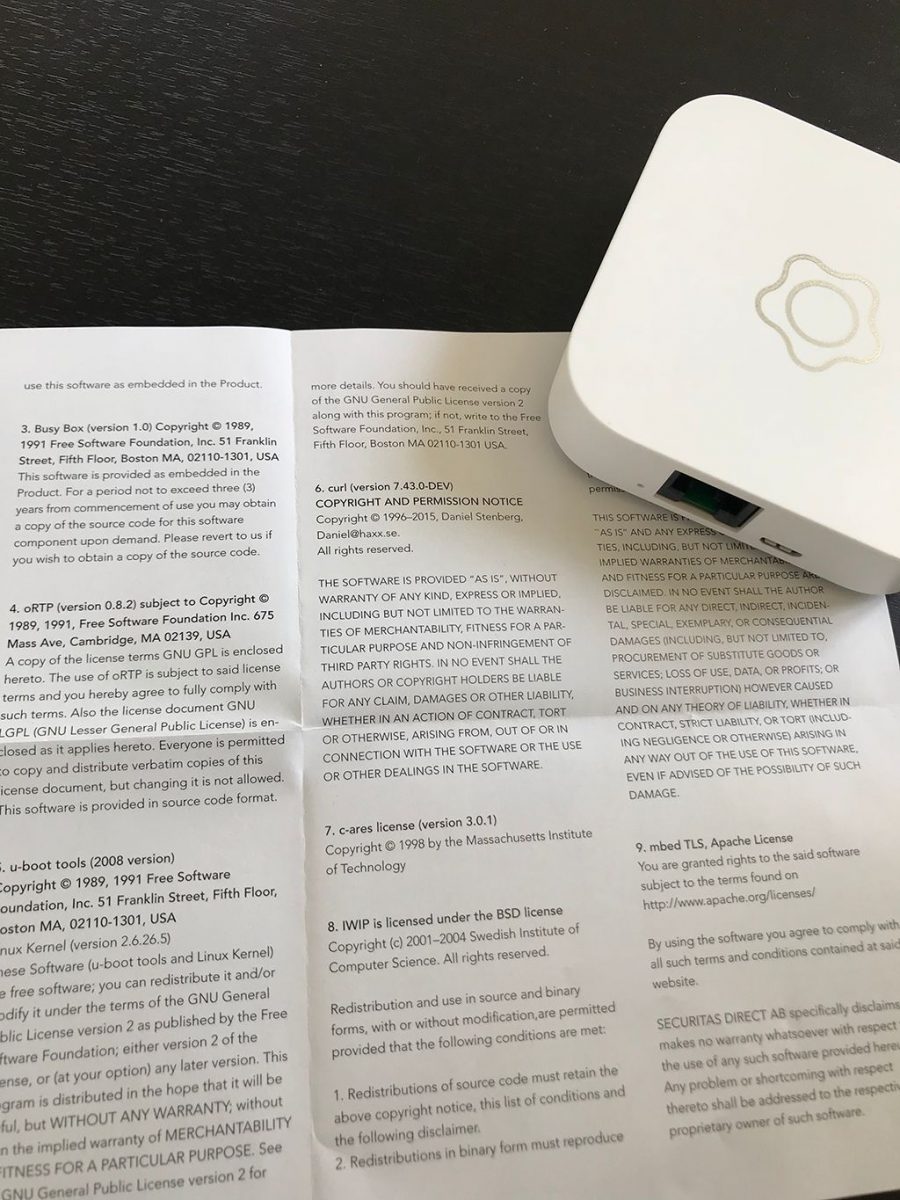

Verisure V box microenhet smart lock runs curl (Thanks to Jonas Lejon)

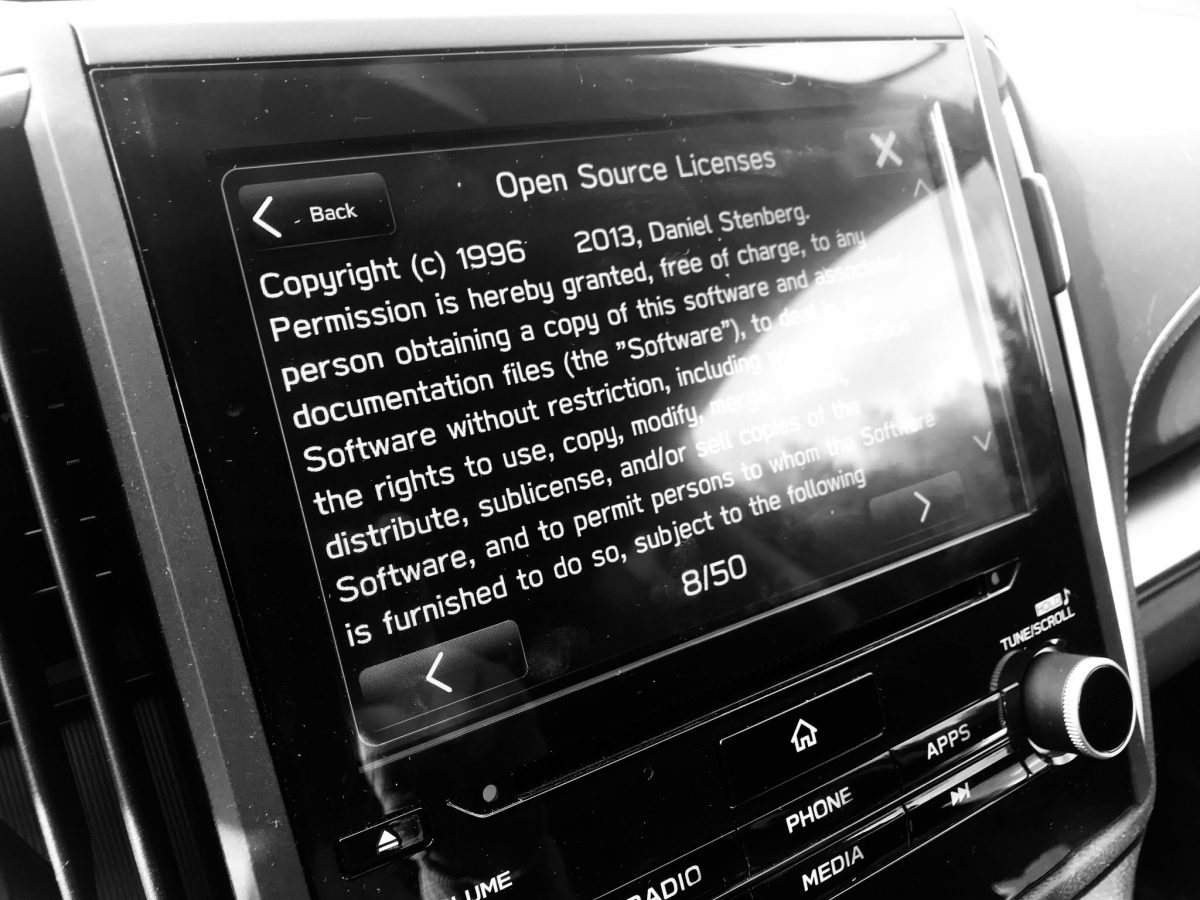

curl in a Subaru (Thanks to Jani Tarvainen)

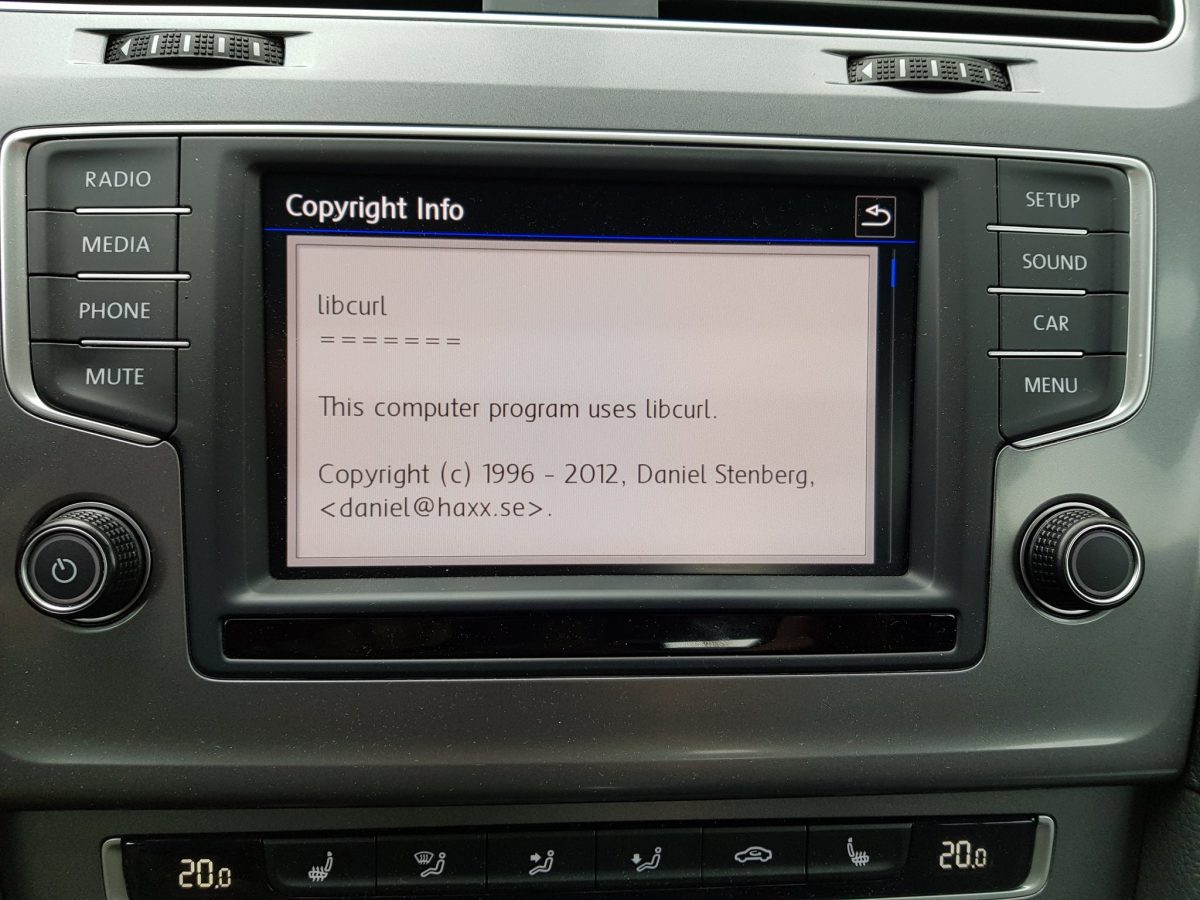

Another VW (Thanks to Michael Topal)

Oppo Android uses curl (Thanks to Dio Oktarianos Putra)

The Chevrolet Traverse 2018 uses curl according to page 403 of its owner's manual. It is mentioned almost identically in other Chevrolet model manuals, such as those for the Corvette, the 2018 Camaro, the 2018 Trax, the 2013 Volt, the 2014 Express and the 2017 Bolt.

The curl license also appears in the owner's manuals for other brands and models, such as the GMC Savana, Cadillac CT6 2016, Opel Zafira, Opel Insignia, Opel Astra, Opel Karl, Opel Cascada, Opel Mokka, Opel Ampera, Vauxhall Astra … (See 100 million cars run curl).

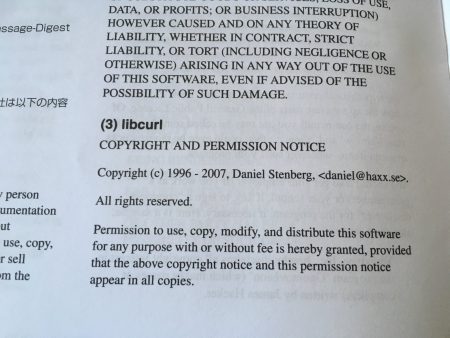

The Onkyo TX-NR609 AV-Receiver uses libcurl as shown by the license in its manual. (Thanks to Marc Hörsken)

Fortnite uses libcurl. (Thanks to Neil McKeown)

Red Dead Redemption 2 uses libcurl, as credited in its ending sequence video. (Thanks to @nadbmal)

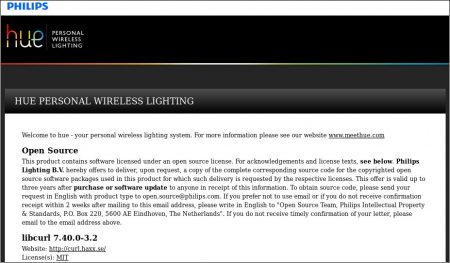

Philips Hue lights use libcurl (Thanks to Lorenzo Fontana)

Pioneer makes Blu-Ray players that use libcurl. (Thanks to Maarten Tijhof)

curl is credited in the game Marvel’s Spider-Man for PS4.

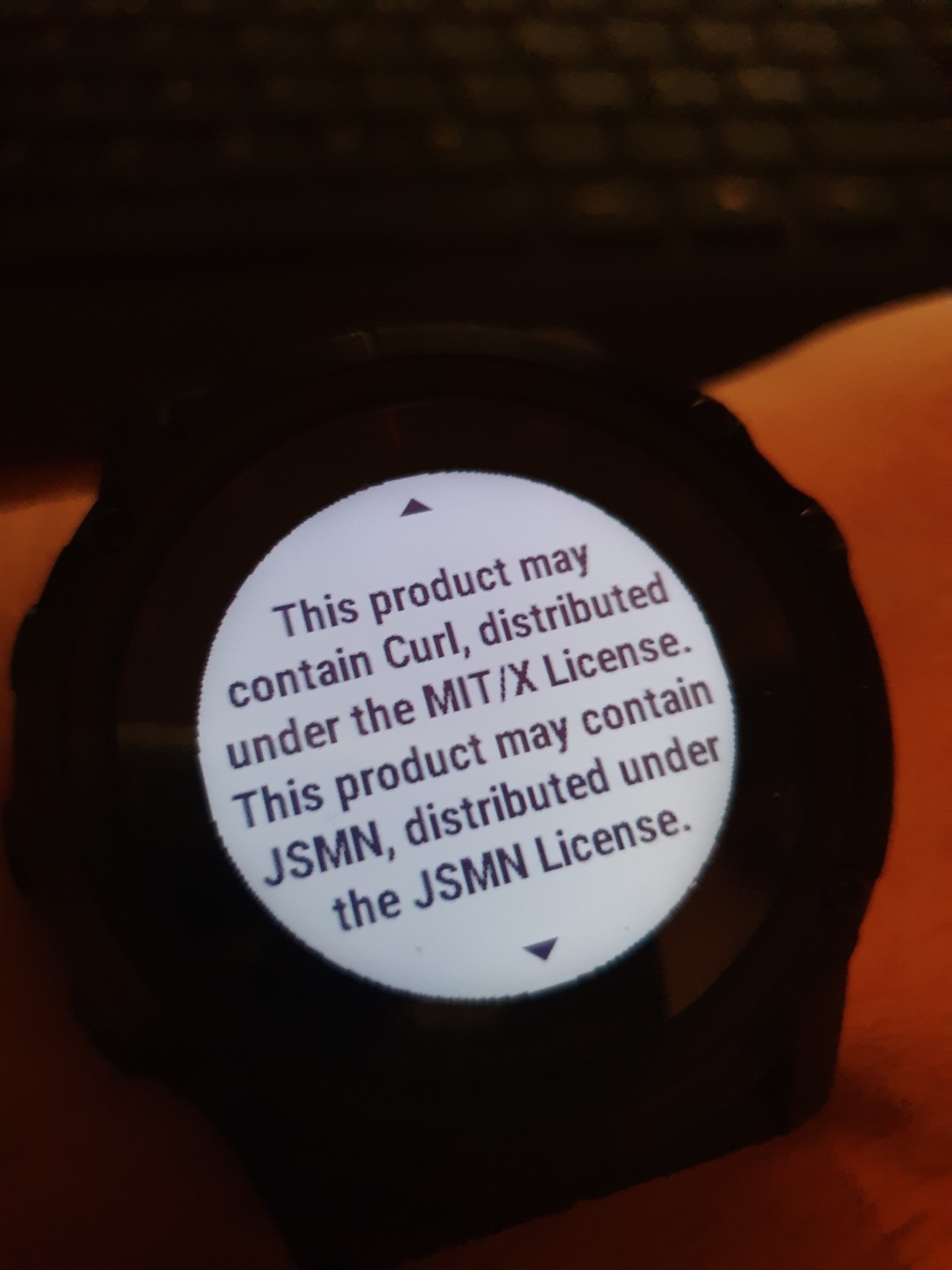

Garmin Fenix 5X Plus runs curl (thanks to Jonas Björk)

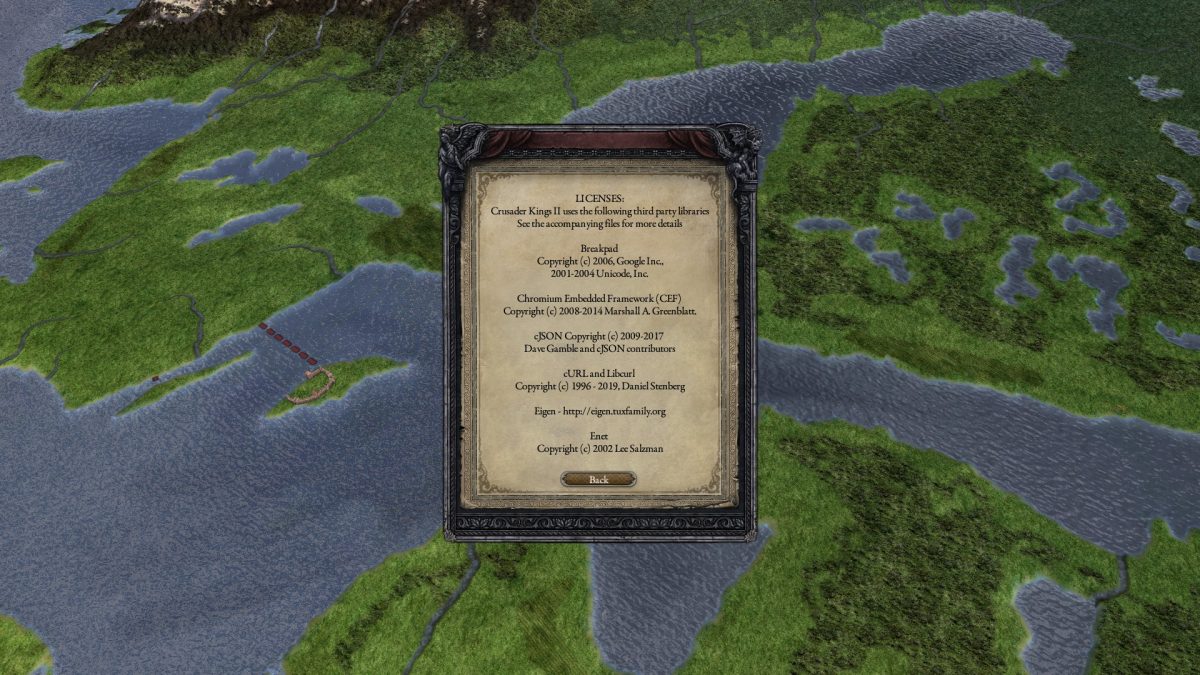

Crusader Kings II uses curl (thanks to Frank Gevaerts)

DiRT Rally 2.0 (PlayStation 4 version) uses curl (thanks to Roman)

Microsoft Flight Simulator uses libcurl. Thanks to Till von Ahnen.

Google Photos on Android uses curl.

Crusader Kings III uses curl (thanks to Frank Gevaerts)

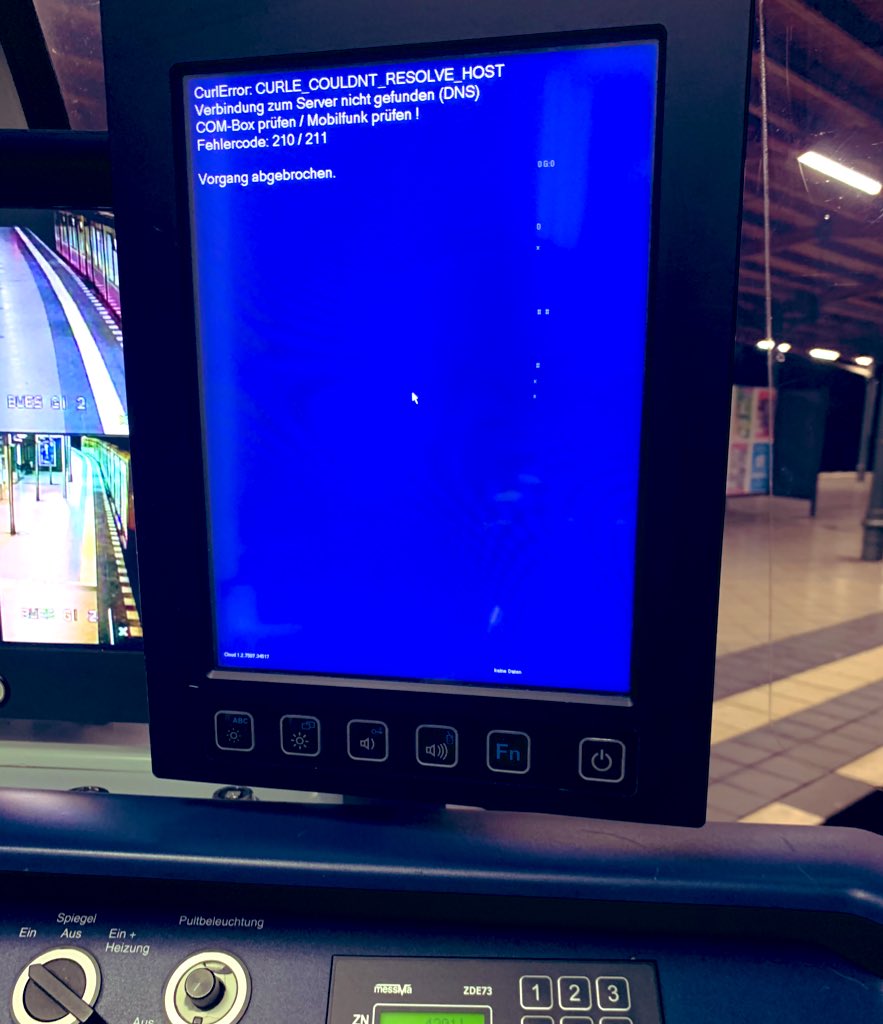

The S-Bahn train in Berlin uses curl! (Thanks to @_Bine__)

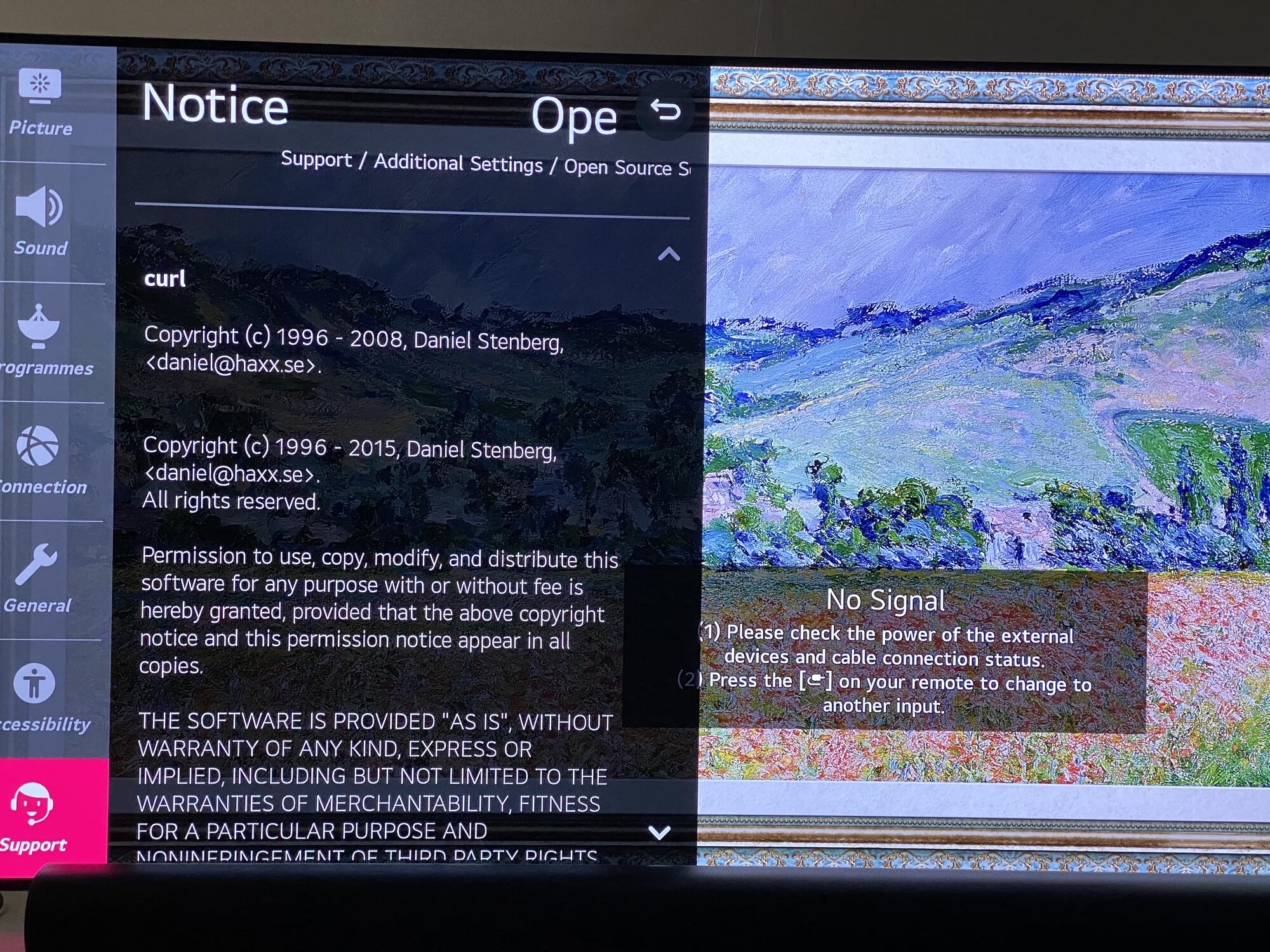

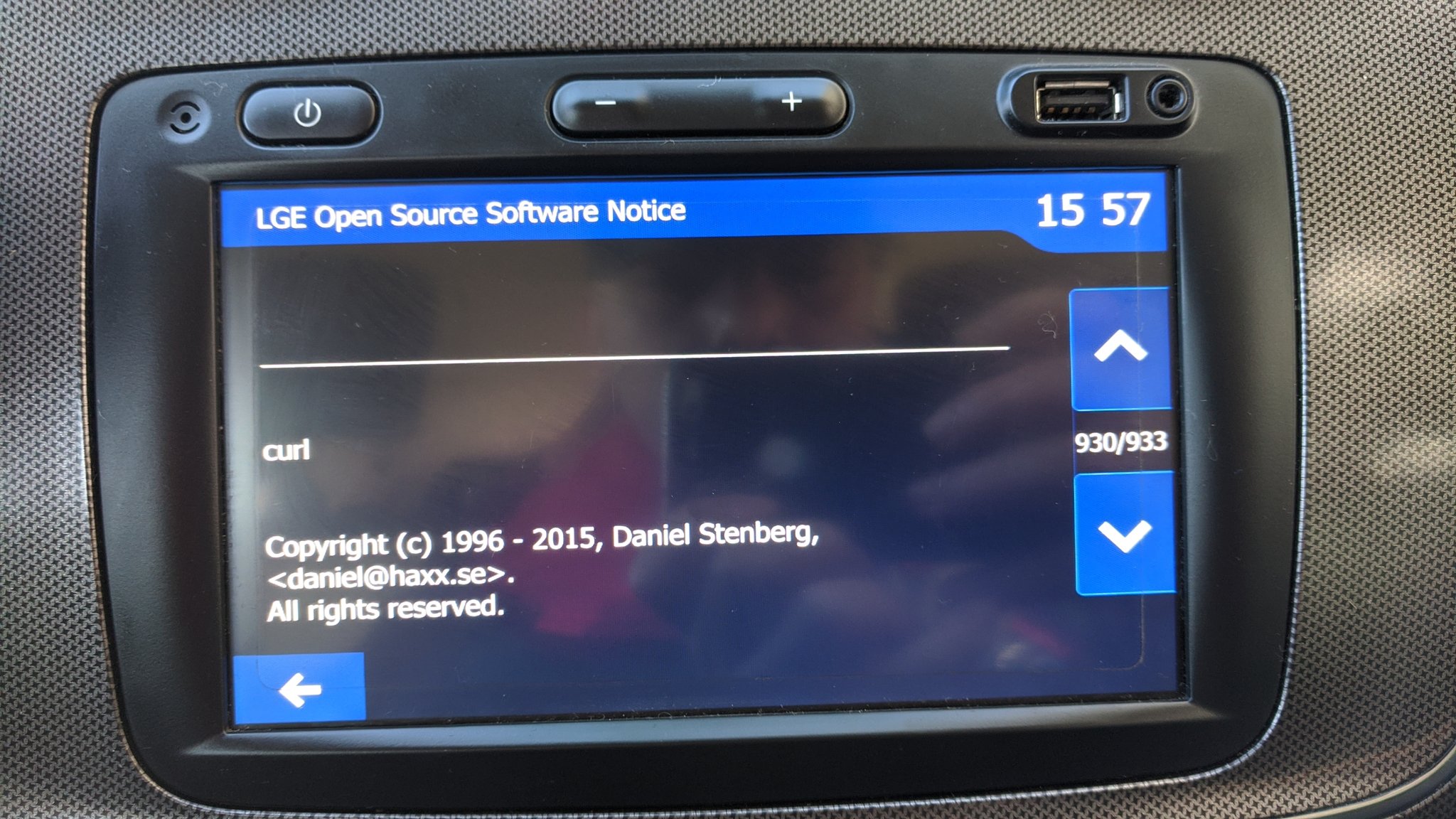

LG uses curl in its TVs.

Garmin Forerunner 245 also runs curl (Thanks to Martin)

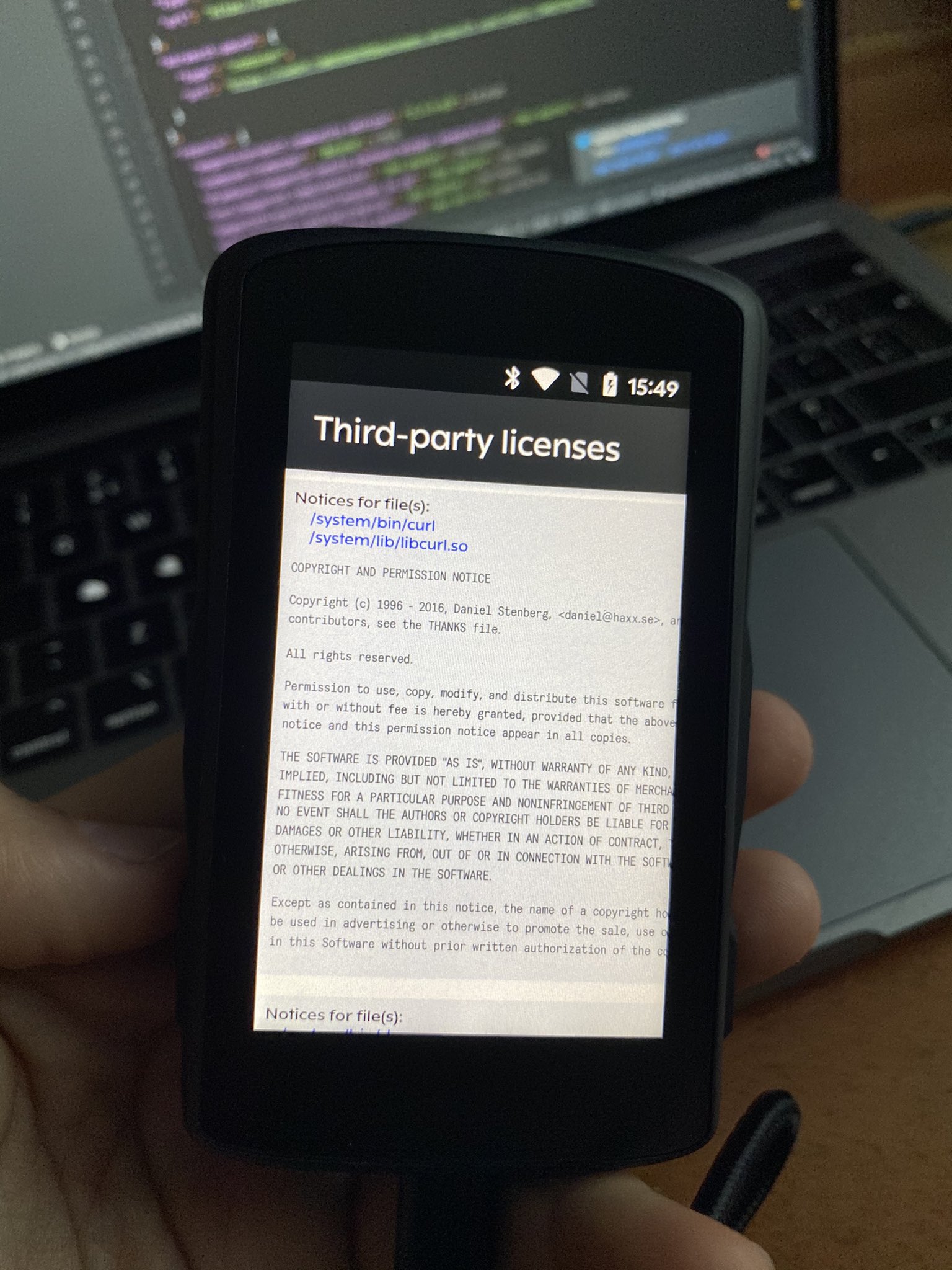

The bicycle computer Hammerhead Karoo v2 (thanks to Adrián Moreno Peña)

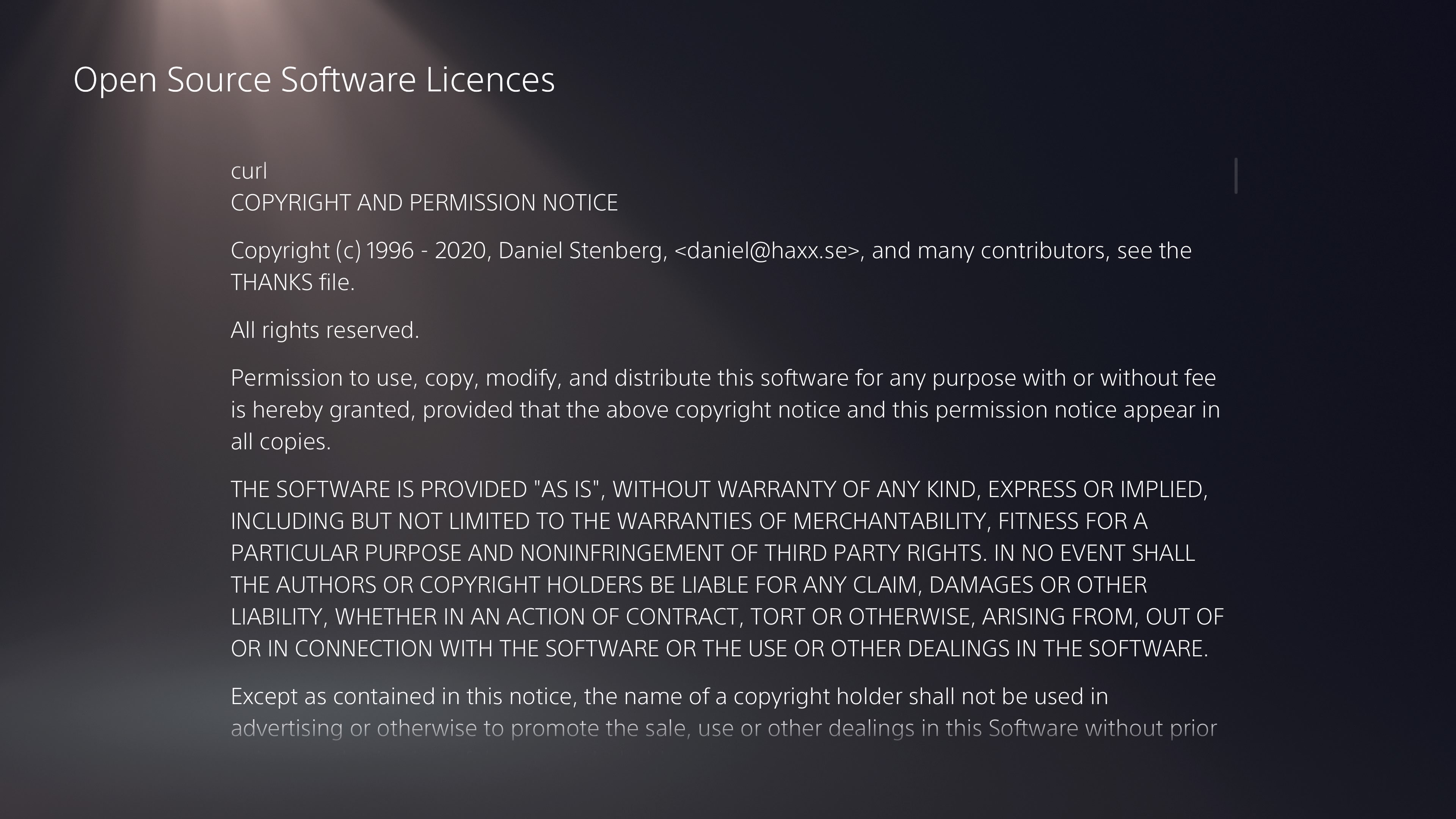

The PlayStation 5 uses curl (thanks to djs)

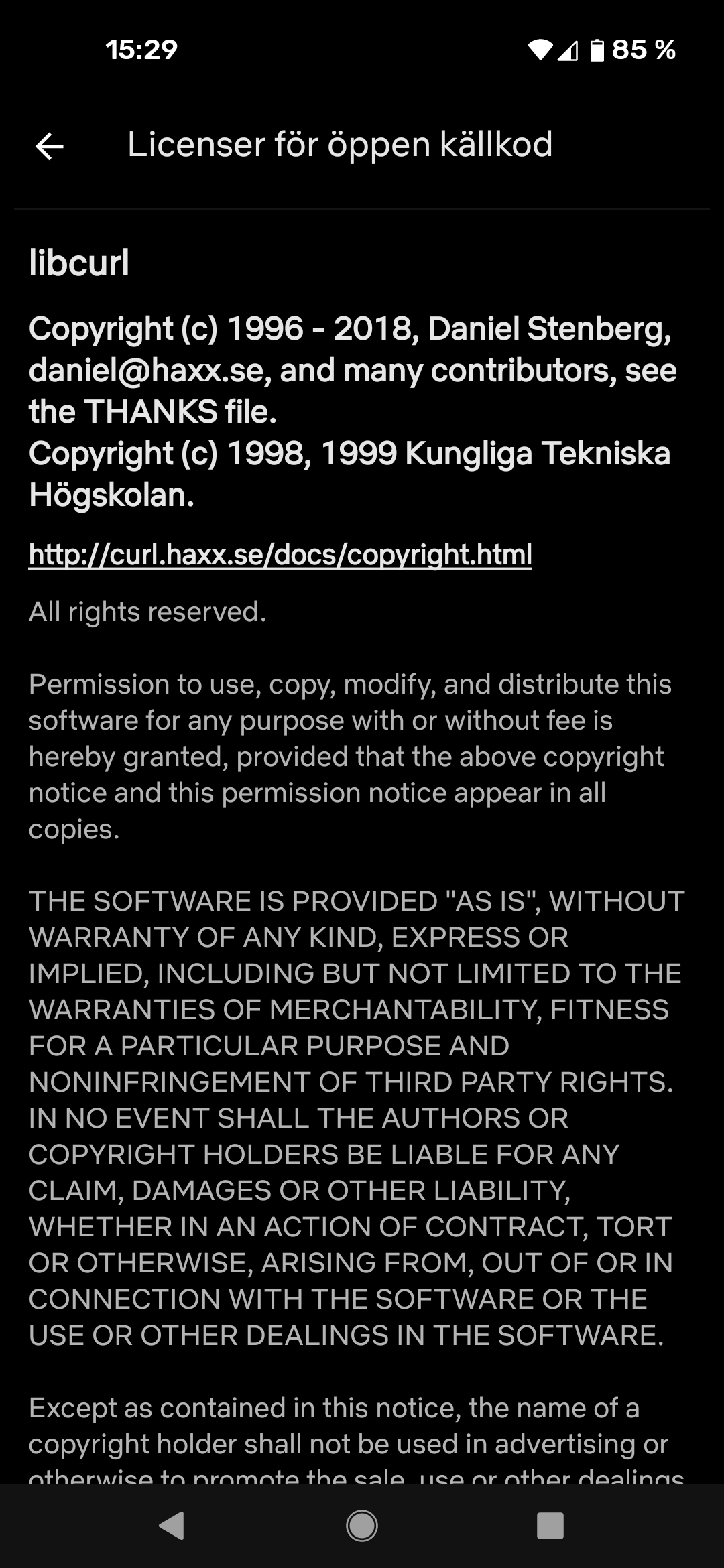

The Netflix app on Android uses libcurl (screenshot from January 29, 2021). The phone is set to Swedish, hence the title of the screen.

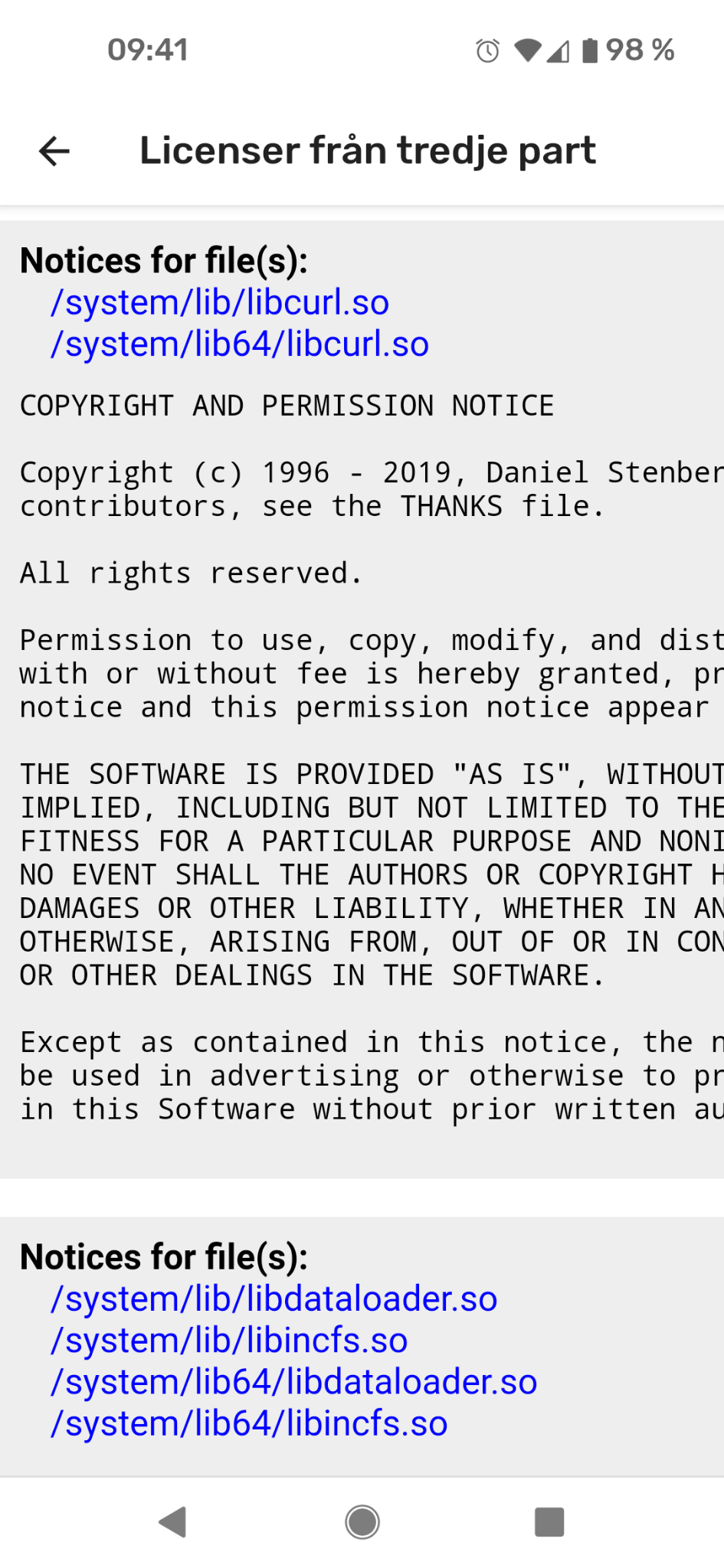

(Google) Android 11 uses libcurl. Screenshot from a Pixel 4a 5G.

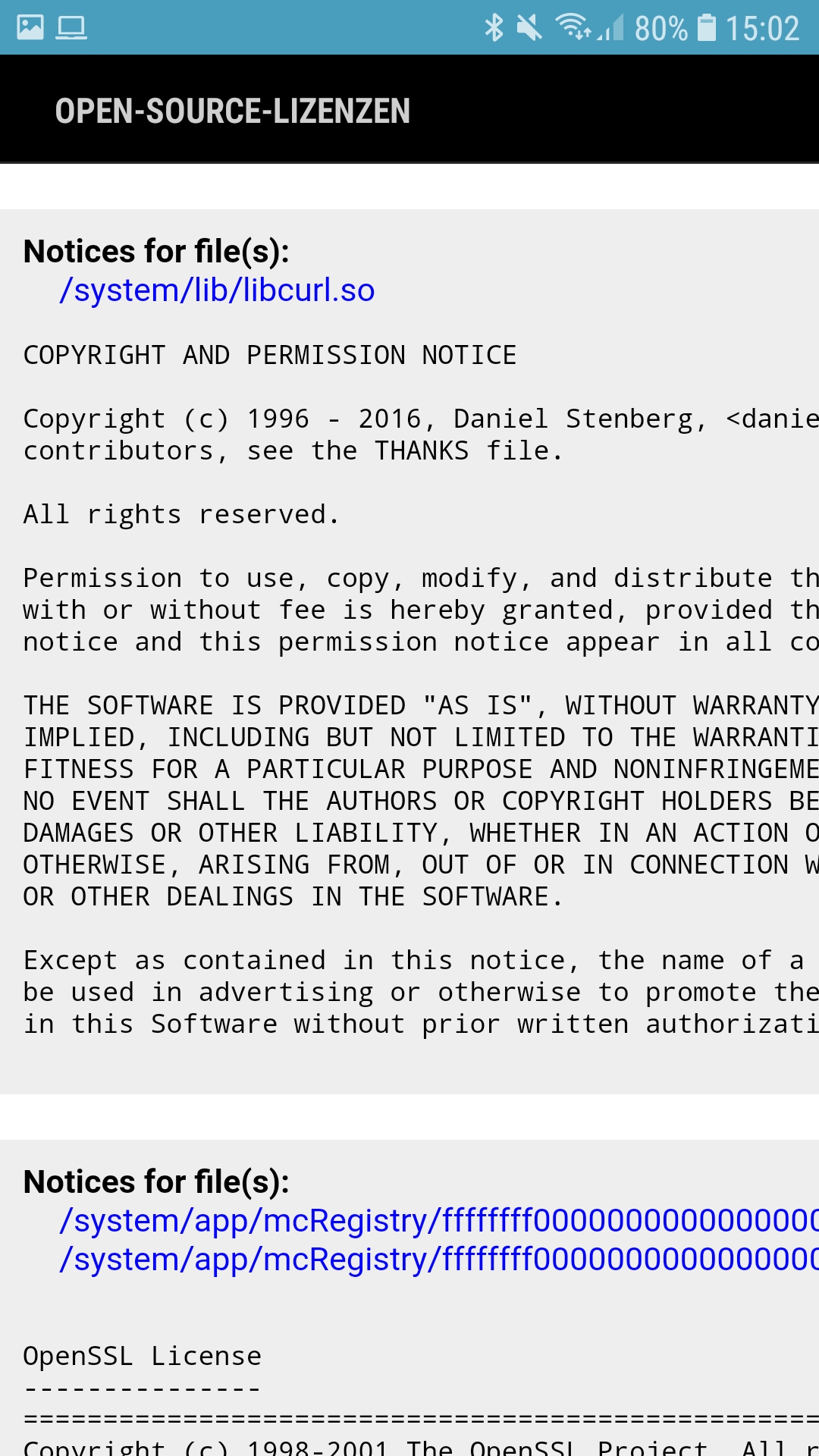

Samsung's Android uses libcurl, presumably in every model…

The ending sequence as seen on YouTube.

A Samsung TV set to Swedish, showing a curl error code. Thanks to Thomas Svensson.

Polestar 2 (thanks to Robert Friberg for the picture)

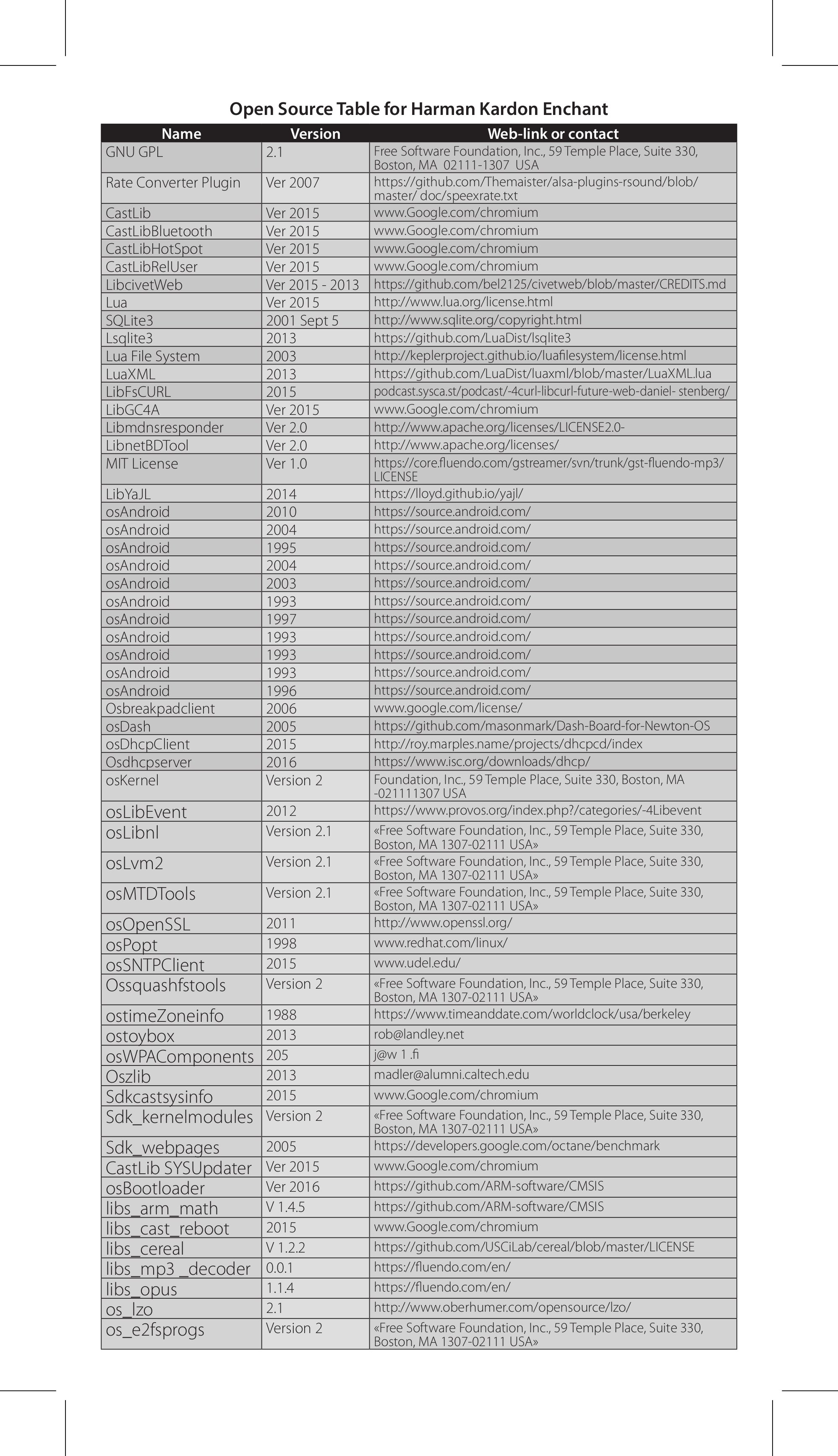

Harman Kardon uses libcurl in their Enchant soundbars (thanks to Fabien Benetou). The name and the link in that list are hilarious though.

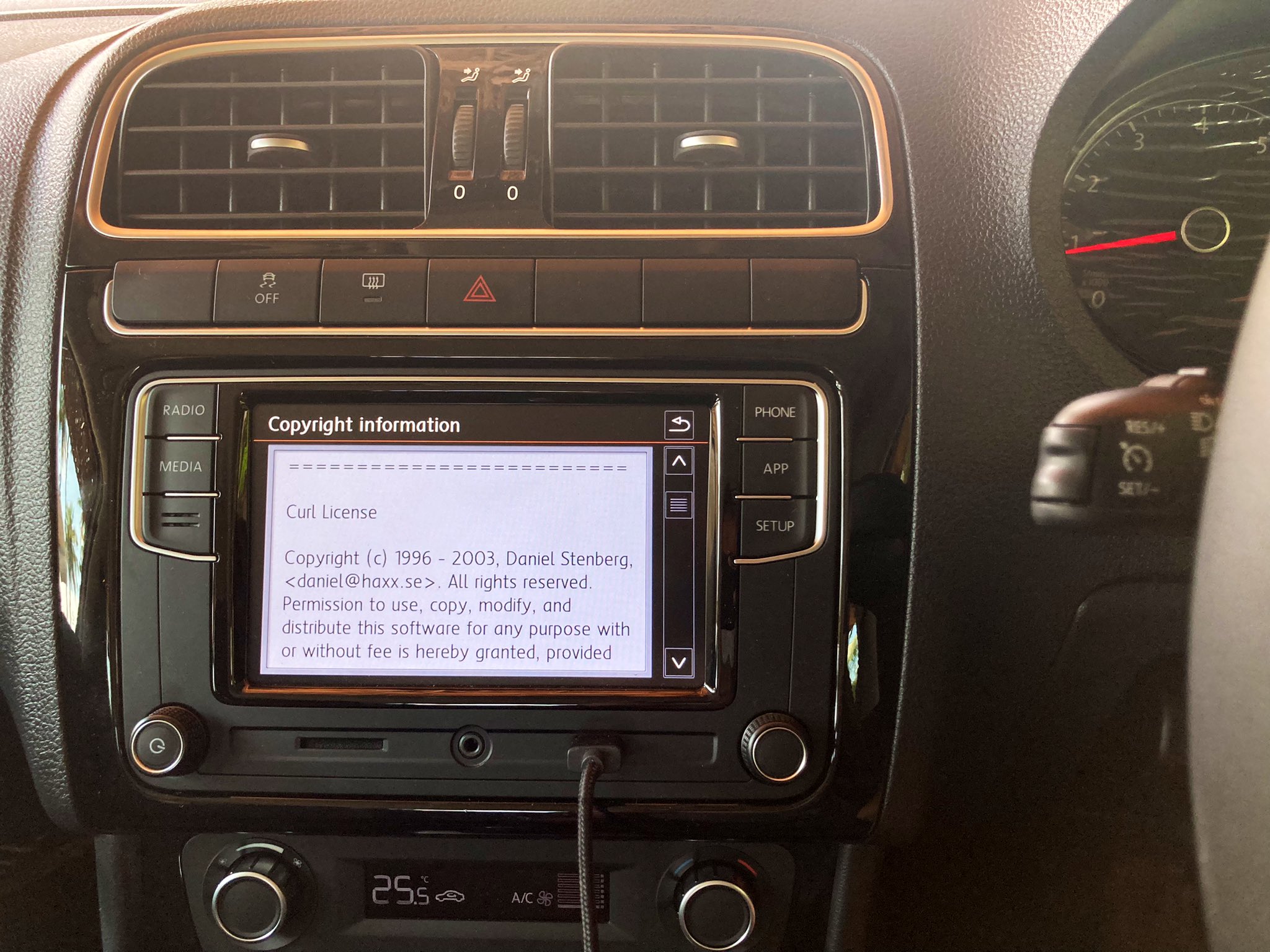

VW Polo running curl (Thanks to Vivek Selvaraj)

A 2021 BMW R 1250 GS motorcycle (Thanks to @growse)

Baldur’s Gate 3 uses libcurl (Thanks to Akhlis)

An Andersson TV using curl (Thanks to Björn Stenberg)

Ghost of Tsushima – a game. (Thanks to Patrik Svensson)

Sonic Frontiers (Thanks to Esoteric Emperor)

The KAON NS1410 (set-top box), possibly also called Mirada Inspire3 or Broadcom Nexus. (Thanks to Aksel)

The Panasonic DC-GH5 camera. (Thanks fasterthanlime)

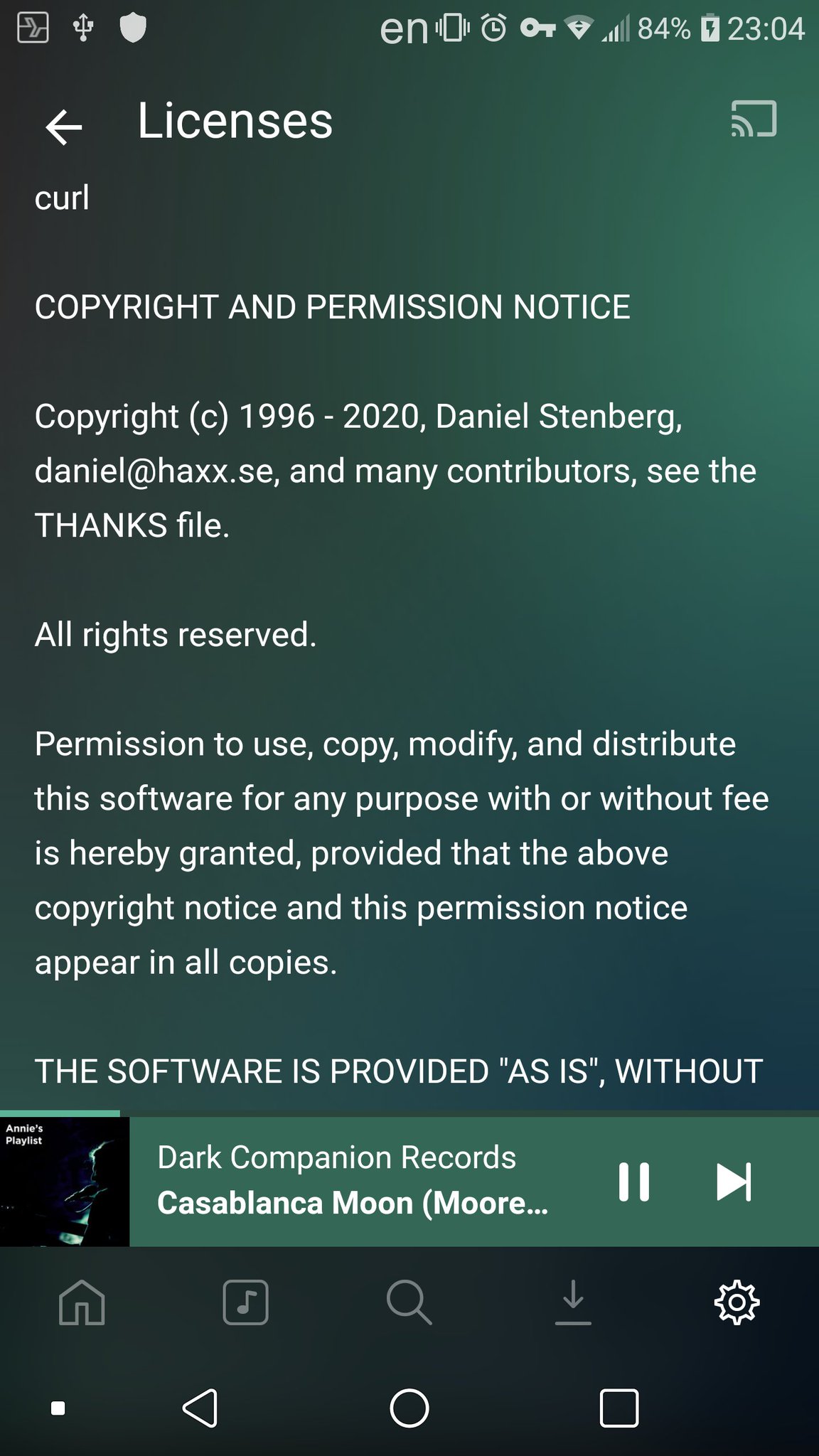

Plexamp, the Android app. (Thanks Fabio Loli)

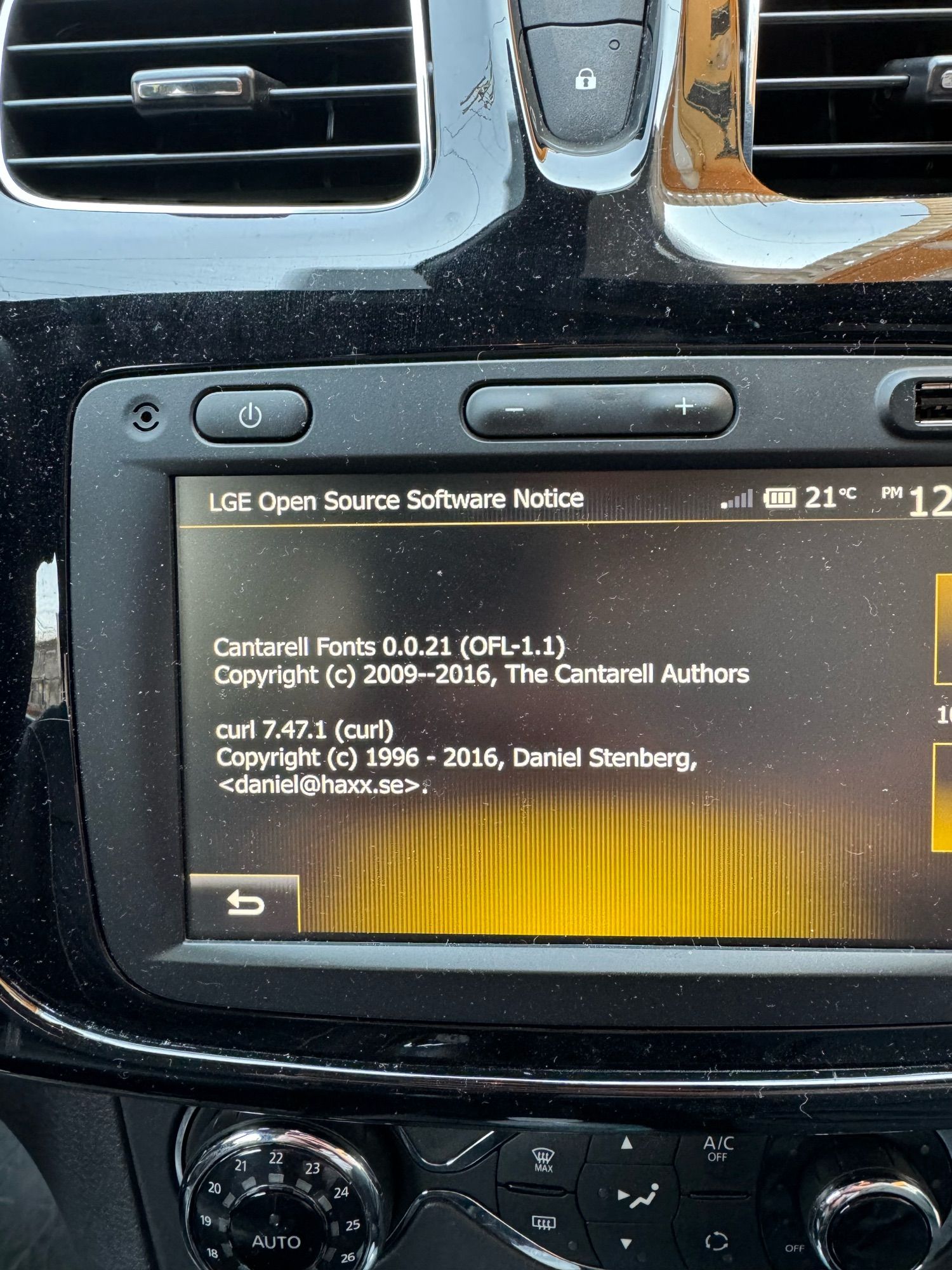

The Dacia Sandero Stepway car (Thanks Adnane Belmadiaf)

The Garmin Venu Sq watch (Thanks gergo)

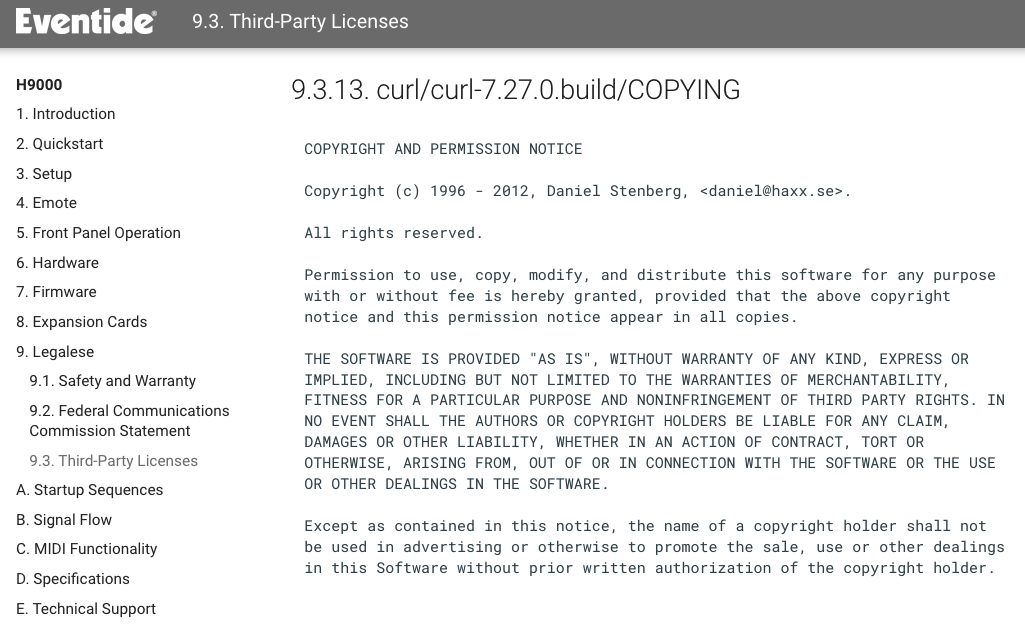

The Eventide H9000, a high-end audio processing and effects device, runs curl. (Thanks to John Baylies)

Diablo IV (Thanks to John Angelmo)

The Siemens EQ900 espresso machine runs curl. The screenshots below are from a German version.

Thermomix TM6 by Vorwerk (Thanks to Uli H)

The Grandstream GXP2160 uses curl (thanks to Cameron Katri)

Assassin’s Creed Mirage (Thanks to Patrik Svensson)

Assassin’s Creed Shadows (Thanks to Mauricio Teixeira)

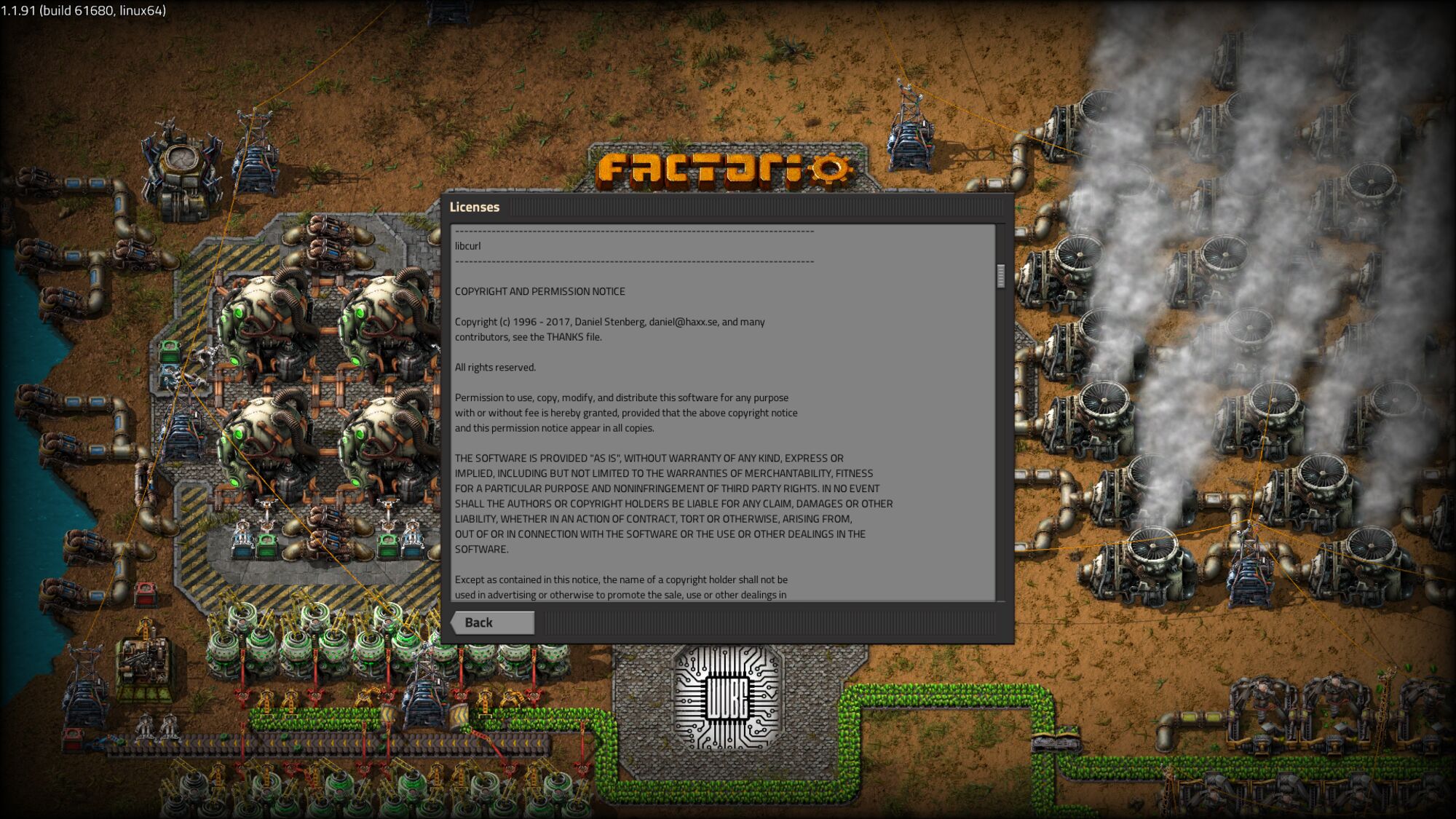

Factorio (Thanks to Der Große Böse Wolff)

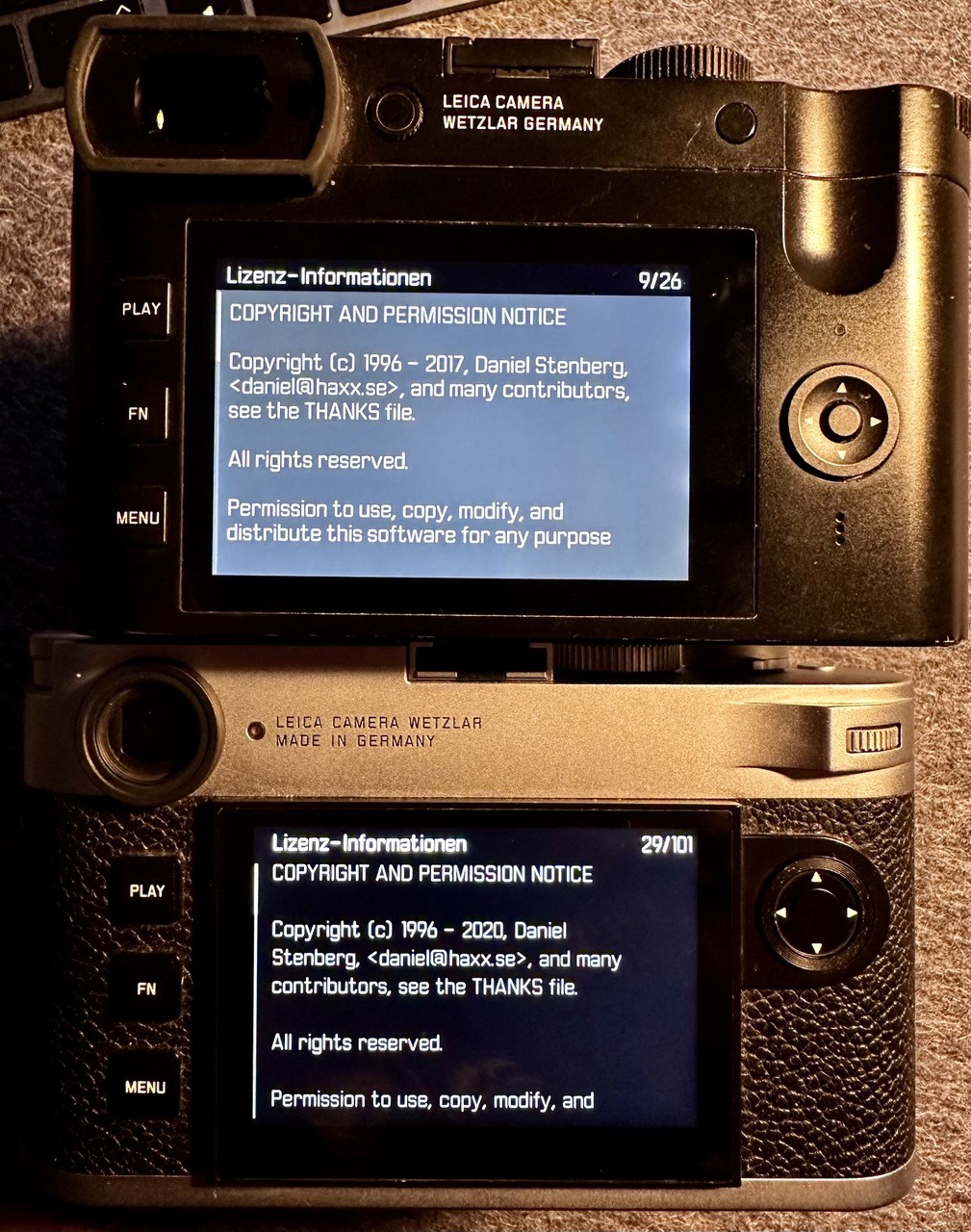

Leica Q2 and Leica M11 use curl (Thanks to PattaFeuFeu)

Renault Logan (thanks to Aksel)

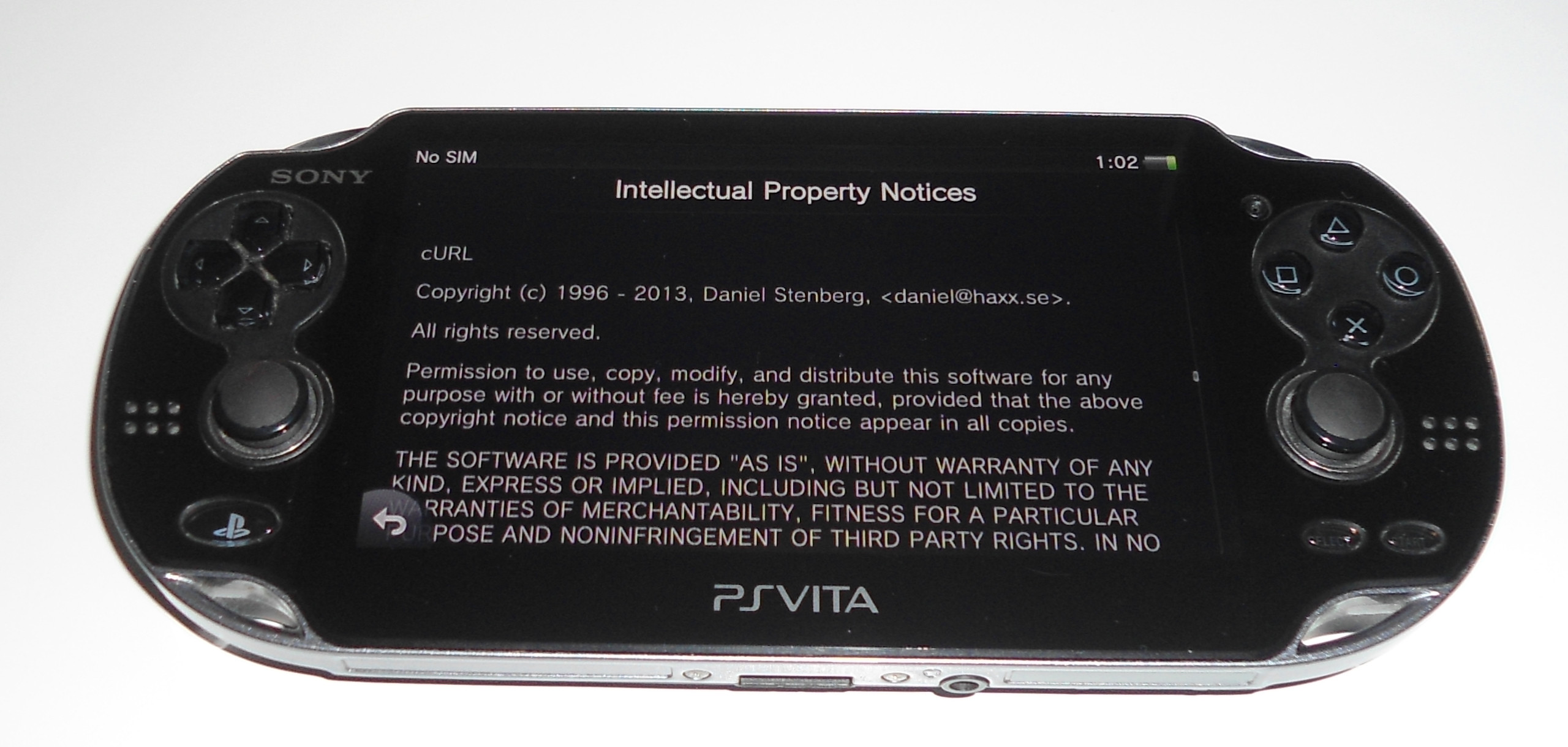

The original model of the PlayStation Vita (PCH-1000, 3G) (thanks to ml)

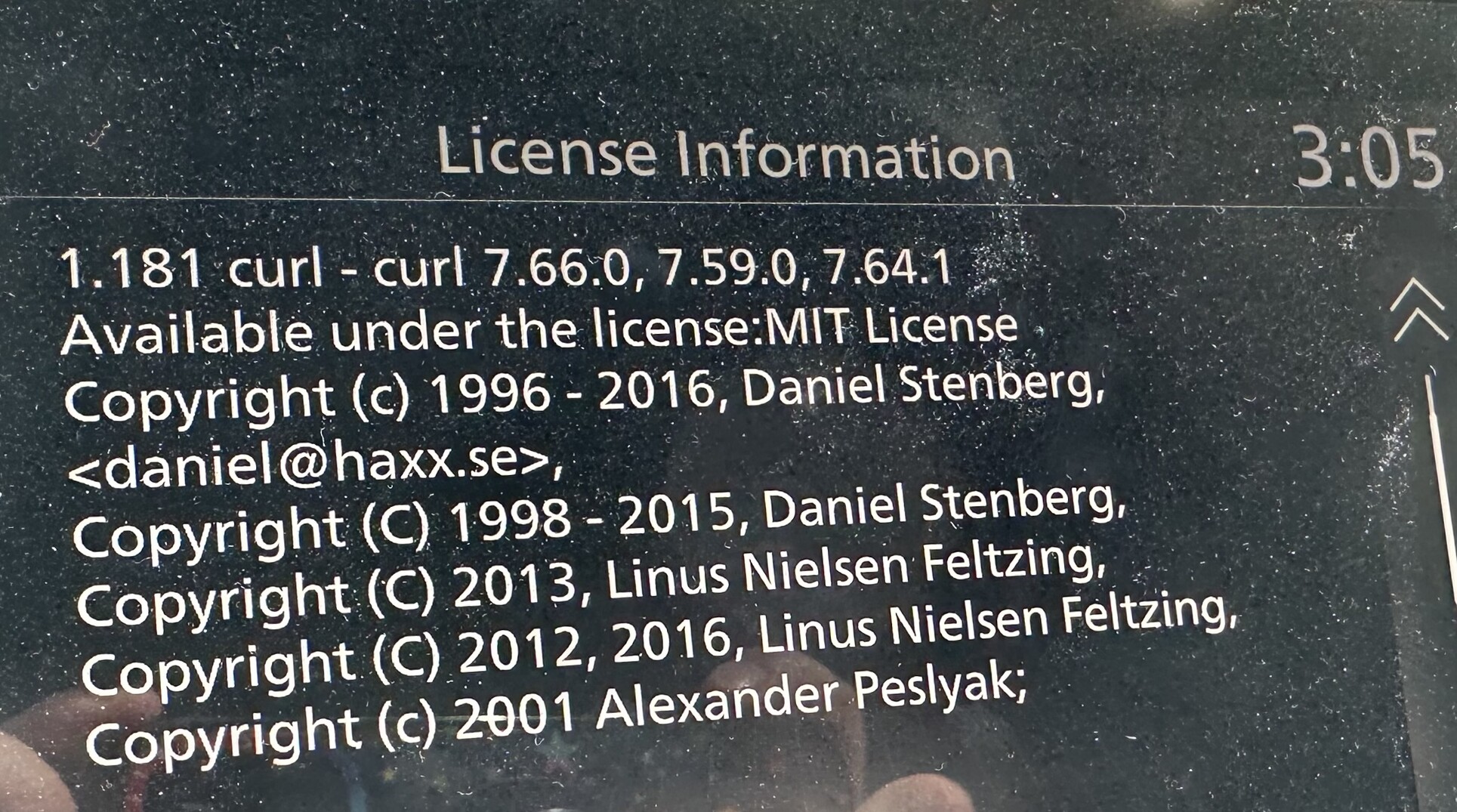

The 2023 Infiniti QX80, Premium Select trim level (an SUV)

Renault Scenic (thanks to Taxo Rubio)

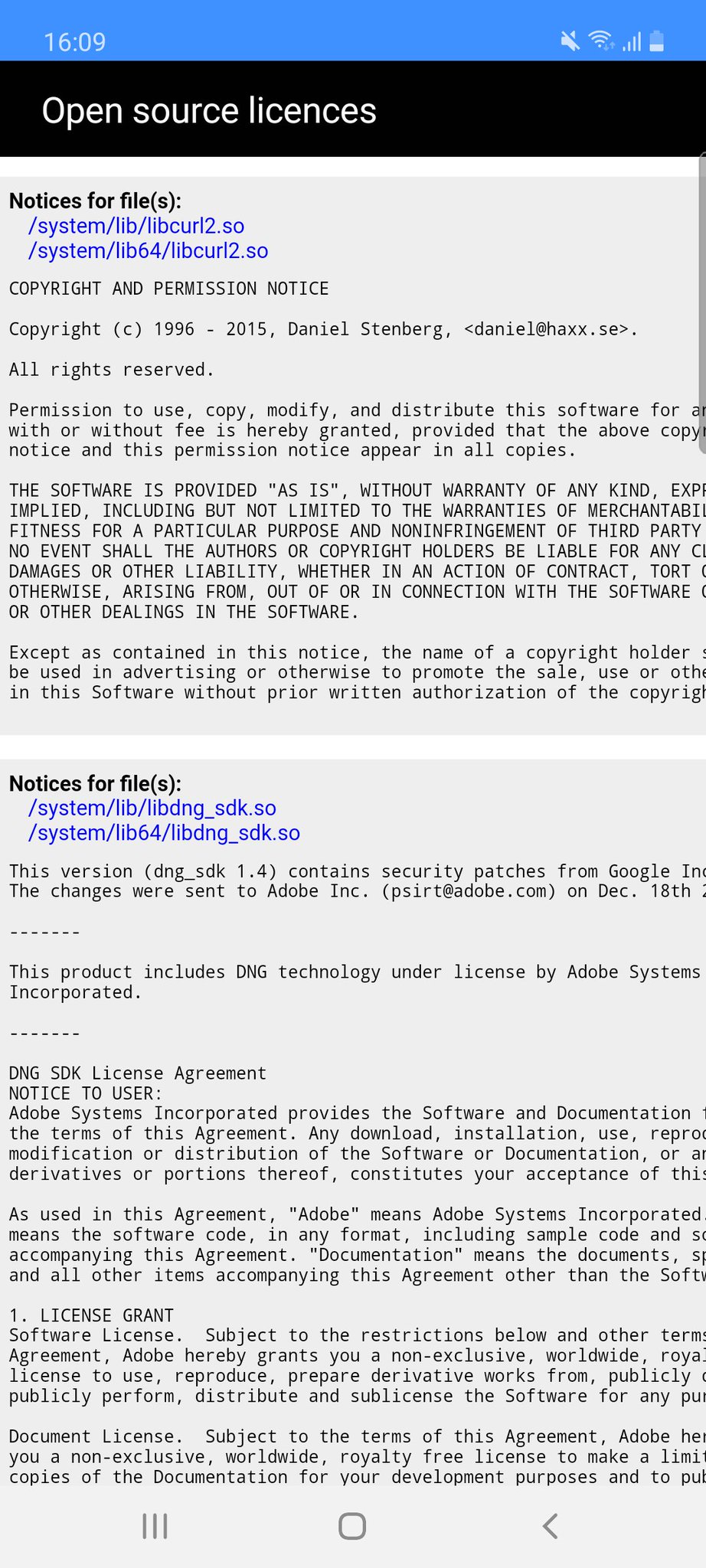

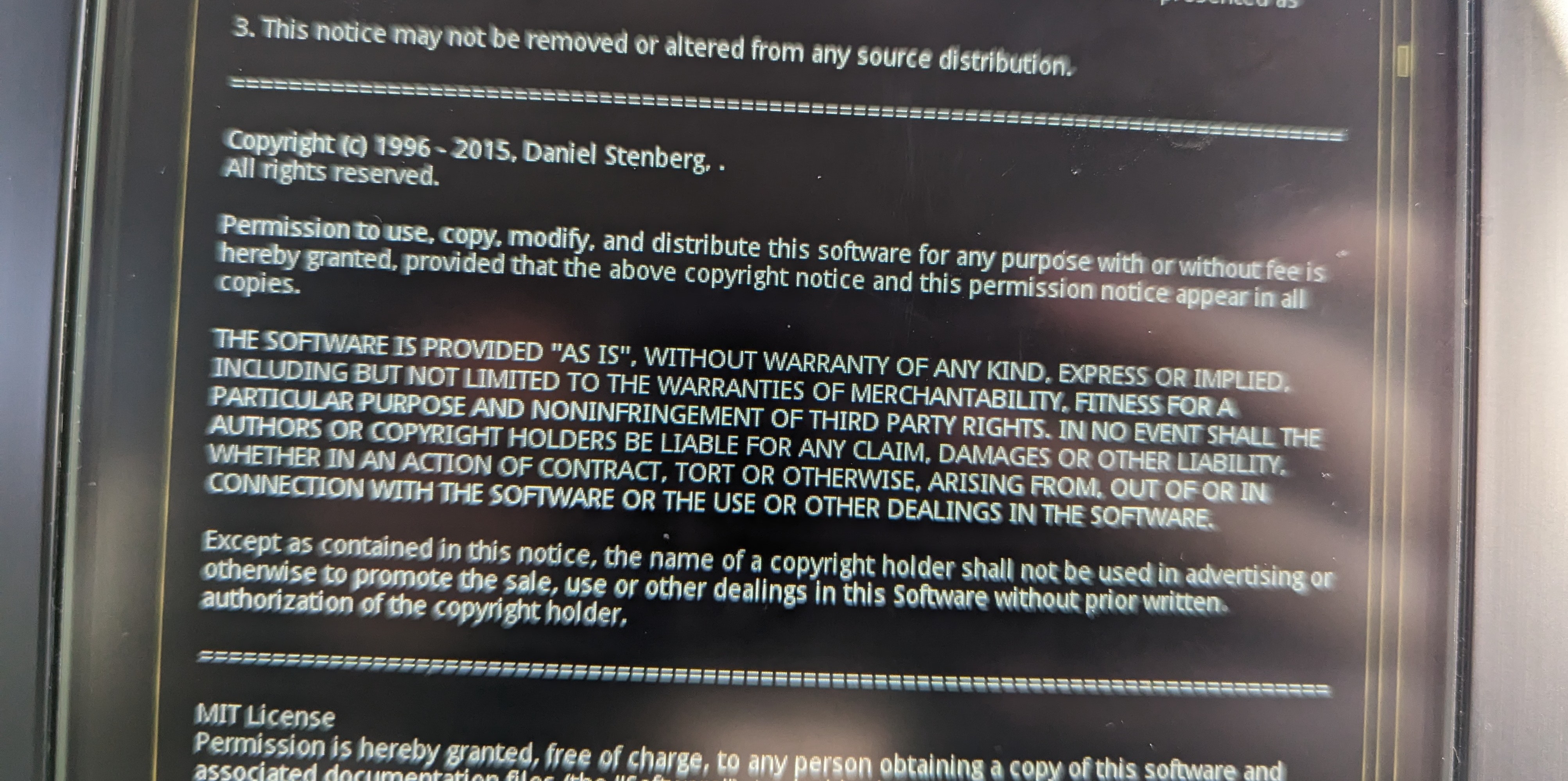

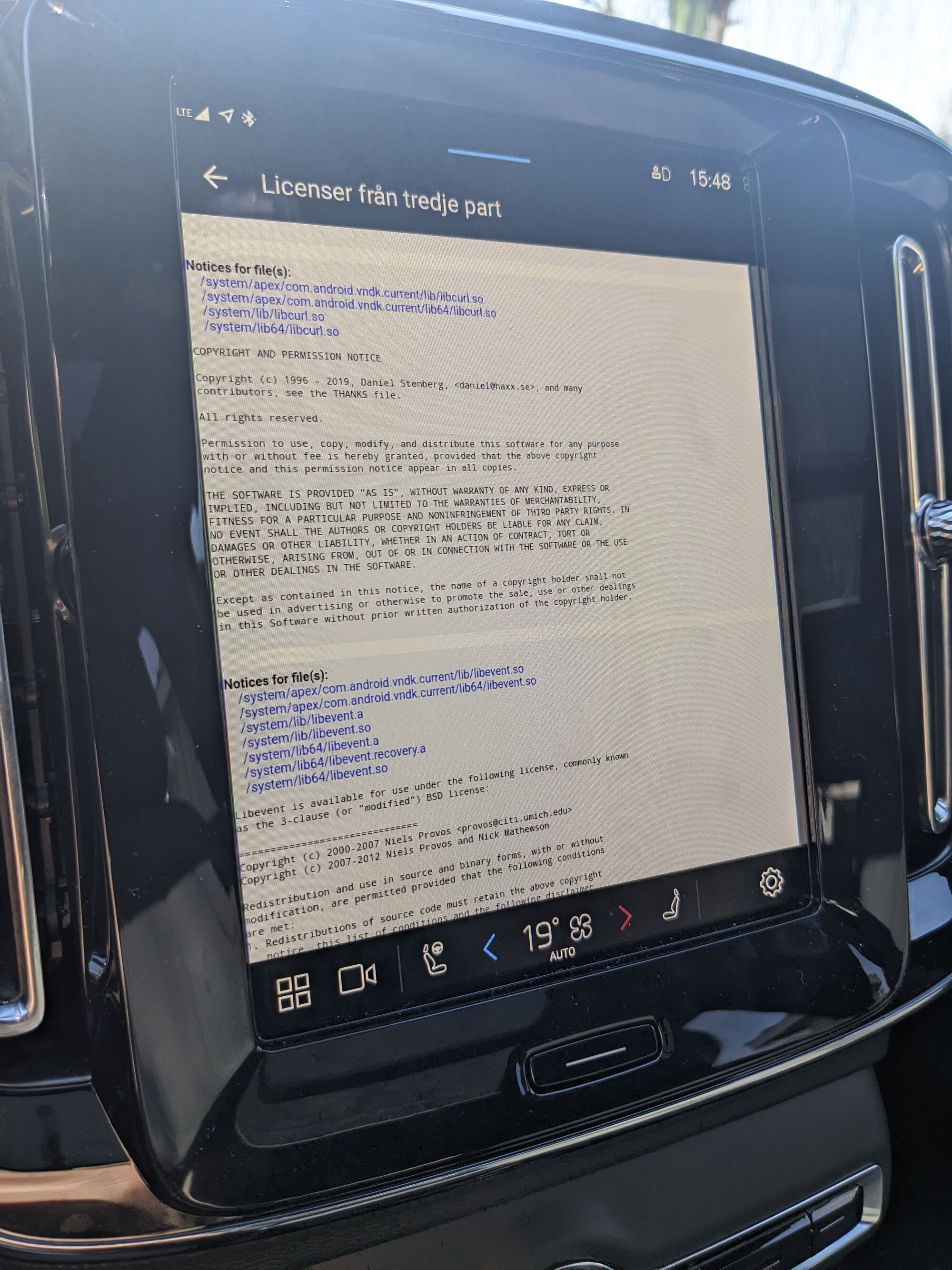

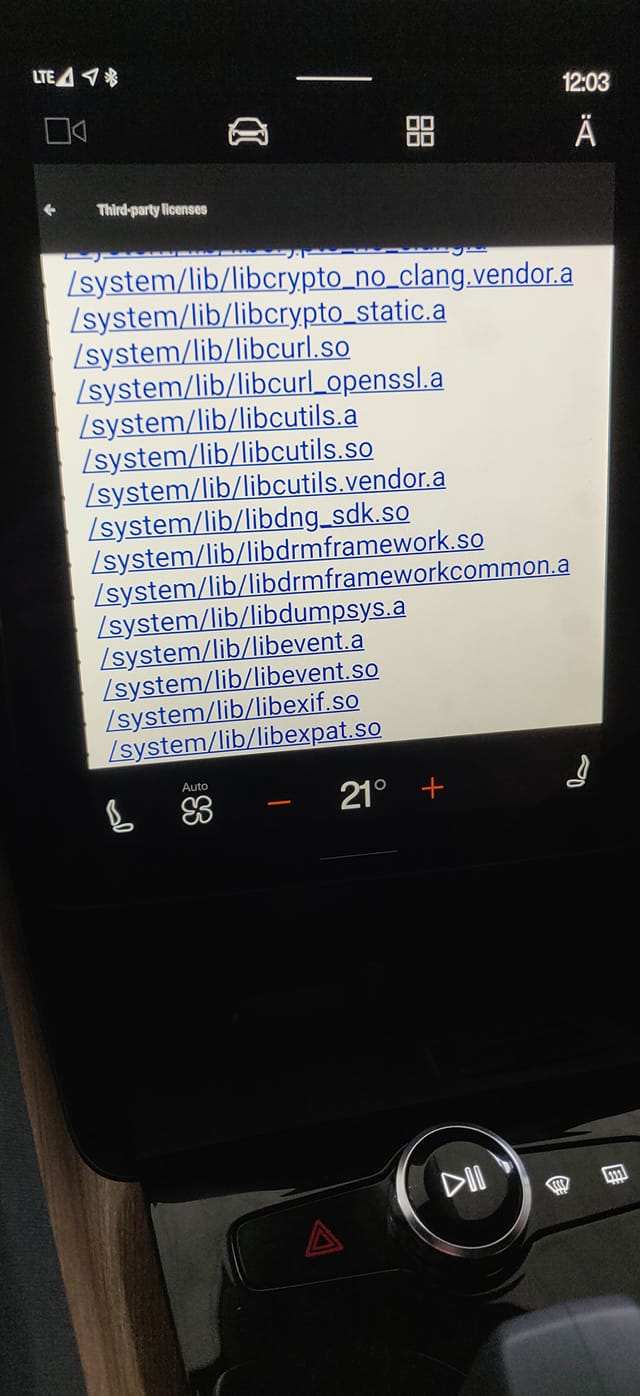

Volvo XC40 Recharge 2024 edition obviously features a libcurl from 2019…

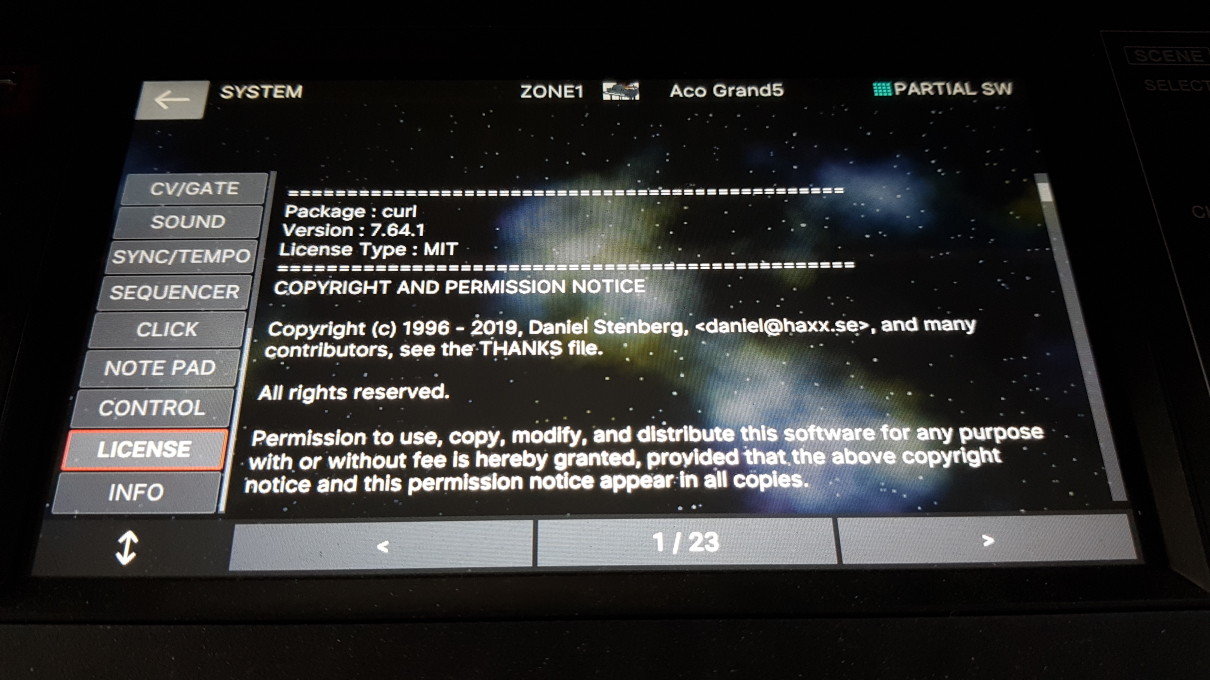

The Roland Fantom 6 synthesizer keyboard runs curl (Thanks Anopka)

Used in the Mini Countryman (the car) (Thanks Alejandro Pablo Revilla)

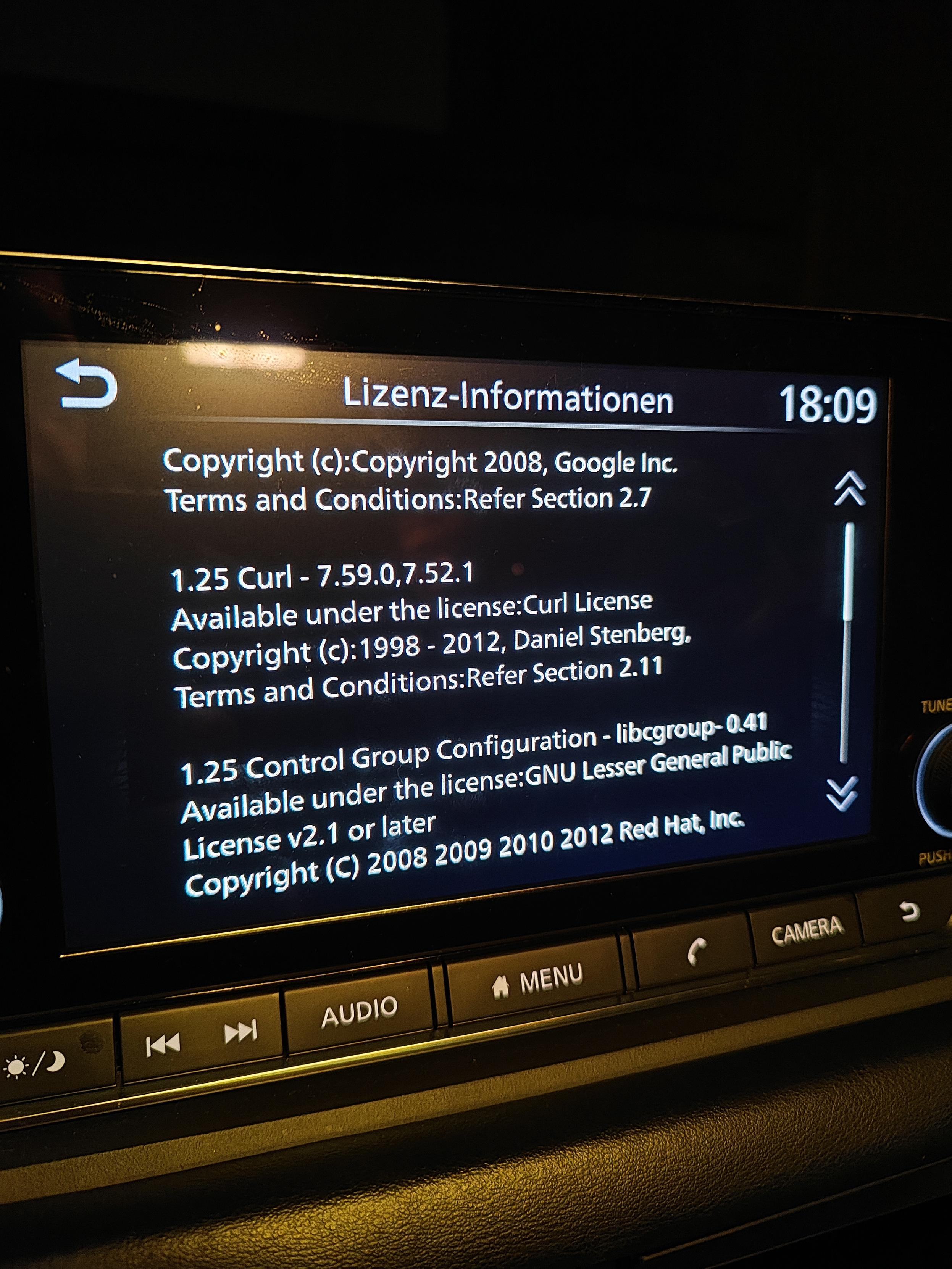

Nissan Qashqai MY21 (Thanks Michele Adduci)

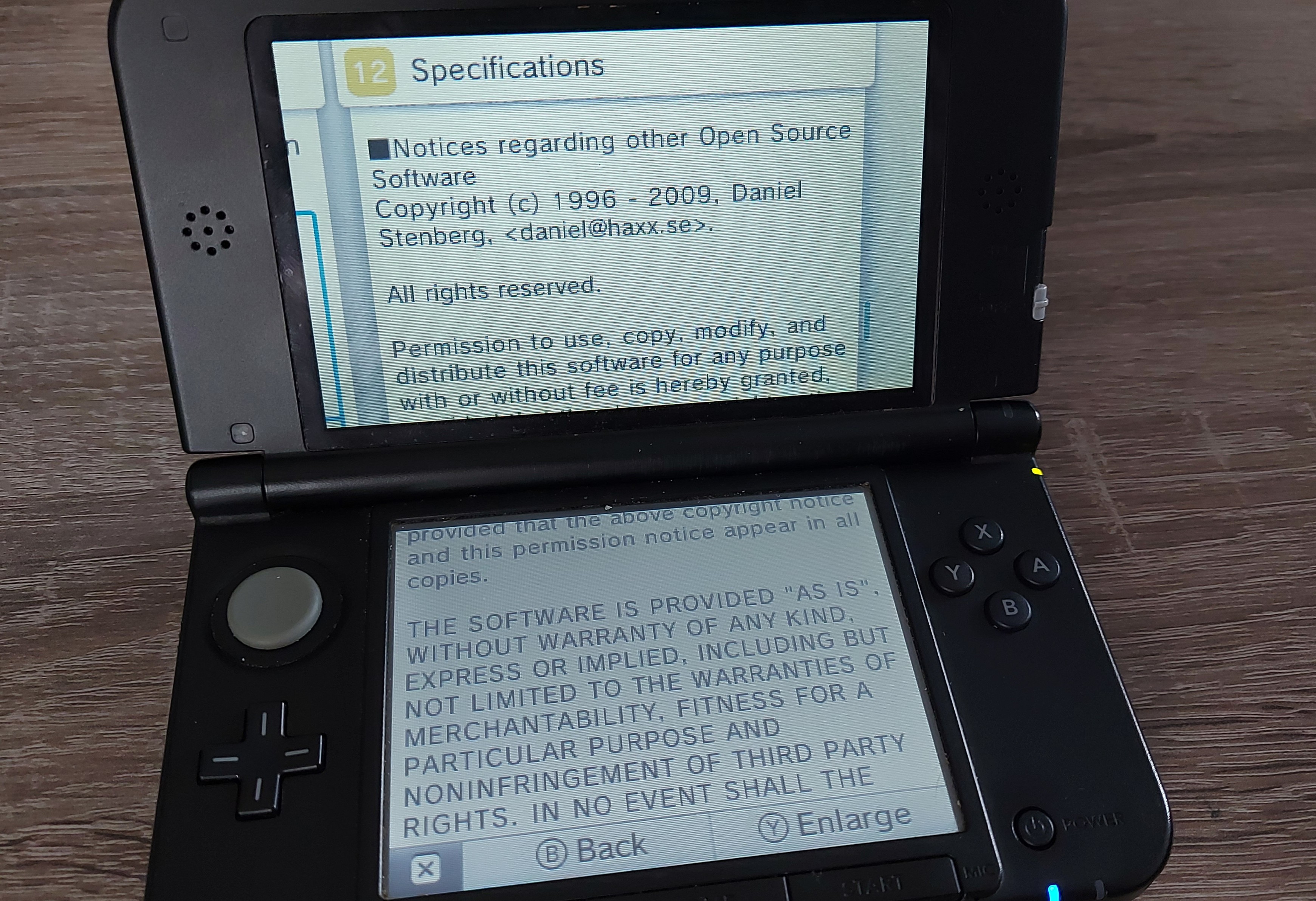

The Nintendo 3DS internet browser probably runs curl. It does not say so, but the license looks like the curl one. (Thanks to Marlon Pohl)

This is a Seat Leon (Thanks to Mormegil)

Chevrolet Tracker LTZ 2024 (Thanks Diego)

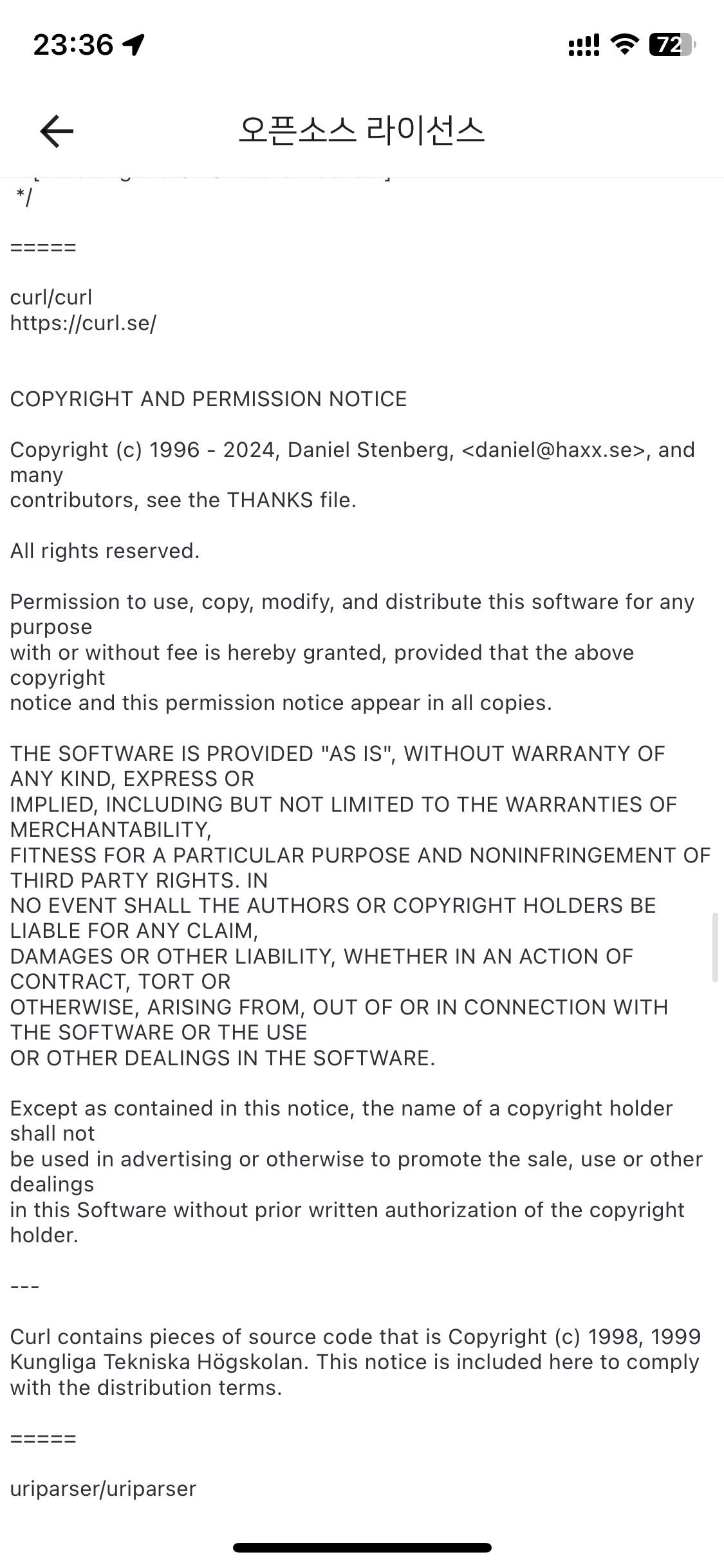

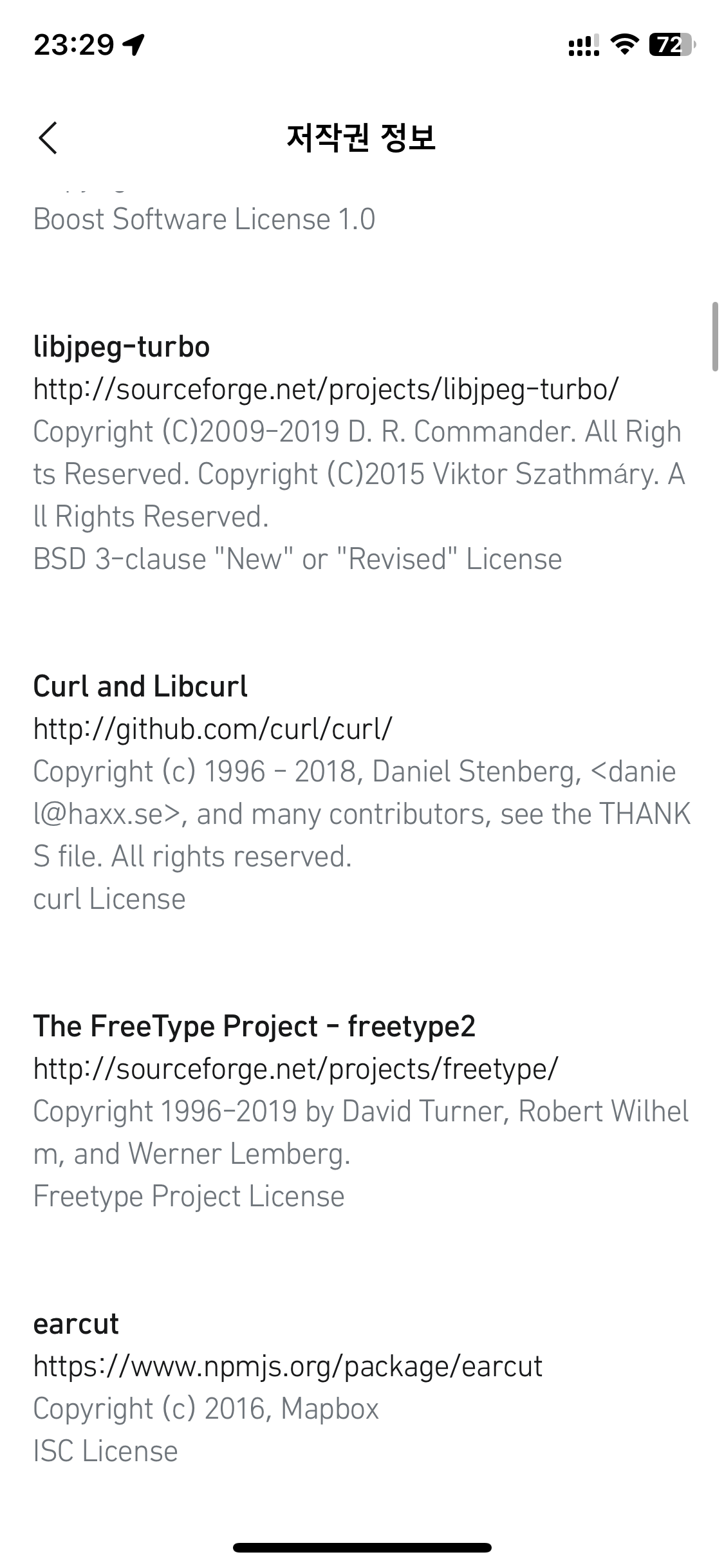

Used in Korean iOS apps. Left to right below: KakaoTalk, Naver and TMAP MOBILITY. Thanks to Hong Yongmin.

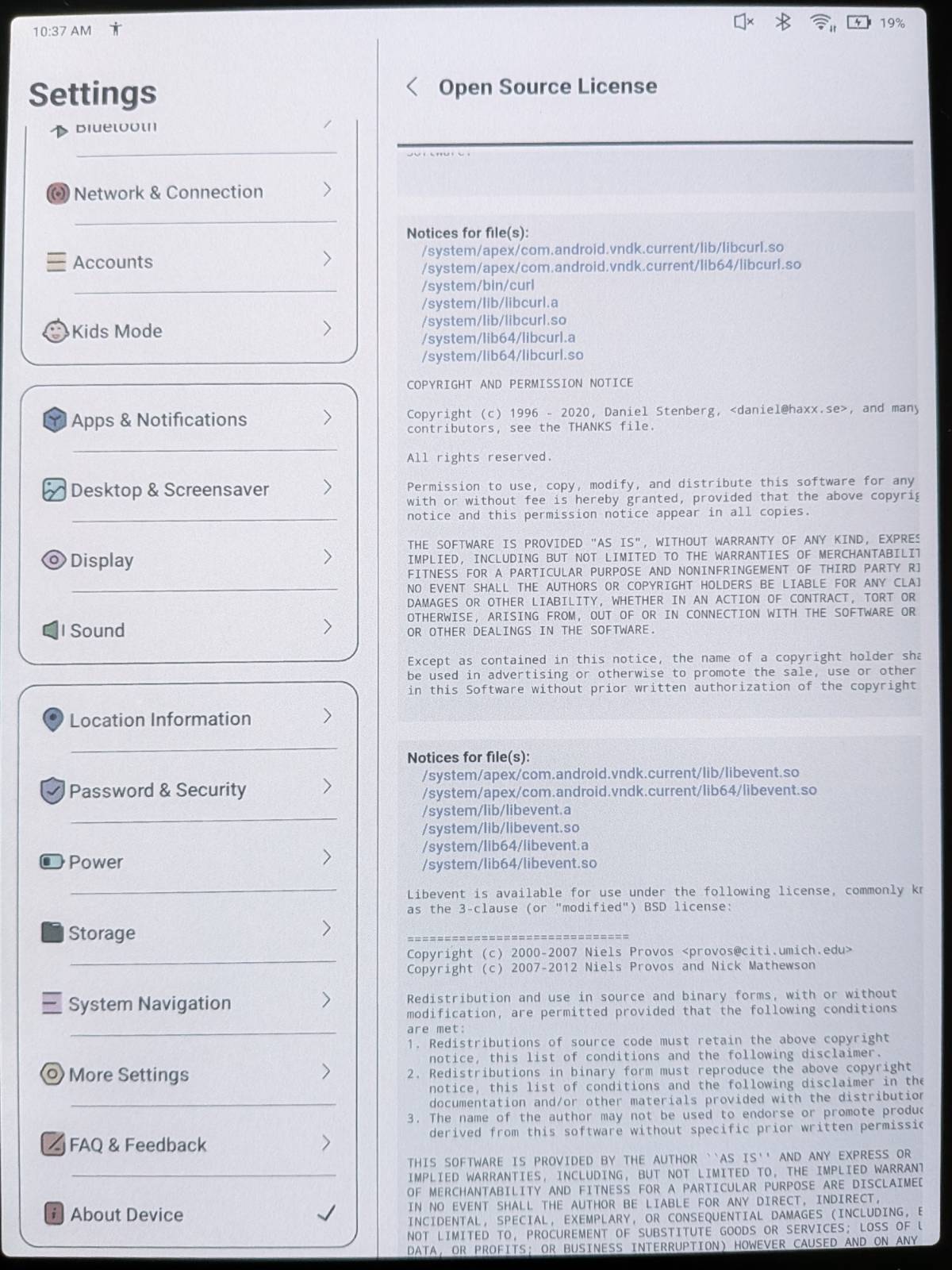

The BOOX 10.3″ Tab Ultra C Pro features curl. (Thanks Henrik Sandklef)

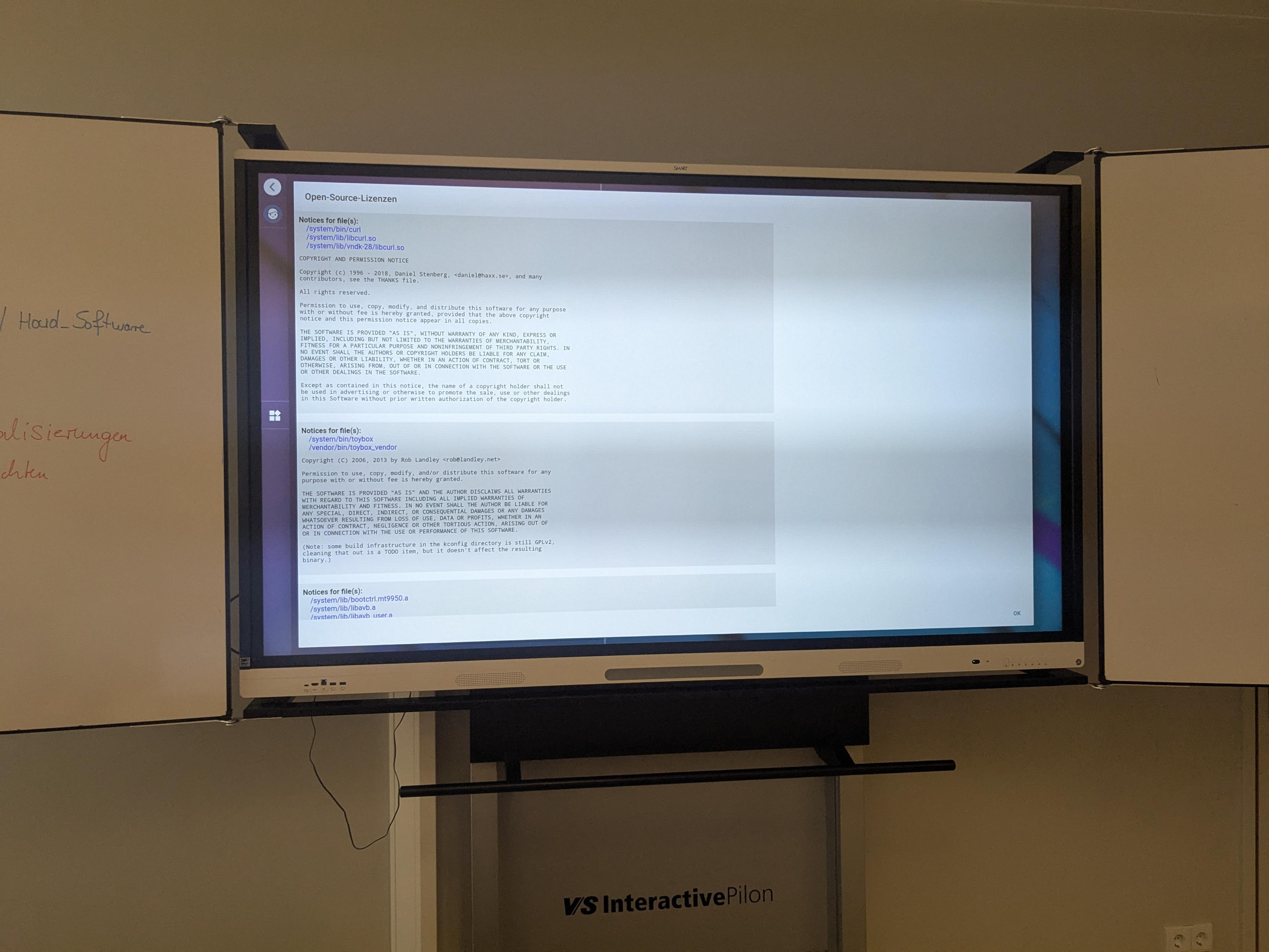

This SMART Interactive Display runs curl (Thanks Marcel)

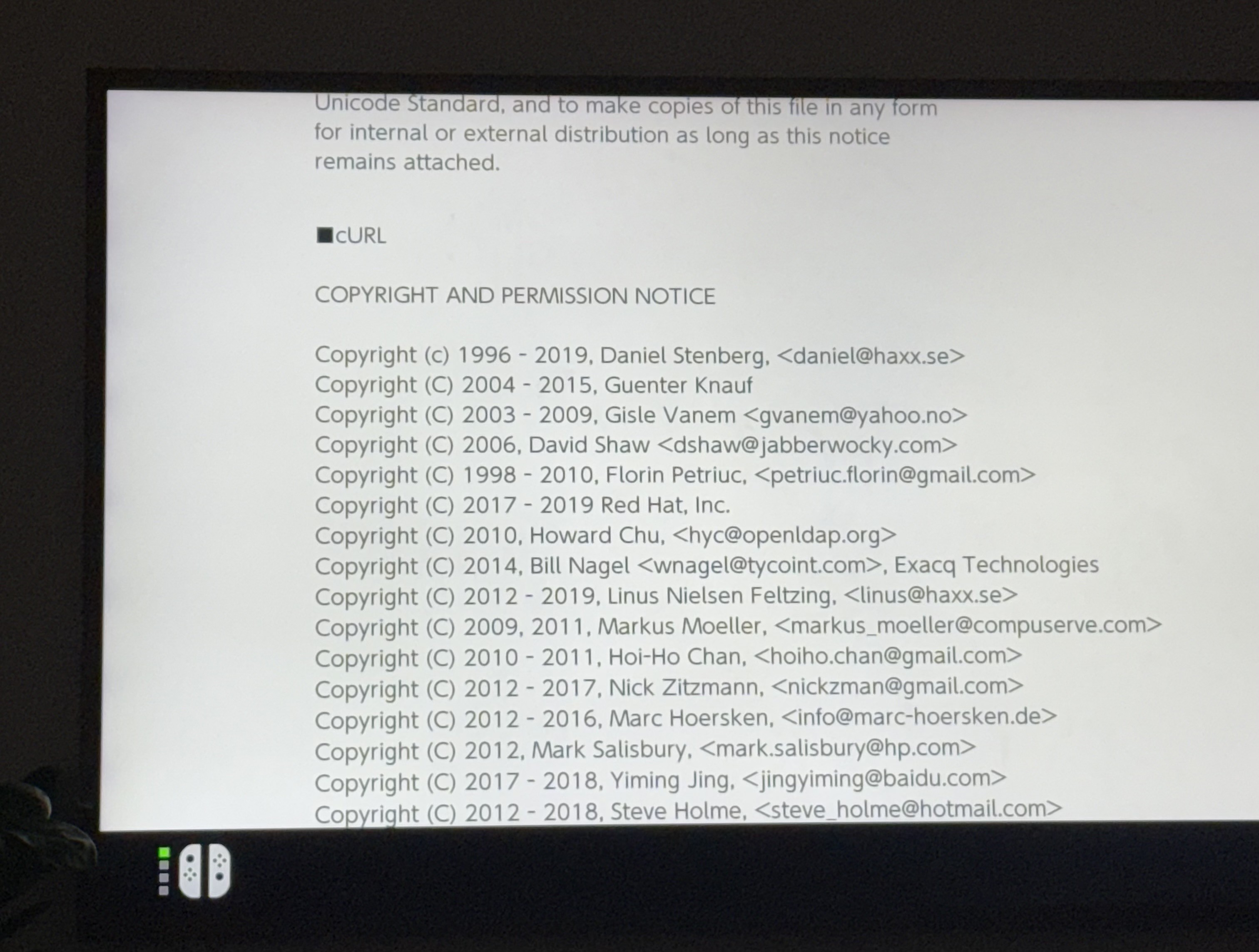

The Nintendo Switch 2 runs curl (Thanks to Patrik Svensson)

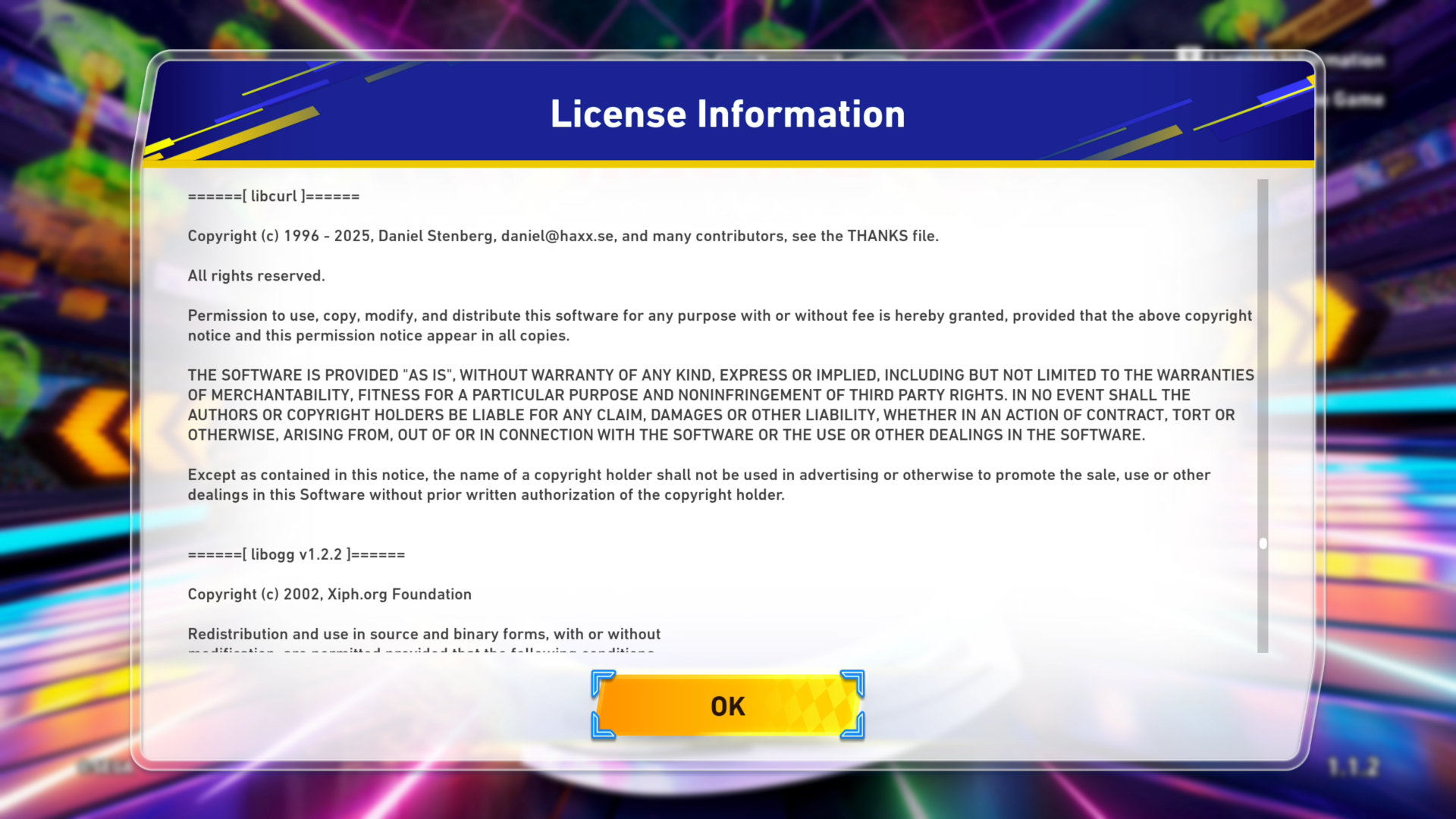

Sonic Racing: CrossWorlds uses libcurl. (Thanks to Mufasa)

Saints Row IV (Thanks to DCoder)

Warframe and Soulframe (games) use curl. Thanks.
