When I asked my surrounding in March 2015 to guess the expected HTTP/2 adoption by now, we as a group ended up with about 10%. OK, the question was vaguely phrased and what does it really mean? Let’s take a look at some aspects of where we are now.

When I asked my surrounding in March 2015 to guess the expected HTTP/2 adoption by now, we as a group ended up with about 10%. OK, the question was vaguely phrased and what does it really mean? Let’s take a look at some aspects of where we are now.

Perhaps the biggest flaw in the question was that it didn’t specify HTTPS. All the browsers of today only implement HTTP/2 over HTTPS so of course if every HTTPS site in the world would support HTTP/2 that would still be far away from all the HTTP requests. Admittedly, browsers aren’t the only HTTP clients…

During the fall of 2015, both nginx and Apache shipped release versions with HTTP/2 support. nginx made it slightly harder for people by forcing users to select either SPDY or HTTP/2 (which was a technical choice done by them, not really enforced by the protocols) and also still telling users that SPDY is the safer choice.

Let’s Encrypt‘s finally launching their public beta in the early December also helps HTTP/2 by removing one of the most annoying HTTPS obstacles: the cost and manual administration of server certs.

Amount of Firefox responses

This is the easiest metric since Mozilla offers public access to the metric data. It is skewed since it is opt-in data and we know that certain kinds of users are less likely to enable this (if you’re more privacy aware or if you’re using it in enterprise environments for example). This also then measures the share by volume of requests; making the popular sites get more weight.

Firefox 43 counts no less than 22% of all HTTP responses as HTTP/2 (based on data from Dec 8 to Dec 16, 2015).

Out of all HTTP traffic Firefox 43 generates, about 63% is HTTPS which then makes almost 35% of all Firefox HTTPS requests are HTTP/2!

Firefox 43 is also negotiating HTTP/2 four times as often as it ends up with SPDY.

Amount of browser traffic

One estimate of how large share of browsers that supports HTTP/2 is the caniuse.com number. Roughly 70% on a global level. Another metric is the one published by KeyCDN at the end of October 2015. When they enabled HTTP/2 by default for their HTTPS customers world wide, the average number of users negotiating HTTP/2 turned out to be 51%. More than half!

Cloudflare however, claims the share of supported browsers are at a mere 26%. That’s a really big difference and I personally don’t buy their numbers as they’re way too negative and give some popular browsers very small market share. For example: Chrome 41 – 49 at a mere 15% of the world market, really?

I think the key is rather that it all boils down to what you measure – as always.

Amount of the top-sites in the world

Netcraft bundles SPDY with HTTP/2 in their October report, but it says that “29% of SSL sites within the thousand most popular sites currently support SPDY or HTTP/2, while 8% of those within the top million sites do.” (note the “of SSL sites” in there)

That’s now slightly old data that came out almost exactly when Apache first release its HTTP/2 support in a public release and Nginx hadn’t even had it for a full month yet.

Facebook eventually enabled HTTP/2 in November 2015.

Amount of “regular” sites

There’s still no ideal service that scans a larger portion of the Internet to measure adoption level. The httparchive.org site is about to change to a chrome-based spider (from IE) and once that goes live I hope that we will get better data.

W3Tech’s report says 2.5% of web sites in early December – less than SPDY!

I like how isthewebhttp2yet.com looks so far and I’ve provided them with my personal opinions and feedback on what I think they should do to make that the preferred site for this sort of data.

Using the shodan search engine, we could see that mid December 2015 there were about 115,000 servers on the Internet using HTTP/2. That’s 20,000 (~24%) more than isthewebhttp2yet site says. It doesn’t really show percentages there, but it could be interpreted to say that slightly over 6% of HTTP/1.1 sites also support HTTP/2.

On Dec 3rd 2015, Cloudflare enabled HTTP/2 for all its customers and they claimed they doubled the number of HTTP/2 servers on the net in that single move. (The shodan numbers seem to disagree with that statement.)

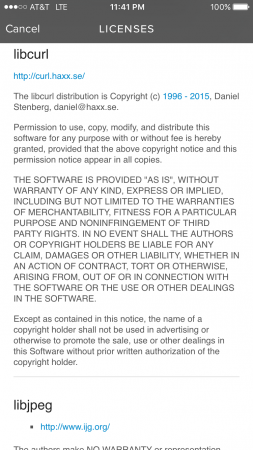

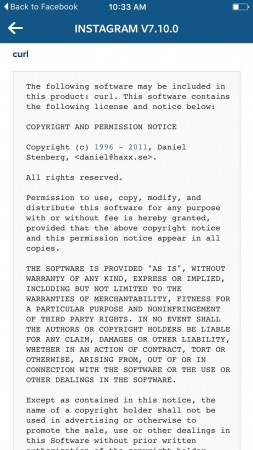

Amount of system lib support

iOS 9 supports HTTP/2 in its native HTTP library. That’s so far the leader of HTTP/2 in system libraries department. Does Mac OS X have something similar?

I had expected Window’s wininet or other HTTP libs to be up there as well but I can’t find any details online about it. I hear the Android HTTP libs are not up to snuff either but since okhttp is now part of Android to some extent, I guess proper HTTP/2 in Android is not too far away?

Amount of HTTP API support

I hear very little about HTTP API providers accepting HTTP/2 in addition or even instead of HTTP/1.1. My perception is that this is basically not happening at all yet.

Next-gen experiments

If you’re using a modern Chrome browser today against a Google service you’re already (mostly) using QUIC instead of HTTP/2, thus you aren’t really adding to the HTTP/2 client side numbers but you’re also not adding to the HTTP/1.1 numbers.

QUIC and other QUIC-like (UDP-based with the entire stack in user space) protocols are destined to grow and get used even more as we go forward. I’m convinced of this.

Conclusion

Everyone was right! It is mostly a matter of what you meant and how to measure it.

Future

Recall the words on the Chromium blog: “We plan to remove support for SPDY in early 2016“. For Firefox we haven’t said anything that absolute, but I doubt that Firefox will support SPDY for very long after Chrome drops it.