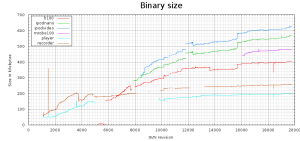

A client of mine and I ran a series of tests doing FTP and SFTP transfers against localhost, to measure how fast our custom solution is compared to a set of existing ones.

The specific results aren't what caught my eye, mostly because they're still only used for comparisons and to measure relative improvements. What caught my eye was instead the relative speed difference between the tests run on Mac OS X 10.5.5, on Windows XP SP3 and on Linux 2.6.26.

Some of the Windows transfers took an order of magnitude more time than the others: ten times longer. Since we saw this across multiple tests, each run multiple times, and it was also visible with third-party tools, the only conclusion I can draw is that Windows for some reason has a much slower localhost.
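For anyone who wants to check their own machine without FTP or SSH in the picture, here is a minimal sketch that times a raw TCP transfer over the loopback interface. It is not what our tests used (those were real FTP/SFTP transfers); the function name `loopback_throughput` and the 64 MB default size are made up for illustration, and absolute numbers from it are not comparable to the figures above.

```python
import socket
import threading
import time


def loopback_throughput(host="127.0.0.1", total_bytes=64 * 1024 * 1024,
                        chunk_size=64 * 1024):
    """Send total_bytes over a loopback TCP connection; return (bytes_received, seconds)."""
    # Use IPv6 for addresses like "::1", IPv4 otherwise.
    family = socket.AF_INET6 if ":" in host else socket.AF_INET
    server = socket.socket(family, socket.SOCK_STREAM)
    server.bind((host, 0))  # port 0: let the OS pick a free port
    server.listen(1)
    port = server.getsockname()[1]
    received = 0

    def sink():
        # Accept one connection and count bytes until the client closes it.
        nonlocal received
        conn, _ = server.accept()
        with conn:
            while True:
                data = conn.recv(chunk_size)
                if not data:
                    break
                received += len(data)

    reader = threading.Thread(target=sink)
    reader.start()
    payload = b"\0" * chunk_size
    start = time.perf_counter()
    with socket.create_connection((host, port)) as client:
        sent = 0
        while sent < total_bytes:
            n = min(chunk_size, total_bytes - sent)
            client.sendall(payload[:n])
            sent += n
    reader.join()  # stop the clock only once the receiver has drained everything
    elapsed = time.perf_counter() - start
    server.close()
    return received, elapsed


if __name__ == "__main__":
    n, secs = loopback_throughput()
    print(f"{n / secs / 1e6:.1f} MB/s over 127.0.0.1")
```

Running it on each OS (and with `host="::1"` where IPv6 is available) gives a rough idea of whether the loopback path itself is slow or whether the slowness lives higher up, for example in the server software.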

Does any reader have further knowledge or details to share on this topic? Does anyone know whether more recent Windows versions do any better?

It should be noted that on Windows the SSH server ran under Cygwin, which may account for some of the slowness, as Cygwin isn't exactly known for being blazingly fast…

Update:

Three friends responded to this question:

The first mentioned that he had run into problems on Windows in the past where 127.0.0.1 worked but 'localhost' didn't, which might indicate that localhost is for some reason treated differently.

The second said it has been reported that Windows Vista has significant TCP improvements over older versions, since the TCP/IP stack was completely rewritten for that release.

Pierre (at Microsoft) pointed out that on Vista, localhost resolves first to ::1 (IPv6) only, which may explain why some people experience quirks, at least on Vista. This test, however, was done on XP…
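To see what "localhost" actually resolves to on a given machine, and in what order, a quick check with `getaddrinfo` helps. This is a minimal sketch; the helper name `resolve` is hypothetical and port 21 is only there because it is the FTP port (nothing connects to it). If ::1 comes first but the server only listens on 127.0.0.1, a client that walks the list in order may waste a failed connection attempt before falling back to IPv4.

```python
import socket


def resolve(name, port=21):
    """Return unique (family, address) pairs in the order getaddrinfo yields them."""
    results = []
    for family, _, _, _, sockaddr in socket.getaddrinfo(name, port, type=socket.SOCK_STREAM):
        entry = (family.name, sockaddr[0])  # e.g. ("AF_INET6", "::1")
        if entry not in results:  # getaddrinfo may repeat an address
            results.append(entry)
    return results


if __name__ == "__main__":
    for name in ("localhost", "127.0.0.1", "::1"):
        try:
            print(name, "->", resolve(name))
        except socket.gaierror as exc:
            print(name, "-> failed:", exc)
```

The order printed for "localhost" depends on the hosts file and the OS's address selection policy, so it can differ between XP, Vista and other systems even for the same name.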