It took me 422 days to do my most recent 1,000 commits in the curl source code repository. Now at 18,001 commits. This is the most recent.

I keep insisting that the CVE system is broken and that the database of existing CVEs hosted by MITRE (and imported into lots of other databases) is full of questionable content and plenty of downright lies. A primary explanation for us being in this ugly situation is that it is simply next to impossible to get rid of invalid CVEs.

I already wrote about the bogus curl CVE-2020-19909 last year and how our request to have it rejected was denied because someone without a name at MITRE obviously knows the situation much better than any curl developer. This situation then forces us, the curl project, to provide documentation to explain how this is a documented CVE but it is not a vulnerability. Completely contrary to the very idea of CVEs.

A sane system would have a concept where rubbish is scrubbed off.

The curl project registered for and became a CNA in mid January 2024 to ideally help us filter out bad CVE input better. The future will tell if this effort works or not. (It was also recently highlighted that the Linux kernel is now also a CNA for similar reasons and I expect to see many more Open Source projects go the same route.)

However, in late December 2023, just weeks before we became CNA, someone (anonymous again) requested a CVE Id from MITRE for a curl issue. Sure enough they were immediately given CVE-2023-52071, according to how the system works.

This CVE was made public on January 30 2024, and the curl project was of course immediately made aware of it. A quick glance at the specifics was all we needed: this is another bogus claim. This is not a security problem, and again this is a fact that does not require an experienced curl developer to analyze. It is quite easily discoverable.

Given the history of previous bogus CVEs, I was soon emailed by CVE db companies asking me for confirmations about this CVE and I was of course honest and told them that no, this is not a security problem. Do not warn your users about this.

We are a CNA now, meaning that we should be able to control curl issues better, even if this CVE was requested before we were officially given the keys to the kingdom. We immediately requested this CVE to be rejected. On the grounds that it was wrongly assigned in the first place.

In the first response from MITRE to our rejection request, they insisted that:

We discussed this internally and believe it does deserve a CVE ID. If we transfer, and Curl REJECTS, then the reporter will likely come back to us and dispute which will provide some confusion for the public.

They actually think putting DISPUTED on the issue is less confusing to the public than rejecting it, because rejecting risks an appeal from the original reporter?

They say in this response that they think it actually deserves a CVE Id. If there was any way to have a conversation with these guys I would like to ask them on what grounds they base this. Then lecture them on how the world works.

This communication has only been done indirectly with MITRE via our root CNA (Red Hat).

So it did not fly.

According to the MITRE guidelines: When one party disagrees with another party’s assertion that a particular issue is a vulnerability, a CVE Record assigned to that issue may be designated with a “DISPUTED” tag.

If someone says the earth is flat, we need to say that fact is disputed? No it is not. It is plain wrong. Incorrect. Bad. Stupid. Silly. Remove-the-statement worthy.

This meant I needed to take the fight to the next level. This policy is not good enough and it needs to be adjusted. This is not a disagreement on the facts. I insist that this is not a vulnerability to begin with. It was wrongly assigned a CVE in the first place. It feels ridiculous that the burden of proof falls on me to prove how this is not a security problem instead of the other way around: if someone would just have had the spine to ask the original submitter to explain, prove, hint or suggest how this is a vulnerability, then there would never have been a CVE created for this in the first place. Because that person could not have done that.

The plain truth is that there is no system for doing this. There is no requirement on the individual to actually back up or explain what they claim. The system is designed for good-faith reporters against bad-faith product organizations. So that bad companies cannot shut down whistleblowers, basically. Instead it allows irresponsible or bad-faith reporters to populate the CVE database with rubbish.

Once the CVE is in, the product organization, like curl here, is not allowed to REJECT it. We have to go the lame route and say that the facts in the CVE are DISPUTED. We are apparently in disagreement whether the totally incorrect claim is totally incorrect or not. Bizarre.

Did I mention this is a broken system?

Being a CNA at least means we have a foot in the door. An issue has been filed against the policy and guidelines and it has been elevated at MITRE via our root CNA (Red Hat). I cannot say if this eventually will make a difference or not, but I have decided to “take one for the team” and spend this time and effort on this case in the belief that if we manage to nudge the process ever so slightly in the right direction, it could be worth it.

For the sake of everyone. For the sake of my sanity.

In the curl documentation for CVE-2023-52071, which we unwillingly have to provide even though the issue is bogus, I have included this whole story, including quoting the motivations from my email to MITRE as to why this CVE should be rejected in spite of the current procedure not allowing us to.

Hopefully, supposedly, ideally, crossing my fingers, future CVEs against curl or libcurl will immediately be passed via us since we are now a CNA. This is how it is supposed to work. We will of course immediately and with no mercy reject and refuse all attempts at filing silly CVEs for issues that aren’t vulnerabilities.

The “elevated issue” above might (hopefully) lead to non-CNA organizations getting an increased ability to filter off junk from the system – and then perhaps lessen the need for the entire world to become CNAs. I am not overly optimistic that we will reach that position anytime soon, as clearly the system has worked like this for a long time and I expect resistance to change.

I can almost guarantee that I will write more blog posts about CVEs in the future. Hopefully when I have great news about updated CVE rejection policies.

(Feb 23, 21:33 UTC) The CVE records have now been updated by MITRE and according to NVD for example, this CVE is now REJECTED. Wow.

I was not told about this, someone in a discussion thread mentioned it.

This is a frequently asked question: how will I handle the situation if/when I step away from the curl project? What happens if I get run over by a bus (or rather: go on a permanent holiday) tomorrow? What’s the contingency plan?

You would perhaps think that it could affect a few more things that I work on than just curl, but I rarely get questions about any other things or projects. But okay, I have since long accepted that curl is the single thing people are most likely to associate with me.

Let me start by saying that I have no plans to leave the curl project any time soon. curl is such a huge part of my life I would not know what to do if I did not spend a large chunk of it thinking about, talking about, blogging about and working on curl development. I am not ruling out that I might step back as a leader of the project in a distant future, but it sure does not feel like it will happen within the nearest decade.

I am far from done yet. curl is not done yet. The Internet has not stopped evolving yet.

Also: the most likely way I will leave the project in a distant future is slowly and in a controlled manner where I can make sure that everyone gets everything they need before I would completely disappear into the shadows.

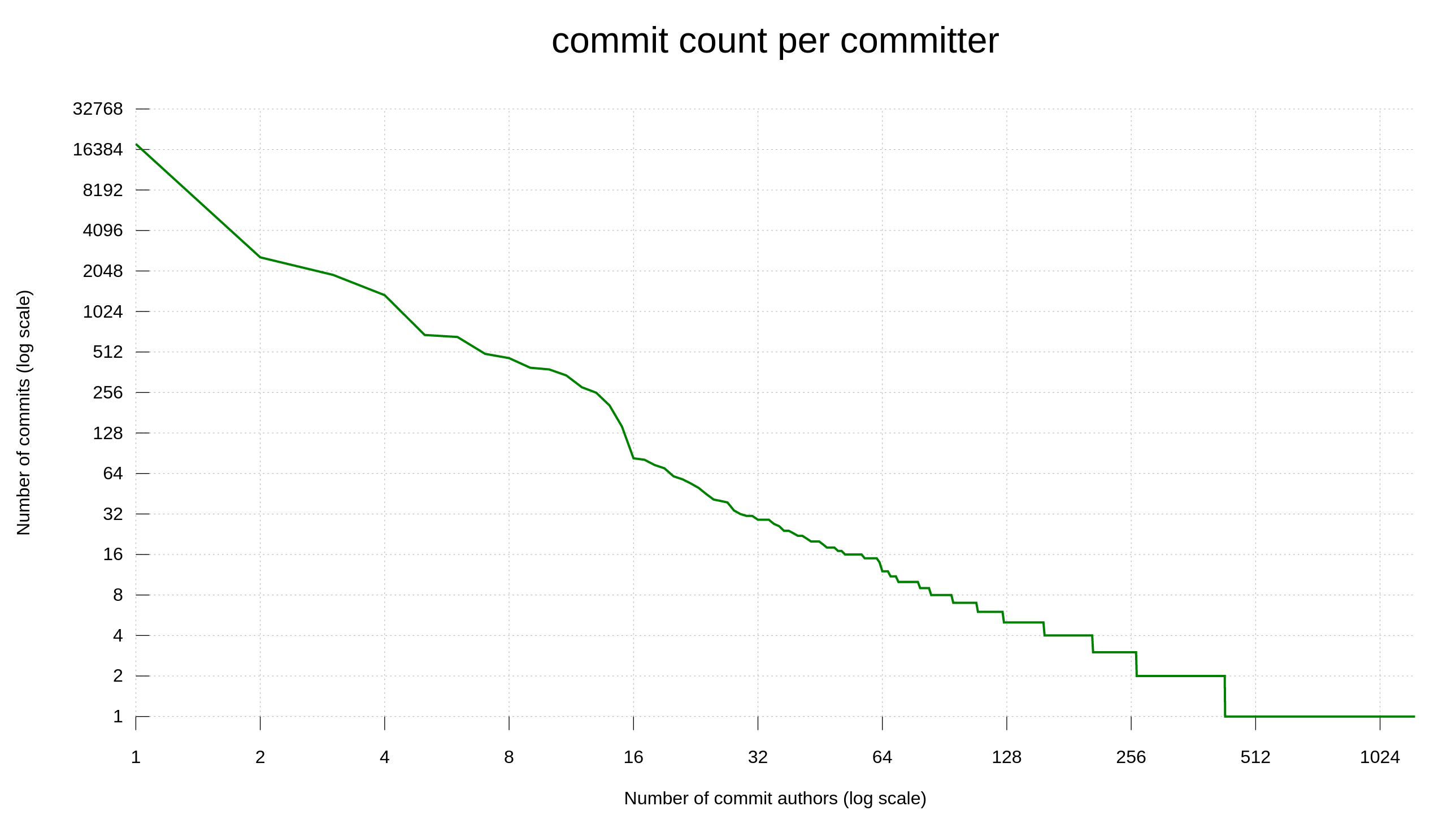

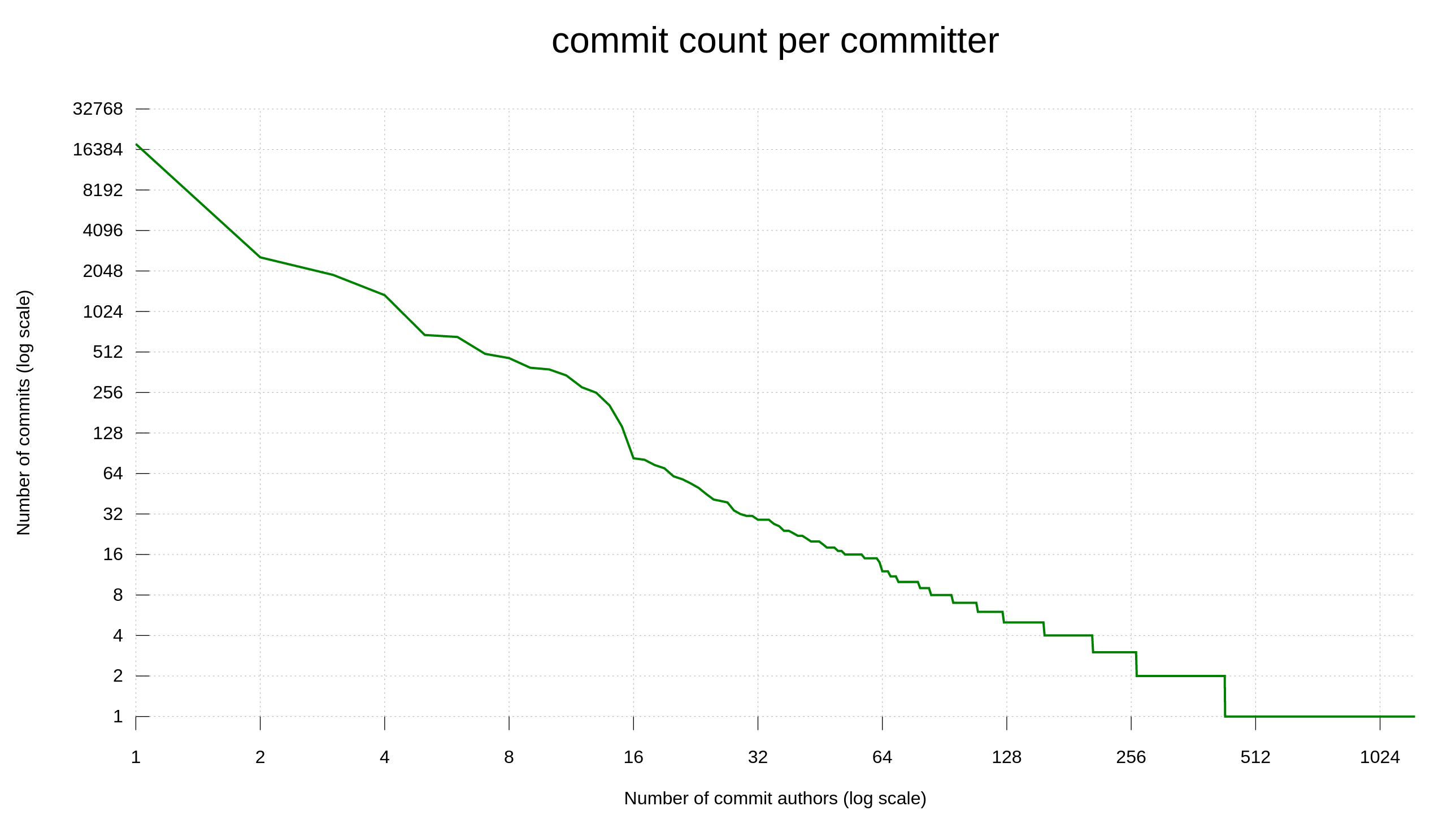

I also want to stress that curl is not a solo mission. We have surpassed 1200 commit authors in total and we average 25 commit authors every month, with about 10 new committers arriving every month. My share of all commits has been continuously shrinking for many years.

A healthy and thriving open source project should stand on its own legs and not rely on the presence or responses of single contributors. Everything should be documented and explained. How things work in the code, but also how processes work and how decisions are made etc. Someone who arrives at the project, alone in the middle of the night without network access, should be able to figure out everything without having to ask anyone.

I work hard at documenting everything curl as much and as well as possible. My ambition is to have curl stand out as one of the best documented projects/products – no matter what you compare it against.

If a single maintainer vanishes tomorrow, the project should survive it fine. Redundancy is key and we must make sure that we have a whole team of people with the necessary rights and knowledge to “carry the torch forward”. We invite new maintainers to the team every once in a while so that we are at least a dozen or so that can do things like merging code into the repository or updating the website. Many of those rarely exercise that right, but they have it and they can.

A single maintainer’s sudden absence can certainly be a blow to the project, but it should not be lethal.

My “BDFL role” in curl is not enforced by locking others out. There is a whole team that can do just about everything in the project that I do. When and if they want to.

I have logins and credentials to some services that the whole team does not. I use them to upload curl releases, manage the website and similar. My accounts. If I am gone tomorrow, getting into my accounts will offer challenges to those who want to shoulder those responsibilities. I have a few trusted dedicated individuals appointed to hopefully manage that in the unlikely event that ever becomes necessary.

BDFL is short for Benevolent Dictator For Life. I may be a sort of dictator in the project, but I prefer to see myself as a “lead developer” as I hardly ever veto anything and I always encourage discussions and feedback rather than decreeing my opinions or ways of working onto others. I strive to be benevolent. I do not claim to always know the correct or proper way to do things.

When I leave, there is no dedicated prince or appointed heir that will take over after me, royal family style. Sure, someone else in the ranks of existing maintainers might step up and become a new project leader but it could also very well just become a group sharing the load or something else. It is not up to me to decide or control that. It is not decided ahead of time and it will not be.

Similarly, I don’t try to carve my vision of curl into some stone tablets to pass on to the next generation. When I am gone, the people who remain will need to drive the ship and have their own visions and ideas. The kids have got to make their own choices.

I don’t care about how or if people remember me or not. I try my best to do good now and I hope my efforts and work make a net positive to the world. If so, that is good enough for me.

This is the video recording of my talk with this title, held on February 4, 2024 at 10:00 in the K1.105 room at FOSDEM 2024. The room can hold some 800 people but there were a few hundred seats still unoccupied. Several people I met up with later have insisted that 10 am on a Sunday is way too early for attending talks…

When I was about to start my talk, the slides would not show on the projector. Yeah, sigh. Nothing surprising maybe, but you always hope you can avoid these problems – in particular in the last moment with a huge audience waiting.

There was this separate video monitor laptop that clearly showed that my laptop would output the correct thing – in a proper resolution (1280 x 720 as per auto-negotiation), but the projector refused to play ball. The live stream could also see my output, so the problem was somehow from the video box to the projector.

Several people eventually got involved, things were rebooted multiple times, cables were yanked and plugged back in again. Only after I installed arandr and force-updated the resolution of my HDMI output to 1920×1080 did the projector suddenly show my presentation. (Later on I was told that people had the same problem in this room the day before…)

That was about nine minutes of technical difficulties that were cut out from the recording. Nine minutes to test my nerves and presentation finesse as I had to adapt.

A few weeks ago I mentioned how we fund Stefan’s work on improving HTTP(/3) in curl. Now, in similar spirit we are funding Dan Fandrich to work on further improving test infrastructure. Dan has worked fiercely on the introduction of parallel tests over the recent year or so and this is work that builds on that and continues down that road.

This funding is paid for by sponsors and donors, via Open Collective and GitHub sponsors. Thank you all!

curl contains a regression test suite of over 1900 individual test cases that are run automatically on every commit submission and on every pull request in almost 130 different environments, meaning that every change can result in more than 140,000 tests being run. A spurious test failure rate of a mere 0.001% is likely to cause a perfectly good PR to end up showing a red failure. A new contributor that doesn’t understand this problem can spend hours poring over his or her patch and the related code in curl, searching for a problem that isn’t there.
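To see why such a tiny failure rate still matters, here is a small back-of-the-envelope calculation. It assumes (my assumption, not a measured fact) that every one of the 140,000 test runs fails spuriously with the same probability and independently of the others.

```python
# Assumption: each of the n test runs fails spuriously with the same
# probability p, independently of all the others.
p = 0.001 / 100   # a spurious failure rate of 0.001%
n = 140_000       # test runs triggered by a single change

expected_false_failures = n * p
prob_red = 1 - (1 - p) ** n   # chance that at least one run fails spuriously

print(f"expected spurious failures per change: {expected_false_failures:.2f}")
print(f"chance of at least one red result: {prob_red:.1%}")
```

Under those assumptions a perfectly good change would show at least one red result roughly three times out of four.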

Analyzing 140,000 tests for each change to the curl source code to find failure trends (such as flaky tests) demands an automated solution. Dan has created a system (working name Test Clutch) that has been successfully ingesting curl CI test results for much of the past year and has been used by him to find flaky tests as well as permanently failing tests (often submitted under the mistaken impression that the failed test was merely flaky). It collects individual test results from all the CI systems used in the curl project into a database where they can be analyzed.
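The difference between a flaky test and a permanently failing one can be illustrated with a minimal sketch like the one below. This is not how Test Clutch works internally; the function name, the `min_runs` threshold and the verdict labels are all made up for illustration.

```python
from collections import defaultdict

def classify_tests(results, min_runs=5):
    """Classify tests from (test_id, passed) tuples collected over many CI runs."""
    runs = defaultdict(list)
    for test_id, passed in results:
        runs[test_id].append(passed)

    verdicts = {}
    for test_id, outcomes in runs.items():
        failures = outcomes.count(False)
        if len(outcomes) < min_runs:
            verdicts[test_id] = "not enough data"
        elif failures == 0:
            verdicts[test_id] = "stable"
        elif failures == len(outcomes):
            verdicts[test_id] = "permanently failing"
        else:
            verdicts[test_id] = "flaky"
    return verdicts

# Hypothetical history for four tests
history = (
    [(1, True)] * 20                       # always passes
    + [(2, True)] * 18 + [(2, False)] * 2  # fails now and then
    + [(3, False)] * 20                    # never passes
    + [(4, False)] * 2                     # too few runs to judge
)
verdicts = classify_tests(history)
print(verdicts)
```

A real system would presumably also need to weigh in which platform a test ran on and when it started failing, which this sketch ignores.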

This system has potential to be useful to a broader base of curl developers to help see test trends, test platform coverage and to better determine which tests are flaky and could use improvement. It has been written in a fashion such that test results for other projects besides curl can also be added and analyzed separately.

The current test ingestion and analysis system will be productionized and the analysis summary table will be integrated into the curl web site for easy access for developers.

This task will involve writing code to trigger the test analysis system to retrieve detailed PR test results when available. It must make a reasonable determination of when all the expected tests have been completed (since not all tests will run for every PR) and then comment on the PR with a summary of the test results and believability of any test failures.

These are projects that will benefit curl when implemented but they are not time sensitive and Dan is not going to work full time on them. There are no exact end dates set for them.

The result of Dan’s work will become visible in PRs and website updates as we go forward.

Five years ago now, on February 2nd 2019, I started working for wolfSSL doing curl full time. I have now worked longer for wolfSSL than I previously did for Mozilla.

I have said it before and I will say it again: working full time on curl is my definition of living the dream.

Joining wolfSSL was not just me changing employer, it changed everything for me. First, I am not just a regular employee, I am the lead curl developer and the curl support we offer for commercial customers is unparalleled. No other business or individuals can offer the same level of support, knowledge, experience, insights and ability to merge fixes and changes back into curl mainline.

At wolfSSL we offer commercial services around and support for curl and libcurl. Contract development of new features, debugging, fixing problems and just about every other aspect of helping users get better use of (lib)curl in their products and services.

I think this change has been good for curl and the curl project as well. The last five years have seen more and faster development than any previous five-year period. I have been able to work intensely and a lot on curl: when fixing bugs and adding features for customers, but even more on the general improving of things for everyone that the money from support customers makes possible.

the 254th release

7 changes

56 days (total: 9,448)

154 bug-fixes (total: 9,888)

257 commits (total: 31,684)

0 new public libcurl function (total: 93)

1 new curl_easy_setopt() option (total: 304)

0 new curl command line option (total: 258)

65 contributors, 40 new (total: 3,078)

36 authors, 18 new (total: 1,237)

1 security fix (total: 151)

CVE-2024-0853: OCSP verification bypass with TLS session reuse. curl inadvertently kept the SSL session ID for connections in its cache even when the verify status (OCSP stapling) test failed. A subsequent transfer to the same hostname could then succeed if the session ID cache was still fresh, which then skipped the verify status check.

CURLE_TOO_LARGE. A new libcurl error code for when “something” is growing too big to be allowed. Like a URL, an HTTP request or similar. Previously it would return out of memory for those situations, which caused confusion to users.

CURLINFO_QUEUE_TIME_T. Applications can now ask libcurl how long a transfer was “queued” internally before it actually started.

CURLOPT_SERVER_RESPONSE_TIMEOUT_MS. A new millisecond version of the already existing option to allow applications higher resolution control.

-gl just as you have been able to run it with gdb using -g for decades. Helps debugging difficult cases.

Here are some of my favorite bugfixes from this cycle:

configure: add libngtcp2_crypto_boringssl detection. Previously it would only detect and build out of the box with the quictls version of ngtcp2 builds.

configure: when enabling QUIC, check that TLS supports QUIC. More efforts trying to detect wrong and invalid build combinations earlier, to avoid users ending up with broken builds.

all libcurl man page examples are verified in CI. Every man page example now compiles cleanly. This step made us detect and fix numerous tiny mistakes of the most annoying kind: when you copy code from docs and it does not work.

curl shows ipfs and ipns as supported “protocols”. In the regular --version output. Even if they are converted to https:// internally.

curl bsearches command line options. The command line parsing is now magnitudes faster. Of course it will not really be noticeable outside of the most extreme cases.

curl stopped supporting @filename style for --cookie. This syntax was never documented and was not used in any test case. It risked causing unwanted surprises.

curl --remove-on-error only removes “real” files. Mostly as a precaution for when users are unclever enough to run curl with elevated privileges and would save to a device or named pipe etc.

curl no longer sets the file comment on Amiga. It would truncate the URL weirdly and also risk leaking credentials if such were used in the URL.

lib: reduced use of the download buffer all over. The download buffer has over time been abused for all kinds of buffer purposes. This cycle we have made a lot of such code use its own buffers instead. With a little luck, this will make it possible for us to use a single download buffer for all transfers in a multi handle, thereby drastically reducing the amount of used memory when doing parallel transfers. With no behavior difference or performance degradation. Details on this will follow later.

lib: use memdup0 instead of malloc + memcpy. This was a common code pattern, and with this we reduce the number of mallocs and memcpys at the same time – which we think is good since they are known “problem functions” that are easy to mess up.

lib: various conversions from malloc to dynbuf. In similar spirit as the above, we continued to switch more functions away from using malloc and family to instead use the internal dynbuf API for managing dynamic buffers in a way that is less likely to cause memory related issues.

resolving: with modern c-ares, use its default timeout. It means tighter timeouts by default but also that this combo now also respects the timeout that can be specified in resolv.conf.

headers API: make sure the trailing newline is never stored. A header with no content on the right side of the colon would erroneously get its trailing newline stored as content.

mprintf: overhaul, performance and bugfixes. Now the curl printf functions work even more like their glibc counterparts, especially when provided illegal %-combinations and when using the <num>$ operator. Performance measurements on this new code also say it now executes around 30% faster on commonly used format strings.

ftp: handle the PORT parsing without allocation. Minor cleanup.

http3: initial support for OpenSSL 3.2 QUIC stack. The fourth QUIC backend in curl is here.

http: check for “Host:” case insensitively. If you would ask to disable this header with a different casing than what was compared, it would still send an empty header in the request.

http: only act on 101 responses when they are HTTP/1.1. If a HTTP response says another protocol version with a 101 response, it is now considered an illegal combination.

openldap: fix an LDAP crash. LDAP without TLS would crash on basic use.

openldap: fix STARTTLS. It was recently broken in a refactor.
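The “curl bsearches command line options” item above refers to looking up options with a binary search over a sorted table. curl itself is written in C, so the snippet below is only a Python illustration of the general technique, not curl's implementation; the table is a small hand-picked sample of option names and `find_option` is a name I made up.

```python
from bisect import bisect_left

# A small sample of curl option names. The list must be kept sorted for
# binary search to work.
OPTIONS = sorted([
    "--cookie", "--data", "--header", "--location",
    "--output", "--remove-on-error", "--silent", "--verbose",
])

def find_option(name):
    """Return the index of name in OPTIONS, or -1 if it is not there."""
    i = bisect_left(OPTIONS, name)
    if i < len(OPTIONS) and OPTIONS[i] == name:
        return i
    return -1

print(find_option("--output") != -1)   # a known option is found
print(find_option("--nope"))           # an unknown option gives -1
```

A binary search needs roughly log2(N) comparisons, so with a table of around 258 options a lookup settles in about eight or nine steps.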

There are no revolutionary changes in the pipe, but there are a series of things we most likely are going to land in the next cycle making the next version number likely to become 8.7.0.

The curl project arranges a two hour video conference meeting on March 21, 2024, with the aim of getting together people from curl and persons packaging curl for distributions. Linux distros and others. If you distribute curl to your users, consider yourself invited!

The main purpose is to improve curl and how curl is shipped to end users. The idea is to make it a multi-party conversation to see how we can improve collaboration, information exchange, testing, experiences etc so that we in the curl project can help the distros ship curl better, faster and more conveniently. To the benefit of everyone who uses curl and libcurl packaged by distributions.

Of course, getting people working on related matters together and talking could also help us get to know each other a little better, to get some faces and voices attached to email addresses and by that, making cooperation smoother in the future.

The meeting is open for anyone interested to join and participate in.

The event starts at 16:00 UTC and might last up to two hours.

All details and specifics for this event are in this wiki page:

https://github.com/curl/curl/wiki/curl-distro-discussion-2024

Welcome!

On December 21 2023 I started using this new keyboard as my daily driver. I have used my previous one almost daily since August 2014. Back in 2015 I counted doing almost 7 million key-presses/year. I don’t think I’ve typed any less since then. I rather think there are signs that I have typed a lot more since, especially since I started working full-time on curl in 2019. My estimate is thus that my trusted old keyboard has served over 70 million key-presses. And it has done it very well.

I did not want to comment on the keyboard too early, but I also did not want to wait too long so that I forget about the little details a transition like this can bring up. I have averaged around 30,000 key strokes per day since I started using the new keyboard (I used keyfreq to figure this out). I also toyed around on keybr.com (where I reach 84 words/minute typing speed – with a fair amount of typos), to get a feel for how efficient I can possibly become on this thing. Seems okay!

Keyboards belong in this annoying category of products that are really hard to figure out and get a proper feel for ahead of time even if you would be able to try them out before purchase. They pretty much require that we use them for a while before we can tell for sure we like them and want to stay with them many hours every day for the next several years.
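For the curious, the key-press estimates can be sanity-checked with a few lines of arithmetic. The exact start date is an assumption (the old keyboard is only said to have been in use since August 2014), and the last figure assumes 30,000 key strokes on every single day of a year.

```python
from datetime import date

# Assumption: the old keyboard entered service on August 1, 2014.
start = date(2014, 8, 1)
end = date(2023, 12, 21)
years = (end - start).days / 365.25

baseline = 7_000_000 * years        # if the 2015 rate had held throughout
needed_rate = 70_000_000 / years    # yearly rate that reaches 70 million
new_rate = 30_000 * 365             # 30,000 key strokes every day of a year

print(f"years in service: {years:.2f}")
print(f"presses at 7 million/year: {baseline / 1e6:.1f} million")
print(f"yearly rate needed for 70 million: {needed_rate / 1e6:.2f} million")
print(f"yearly rate at 30,000/day: {new_rate / 1e6:.2f} million")
```

At the 2015 rate alone the old keyboard would land a bit short of 70 million, so the estimate leans on the typing having increased since then. The current daily average points the same way.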

Based on a recommendation from my brother Björn, I went with Logitech G915 TKL – tactile keys flavored. “Carbon” they call this color. It apparently also is available in white. Without a recommendation, I would not have considered this brand.

This thing is branded a “gaming keyboard” and then of course the default keyboard RGB lighting effects consist of moving and changing colors across all the keys. There are 10 color style presets to select from, but the keyboard goes back to the default after idle timeouts, making the default mode really important. The factory default mode really screams hey look at me!, and is mostly disturbing to use.

I have read comments from Linux users online who even returned their G915 keyboards due to this crazy scheme not being possible to change properly on device, and the unfortunate truth is that I had to install their control software on a Windows box nearby to set a new default coloring mode: a solid single color on all keys – including that big G logo-symbol in the top left. Resetting back to this mode is fine. I appreciate some light on the keys, especially when using the keyboard at night.

It could perhaps also be mentioned that in their control software on Windows, G Hub, it was not easy to figure out how to do this, fancy as it looks.

I like that the lights on the keyboard go off completely after idling long enough, so that my workplace goes almost completely dark when left alone.

Ever since I got my previous keyboard I have been interested in a Ten Key Less (TKL) model. I never use any of those keys on the numerical part and I figured it could be good ergonomics to reduce the travel distance for my right hand when moving from keys to the mouse and back. A move that I must do hundreds if not thousands of times per day.

This keyboard is wireless but it is not a feature I care about. I use this quite stationary at my desk within cable distance and I would rather use a cable than having to recharge the device.

I’m using the traditional Swedish QWERTY layout, which some people legitimately will mention is not optimal for development compared to US keyboard layouts. This would be because of what key combos you need to write for parentheses, braces, forward-slash etc. But since the Swedish language has these commonly used extra letters (Å, Ä and Ö) and we have been using this keyboard layout for a long time in this country, I am used to it and wherever you go in Sweden and find a keyboard, almost every one of them will use this layout. It is the path of least resistance.

I have never been a fan of the ergonomic keyboard styles and layouts. Probably because I never really allowed myself enough time to get used to them.

With my previous keyboard I had to press Fn plus an F-key to do media control, while this new one has a set of dedicated media keys above the F-keys as you can see on the image above. I can also control the volume with the scroll wheel thing on the top right. Pretty convenient actually.

I have grown to appreciate having media control keys on the keyboard, and I find that having dedicated keys like this is a step up as it saves me from doing combo-presses frequently. A little less finger gymnastics.

Logitech does not seem to have a specific brand name for their switches. They offer the same keyboard with three different styles: linear, clicky or tactile. I went with tactile and I’m satisfied. The feel is not substantially different from my previous Cherry MX Reds.

I had Cherry MX Red switches in my previous keyboard, and this new one uses Logitech switches without any distinct name (that I could find). I did not have any particular problem with the noise level before but I hoped that this new one would perhaps be a little quieter. For the sake of family members when I type away on my keyboard when they are asleep and maybe to be less audible when I do video recordings etc. My home office and this keyboard setup are not far away from where they sleep.

I think maybe this keyboard is a little more silent, but not by much. I asked family members if they had noticed any difference in sound levels since my keyboard switch but none of them had noticed any change.

Length: 368 mm

Width: 150 mm

Height: 22 mm

Weight: 810 g

The actual size of the keyboard and keys is almost identical to my previous keyboard, I mean when excluding the removed numerical keyboard. The weight of the G915 makes it feel solid and it sits firmly and fixated on the table reliably even during my most intense typing sessions. I suppose it is also good for wireless use as it won’t feel as “flimsy” as regular all-plastic wireless ones tend to feel. But I have not used it much in wireless mode.

It feels solid and like it can survive being used daily for a long time.

I trust you have figured out already that I have the highest ambitions for the curl documentation. I want everything documented in a clear, easy-to-read and easy-to-find manner. This takes a lot of work and is not something that happens without effort.

Part of providing best-in-class documentation has in my mind always been to provide and ship man pages. And to make sure that those latest up-to-date man pages are always offered to users as good-looking webpages on the curl site.

Over twenty years ago I decided to render the web versions of the man pages by creating my own tool for that purpose: roffit. We still use it on the site today. It is a simple tool that converts nroff formatted man pages to HTML and adds cross-referencing links to other man pages. There is no concept of links in nroff, but I decided to use a combination of text and markup to use as a signal and it has worked out fine since. In part of course because I have then also subsequently made sure to format the curl man pages in this roffit-friendly way.
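As an illustration of the cross-referencing idea, here is a minimal sketch of how plain-text references to other man pages could be turned into links. It is not roffit's actual code or its actual signal. The `name(section)` pattern, the `<name>.html` URL scheme and the function name are assumptions I picked for the example.

```python
import re

# Assumption: a reference looks like "name(section)", for example
# "curl_easy_setopt(3)", and each page is published as "<name>.html".
MAN_REF = re.compile(r"\b([A-Za-z_][A-Za-z0-9_.-]*)\((\d)\)")

def link_man_refs(text, known_pages):
    """Turn references to known man pages into HTML links."""
    def replace(match):
        name = match.group(1)
        if name not in known_pages:
            return match.group(0)   # leave unknown references alone
        return f'<a href="{name}.html">{match.group(0)}</a>'
    return MAN_REF.sub(replace, text)

html = link_man_refs(
    "See curl_easy_setopt(3) and printf(3) for details.",
    known_pages={"curl_easy_setopt", "curl_easy_perform"},
)
print(html)
```

Only references to pages the converter knows about become links, which is why writing the man pages in a consistent, converter-friendly way matters.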

We started writing the curl man pages in the nroff format some time in the beginning of 1999. Mostly then because we did not know or have any tool that would convert nicely into nroff and later on to make sure we got things formatted the way we wanted. This approach works and can be used, but nroff is not a terribly human-friendly format and over time we decided that documenting several hundred command line options in a single nroff file was not ideal.

In November 2016 we revamped the system and started to generate the curl.1 man page at build time from multiple source files. Every command line option would then be documented in its own stand-alone file. Easier to read, easier to manage. Yet we controlled the output nroff format so that the web version would still look excellent. The individual files got .d extensions (for document) but were mostly still using nroff, and were mostly all appended to create the result.

Over time, we introduced more convenience formatting aids to the .d files so that we could write them using less and less nroff, and as a result they also grew more readable in their source format.

The .d file format never had its own name. It is just how we documented 250+ command line options for the curl command line tool.

The libcurl docs however remained authored in nroff. In 2014, the number of libcurl man pages exploded when we started doing a separate document for every libcurl option. We went from 59 man pages in the project to 270 between two releases. Since then we have added many more. Today in early 2024, we have a total of 496 individual man pages in the project. 493 of them concern libcurl.

When curl started, markdown did not exist; that format was first created in 2004. The early documentation we created in the project that was not nroff, we made using plain text files. It was not until 2014 that we slowly started to convert some of our text documents into markdown.

Markdown has several upsides compared to plain old text. In particular, it is easier to render good-looking web versions of the documents, which we do on the curl web site, and it offers cross-document linking etc. Over the years we have converted almost all our text documents into markdown. Markdown is also a user-friendly format as it is easy to read and easy to edit for contributors, new and old.

The move away from using nroff for the libcurl man pages came with the introduction of the “curldown” document format in early 2024. It is meant to make it easy to generate the same kind of nroff files we previously hand-edited, but using “markdown”. I use quotes around it because it is not full-fledged markdown support, but it looks similar enough to trick casual users and we decided to use “.md” file extensions to make editors, GitHub and more treat and show them as such.

The file format is inspired by the one we created for the command line tool: a header holding meta-data, followed by simplified markdown. Easy to read and easy to edit, for everyone. No more strange nroff syntax to copy and paste. The fact that GitHub renders the source files clean and good-looking is also attractive.
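As a rough illustration of what "a header holding meta-data and then simplified markdown" can translate to, here is a small Python sketch. It is not the script the curl project uses. The `---` header fences, the `Title` and `Section` field names and the handful of markdown constructs handled are all assumptions made for this example.

```python
import re

def split_header(text: str):
    """Split a document into (metadata dict, list of body lines).

    Assumes (hypothetically) that the header is fenced by lines that
    contain only '---' and holds simple 'Key: value' pairs.
    """
    lines = text.splitlines()
    meta = {}
    if lines and lines[0].strip() == "---":
        end = lines.index("---", 1)  # closing fence of the header
        for line in lines[1:end]:
            key, _, value = line.partition(":")
            meta[key.strip()] = value.strip()
        lines = lines[end + 1:]
    return meta, lines

def to_nroff(text: str) -> str:
    """Convert a tiny markdown subset to nroff: headings, bold, paragraphs."""
    meta, body = split_header(text)
    out = [f'.TH {meta.get("Title", "UNTITLED")} {meta.get("Section", "3")}']
    for line in body:
        if line.startswith("# "):
            out.append(f".SH {line[2:].strip()}")
        elif not line.strip():
            out.append(".PP")
        else:
            # Escape backslashes first, then map **bold** to \fB...\fP.
            line = line.replace("\\", "\\\\")
            line = re.sub(r"\*\*(.+?)\*\*", r"\\fB\1\\fP", line)
            out.append(line)
    return "\n".join(out) + "\n"
```

Because every page passes through one function like `to_nroff`, a change to how a sequence is escaped or styled only has to be made in one place.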

Switching to a markdown lookalike format had some other side-effects: suddenly a lot of CI jobs we have running caught these files and had a go at them: spellchecks, prose checks, checks for capitalized words after periods and more. This improved the language significantly.

Also, generating the nroff files rather than editing them has more benefits than just avoiding the baroque syntax: it also allows us to change styles and escape certain sequences throughout all documentation with just a few fixes in the scripts producing the output.

After this patch, with its 47,000 lines added and removed, was merged, we have a completely new source format system for the libcurl docs that generates the same style of nroff output we had previously. With improved language.

With the huge switch to “curldown” for libcurl options, it felt wrong to keep the old curl .d files for the curl.1 man page, seeing as they were almost there already. I did the work and converted them over to the same markdown lookalike style I did for libcurl: they are now also .md files and now they too get spell-checked and scrutinized much more.

I took this move one step further: previously we generated the single curl.1 man page using a fixed header file and a footer file that we prepended and appended to the nroff generated from the (converted) .d documentation.

Now, we instead have a mainpage.idx file that lists which (markdown) file names to include and convert to nroff. This allows us to split the two big header and footer files into several smaller markdown files, each with its own section heading, like “DESCRIPTION”, “URL”, and “GLOBBING”. Separating them makes them easier to manage for contributors and the index file makes it easy to change the order of sections etc.
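The index file approach can be sketched in a few lines of Python. This is only an illustration of the idea, not the project's build script: it assumes one file name per line, treats blank lines and lines starting with `#` as ignorable (an assumption), and simply joins the markdown sources instead of converting them to nroff.

```python
from pathlib import Path

def read_index(index_path: Path) -> list[str]:
    """Return the file names listed in the index, in order."""
    names = []
    for line in index_path.read_text().splitlines():
        line = line.strip()
        # Skipping blank lines and '#' comments is an assumption of this sketch.
        if line and not line.startswith("#"):
            names.append(line)
    return names

def assemble(index_path: Path) -> str:
    """Join the listed files, resolved relative to the index file's directory."""
    base = index_path.parent
    parts = [(base / name).read_text() for name in read_index(index_path)]
    return "\n".join(parts)
```

Reordering sections then means moving a line in the index file rather than cutting and pasting large blocks of text.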

We end up with a curl.1 man page that looks quite similar to before, but with improved language and a format that makes the documentation easier to edit going forward.

As I type this, we have 836 files with .md extensions in the curl git repository. At the time of the most recent release, we had 61.

I’m sure it does not stop here. I already have some ideas on how to improve this setup further.

I’m considering doing something to completely avoid the nroff step for the website HTML versions of the man pages and instead go straight from markdown.

The curl tool has the man page built in (shown with the -M option), and I would like to fix the build to do this without using nroff.

I have some more CI jobs to add to verify and check the language in the markdown files. Mostly to make sure the language is consistent.