Update: parts of the change mentioned in this blog post has subsequently been reverted since clearly I had a too positive view of the Internet.

One of the first bugs that fell into my lap when I started working for Mozilla not a very long time ago, was bug 237623. Anyone involved in Mozilla knows a bug in that range is fairly old (we just recently passed one million filed bugs). This particular bug was filed in March 2004 and there are (right now) 26 other bugs marked as duplicates of this. Today, the fix for this problem has landed.

One of the first bugs that fell into my lap when I started working for Mozilla not a very long time ago, was bug 237623. Anyone involved in Mozilla knows a bug in that range is fairly old (we just recently passed one million filed bugs). This particular bug was filed in March 2004 and there are (right now) 26 other bugs marked as duplicates of this. Today, the fix for this problem has landed.

The core of the problem is that when a HTTP server sends contents back to a client, it can send a header along indicating the size of the data in the response. The header is called “Content-Length:”. If the connection gets broken during transfer for whatever reason and the browser hasn’t received as much data as was initially claimed to be delivered, that’s a very good hint that something is wrong and the transfer was incomplete.

The perhaps most annoying way this could be seen is when you download a huge DVD image or something and for some reason the connection gets cut off after only a short time, way before the entire file is downloaded, but Firefox just silently accept that as the end of the transfer and think everything was fine and dandy.

What complicates the issue is the eternal problem: not everything abides to the protocol. This said, if there are frequent violators of the protocol we can’t strictly fail on each case of problem we detect but we must instead do our best to handle it anyway.

Is Content-Length a frequently violated HTTP response header?

Let’s see…

- Back in the HTTP 1.0 days, the Content-Length header was not very important as the connection was mostly shut down after each response anyway. Alas, clients/browsers would swiftly learn to just wait for the disconnect anyway.

- Back in the old days, there were cases of problems with “large files” (files larger than 2 or 4GB) which every now and then caused the Content-Length: header to turn into negative or otherwise confused values when it wrapped. That’s not really happening these days anymore.

- With HTTP 1.1 and its persuasive use of persistent connections it is important to get the size right, as otherwise the chain of requests get messed up and we end up with tears and sad faces

- In curl‘s HTTP parser we’ve always been strictly abiding to this header and we’ve bailed out hard on mismatches. This is a very rare error for users to get and based on this (admittedly unscientific data) I believe that there is not a widespread use of servers sending bad Content-Length headers.

- It seems Chrome at least in some aspects is already much more strict about this header.

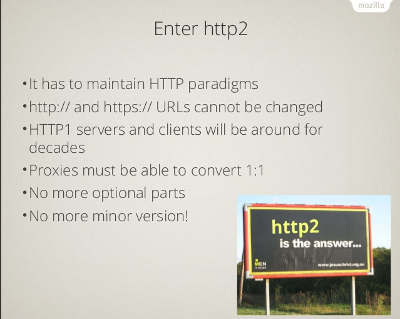

My fix for this problem takes a slightly careful approach and only enforces the strictness for HTTP 1.1 or later servers. But then as a bonus, it has grown to also signal failure if a chunked encoded transfer ends without the ending trailer or if a SPDY or http2 transfer gets prematurely stopped.

This is basically a 6-line patch at its core. The rest is fixing up old test cases, added new tests etc.

As a counter-point, Eric Lawrence apparently worked on adding stricter checks in IE9 three years ago as he wrote about in Content-Length in the Real World. They apparently subsequently added the check again in IE10 which seems to have caused some problems for them. It remains to be seen how this change affects Firefox users out in the real world. I believe it’ll be fine.

This patch also introduces the error code for a few other similar network situations when the connection is closed prematurely and we know there are outstanding data that never arrived, and I got the opportunity to improve how Firefox behaves when downloading an image and it gets an error before the complete image has been transferred. Previously (when a partial transfer wasn’t an error), it would always throw away the image on an error and instead show the “image not found” picture. That really doesn’t make sense I believe, as a partial image is better than that default one – especially when a large portion of the image has been downloaded already.

Follow-up effects

Other effects of this change that possibly might be discovered and cause some new fun reports: prematurely cut off transfers of javascript or CSS will discard the entire javascript/CSS file. Previously the partial file would be used.

Of course, I doubt that these are the files that are as commonly cut off as many other file types but still on a very slow and bad connection it may still happen and the new behavior will make Firefox act as if the file wasn’t loaded at all, instead of previously when it would happily used the portions of the files that it had actually received. Partial CSS and partial javascript of course could lead to some “fun” effects of brokenness.

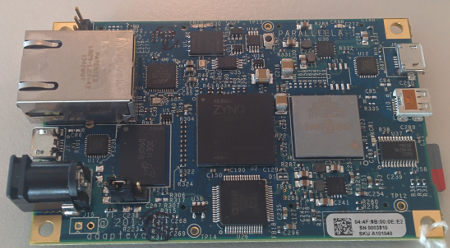

I ripped the thing out, booted up again and I could still work since my source code and OS are on the SSD. I ordered a new one at once. Phew.

I ripped the thing out, booted up again and I could still work since my source code and OS are on the SSD. I ordered a new one at once. Phew.

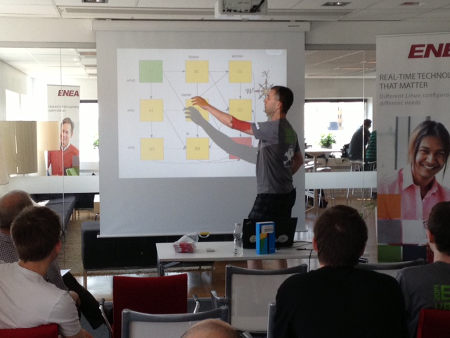

tshirt and then they found themselves a spot somewhere in the crowd and the socializing and hacking could start. I got the pleasure of loudly interrupting everyone once in a while to say welcome or point out that a talk was about to begin…

tshirt and then they found themselves a spot somewhere in the crowd and the socializing and hacking could start. I got the pleasure of loudly interrupting everyone once in a while to say welcome or point out that a talk was about to begin…