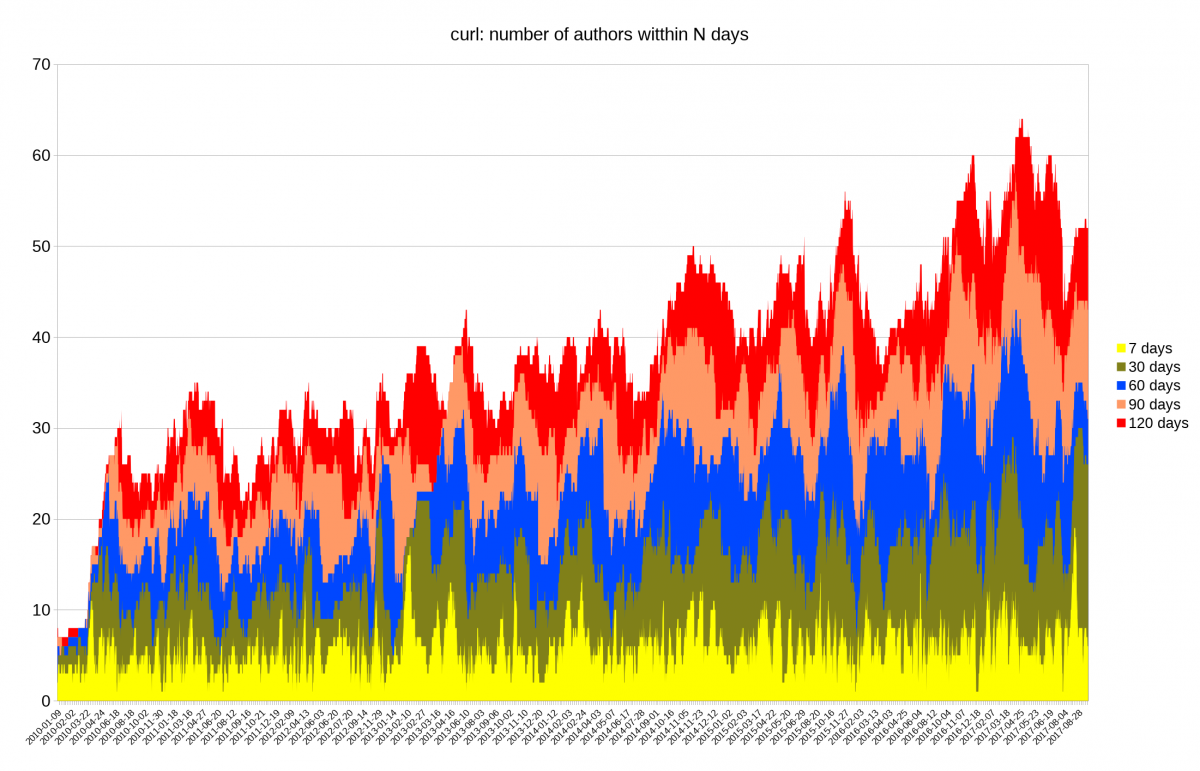

curl supports some twenty-three protocols (depending on exactly how you count).

In order to properly test and verify curl’s implementations of each of these protocols, we have a test suite. In the test suite we have a set of handcrafted servers that speak the server-side of these protocols. The more used a protocol is, the more important it is to have it thoroughly tested.

We believe in having test servers that are “stupid” and that offer buttons, levers and thresholds for us to control and manipulate how they act and how they respond for testing purposes. The control of what to send should be dictated as much as possible by the test case description file. If we want a server to send back a slightly broken protocol sequence to check how curl supports that, the server must be open for this.

In order to do this with a large degree of freedom and without restrictions, we’ve found that using “real” server software for this purpose is usually not good enough. Testing the broken and bad cases are typically not easily done then. Actual server software tries hard to do the right thing and obey standards and protocols, while we rather don’t want the server to make any decisions by itself at all but just send exactly the bytes we ask it to. Simply put.

Of course we don’t always get what we want and some of these protocols are fairly complicated which offer challenges in sticking to this policy all the way. Then we need to be pragmatic and go with what’s available and what we can make work. Having test cases run against a real server is still better than no test cases at all.

Now SOCKS

“SOCKS is an Internet protocol that exchanges network packets between a client and server through a proxy server. Practically, a SOCKS server proxies TCP connections to an arbitrary IP address, and provides a means for UDP packets to be forwarded.

(according to Wikipedia)

Recently we fixed a bug in how curl sends credentials to a SOCKS5 proxy as it turned out the protocol itself only supports user name and password length of 255 bytes each, while curl normally has no such limits and could pass on credentials with virtually infinite lengths. OK, that was silly and we fixed the bug. Now curl will properly return an error if you try such long credentials with your SOCKS5 proxy.

As a general rule, fixing a bug should mean adding at least one new test case, right? Up to this time we had been testing the curl SOCKS support by firing up an ssh client and having that setup a SOCKS proxy that connects to the other test servers.

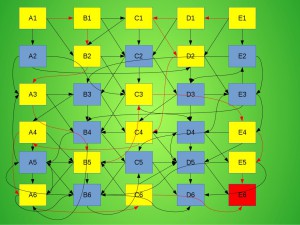

curl -> ssh with SOCKS proxy -> test server

Since this setup doesn’t support SOCKS5 authentication, it turned out complicated to add a test case to verify that this bug was actually fixed.

This test problem was fixed by the introduction of a newly written SOCKS proxy server dedicated for the curl test suite (which I simply named socksd). It does the basic SOCKS4 and SOCKS5 protocol logic and also supports a range of commands to control how it behaves and what it allows so that we can now write test cases against this server and ask the server to misbehave or otherwise require fun things so that we can make really sure curl supports those cases as well.

It also has the additional bonus that it works without ssh being present so it will be able to run on more systems and thus the SOCKS code in curl will now be tested more widely than before.

curl -> socksd -> test server

Going forward, we should also be able to create even more SOCKS tests with this and make sure to get even better SOCKS test coverage.

I’ve tried to imagine of what kind of person that would not have or use any piece of

I’ve tried to imagine of what kind of person that would not have or use any piece of

As a reaction to the whole

As a reaction to the whole  We can’t have it like that! I rolled up my imaginary sleeves (imaginary since my swag tshirt doesn’t really have sleeves) and I now offer

We can’t have it like that! I rolled up my imaginary sleeves (imaginary since my swag tshirt doesn’t really have sleeves) and I now offer

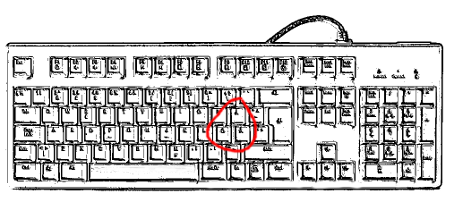

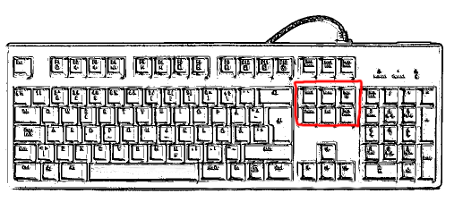

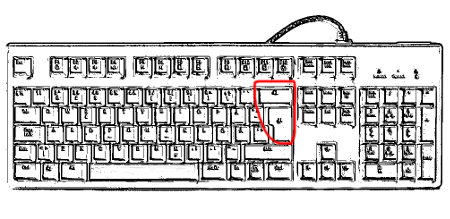

Backspace

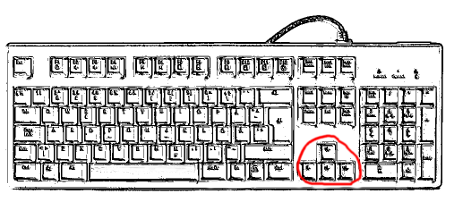

Backspace Further details

Further details