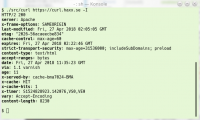

This curl release was developed and put together over a period of six weeks (two weeks less than usual). This was done to accommodate my personal travel plans – and to avoid doing a release too close to Christmas in case we would ship any security fixes, but ironically, we have no security advisories this time!

Numbers

the 178th release

3 changes

42 days (total: 7,572)

79 bug fixes (total: 4,837)

122 commits (total: 23,799)

0 new public libcurl functions (total: 80)

1 new curl_easy_setopt() option (total: 262)

0 new curl command line options (total: 219)

51 contributors, 21 new (total: 1,829)

31 authors, 14 new (total: 646)

0 security fixes (total: 84)

Changes

With the new CURLOPT_CURLU option, an application can now pass in an already parsed URL to libcurl instead of a string.

When using libcurl’s URL API, introduced in 7.62.0, the result is held in a “handle” and that handle is what now can be passed straight into libcurl when setting up a transfer.

In the command line tool, the --write-out option got the ability to optionally redirect its output to stderr. Previously it was always a given file or stdout but many people found that a bit limiting.

Interesting bug-fixes

Weirdly enough we found and fixed a few cookie related bugs this time. I say “weirdly” because you’d think this is functionality that’s been around for a long time and should’ve been battle tested and hardened quite a lot already. As usual, I’m only covering some bugs here. The full list is in the changelog!

Cookie saving – One cookie bug that we fixed was related to libcurl not saving a cookie jar when no cookies are kept in memory (any more). This turned out to be a behavior change caused by the more aggressive expiry of old cookies we introduced a while back. One user had a use case where they would load cookies from a cookie jar and then expect the cookies to be updated and written to the jar again, overwriting the old one. When no cookies were left internally, libcurl didn’t touch the file, and the application thus reread the old cookies on the next invocation. Since this was a subtle behavior change, libcurl will now save an empty jar in this situation to make sure such apps notice the blank jar.

Cookie expiry – For received cookies that get ‘Max-Age=0’ set, curl would treat the zero value the same way as any other number and therefore have the cookie continue to exist during the whole second it arrived (time() + 0 basically). The cookie RFC is actually rather clear that receiving a zero for this parameter is a special case that means the cookie should expire immediately, and now curl does that.
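The difference is easiest to see in code. This is only a sketch of the idea and not curl's actual cookie code; the function names are invented for the example, and the value 1 is used here as "a time far in the past".

```c
#include <time.h>

/* Hypothetical helper, not curl's real implementation: turn a parsed
   Max-Age value into an absolute expiry time. Zero (or less) is the
   special case: return a time long in the past so the cookie is
   already expired, instead of computing now + 0. */
time_t expiry_from_max_age(time_t now, long max_age)
{
  if(max_age <= 0)
    return (time_t)1;
  return now + (time_t)max_age;
}

/* A cookie counts as expired once its expiry time lies before `now`. */
int cookie_is_expired(time_t expires, time_t now)
{
  return expires < now;
}
```

With the naive `now + 0` computation, `cookie_is_expired()` stays false for the rest of the second in which the cookie arrived, which is the old behavior described above.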

Timeout handling – when you call curl_easy_perform() to do a transfer and ask libcurl to time out that transfer after, say, 5.1 seconds, and the transfer hasn’t completed by then because the connection is in fact totally idle, a recent regression would make libcurl not figure this out until a full 6 seconds had elapsed.

NSS – we fixed several minor issues in the NSS back-end this time. Perhaps the most important issue occurred when the installed NSS library had been built with TLS 1.3 disabled while curl was built knowing about TLS 1.3, as then things like the ‘--tlsv1.2’ option would still cause errors. Now curl will fall back correctly. Fixes were also made to make sure curl again works with NSS versions back to 3.14.

OpenSSL – session resumption works differently in TLS 1.3 than in earlier TLS versions, and curl now supports it with OpenSSL.

snprintf – curl has always had its own implementation of the *printf() family of functions for portability reasons. First, traditionally snprintf() was not universally available but then also different implementations have different support for things like 64 bit integers or size_t fields and they would disagree on return values. Since curl’s snprintf() implementation doesn’t use the same return code as POSIX or other common implementations we decided we shouldn’t use the same name so that we don’t fool readers of code into believing that they are fully compatible. For that reason, we now also “ban” the use of snprintf() in the curl code.
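To make the return value issue concrete, here is a small sketch of what the standard C99/POSIX snprintf() reports when the output doesn't fit. The function name is made up for the example. curl's own variant is, as far as the documentation goes, meant to report the length actually written instead, so treat that part as an assumption rather than something this snippet proves.

```c
#include <stdio.h>

/* Hypothetical demo function: format `text` into a buffer with room for
   only 3 characters plus the terminating zero, and return what the
   standard snprintf() reports. C99 says that is the length the full
   output would have had, not the length that was stored. */
int standard_snprintf_result(const char *text)
{
  char buf[4];
  return snprintf(buf, sizeof(buf), "%s", text);
}
```

Calling it with "hello" returns 5 even though only "hel" ends up in the buffer. Code that assumes the return value is the stored length gets this wrong, which is why reusing the name for a function with different semantics is misleading.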

URL parsing – there were several regressions from the URL parsing changes introduced in curl 7.62.0. That was the first release that offered the new URL API for applications, and we also then switched the internals to use that new code. Perhaps the funniest error was how a short name plus port number (hello:80) was accidentally treated as a “scheme” by the parser and since the scheme was unknown the URL was rejected. The numerical IPv6 address parser was also badly broken – I take the blame for not writing good enough test cases for it, which made me not realize this in time. Two related regressions that came from the URL work broke HTTP Digest auth and some LDAP transfers.
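The hello:80 confusion can be sketched like this. It is a simplified, hypothetical check and not the parser curl actually ships; the function name and the exact rule (a scheme only counts when the colon is followed by a slash) are assumptions made for the illustration.

```c
#include <ctype.h>
#include <stddef.h>

/* Hypothetical check, not curl's real parser: return the length of a
   leading scheme if `url` starts with "scheme:/", otherwise 0.
   Requiring a slash right after the colon keeps "hello:80" from being
   read as a URL using the unknown scheme "hello". */
size_t leading_scheme_length(const char *url)
{
  size_t i = 0;
  if(!isalpha((unsigned char)url[0]))
    return 0;                        /* a scheme starts with a letter */
  while(isalnum((unsigned char)url[i]) ||
        url[i] == '+' || url[i] == '-' || url[i] == '.')
    i++;                             /* letters, digits, '+', '-', '.' */
  if(url[i] == ':' && url[i + 1] == '/')
    return i;
  return 0;                          /* host:port, or no scheme at all */
}
```

With this rule "https://example.com" yields a scheme of length 5, while "hello:80" yields 0 and can go on to be treated as a host name plus port.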

DoH over HTTP/1 – DNS-over-HTTPS was simply not enabled in the build if HTTP/2 support wasn’t there, which was an unnecessary restriction and now h2-disabled builds will also be able to resolve host names using DoH.

Trailing dots in host name – an old favorite subject came back to haunt us. Starting in this version, curl will keep any trailing dot in the host name when it resolves the name, and strip it off for all the other uses where the name is passed on: for cookies, for the HTTP Host: header and for the TLS SNI field. We do this since most resolver APIs make a distinction between resolving “host” and “host.”, and we previously didn’t acknowledge or support the two versions.
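In code terms, the split described above could look something like the sketch below: the name as given goes to the resolver, and a dot-stripped copy is used for cookies, the Host: header and SNI. The helper name is invented for this example and this is not how curl's internals are organized.

```c
#include <string.h>

/* Hypothetical helper: copy `host` into `out` without a single trailing
   dot. The original string (dot included) would still be the one passed
   to the resolver. Returns 0 on success, -1 if `out` is too small. */
int host_without_trailing_dot(const char *host, char *out, size_t outlen)
{
  size_t len = strlen(host);
  if(len && host[len - 1] == '.')
    len--;                           /* drop one trailing dot only */
  if(len + 1 > outlen)
    return -1;
  memcpy(out, host, len);
  out[len] = '\0';
  return 0;
}
```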

HTTP/2 – When we enabled HTTP/2 by default for more transfers in 7.62.0, we of course knew that could force more latent bugs to float up to the surface and get noticed. We made curl understand the HTTP_1_1_REQUIRED error when received over HTTP/2 and then retry over HTTP/1.1. And if NTLM is selected as the authentication method to use, curl now forces HTTP/1 use.

Next release

We have suggested new features already lined up waiting to get merged so the next version is likely to be called 7.64.0 and it is scheduled to happen on February 6th 2019.
