Okay, you might ask, what’s the news here? We’ve been able to get HTTP response headers with curl since virtually the stone age. Yes, we have. Get the page and also show the headers:

curl -i https://example.com/

Make a HEAD request and see what headers we get back:

curl -I https://example.com/

Save the response headers in a separate file:

curl -D headers.txt https://example.com/

Get a specific header

This gets a little more complicated, but you can always do:

curl -I https://example.com/ | grep Date:

Which of course will fail if the casing is different, so you need to match case insensitively. There might also be another header whose name ends with “date:” that matches, so you need to make sure that this is an exact match by anchoring it to the start of the line:

curl -I https://example.com/ | grep -i ^Date:

Now this shows the entire header, but for most cases you only want the value. So get it with cut:

curl -I https://example.com/ | grep -i ^Date: | cut -d: -f2-

You have the header value extracted now, but the leading and trailing whitespace in the content is probably not what you want in there, so let’s strip that as well:

curl -I https://example.com/ | grep -i ^Date: | cut -d: -f2- | sed 's/^[[:space:]]*//; s/[[:space:]]*$//'
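If you end up repeating this in scripts, the pipeline could be wrapped in a small shell function. This is only a sketch: the function name header_value is made up for this example, and the canned headers stand in for real `curl -I` output so the snippet runs without a network.

```shell
# header_value NAME: read raw response headers on stdin and print the
# trimmed value of the first header called NAME (case insensitive).
# The function name is hypothetical, chosen for this example.
header_value() {
  grep -i "^$1:" | head -n 1 | cut -d: -f2- |
    sed 's/^[[:space:]]*//; s/[[:space:]]*$//'
}

# Canned headers with CRLF line endings, standing in for `curl -I` output.
# "Last-Update:" ends with "date:" but must not match.
printf 'HTTP/1.1 200 OK\r\nLast-Update: never\r\ndate: Tue, 22 Mar 2022 08:35:21 GMT\r\n\r\n' |
  header_value Date
# prints: Tue, 22 Mar 2022 08:35:21 GMT
```

Against a live server this would be `curl -sI https://example.com/ | header_value Date`. The `[[:space:]]` class also removes the carriage return that ends each HTTP header line.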

There are of course many different ways you can do this operation and some of them are more clever than the methods I’ve used here. They are still often more or less convoluted and error-prone.

If we imagine that this is a fairly common use case for curl users, then this kind of operation is duplicated in quite a few scripts, applications and devices around the world.

Maybe we could make this easier for curl users?

A headers API

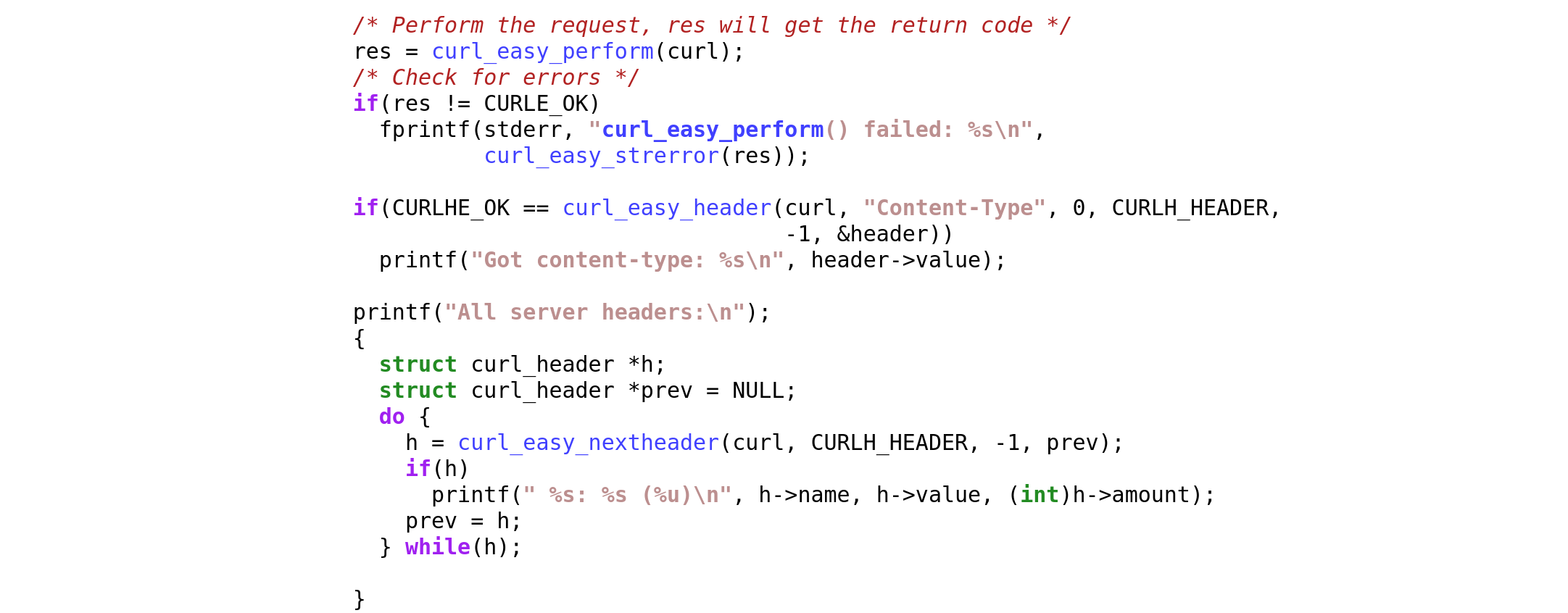

The other day we introduced a new experimental headers API to libcurl. With it, an application using libcurl gets an easy-to-use way to extract one or several headers and their content.

As curl is such a libcurl-using application, we have expanded it to make use of this new API and this brings some new fun features to the curl tool.

Let me emphasize that since this API is labeled experimental it is not enabled in a default build. You need to explicitly enable it!

Get a single header, the new way

I decided to extend the -w output feature for this.

To extract a single header and get its value with leading and trailing spaces trimmed, use %header{name}. To repeat the operation from above and get the Date: header:

curl -I -w '%header{date}' https://example.com/

‘date’ in this example is a case insensitive header name without the trailing colon and you can of course use any header name you please there. If the given header did not actually arrive in the response, it outputs nothing.

If you want more headers output, just repeat the %header{name} construct as many times as you like. If the -w output string gets unwieldy and hard to manage on the command line, then make it into a text file instead and tell -w about it with -w @filename.

curl -I -w @filename https://example.com/
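As an illustration, such a format file could be created like this. The file name format.txt and the labels in front of each value are arbitrary choices for this sketch; the header names are ones that example.com happens to send.

```shell
# Write a -w format file; "format.txt" is an arbitrary name chosen here.
# The quoted 'EOF' keeps the shell from expanding anything in the body.
cat > format.txt <<'EOF'
date: %header{date}
server: %header{server}
content-type: %header{content-type}
EOF

# Then, with a curl build that has the headers API enabled:
#   curl -I -w @format.txt https://example.com/
```

Text outside the %header{name} constructs, including the line breaks, is output as-is, so each value ends up on its own labeled line.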

Which headers?

There are several different kinds of headers and there can be multiple requests used for a transfer, but this option outputs the “normal” server response headers from the most recent request done. The option only works for HTTP(S) responses.

All headers – as JSON

As dealing with formatted data in the form of JSON has become very popular, I want to help fertilize this by making curl able to output all response headers as a JSON object.

This way, you can move the header handling, parsing and perhaps filtering to your JSON aware tool.

Tell curl to output the received HTTP headers as a JSON object:

curl -o save -w "%{header_json}" https://example.com/

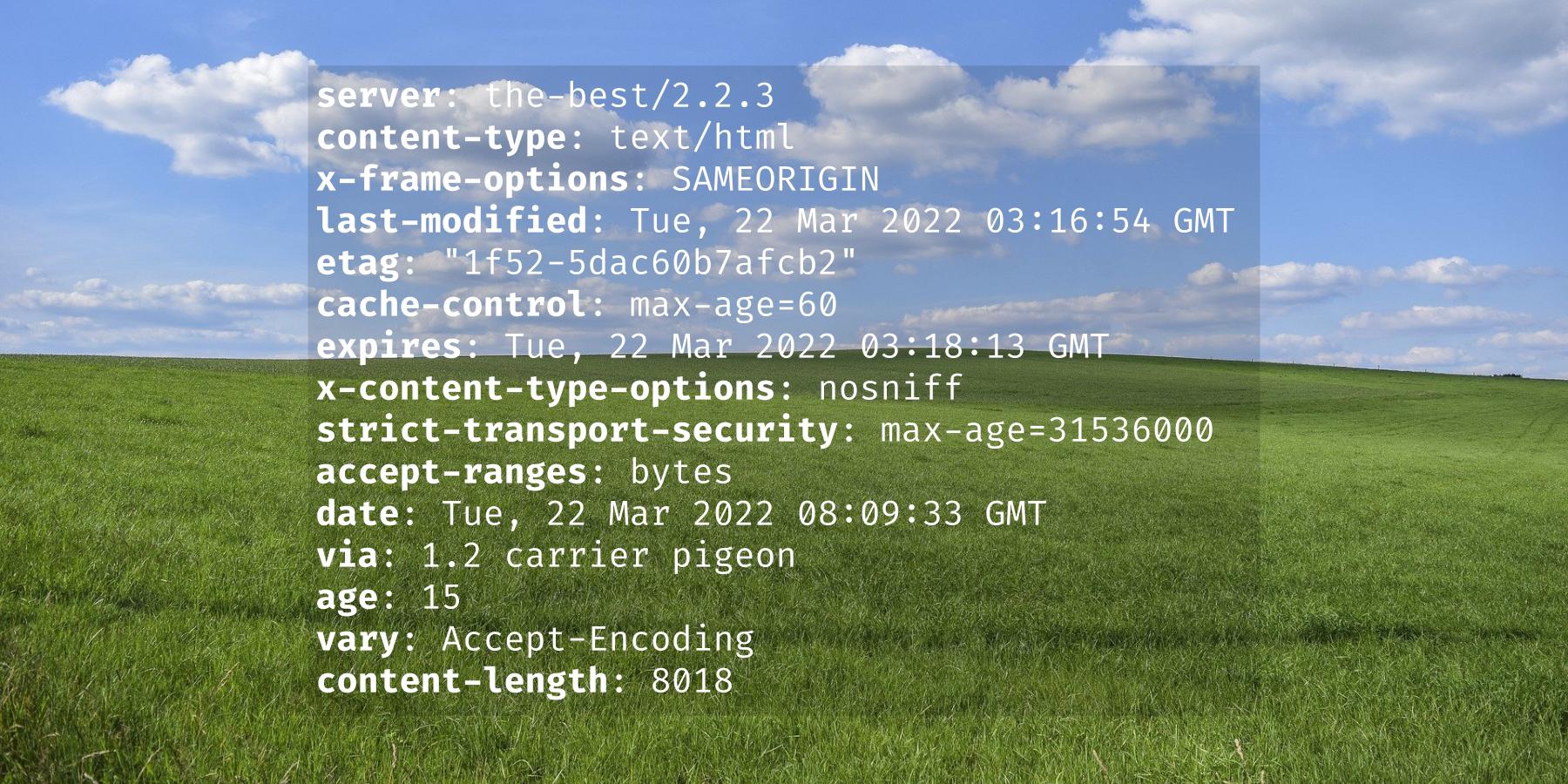

curl itself does not pretty-print this, but if you pass the JSON from curl to a beautifier such as jq, the output ends up looking like this:

{
  "age": [
    "269578"
  ],
  "cache-control": [
    "max-age=604800"
  ],
  "content-type": [
    "text/html; charset=UTF-8"
  ],
  "date": [
    "Tue, 22 Mar 2022 08:35:21 GMT"
  ],
  "etag": [
    "\"3147526947+ident\""
  ],
  "expires": [
    "Tue, 29 Mar 2022 08:35:21 GMT"
  ],
  "last-modified": [
    "Thu, 17 Oct 2019 07:18:26 GMT"
  ],
  "server": [
    "ECS (nyb/1D2E)"
  ],
  "vary": [
    "Accept-Encoding"
  ],
  "x-cache": [
    "HIT"
  ],
  "content-length": [
    "1256"
  ]
}

JSON details

The headers are presented in the same order as received over the wire, except when there are duplicated header names: those are grouped at the first occurrence and all their values are provided there as a JSON array.

All headers are arrays simply because there can be multiple headers using the same name.

The casing of the header names is kept unmodified from what was received, but for duplicated headers the casing used for the first occurrence will be used in the output.

Update: we lowercase all header names in the JSON output.
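Because every value is an array and the names are lowercased, a consumer has to index into the array and look names up in lowercase. Here is a minimal sketch using jq. The helper name first_header is made up, and the sample JSON (including the set-cookie values) is canned data for illustration, not output from a real server.

```shell
# first_header NAME: read curl's %{header_json} output on stdin and print
# the first value of header NAME, or nothing if it is absent.
# Hypothetical helper for this example; requires jq.
first_header() {
  jq -r --arg name "$1" '.[$name | ascii_downcase] // [] | .[0] // empty'
}

# Canned sample in the shape curl produces; the set-cookie values are made up.
json='{"date":["Tue, 22 Mar 2022 08:35:21 GMT"],"set-cookie":["a=1","b=2"]}'

# One header, first value only.
printf '%s' "$json" | first_header Date

# A repeated header: print every value, one per line.
printf '%s' "$json" | jq -r '."set-cookie"[]'
```

With a live transfer the input would come from curl instead, for example `curl -s -o save -w "%{header_json}" https://example.com/ | first_header date`.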

The “status line” of an HTTP 1.x response, that first line that says “HTTP/1.1 200 OK” when everything is fine, is not counted as a header by this function and will therefore not be included in this output.

Ships in 7.83.0

This feature is present in source code that will ship in curl 7.83.0, which is scheduled for release in late April 2022. Run your own build with it enabled, or ask your packager to provide an experimental build for you.

With enough positive feedback we should be able to move this out of experimental state fairly quickly.