The webinar from June 30, now on video. The slides are here.

All posts by Daniel Stenberg

curl 7.71.1 – try again

This is a follow-up patch release a mere week after the grand 7.71.0 release. While we added a few minor regressions in that release, one of them was significant enough to make us decide to fix and ship an update sooner rather than later. I’ll elaborate below.

Every early patch release we do is a minor failure in our process as it means we shipped annoying/serious bugs. That of course tells us that we didn’t test all features and areas well enough before the release. I apologize.

Numbers

the 193rd release

0 changes

7 days (total: 8,139)

18 bug fixes (total: 6,227)

32 commits (total: 25,943)

0 new public libcurl function (total: 82)

0 new curl_easy_setopt() option (total: 277)

0 new curl command line option (total: 232)

16 contributors, 8 new (total: 2,210)

5 authors, 2 new (total: 805)

0 security fixes (total: 94)

0 USD paid in Bug Bounties

Bug-fixes

compare cert blob when finding a connection to reuse – when specifying the client cert to libcurl as a “blob”, it needs to compare that when it subsequently wants to reuse a connection, as curl already does when specifying the certificate with a file name.

curl_easy_escape: zero length input should return a zero length output – a regression when I switched over the logic to use the new dynbuf API: I inadvertently modified behavior for escaping an empty string which then broke applications. Now verified with a new test.

set the correct URL in pushed HTTP/2 transfers – the CURLINFO_EFFECTIVE_URL variable previously didn’t work for pushed streams. They would all just claim to be the parent stream’s URL.

fix HTTP proxy auth with blank password – another dynbuf conversion regression that now is verified with a new test. curl would pass in “(nil)” instead of a blank string (“”).

terminology: call them null-terminated strings – after discussions and an informal twitter poll, we’ve rephrased all documentation for libcurl to use the phrase “null-terminated strings” and nothing else.

allow user + password to contain “control codes” for HTTP(S) – previously byte values below 32 would maybe work but not always. Someone with a newline in the user name reported a problem. It can be noted that those kinds of characters will not work in the credentials for most other protocols curl supports.

Reverted the implementation of “wait using winsock events” – another regression that apparently wasn’t tested well enough before it landed, and we take the opportunity here to move back to the solution we had before. This change will probably take another round and aim to land in better shape in the future.

ngtcp2: sync with current master – interestingly enough, the ngtcp2 project managed to yet again update their API exactly this week between these two curl releases. This means curl 7.71.1 can be built against the latest ngtcp2 code to speak QUIC and HTTP/3.

In parallel with that ngtcp2 sync, I also ran into a new problem with BoringSSL’s master branch that is fixed now. Timely for us, as we can now also boast about having the quiche backend in sync and speaking HTTP/3 fine with the latest and most up-to-date software.

Next

We have not updated the release schedule. This means we will have almost three weeks for merging new features coming up then four weeks of bug-fixing only until we ship another release on August 19 2020. And on and on we go.

curl ootw: --remote-time

Previous command line options of the week.

--remote-time is a boolean flag using the -R short option. This option was added to curl 7.9 back in September 2001.

Downloading a file

One of the most basic curl use cases is “downloading a file”. That is when the URL identifies a specific remote resource and the command line transfers the data of that resource to the local file system:

curl https://example.com/file -O

This command line will then copy every single byte of that file and create a duplicated resource locally – with a time stamp using the current time. Having this time stamp as a default seems natural as it was created just now and it makes it work fine with other options such as --time-cond.

Use the remote file’s time stamp please

There are times when you would rather have the download get the exact same modification date and time as the remote file has. We made --remote-time do that.

By adding this command line option, curl will figure out the exact date and time of the remote file and set that same time stamp on the file it creates locally.

This option works with several protocols, including FTP, but there are and will be many situations in which curl cannot figure out the remote time – sometimes simply because the server won’t tell – and then curl will simply not be able to copy the time stamp and it will instead keep the current date and time.
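As a minimal sketch of the above, the option is simply added to an ordinary download. The helper name `download_keep_mtime` and the URL are placeholders made up for this illustration, not something curl provides.

```shell
# Hypothetical helper: download a URL and ask curl to copy the remote
# file's modification time onto the local copy (when the server tells).
download_keep_mtime() {
  # $1 = URL, $2 = local file name
  curl --silent --show-error --remote-time --output "$2" "$1"
}

# Example use (placeholder URL):
#   download_keep_mtime https://example.com/file file
#   ls -l file    # shows the remote time stamp if curl could get it
```

If the server does not reveal the remote time, the local file simply ends up with the current date and time, as described above.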

Not by default

This option is not on by default because:

- curl mimics known tools like cp, which create a new time stamp by default.

- For some protocols it requires an extra operation which then can be avoided if the time stamp isn’t actually used for anything.

Combine this with…

As mentioned briefly above, the --remote-time command line option can be really useful to combine with the --time-cond flag. An example of a practical use case for this is a command line that you can invoke repeatedly, but only downloads the new file in case it was updated remotely since the previous time it was downloaded! Like this:

curl --remote-name --time-cond cacert.pem https://curl.haxx.se/ca/cacert.pem

This particular example comes from curl’s CA extract web page and downloads the latest Mozilla CA store as a PEM file.
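The command line above relies on the local file’s own time stamp for the comparison. A sketch that also passes --remote-time, so that the local time stamp mirrors the server’s, could look like this. The helper name `refresh_file` is hypothetical and the first-run branch is just one way to handle a missing local file.

```shell
# Hypothetical helper: re-download a file only if the remote copy is newer
# than the local one, and keep the remote time stamp on the local copy.
refresh_file() {
  # $1 = URL, $2 = local file name
  if [ -f "$2" ]; then
    # --time-cond given a file name uses that file's modification time
    curl --silent --show-error --remote-time --time-cond "$2" --output "$2" "$1"
  else
    # First run: nothing to compare against, so just download
    curl --silent --show-error --remote-time --output "$2" "$1"
  fi
}

# Safe to invoke repeatedly, for example from cron:
#   refresh_file https://curl.haxx.se/ca/cacert.pem cacert.pem
```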

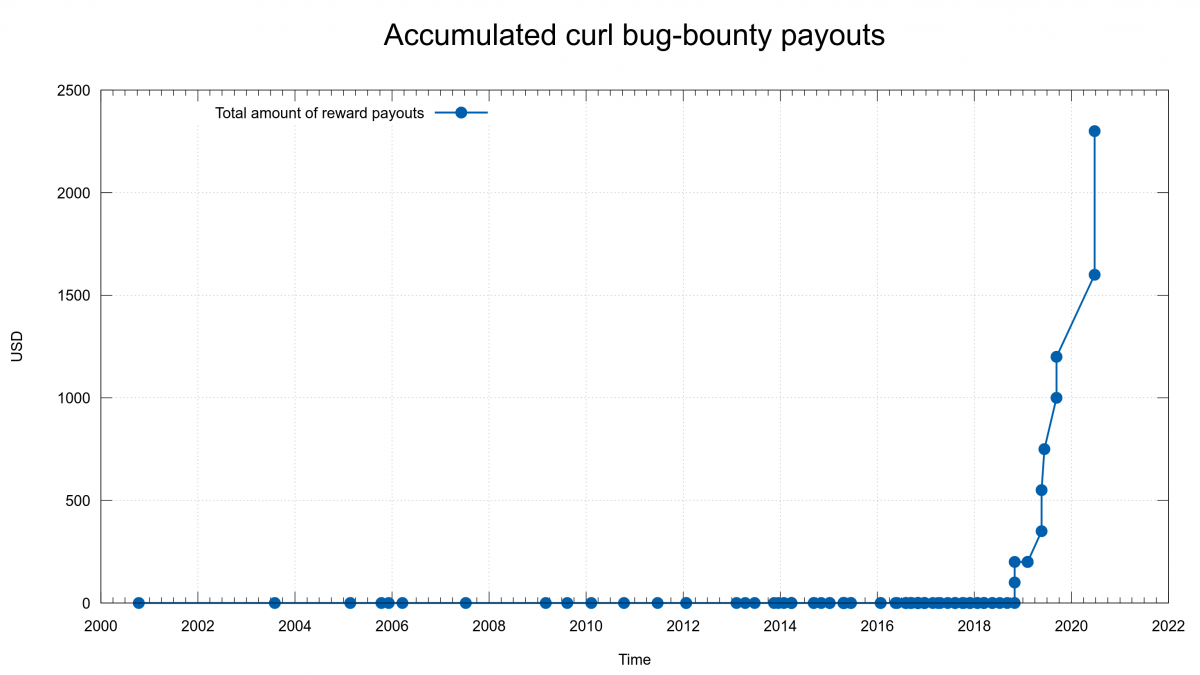

bug-bounty reward amounts in curl

A while ago I tweeted the good news that we’ve handed over our largest single monetary reward yet in the curl bug-bounty program: 700 USD. We announced this security problem in association with the curl 7.71.0 release the other day.

Someone responded to me and wanted this clarified: we award 700 USD to someone for reporting a curl bug that potentially affects users on virtually every computer system out there – while Apple just days earlier awarded a researcher 100,000 USD for an Apple-specific security flaw.

The difference in “amplitude” is notable.

A bug-bounty

I think first we should start with appreciating that we have a bug-bounty program at all! Most open source projects don’t, and we didn’t have any program like this for the first twenty or so years. Our program is just getting started and we’re getting up to speed.

Donations only

How can we in the curl project hand out any money at all? We get donations from companies and individuals. This is the only source of funds we have. We can only give away rewards if we have enough donations in our fund.

When we started the bug-bounty, we also rather recently had started to get donations (to our Open Collective fund) and we were careful to not promise higher amounts than we would be able to pay, as we couldn’t be sure how many problems people would report and exactly how it would take off.

The more donations the larger the rewards

Over time it has gradually become clear that we’re getting donations at a level and frequency that far surpasses what we’re handing out as bug-bounty rewards. As a direct result of that, we’ve agreed in the curl security team to increase the amounts.

For all security reports we get now that end up in a confirmed security advisory, we will increase the handed out award amount – until we reach a level we feel we can be proud of and stand for. I think that level should be more than 1,000 USD even for the lowest graded issues – and maybe ten times that amount for an issue graded “high”. We will however never get even within a few orders of magnitude of what the giants can offer.

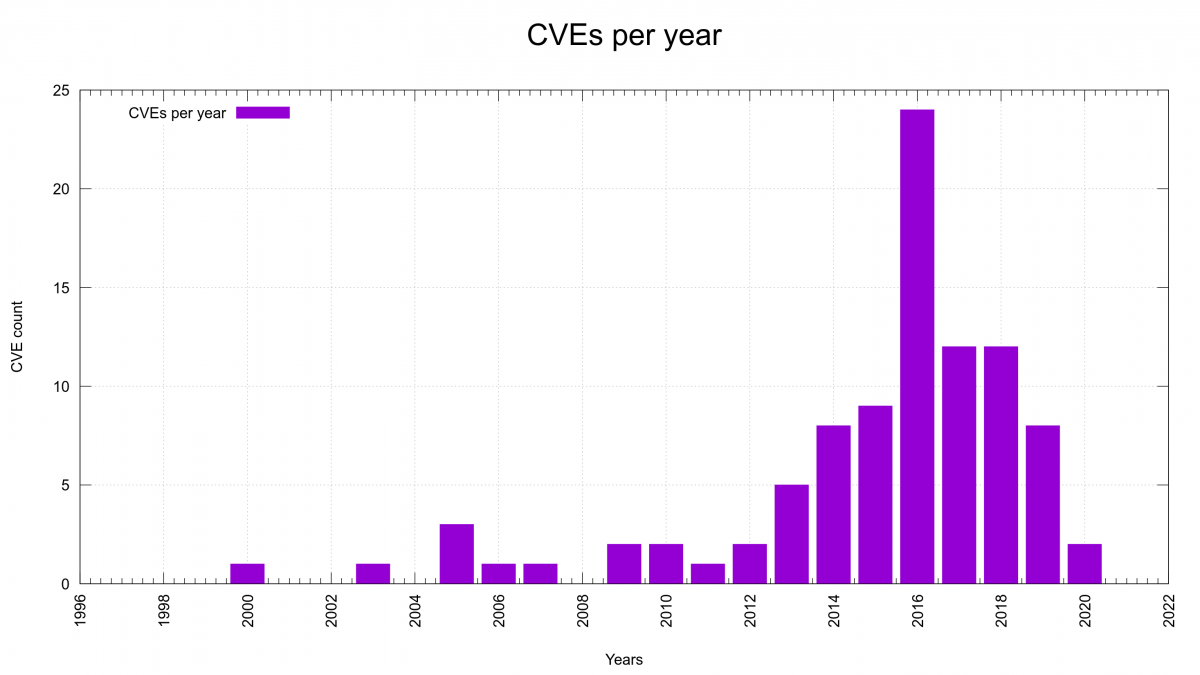

Are we improving security-wise?

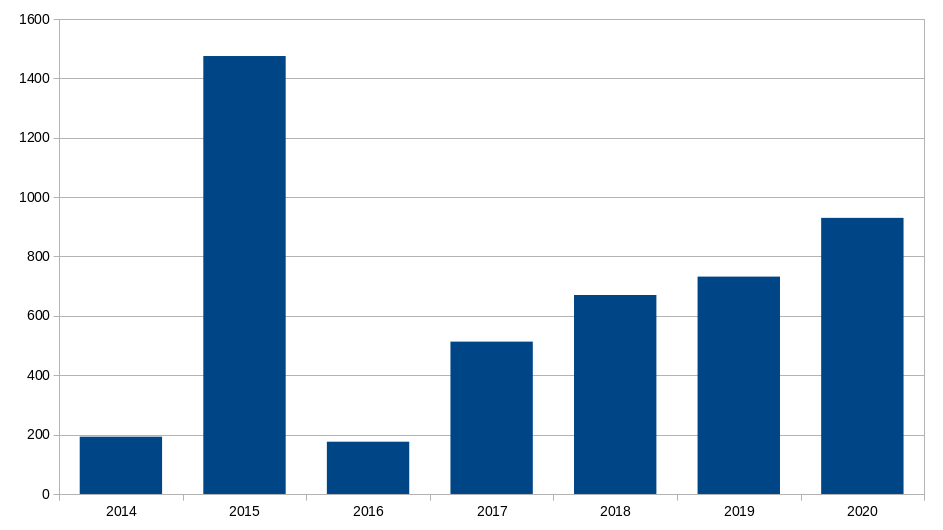

The graph with number of reported CVEs per year shows that we started to get a serious number of reports in 2013 (5 reports) and it also seems to show that we’ve passed the peak. I’m not sure we have enough data and evidence to back this up, but I’m convinced we do a lot of things much better in the project now that should help to keep the number of reports down going forward. In a few years when we look back we can see if I was right.

We’re at mid year 2020 now with only two reports so far, which if we keep this rate will make this the best CVE-year after 2012. This, while we offer more money than ever for reported issues and we have a larger amount of code than ever to find problems in.

The companies surf along

One company suggests that they will chip in and pay for an increased curl bug bounty if the problem affects their use case, but for some reason the problems just never seem to affect them and I’ve pretty much stopped bothering to even ask them.

curl is shipped with a large number of operating systems and in a large number of applications, yet not even the large-volume users participate in the curl bug bounty program. They leave it to us (and they rarely even donate). Perhaps you can report curl security issues to them and have a chance of a higher reward?

You would possibly imagine that these companies should be keen on helping us out to indirectly secure users of their operating systems and applications, but no. We’re an open source project. They can use our products for free and they do, and our products improve their end products. But if there’s a problem in our stuff, that issue is ours to sort out and fix and those companies can then subsequently upgrade to the corrected version…

This is not a complaint, just an observation. I personally appreciate the freedom this gives us.

What can you do to help?

Help us review code. Report bugs. Report all security related problems you can find or suspect exist. Get your company to sponsor us. Write awesome pull requests that improve curl and the way it does things. Use curl and libcurl in your programs and projects. Buy commercial curl support from the best and only provider of commercial curl support.

curl 7.71.0 – blobs and retries

Welcome to the “prose version” of the curl 7.71.0 change log. It has been just eight short weeks since I last blogged about a curl release but here we are again and there’s quite a lot to say about this new one.


Numbers

the 192nd release

4 changes

56 days (total: 8,132)

136 bug fixes (total: 6,209)

244 commits (total: 25,911)

0 new public libcurl function (total: 82)

7 new curl_easy_setopt() option (total: 277)

1 new curl command line option (total: 232)

59 contributors, 33 new (total: 2,202)

33 authors, 17 new (total: 803)

2 security fixes (total: 94)

1,100 USD paid in Bug Bounties

Security

CVE-2020-8169 Partial password leak over DNS on HTTP redirect

This is a nasty bug in user credential handling when doing authentication and HTTP redirects, which can lead to a part of the password being prepended to the host name when doing name resolving, thus leaking it over the network and to the DNS server.

This bug was reported and we fixed it in public – and then someone else pointed out the security angle of it! Just shows my lack of imagination. As a result, even though this was a bug already reported – and fixed – and therefore technically not subject to a bug bounty, we decided to still reward the reporter, just maybe not with the full amount this would otherwise have received. We awarded the reporter 400 USD.

CVE-2020-8177 curl overwrite local file with -J

When curl -J is used it doesn’t work together with -i and there’s a check that prevents it from getting used. The check was flawed and could be circumvented, with the effect that a server that provides a file name in a Content-Disposition: header could overwrite a local file, since the check for an existing local file was done in the code for receiving a body – as -i wasn’t supposed to work… We awarded the reporter 700 USD.

Changes

We’re counting four “changes” this release.

CURLSSLOPT_NATIVE_CA – this is a new (experimental) flag that allows libcurl on Windows, built to use OpenSSL, to use the Windows native CA store when verifying server certificates. See CURLOPT_SSL_OPTIONS. This option is marked experimental as we didn’t decide in time exactly how this new ability should relate to the existing CA store path options, so if you have opinions on this you know we’re interested!

CURLOPT-BLOBs – a new series of certificate related options have been added to libcurl. They all take blobs as arguments, which are basically just a memory area with a given size. These new options add the ability to provide certificates to libcurl entirely in memory without using files. See for example CURLOPT_SSLCERT_BLOB.

CURLOPT_PROXY_ISSUERCERT – turns out we were missing the proxy version of CURLOPT_ISSUERCERT so this completed the set. The proxy version is used for HTTPS-proxy connections.

--retry-all-errors is the new blunt tool of retries. It tells curl to retry the transfer for all and any error that might occur. For the cases where just --retry isn’t enough and you know it should work and retrying can get it through.
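A small sketch of how the new flag might be used from a script. It is given together with --retry, which sets the number of attempts. The helper name `fetch_with_retries`, the retry count and the delay are illustrative assumptions, not recommendations.

```shell
# Hypothetical helper: retry a download on any error, up to five times.
fetch_with_retries() {
  # $1 = URL, $2 = local file name
  # --retry sets the number of retries; --retry-all-errors (7.71.0+)
  # widens what counts as retryable to every error.
  curl --silent --show-error \
       --retry 5 --retry-delay 2 --retry-all-errors \
       --output "$2" "$1"
}

# Example use (placeholder URL):
#   fetch_with_retries https://example.com/file file
```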

Interesting bug-fixes

This is yet another release with over a hundred and thirty different bug-fixes. Of course all of them have their own little story to tell but I need to filter a bit to be able to do this blog post. Here are my collected favorites, in no particular order…

- Bug-fixed happy eyeballs – turns out the happy eyeballs algorithm for doing parallel dual-stack connections (also for QUIC) still had some glitches…

- Curl_addrinfo: use one malloc instead of three – another little memory allocation optimization. When we allocate memory for DNS cache entries and more, we now allocate the full struct in a single larger allocation instead of the previous three separate smaller ones. Another little cleanup.

- options-in-versions – this is a new document shipped with curl, listing exactly which curl version added each command line option that exists today. Should help everyone who wants their curl-using scripts to work on their uncle’s ancient setup.

- dynbuf – we introduced new internal generic dynamic buffer functions to take care of dynamic buffers, growing and shrinking them. We basically simplified and reduced the number of different implementations into a single one with better checks and stricter controls. The internal API is documented.

- on macOS avoid DNS-over-HTTPS when given a numerical IP address – this bug made for example FTP using DoH fail on macOS. The reason this is macOS-specific is that it is the only OS on which we call the name resolving functions even for numerical-only addresses.

- http2: keep trying to send pending frames after req.upload_done – HTTP/2 turned 5 years old in May 2020 but we can still find new bugs. This one was a regression that broke uploads in some conditions.

- qlog support – for the HTTP/3 cowboys out there. This makes curl generate QUIC related logs in the directory specified with the environment variable QLOGDIR.

- OpenSSL: have CURLOPT_CRLFILE imply CURLSSLOPT_NO_PARTIALCHAIN – another regression that had broken CURLOPT_CRLFILE. Two steps forward, one step back.

- openssl: set FLAG_TRUSTED_FIRST unconditionally – with this flag set unconditionally curl works around the issue with OpenSSL versions before 1.1.0 when it would have problems if there are duplicate trust chains and one of the chains has an expired cert. The AddTrust issue.

- fix expected length of SOCKS5 reply – my recent SOCKS overhaul and improvements brought this regression with SOCKS5 authentication.

- detect connection close during SOCKS handshake – the same previous overhaul also apparently made the SOCKS handshake logic not correctly detect closed connection, which could lead to busy-looping and using 100% CPU for a while…

- add https-proxy support to the test suite – Finally it happened. And a few new test cases for it were also then subsequently provided.

- close connection after excess data has been read – a very simple change that begged the question why we didn’t do it before! If a server provides more data than what it originally told it was gonna deliver, the connection is now marked for closure and won’t be re-used. Such a re-use would usually just fail miserably anyway.

- accept “any length” credentials for proxy auth – we had some old limits of 256 byte name and password for proxy authentication lingering for no reason – and yes a user ran into the limit. This limit is now gone and was raised to… 8MB per input string.

- allocate the download buffer at transfer start – just a more clever way to allocate (and free) the download buffers, to only have them around when they’re actually needed and not longer. Helps reduce the amount of run-time memory curl needs and uses.

- accept “::” as a valid IPv6 address – the URL parser was a tad bit too strict…

- add SSLKEYLOGFILE support for wolfSSL – SSLKEYLOGFILE is a lovely tool to inspect curl’s TLS traffic. Now also available when built with wolfSSL.

- enable NTLM support with wolfSSL – yeps, as simple as that. If you build curl with wolfSSL you can now play with NTLM and SMB!

- move HTTP header storage to Curl_easy from connectdata – another one of those HTTP/2 related problems that surprised me still was lingering. Storing request-related data in the connection-oriented struct is a bad idea as this caused a race condition which could lead to outgoing requests with mixed up headers from another request over the same connection.

- CODE_REVIEW: how to do code reviews in curl – thanks to us adding this document, we could tick off the final box and we are now at gold level…

- leave the HTTP method untouched in the set.* struct – when libcurl was told to follow an HTTP redirect and the response code would tell libcurl to change the method, that new method would be set in the easy handle in a way so that if the handle was re-used at that point, the updated and not the original method would be used – contrary to documentation and how libcurl otherwise works.

- treat literal IPv6 addresses with zone IDs as a host name – the curl tool could mistake a given numerical IPv6 address with a “zone id” containing a dash as a “glob” and return an error instead…
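Two of the items in the list above, qlog and SSLKEYLOGFILE, are driven by environment variables. A rough sketch of setting both for a single transfer follows. The helper name `run_with_logs` and the file layout under the log directory are made up for this example, and each variable only has an effect if the curl build in use supports the feature.

```shell
# Hypothetical helper: run one curl command with TLS key logging and
# QUIC qlog output enabled through environment variables.
run_with_logs() {
  # $1 = directory for the logs, remaining arguments are passed to curl
  logdir=$1
  shift
  mkdir -p "$logdir/qlog" || return 1
  # The variables are set for this one curl invocation only
  SSLKEYLOGFILE="$logdir/tls-keys.log" QLOGDIR="$logdir/qlog" curl "$@"
}

# Example use (placeholder URL; --http3 needs an HTTP/3-enabled build):
#   run_with_logs /tmp/curl-logs --http3 https://example.com/
```

Keep in mind that a key log file holds the session secrets for the logged TLS connections, so treat it like any other sensitive file.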

Coming up

There are more changes coming and some PR are already pending waiting for the feature window to open. Next release is likely to become version 7.72.0 and have some new features. Stay tuned!

curl ootw: --connect-timeout

--connect-timeout [seconds] was added in curl 7.7 and has no short option version. The number of seconds for this option can (since 7.32.0) be specified using decimals, like 2.345.

How long to allow something to take?

curl shipped with support for the -m option already from the start. That limits the total time a user allows the entire curl operation to spend.

However, if you’re about to do a large file transfer and you don’t know how fast the network will be, how do you know how much time to allow the operation to take? In a lot of situations, you then end up basically adding a huge margin. Like:

This operation usually takes 10 minutes, but what if everything is super overloaded at the time, let’s allow it 120 minutes to complete.

Nothing really wrong with that, but sometimes you end up noticing that something in the network or the remote server just dropped the packets and the connection wouldn’t even complete the TCP handshake within the given time allowance.

If you want your shell script to loop and try again on errors, spending 120 minutes for every lap makes it a very slow operation. Maybe there’s a better way?

Introducing the connect timeout

To help combat this problem, the --connect-timeout is a way to “stage” the timeout. This option sets the maximum time curl is allowed to spend on setting up the connection. That involves resolving the host name, connecting TCP and doing the TLS handshake. If curl hasn’t reached its “established connection” state before the connect timeout limit has been reached, the transfer will be aborted and an error is returned.

This way, you can for example allow the connect procedure to take no more than 21 seconds, but then allow the rest of the transfer to go on for a total of 120 minutes if the transfer just happens to be terribly slow.

Like this:

curl --connect-timeout 21 --max-time 7200 https://example.com
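For the looping shell script mentioned earlier, a sketch could look like the following. The helper name `fetch_staged` and the limit of three attempts are assumptions for illustration only. With the staged timeouts, a lap that fails during connection setup costs at most 21 seconds rather than the full 120 minutes.

```shell
# Hypothetical helper: try a transfer up to three times, giving up quickly
# on connection setup but allowing a slow transfer plenty of time.
fetch_staged() {
  # $1 = URL, $2 = local file name
  attempt=1
  while [ "$attempt" -le 3 ]; do
    # 21 seconds to connect, 7200 seconds (120 minutes) in total
    if curl --silent --show-error \
            --connect-timeout 21 --max-time 7200 \
            --output "$2" "$1"; then
      return 0
    fi
    attempt=$((attempt + 1))
  done
  return 1
}

# Example use (placeholder URL):
#   fetch_staged https://example.com/bigfile bigfile || echo "gave up"
```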

Sub-second

You can even set the connection timeout to be less than a second (with the exception of some special builds that aren’t very common) with the use of decimals.

Require the connection to be established within 650 milliseconds:

curl --connect-timeout 0.650 https://example.com

Just note that DNS, TCP and the local network conditions etc at the moment you run this command line may vary greatly, so maybe restricting the connection time a lot will have the effect that it sometimes aborts a connection a little too easily. Just beware.

A connection that stalls

If you prefer a way to detect and abort transfers that stall after a while (but maybe long before the maximum timeout is reached), you might want to look into using --speed-limit together with --speed-time.

Also, if a connection goes down to zero bytes/second for a period of time, as in it doesn’t send any data at all, and you still want your connection and transfer to survive that, you might want to make sure that you have your --keepalive-time set correctly.
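A sketch of what stall detection might look like with curl’s --speed-limit and --speed-time options, combined with --keepalive-time. The helper name `fetch_no_stall` and all the numbers are illustrative assumptions, to be tuned for the network in question.

```shell
# Hypothetical helper: abort a transfer that stays below 1000 bytes/second
# for 30 seconds, and send TCP keepalive probes after 60 idle seconds.
fetch_no_stall() {
  # $1 = URL, $2 = local file name
  curl --silent --show-error \
       --speed-limit 1000 --speed-time 30 \
       --keepalive-time 60 \
       --max-time 7200 \
       --output "$2" "$1"
}

# Example use (placeholder URL):
#   fetch_no_stall https://example.com/bigfile bigfile
```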

webinar: testing curl for security

Alternative title: “testing, QA, CI, fuzzing and security in curl”

June 30 2020, at 10:00 AM in Pacific Time (17:00 GMT, 19:00 CEST).

Time: 30-40 minutes

Abstract: curl runs in some ten billion installations in the world, in virtually every connected device on the planet and ported to more operating systems than most. In this presentation, curl’s lead developer Daniel Stenberg talks about how the curl project takes on testing, QA, CI and fuzzing etc, to make sure curl remains a stable and secure component for everyone while still getting new features and getting developed further. With a Q&A session at the end for your questions!

Register here to attend the live event. The video will be made available afterward.

curl user survey 2020 analysis

In 2020, the curl user survey ran for the 7th consecutive year. It ended on May 31 and this year we managed to get feedback donated by 930 individuals.

Analysis

Analyzing this huge lump of data, comments and shared experiences is a lot of work and I’m sorry it’s taken me several weeks to complete it. I’m happy to share this 47 page PDF document here with you:

curl user survey 2020 analysis

If you have questions on the content or find mistakes or things looking odd in the data or graphs, do let me know!

If you want to help out to do a better survey or analysis next year, I hope you know that you’d be much appreciated…

QUIC with wolfSSL

We have started the work on extending wolfSSL to provide the necessary API calls to power QUIC and HTTP/3 implementations!

Small, fast and FIPS

The TLS library known as wolfSSL is already very often a top choice when users are looking for a small and yet very fast TLS stack that supports all the latest protocol features; including TLS 1.3 support – open source with commercial support available.

As manufacturers of IoT devices and other systems with memory, CPU and footprint constraints are looking forward to following the Internet development and switching over to upcoming QUIC and HTTP/3 protocols, wolfSSL is here to help users take that step.

A QUIC reminder

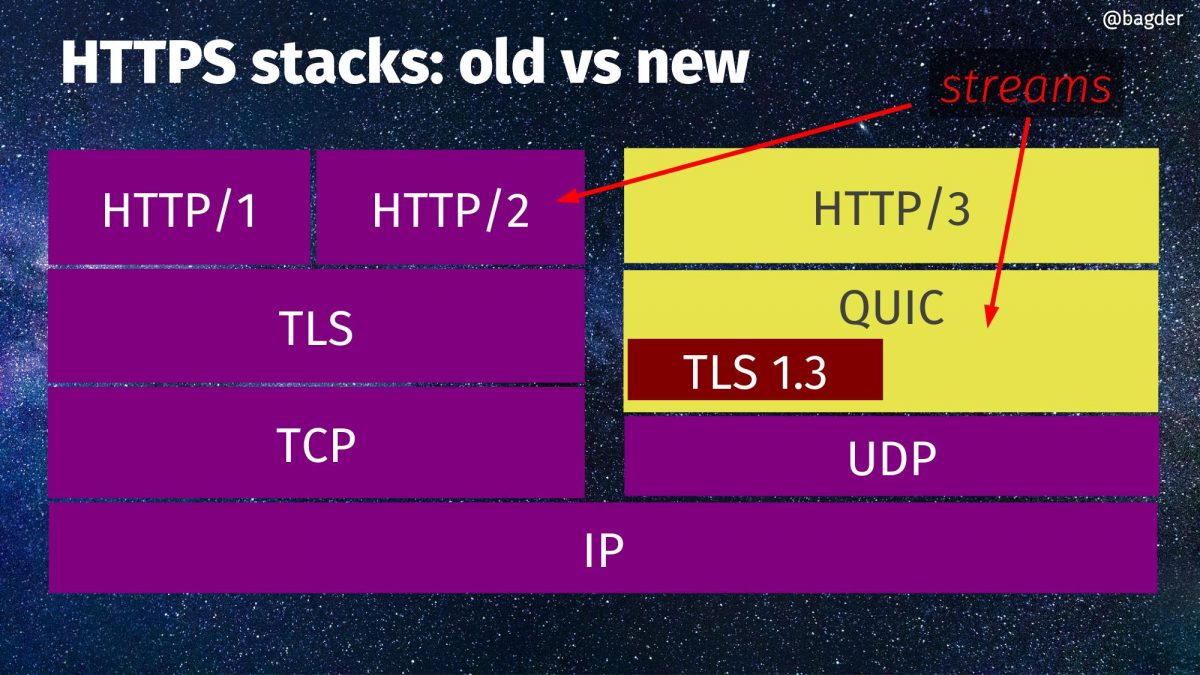

In case you have forgotten, here’s a schematic view of HTTPS stacks, old vs new. On the right side you can see HTTP/3, QUIC and the little TLS 1.3 box there within QUIC.

ngtcp2

There are no plans to write a full QUIC stack. There are already plenty of those. We’re talking about adjustments and extensions of the existing TLS library API set to make sure wolfSSL can be used as the TLS component in a QUIC stack.

One of the leading QUIC stacks, and so far the only one I know of that does this, ngtcp2 is written to be TLS library agnostic and allows different TLS libraries to be plugged in as different backends. I believe making such a plugin for wolfSSL is a sensible step as soon as there’s code to try out.

A neat effect of that would be that once wolfSSL works as a backend to ngtcp2, it should be possible to do full-fledged HTTP/3 transfers using curl powered by ngtcp2+wolfSSL. Contact us with other ideas for QUIC stacks you would like us to test wolfSSL with!

FIPS 140-2

We expect wolfSSL to be the first FIPS-based implementation to add support for QUIC. I hear this is valuable to a number of users.

When

This work begins now and this is just a blog post of our intentions. We and I will of course love to get your feedback on this and whatever else that is related. We’re also interested to get in touch with people and companies who want to be early testers of our implementation. You know where to find us!

I can promise you that the more interest we can sense to exist for this effort, the sooner we will see the first code to test out.

It seems likely that we’re not going to support any older TLS drafts for QUIC than draft-29.

curl meets gold level best practices

About four years ago I announced that curl was 100% compliant with the CII Best Practices criteria. curl was one of the first projects on that train to reach a 100% – primarily of course because we were early joiners and participants of the Best Practices project.

The point of that was just to highlight and underscore that we do everything we can in the curl project to act as a responsible open source project and citizen of the larger ecosystem. You should be able to trust curl, in every aspect.

Going above and beyond basic

Subsequently, the best practices project added higher levels of compliance. Basically adding a bunch of requirements so if you want to grade yourself at silver or even gold level there are a whole series of additional requirements to meet. At the time those were added, I felt they were asking for quite a lot of specifics that we didn’t provide in the curl project and with a bit of a sigh I felt I had to accept the fact that we would remain on “just” 100% compliance and only reaching a part of the way toward Silver and Gold. A little disheartened of course because I always want curl to be in the top.

So maybe Silver?

I had left the awareness of that entry listing in a dusty corner of my brain and hadn’t considered it much lately, when I noticed the other day that it was announced that the Linux kernel project reached gold level best practice.

That’s a project with around 50 times more developers and commits than curl for an average release (and even a greater multiplier for amount of code) so I’m not suggesting the two projects are comparable in any sense. But it made me remember our entry on the CII Best Practices web site.

I came back, updated a few fields that seemed to not be entirely correct anymore and all of a sudden curl quite unexpectedly had a 100% compliance at Silver level!

Further?

If Silver was achievable, what’s actually left for gold?

Sure enough, soon there were only a few remaining criteria left “unmet” and after some focused efforts in the project, we had created the final set of documents with information that was previously missing. When we now finally could fill in links to those docs in the final few entries, project curl found itself also scoring a 100% at gold level.

Best Practices: Gold Level

What does it mean for us? What does it mean for you, our users?

For us, it is a double-check and verification that we’re doing the right things, that we are providing the right information in the project and that we haven’t forgotten anything major. We already knew that we were doing everything open source in a pretty good way, but getting a bunch of criteria that insisted on a number of things also made us go the extra mile and really provide information for everything in written form, including some of what previously was only implied, discussed on IRC or read between the lines in various pull requests.

I’m proud to lead the curl project and I’m proud of all our maintainers and contributors.

For users, having curl reach gold level makes it visible that we’re that kind of open source project. We’re part of this top clique of projects. We care about every little open source detail and this should instill trust and confidence in our users. You can trust curl. We’re a golden open source project. We’re with you all the way.

The final criterion we checked off

Which was the last criterion of them all for curl to fulfill to reach gold?

The project MUST document its code review requirements, including how code review is conducted, what must be checked, and what is required to be acceptable (link)

This criterion is now fulfilled by the brand new document CODE_REVIEW.md.

What’s next?

We’re working on the next release. We always do. Stop the slacking now and get back to work!

Credits

Gold image by Erik Stein from Pixabay