This morning, my debug session was interrupted for a brief moment when two friends independently of each other pinged me to inform me about a talk at the current SEC-T conference going on here in Stockholm right now. It was yet again time to bring up the good old fun called libcurl API bashing. Again from the angle that users who don’t read the API docs might end up using it wrong.

Updated: You can see Meredith Patterson’s talk here, and the libcurl parts start at 24:15.

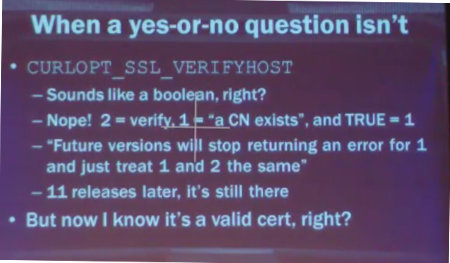

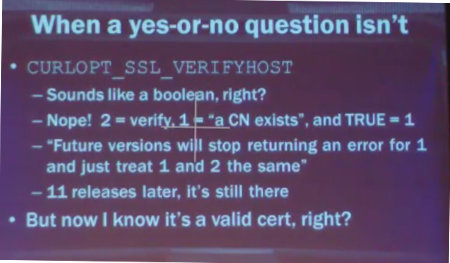

The specific libcurl topic at hand once again mostly had the CURLOPT_SSL_VERIFYHOST option in focus, which is basically the same argument that was thrown at us two years ago when libcurl was said to be dangerous. It is not a boolean. It is an option that takes (or took) three different values, where 2 is the secure level and 0 is disabled.

(This picture is a screengrab from the live stream on YouTube; I don’t have a link to a stored version of it yet.)

Speaker Meredith L. Patterson actually spoke for quite a long time about curl and its options to verify server certificates. While I will agree that she has a few good points, the talk was still riddled with errors, and I think she deliberately phrased things in a manner to make the talk good and snappy rather than to be factually correct and to try to understand why things are the way they are.

The VERIFYHOST option apparently sounds as if it takes a boolean (according to her), but it doesn’t. She says verifying a certificate has to be a Yes/No question so obviously it is a boolean. First, let’s be really technical: the libcurl options that take numerical values always accept a ‘long’, and the documentation specifies which values you can pass in. None of them are booleans, not by actual type in the C language and not described like that in the man pages. There are however language bindings running on top of libcurl that may use booleans for the options that take 0 or 1, but there’s no guarantee we won’t add more values to numerical options in the future.

I wrote down a few quotes from her that I’d like to address.

“In order for it to do anything useful, the value actually has to be set to two”

I get it, she wants a fun presentation that makes the audience listen and grin cheerfully. But this is highly inaccurate. libcurl has it set to verify by default. An application doesn’t have to set it to anything. The only reason to set this value is if you’re not happy with checking the cert unconditionally, and then you’ve already wandered off the secure route.

“All it does when set to two is to check that the common name in the cert matches the host name in the URL. That’s literally all it does.”

No, it’s not. It “only” verifies the host name curl connects to against the name hints in the server cert, yes, but that’s a lot more than just the common name field.

“there’s been 10 versions and they haven’t fixed this yet […] the docs still say they’re gonna fix this eventually […] I wanna know when eventually is”

Qualified BS and ignorance of details. Let’s see the actual code first: it ignores the 1 value and returns an error, and thus leaves the internal default of 2 in place. So code that sets 1 or 2 gets the same effect: a verified certificate. Why is this a problem?

Then, she says she really wants to know when “eventually” is. (The docs say “Future versions will…”) So if she was so curious, you’d think she would’ve tried to ask us? We’re an accessible bunch, on mailing lists, on IRC and on twitter. No, she didn’t ask.

But perhaps most importantly: did she really consider why it returns an error for 1? Since libcurl silently accepted 1 as a value for something like 10 years, there are a lot of old installations “out there” in the wild, and by returning an error for 1 we try to make applications notice and adjust. If we silently accepted 1 without errors, there would be no notice and people would keep using 1 in new applications as well. Then, when running such a newly written application with an older libcurl, you’d be back to having the security problem again. So, we have the error there to improve the situation.
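To make that behavior concrete, here is a toy model in C. It is not libcurl’s source code: the type, enum and function names are all invented for this sketch, and how values other than 0, 1 and 2 are treated is an assumption of the model. It only illustrates the logic described above, where 1 is refused with an error and the stored default of 2 is left alone.

```c
/* Toy model only. Every name here is hypothetical, none of it is libcurl. */
enum model_result { MODEL_OK = 0, MODEL_BAD_ARGUMENT = 1 };

struct model_handle {
  long verifyhost; /* 2 = verify the host name, 0 = verification disabled */
};

/* A new handle starts out secure. */
static void model_init(struct model_handle *h)
{
  h->verifyhost = 2;
}

/* Accept 0 and 2. Refuse 1 so that the application notices, and leave
 * the stored value untouched. Refusing every other value as well is an
 * assumption made by this model. */
static enum model_result model_set_verifyhost(struct model_handle *h,
                                              long value)
{
  if(value != 0 && value != 2)
    return MODEL_BAD_ARGUMENT;
  h->verifyhost = value;
  return MODEL_OK;
}
```

An application that checks return codes learns about its mistake right away, and one that ignores them still ends up with the host name verified.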

“a peer is someone like you […] a host is a server”

I’ve been a networking guy for 20+ years and I’m not used to people having a hard time understanding these terms. While perhaps there are rookies out in the world who don’t immediately understand some terms in the curl option names, should we really be criticized for that? I find that a hilarious critique. Also, these names were picked 13 years ago and we keep them around for compatibility and API stability.

“why would you ever want to …”

Welcome to the real world. Why would an application author ever want to set these options to something other than just full check and no check? Because software development is a large world with many different people, desires and use case scenarios, and curl is more widely used and abused than many people realize. Lots of people have wanted something other than just a Yes/No to server cert verification. In fact, I’ve had many users ask for even more switches and fine-grained ways to fiddle with verification. Yes/No is a layman’s simplified view of certificate verification.

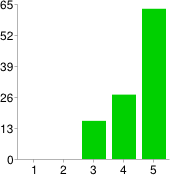

(This picture is the slide from the above picture, just zoomed and straightened out a bit.)

API age, stability and organic growth

We started working on libcurl in spring 1999, we added the CURLOPT_SSL_VERIFYPEER option in October 2000 and we added CURLOPT_SSL_VERIFYHOST in August 2001. All that quite a long time ago.

Then add thousands of hours, hundreds of hackers, thousands of applications, and a user count that probably surpasses one billion users by now. Then also add the fact that option names are sticky: we write them into the docs, examples pop up all over the internet, and everyone who’s close to the project learns them by name and spirit, so we quite simply grow attached to them and the way they work. Changing the name of an option is really painful and causes a lot of confusion.

I’ve instead tried to more and more emphasize the functionality in the docs, to stress what the options do and how to do server cert verifications with curl the safe way.

I can’t force users to read docs. I can’t forbid users to blindly assume something and I’m not in control of, nor do I want to affect, the large population of third party bindings that exist for using on top of libcurl to cater for every imaginable programming language – and some of them may of course themselves have documentation problems and what not.

Would I change some of the APIs and names for options we have in libcurl if I were to redo them today? Yes I would.

So what do we do about it?

I think this is the only really interesting question to take from all this. Everyone wants stable APIs. Everyone wants sensible and easy to understand APIs and as we can see they should also basically be possible to figure out without reading any documentation. And yet the API has to be powerful and flexible enough to be really useful for all those different applications.

So here we are, with the options we have, and you’ve done your mud slinging and the finger of blame is firmly pointed at us. How exactly do you suggest we move forward to fix these claimed problems?

Taking it personally

Before anyone tells me to not take it personally: curl is my biggest hobby and a project I’ve spent many years and thousands of hours on. Of course I take it personally, otherwise I would’ve stopped working on the project a long time ago. This is personal to me. I give it my loving care and personal energy, and then someone comes along and throws ill-founded and badly researched criticism at me. I think critics of open source projects should learn to discuss matters with the projects as their primary approach, instead of using them to make their conference presentations more feisty.
