FOSDEM 2020 is over for this time and I had an awesome time in Brussels once again.

Stickers

I brought a huge collection of stickers this year and I kept going back to the wolfSSL stand to refill the stash and it kept being emptied almost as fast. Hundreds of curl stickers were given away! The photo on the right shows my “sticker bag” as it looked before I left Sweden.

Lesson for next year: bring a larger amount of stickers! If you missed out on curl stickers, get in touch and I’ll do my best to satisfy your needs.

The talk

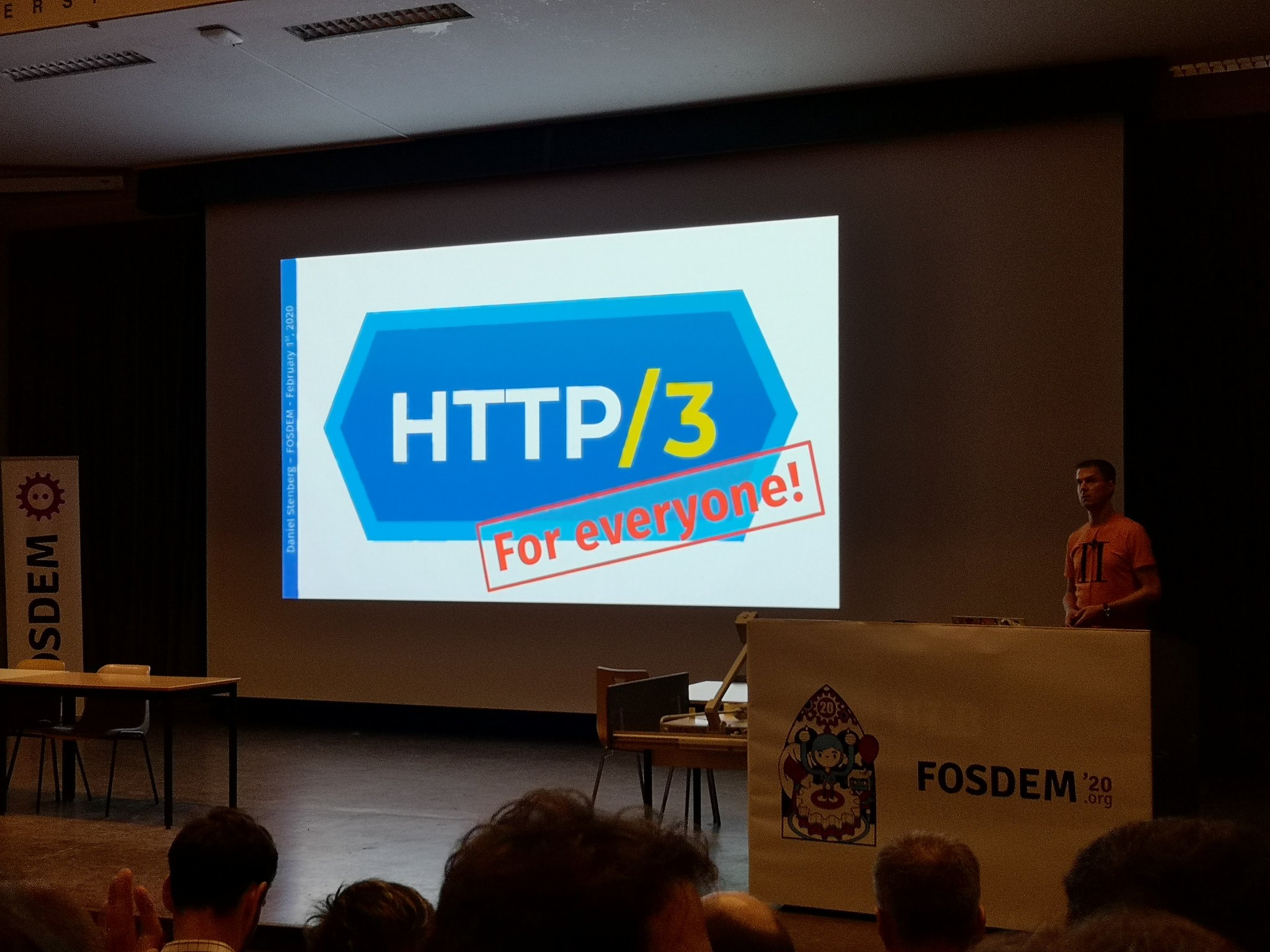

“HTTP/3 for everyone” was my single talk this FOSDEM. Just two days before the talk, I landed updated commits in curl’s git master branch for doing HTTP/3 up-to-date with the latest draft (-25). Very timely and I got to update the slide mentioning this.

As I talked HTTP/3 already last year in the Mozilla devroom, I also made sure to go through the slides I used then to compare and make sure I wouldn’t do too much of the same talk. But lots of things have changed and most of the content is updated and different this time around. Last year, literally hundreds of people were lining up outside wanting to get into room when the doors were closed. This year, I talked in the room Janson, which features 1415 seats. The biggest one on campus. It was pack full!

It is kind of an adrenaline rush to stand in front of such a wall of people. At one time in my talk I paused for a brief moment and then I felt I could almost hear the complete silence when a huge amount of attentive faces captured what I had to say.

I got a lot of positive feedback on the presentation. I also thought that my decision to not even try to take question in the big room was a correct and I ended up talking and discussing details behind the scene for a good while after my talk was done. Really fun!

The video

The video is also available from the FOSDEM site in webm and mp4 formats.

The slides

If you want the slides only, run over to slideshare and view them.