These days, operating system kernels provide TCP/IP stacks that can do really fast network transfers. It’s not even unusual for ordinary people to have gigabit connections at home, and of course we want our applications to be able to take advantage of them.

I don’t think many readers here will be surprised when I say that fulfilling this desire turns out to be much easier said than done in the Windows world.

Autotuning?

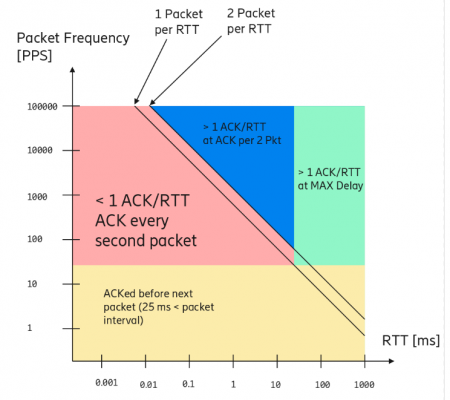

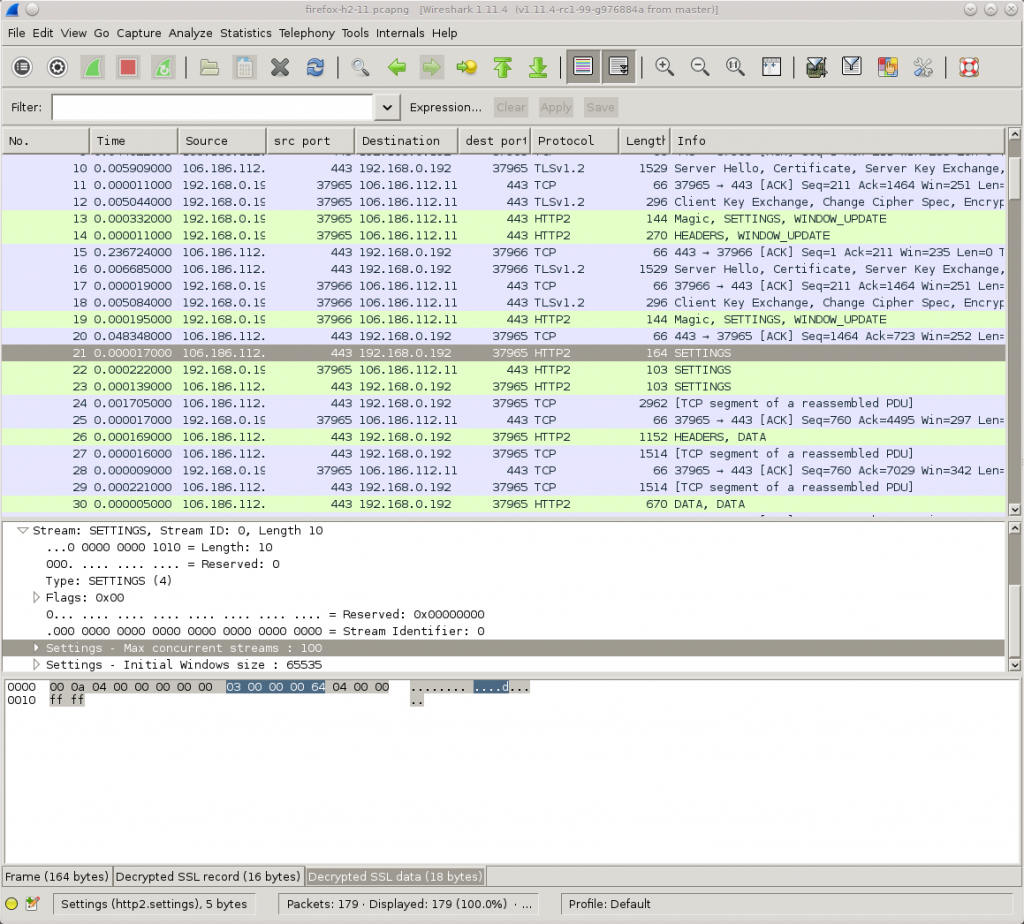

Since Windows 7 / 2008R2, Windows implements send buffer autotuning. Simply put, the faster the transfer and the longer the RTT of a connection, the larger the buffer Windows uses (up to a maximum), so that more un-acked data can be outstanding, which lets the system saturate even really fast links.
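To get a feel for the numbers: the amount of un-acked data needed to keep a link busy is roughly the bandwidth-delay product. The sketch below computes it. `bdp_bytes` is a name made up for this illustration, not something Windows or curl exposes, and the inputs in the note that follows are example figures rather than measurements.

```c
#include <stdint.h>

/* Hypothetical helper: bytes that must be in flight to keep a link busy.
   bandwidth (bits per second) / 8 gives bytes per second, and multiplying
   by the round-trip time (milliseconds / 1000) gives bytes per round trip. */
uint64_t bdp_bytes(uint64_t bits_per_second, uint32_t rtt_ms)
{
  return bits_per_second / 8 * rtt_ms / 1000;
}
```

For a 1 Gbit/s link with a 20 ms RTT, `bdp_bytes(1000000000, 20)` gives 2,500,000 bytes, so a send buffer much smaller than about 2.5 MB cannot keep such a link full.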

Turns out this useful feature isn’t enabled when applications use non-blocking sockets. The send buffer isn’t increased at all then.

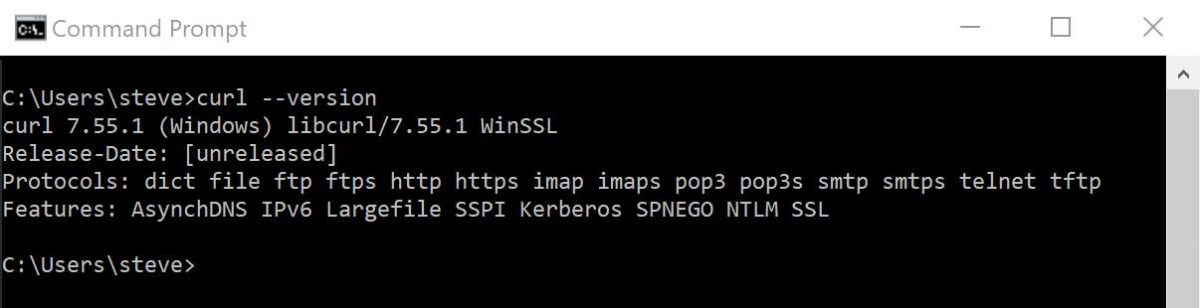

Internally, curl uses non-blocking sockets, and most of the code is platform agnostic, so it wouldn’t be practical to switch that off for one particular system. With this latest find we have also started to understand why curl doesn’t always perform as well on Windows as on other operating systems: the upload buffer (SO_SNDBUF) has a fixed size and is simply too small to perform well in a lot of cases.

Applications can still enlarge the buffer, if they’re aware of this bottleneck, and get better performance without having to change libcurl, but I doubt a lot of them do. And really, libcurl should perform as well as it possibly can just by itself, without requiring any tuning by the application authors.
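As a rough sketch of what such application-side tuning could look like, the helper below asks the kernel for a larger send buffer with `setsockopt()` and `SO_SNDBUF`. The names `sock_t` and `enlarge_send_buffer` are made up for this example, and any size passed in is the caller's guess, not a recommended value.

```c
#ifdef _WIN32
#include <winsock2.h>
typedef SOCKET sock_t;   /* hypothetical alias, for this sketch only */
#else
#include <sys/socket.h>
typedef int sock_t;
#endif

/* Hypothetical helper: request a send buffer of `bytes` bytes.
   Returns 0 if the kernel accepted the request, -1 otherwise.
   The kernel may round or cap the value it actually uses. */
int enlarge_send_buffer(sock_t s, int bytes)
{
  if(setsockopt(s, SOL_SOCKET, SO_SNDBUF,
                (const char *)&bytes, sizeof(bytes)) != 0)
    return -1;
  return 0;
}
```

An application using libcurl can reach the socket through a `CURLOPT_SOCKOPTFUNCTION` callback, which libcurl calls after the socket is created and before it connects, and could call a helper like this one from there.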

Users testing this out

Daniel Jelinski brought a fix for this that repeatedly polls Windows during uploads to ask for a suitable send buffer size and then resizes the buffer on the go if it deems a new size is better. In order to figure out if this patch is indeed a good idea, or if there’s a downside for some, we went wide and called out for users to help us.
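The polling idea can be sketched roughly as follows. This is an illustration under assumptions, not a copy of the patch: it assumes the question put to Windows is the `SIO_IDEAL_SEND_BACKLOG_QUERY` ioctl issued through `WSAIoctl()`, and the names `sock_t` and `update_send_buffer` are made up here. On other systems the function is a no-op so the same code still compiles.

```c
#ifdef _WIN32
#include <winsock2.h>
#ifndef SIO_IDEAL_SEND_BACKLOG_QUERY
/* Older SDK headers may lack this definition. */
#define SIO_IDEAL_SEND_BACKLOG_QUERY 0x4004747B
#endif
typedef SOCKET sock_t;   /* hypothetical alias, for this sketch only */
#else
typedef int sock_t;
#endif

/* Hypothetical helper, meant to be called now and then during an upload.
   Returns 1 if a new send buffer size was applied, 0 if nothing changed. */
int update_send_buffer(sock_t s)
{
#ifdef _WIN32
  ULONG ideal = 0;
  DWORD len = 0;
  /* Ask Windows how much send backlog it considers ideal right now. */
  if(WSAIoctl(s, SIO_IDEAL_SEND_BACKLOG_QUERY, NULL, 0,
              &ideal, sizeof(ideal), &len, NULL, NULL) != 0)
    return 0;
  /* Apply the suggested size as the socket's send buffer. */
  if(setsockopt(s, SOL_SOCKET, SO_SNDBUF,
                (const char *)&ideal, sizeof(ideal)) != 0)
    return 0;
  return 1;
#else
  (void)s;   /* nothing to do outside Windows */
  return 0;
#endif
}
```

A variant could first read the current size with `getsockopt()` and only resize when the suggested value differs, which would avoid redundant calls on a stable connection.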

The results were amazing. With speedups of up to almost 7 times, exactly those newer Windows versions that supposedly have autotuning can obviously benefit substantially from this patch. Even the median test uploaded more than twice as fast with the patch. Pretty amazing really. And beyond weird that this crazy thing should be required to get ordinary sockets to perform properly on an updated operating system in 2018.

Windows XP isn’t affected at all by this fix, and we’ve seen tests running as VirtualBox guests in NAT-mode also not gain anything, but we believe that’s VirtualBox’s “fault” rather than Windows or the patch.

Landing

The commit is merged into curl’s master git branch and will be part of the pending curl 7.61.1 release, which is due to ship on September 5, 2018. I think it can serve as an interesting case study to see how long it takes until Windows 10 users get their versions updated to this.

Table of test runs

The table lists the Windows version, the test times for the runs with the unmodified curl and with the patched one, how much time the second run needed as a percentage of the first, a comment column, and finally the speedup multiple for that test.

Thank you everyone who helped us out by running these tests!

| Version | Time vanilla | Time patched | New time | Comment | Speedup |
|---|---|---|---|---|---|
| 6.0.6002 | 15.234 | 2.234 | 14.66% | Vista SP2 | 6.82 |
| 6.1.7601 | 8.175 | 2.106 | 25.76% | Windows 7 SP1 Enterprise | 3.88 |
| 6.1.7601 | 10.109 | 2.621 | 25.93% | Windows 7 Professional SP1 | 3.86 |
| 6.1.7601 | 8.125 | 2.203 | 27.11% | 2008 R2 SP1 | 3.69 |
| 6.1.7601 | 8.562 | 2.375 | 27.74% | | 3.61 |
| 6.1.7601 | 9.657 | 2.684 | 27.79% | | 3.60 |
| 6.1.7601 | 11.263 | 3.432 | 30.47% | Windows 2008R2 | 3.28 |
| 6.1.7601 | 5.288 | 1.654 | 31.28% | | 3.20 |
| 10.0.16299.309 | 4.281 | 1.484 | 34.66% | Windows 10, 1709 | 2.88 |
| 10.0.17134.165 | 4.469 | 1.64 | 36.70% | | 2.73 |
| 10.0.16299.547 | 4.844 | 1.797 | 37.10% | | 2.70 |
| 10.0.14393 | 4.281 | 1.594 | 37.23% | Windows 10, 1607 | 2.69 |
| 10.0.17134.165 | 4.547 | 1.703 | 37.45% | | 2.67 |
| 10.0.17134.165 | 4.875 | 1.891 | 38.79% | | 2.58 |
| 10.0.15063 | 4.578 | 1.907 | 41.66% | | 2.40 |
| 6.3.9600 | 4.718 | 2.031 | 43.05% | Windows 8 (original) | 2.32 |
| 10.0.17134.191 | 3.735 | 1.625 | 43.51% | | 2.30 |
| 10.0.17713.1002 | 6.062 | 2.656 | 43.81% | | 2.28 |
| 6.3.9600 | 2.921 | 1.297 | 44.40% | Windows 2012R2 | 2.25 |
| 10.0.17134.112 | 5.125 | 2.282 | 44.53% | | 2.25 |
| 10.0.17134.191 | 5.593 | 2.719 | 48.61% | | 2.06 |
| 10.0.17134.165 | 5.734 | 2.797 | 48.78% | run 1 | 2.05 |
| 10.0.14393 | 3.422 | 1.844 | 53.89% | | 1.86 |
| 10.0.17134.165 | 4.156 | 2.469 | 59.41% | had to use the HTTPS endpoint | 1.68 |
| 6.1.7601 | 7.082 | 4.945 | 69.82% | over proxy | 1.43 |
| 10.0.17134.165 | 5.765 | 4.25 | 73.72% | run 2 | 1.36 |
| 5.1.2600 | 10.671 | 10.157 | 95.18% | Windows XP Professional SP3 | 1.05 |
| 10.0.16299.547 | 1.469 | 1.422 | 96.80% | in a VM running on Linux | 1.03 |
| 5.1.2600 | 11.297 | 11.046 | 97.78% | XP | 1.02 |
| 6.3.9600 | 5.312 | 5.219 | 98.25% | | 1.02 |
| 5.2.3790 | 5.031 | 5 | 99.38% | Windows 2003 | 1.01 |
| 5.1.2600 | 7.703 | 7.656 | 99.39% | XP SP3 | 1.01 |
| 10.0.17134.191 | 1.219 | 1.531 | 125.59% | FTP | 0.80 |
| TOTAL | 205.303 | 102.271 | 49.81% | | 2.01 |
| MEDIAN | | | 43.51% | | 2.30 |
