The report

Usually, security problems in the curl project come to us out of the blue. Someone has found a bug they suspect may have a security impact and they tell us about it on the curl-security@haxx.se email address. Mails sent to this address reach a private mailing list with the curl security team members as the only subscribers.

An important first step is that we respond to the sender, acknowledging the report. Often we also include a few follow-up questions at once. It is important to us to keep the original reporter in the loop and included in all subsequent discussions about this issue – unless they prefer to opt out.

If we find the issue ourselves, we act pretty much the same way.

In the most obvious and well-reported cases there are no room for doubts or hesitation about what the bugs and the impact of them are, but very often the reports lead to discussions.

The assessment

Is it a bug in the first place, is it perhaps even documented or just plain bad use?

If it is a bug, is this a security problem that can be abused or somehow put users in some sort of risk?

Most issues we get reported as security issues are also in the end treated as such, as we tend to err on the safe side.

The time plan

Unless the issue is critical, we prefer to schedule a fix and announcement of the issue in association with the pending next release, and as we do releases every 8 weeks like clockwork, that’s never very far away.

Unless the issue is critical, we prefer to schedule a fix and announcement of the issue in association with the pending next release, and as we do releases every 8 weeks like clockwork, that’s never very far away.

We communicate the suggested schedule with the reporter to make sure we agree. If a sooner release is preferred, we work out a schedule for an extra release. In the past we’ve did occasional faster security releases also when the issue already had been made public, so we wanted to shorten the time window during which users could be harmed by the problem.

We really really do not want a problem to persist longer than until the next release.

The fix

The curl security team and the reporter work on fixing the issue. Ideally in part by the reporter making sure that they can’t reproduce it anymore and we add a test case or two.

We keep the fix undisclosed for the time being. It is not committed to the public git repository but kept in a private branch. We usually put it on a private URL so that we can link to it when we ask for a CVE, see below.

All security issues should make us ask ourselves – what did we do wrong that made us not discover this sooner? And ideally we should introduce processes, tests and checks to make sure we detect other similar mistakes now and in the future.

we should introduce processes, tests and checks to make sure we detect other similar mistakes now and in the future.

Typically we only generate a single patch from the git master master and offer that as the final solution. In the curl project we don’t maintain multiple branches. Distros and vendors who ship older or even multiple curl versions backport the patch to their systems by themselves. Sometimes we get backported patches back to offer users as well, but those are exceptions to the rule.

The advisory

In parallel to working on the fix, we write up a “security advisory” about the problem. It is a detailed description about the problem, what impact it may have if triggered or abused and if we know of any exploits of it.

What conditions need to be met for the bug to trigger. What’s the version range that is affected, what’s the remedies that can be done as a work-around if the patch is not applied etc.

We work out the advisory in cooperation with the reporter so that we get the description and the credits right.

The advisory also always contains a time line that clearly describes when we got to know about the problem etc.

The CVE

Once we have an advisory and a patch, none of which needs to be their final versions, we can proceed and ask for a CVE. A CVE is a unique “ID” that is issued for security problems to make them easy to reference. CVE stands for Common Vulnerabilities and Exposures.

Depending on where in the release cycle we are, we might have to hold off at this point. For all bugs that aren’t proprietary-operating-system specific, we pre-notify and ask for a CVE on the distros@openwall mailing list. This mailing list prohibits an embargo longer than 14 days, so we cannot ask for a CVE from them longer than 2 weeks in advance before our release.

The idea here is that the embargo time gives the distributions time and opportunity to prepare updates of their packages so they can be pretty much in sync with our release and reduce the time window their users are at risk. Of course, not all operating system vendors manage to actually ship a curl update on two weeks notice, and at least one major commercial vendor regularly informs me that this is a too short time frame for them.

For flaws that don’t affect the free operating systems at all, we ask MITRE directly for CVEs.

The last 48 hours

When there is roughly 48 hours left until the coming release and security announcement, we merge the private security fix branch into master and push it. That immediately makes the fix public and those who are alert can then take advantage of this knowledge – potentially for malicious purposes. The security advisory itself is however not made public until release day.

We use these 48 hours to get the fix tested on more systems to verify that it is not doing any major breakage. The weakest part of our security procedure is that the fix has been worked out in secret so it has not had the chance to get widely built and tested, so that is performed now.

The release

We upload the new release. We send out the release announcement email, update the web site and make the advisory for the issue public. We send out the security advisory alert on the proper email lists.

Bug Bounty?

Unfortunately we don’t have any bug bounties on our own in the curl project. We simply have no money for that. We actually don’t have money at all for anything.

Unfortunately we don’t have any bug bounties on our own in the curl project. We simply have no money for that. We actually don’t have money at all for anything.

Hackerone offers bounties for curl related issues. If you have reported a critical issue you can request one from them after it has been fixed in curl.

Unless the issue is critical, we prefer to schedule a fix and announcement of the issue in association with the pending next release, and as we do releases every 8 weeks like clockwork, that’s never very far away.

Unless the issue is critical, we prefer to schedule a fix and announcement of the issue in association with the pending next release, and as we do releases every 8 weeks like clockwork, that’s never very far away. we should introduce processes, tests and checks to make sure we detect other similar mistakes now and in the future.

we should introduce processes, tests and checks to make sure we detect other similar mistakes now and in the future. Unfortunately we don’t have any bug bounties on our own in the curl project. We simply have no money for that. We actually don’t have money at all for anything.

Unfortunately we don’t have any bug bounties on our own in the curl project. We simply have no money for that. We actually don’t have money at all for anything.

I think the highest risk scenario is when users download pre-built curl or libcurl binaries from various places on the internet that isn’t the official curl web site. How can you know for sure what you’re getting then, as you couldn’t review the code or changes done. You just put your trust in a remote person or organization to do what’s right for you.

I think the highest risk scenario is when users download pre-built curl or libcurl binaries from various places on the internet that isn’t the official curl web site. How can you know for sure what you’re getting then, as you couldn’t review the code or changes done. You just put your trust in a remote person or organization to do what’s right for you. Some people argue that projects could or should pledge for every release that there’s no deliberate backdoor planted so that if the day comes in the future when a three-letter secret organization forces us to insert a backdoor, the lack of such a pledge for the subsequent release would function as an alarm signal to people that something is wrong.

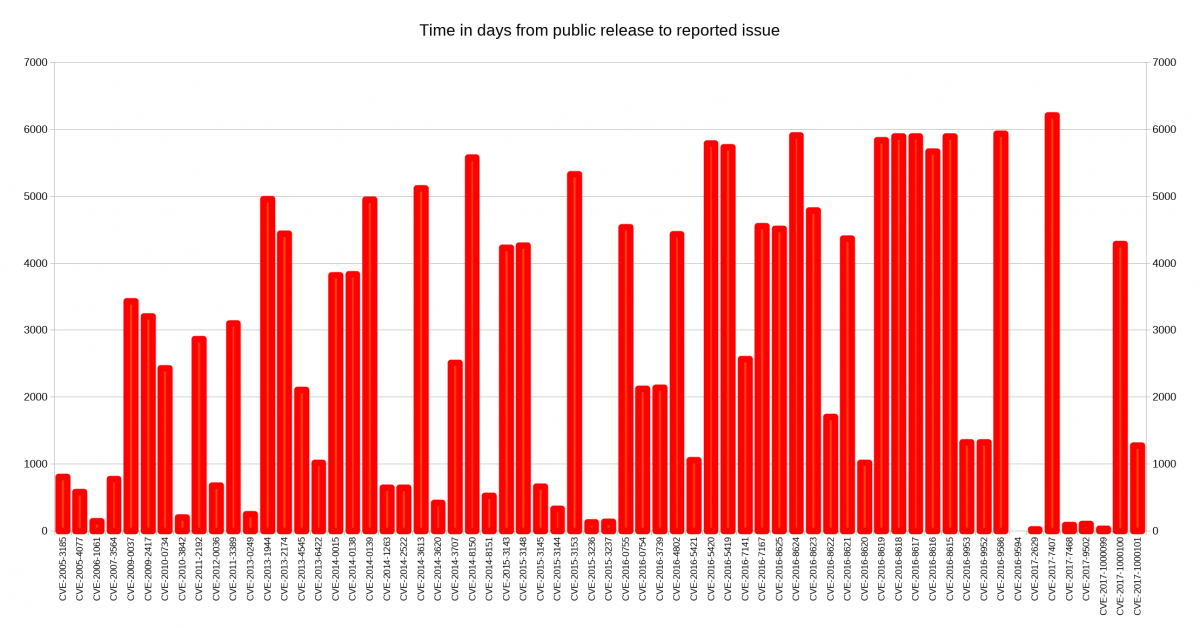

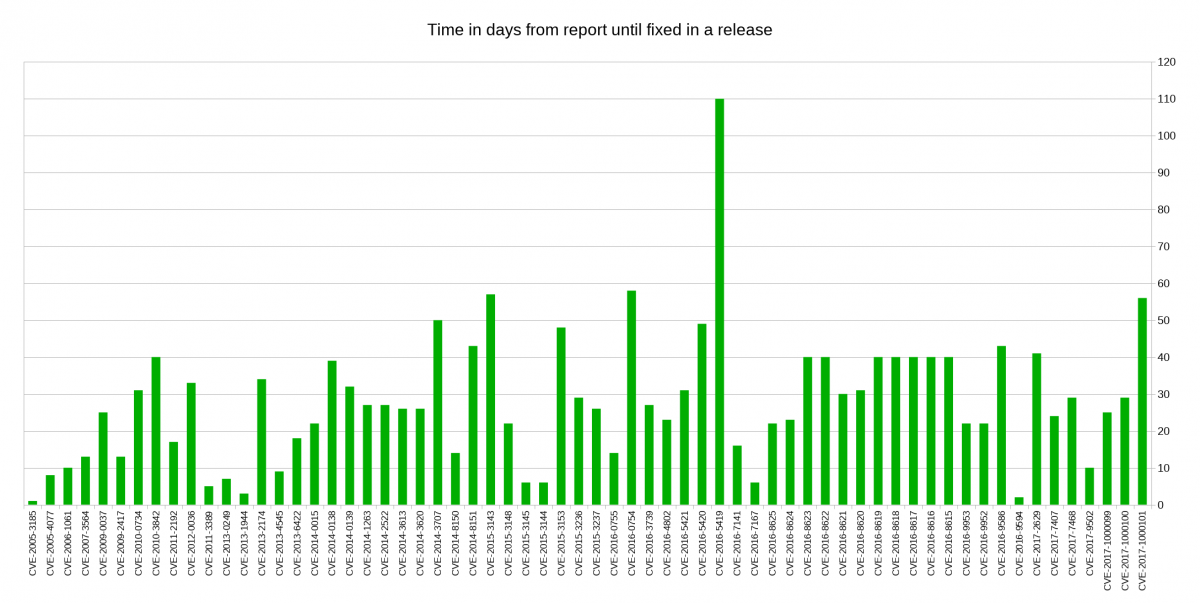

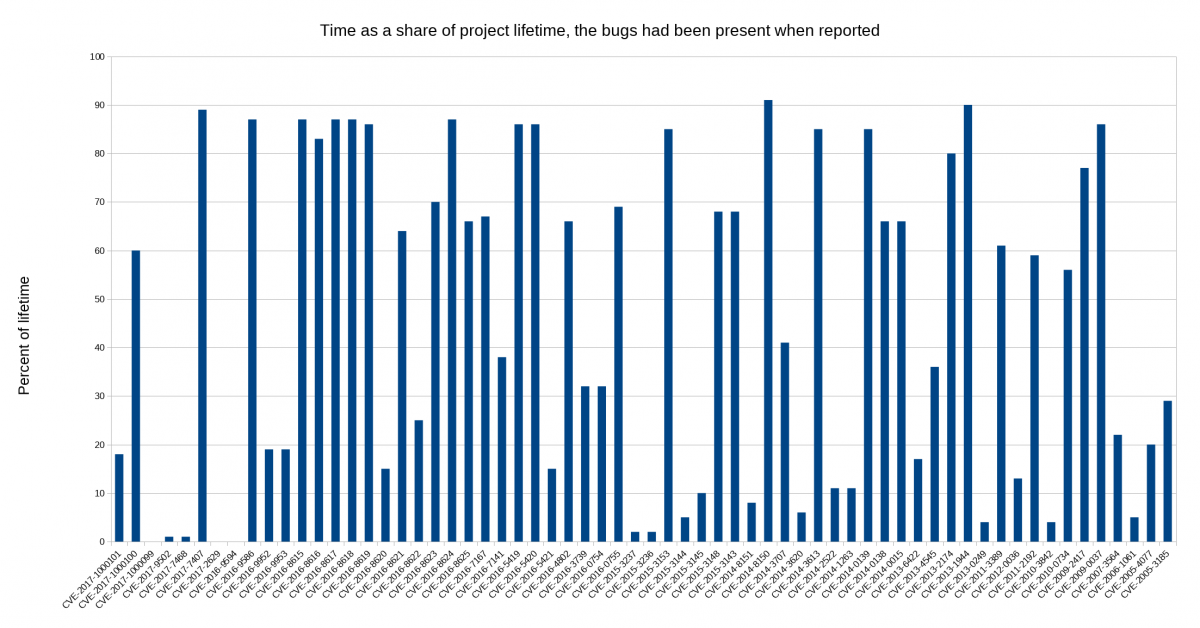

Some people argue that projects could or should pledge for every release that there’s no deliberate backdoor planted so that if the day comes in the future when a three-letter secret organization forces us to insert a backdoor, the lack of such a pledge for the subsequent release would function as an alarm signal to people that something is wrong. I decided to look closer at security problems and the age of the reported issues in

I decided to look closer at security problems and the age of the reported issues in

(Season three, episode two)

(Season three, episode two)

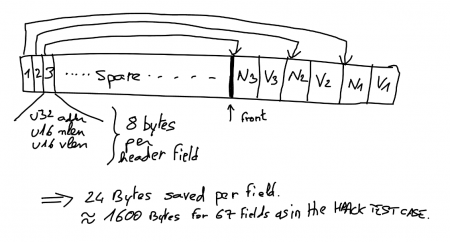

observed 2x CPU usage increase for QUIC connections as compared to h2+TLS is mostly blamed on the Linux kernel which apparently is not at all up for this job as good is should be. Things have clearly been more optimized for TCP over the years, leaving room for improvement in the UDP areas going forward. “Making kernel bypassing an interesting choice”.

observed 2x CPU usage increase for QUIC connections as compared to h2+TLS is mostly blamed on the Linux kernel which apparently is not at all up for this job as good is should be. Things have clearly been more optimized for TCP over the years, leaving room for improvement in the UDP areas going forward. “Making kernel bypassing an interesting choice”.