There seems to be no end to updated posts about bug bounties in the curl project these days. Not long ago I mentioned the then new program that sadly enough was cancelled only a few months after its birth.

Now we are back with a new and refreshed bug bounty program! The curl bug bounty program reborn.

This new program, which hopefully will manage to survive a while, is setup in cooperation with the major bug bounty player out there: hackerone.

Basic rules

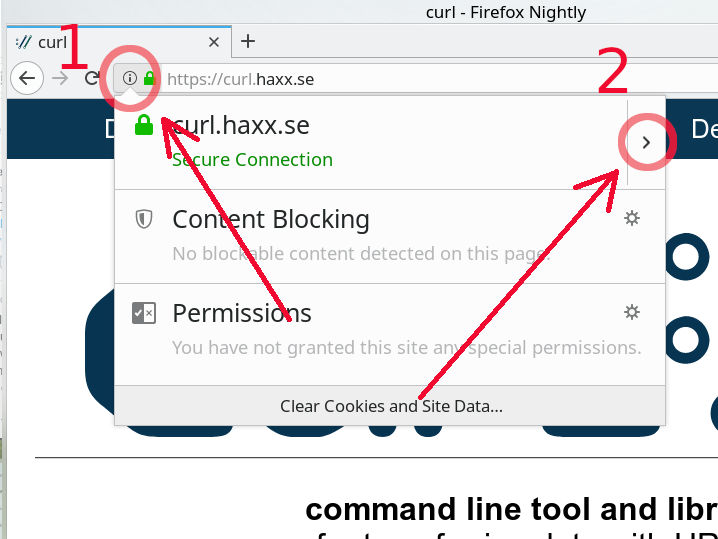

If you find or suspect a security related issue in curl or libcurl, report it! (and don’t speak about it in public at all until an agreed future date.)

You’re entitled to ask for a bounty for all and every valid and confirmed security problem that wasn’t already reported and that exists in the latest public release.

The curl security team will then assess the report and the problem and will then reward money depending on bug severity and other details.

Where does the money come from?

We intend to use funds and money from wherever we can. The Hackerone Internet Bug Bounty program helps us, donations collected over at opencollective will be used as well as dedicated company sponsorships.

We will of course also greatly appreciate any direct sponsorships from companies for this program. You can help curl getting even better by adding funds to the bounty program and help us reward hard-working researchers.

Why bounties at all?

We compete for the security researchers’ time and attention with other projects, both open and proprietary. The projects that can help put food on these researchers’ tables might have a better chance of getting them to use their tools, time, skills and fingers to find our problems instead of someone else’s.

Finding and disclosing security problems can be very time and resource consuming. We want to make it less likely that people give up their attempts before they find anything. We can help full and part time security engineers sustain their livelihood by paying for the fruits of their labor. At least a little bit.

Only released code?

The state of the code repository in git is not subject for bounties. We need to allow developers to do mistakes and to experiment a little in the git repository, while we expect and want every actual public release to be free from security vulnerabilities.

So yes, the obvious downside with this is that someone could spot an issue in git and decide not to report it since it doesn’t give any money and hope that the flaw will linger around and ship in the release – and then reported it and claim reward money. I think we just have to trust that this will not be a standard practice and if we in fact notice that someone tries to exploit the bounty in this manner, we can consider counter-measures then.

How about money for the patches?

There’s of course always a discussion as to why we should pay anyone for bugs and then why just pay for reported security problems and not for heroes who authored the code in the first place and neither for the good people who write the patches to fix the reported issues. Those are valid questions and we would of course rather pay every contributor a lot of money, but we don’t have the funds for that. And getting funding for this kind of dedicated bug bounties seem to be doable, where as a generic pay contributors fund is trickier both to attract money but it is also really hard to distribute in an open project of curl’s nature.

How much money?

At the start of this program the award amounts are as following. We reward up to this amount of money for vulnerabilities of the following security levels:

Critical: 2,000 USD

High: 1,500 USD

Medium: 1,000 USD

Low: 500 USD

Depending on how things go, how fast we drain the fund and how much companies help us refill, the amounts may change over time.

The

The