On December 19, 2017, Microsoft announced that as of Insider build 17063 of Windows 10, curl is a default component. I’ve been away from home since then so I haven’t really had time to sit down and explain to you all what this means, so while I’m a bit late, here it comes!

I see this as a pretty huge step in curl’s road to conquer the world.

curl already existed on Windows

Ever since we started shipping curl, it has been possible to build curl for Windows and run it on Windows. It has been working fine on all Windows versions since at least Windows 95. Running curl on Windows is not new to us. Users with a little bit of interest and knowledge have been able to run curl on Windows for almost 20 years already.

Then we had the known debacle with Microsoft introducing a curl alias to PowerShell that has put some obstacles in the way for users of curl.

Default makes a huge difference

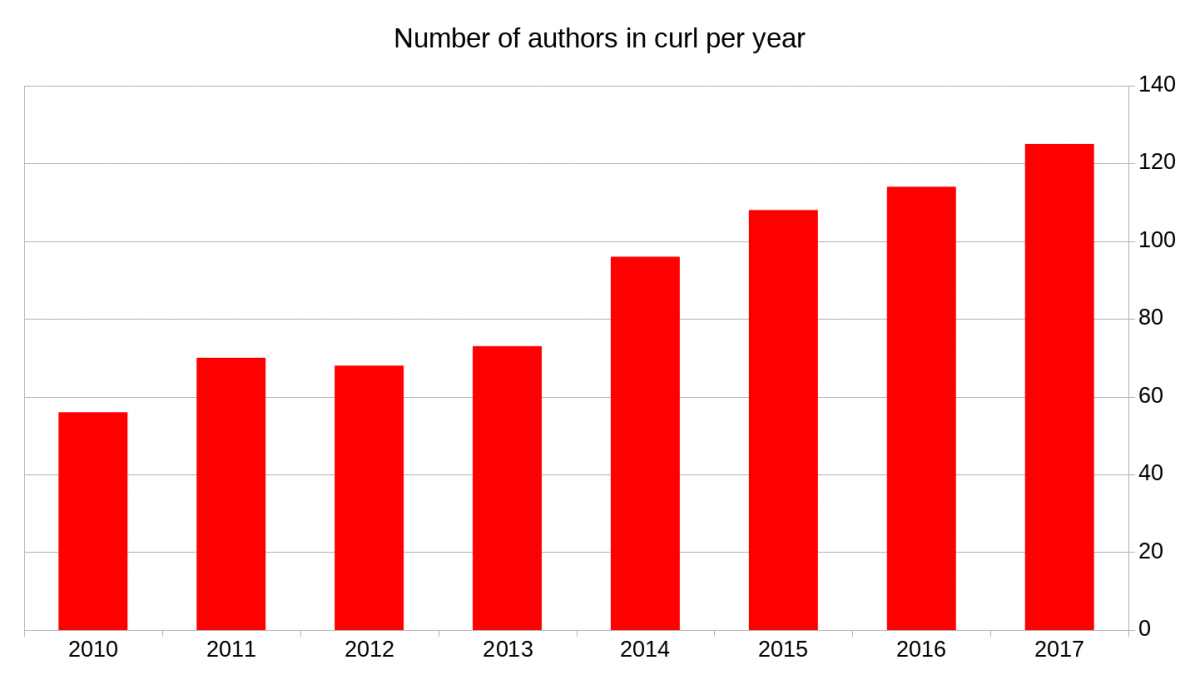

Having curl shipped by default by the manufacturer of an operating system of course makes a huge difference. Once this goes out to the general public, all of a sudden several hundred million users will get a curl command line tool installed for them without having to do anything. Installing curl yourself on Windows still requires some skill and knowledge, and on places like Stack Overflow there are many questions and users showing how it can be problematic.

I expect this to accelerate the curl command line use in the world. I expect this to increase the number of questions on how to do things with curl.

Lots of people mentioned how curl is a “good” new tool to use for malicious downloads of files to Windows machines if you manage to run code on someone’s Windows computer. curl is quite a capable thing that you truly do not want to get invoked involuntarily. But sure, any powerful and capable tool can of course be abused.

About the installed curl

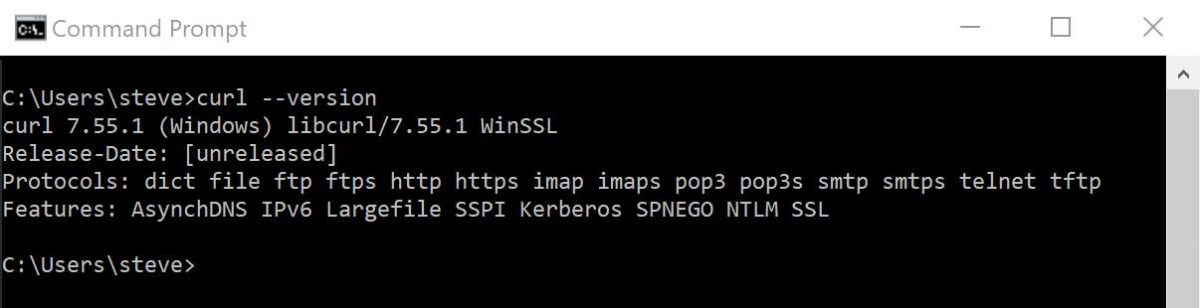

This is what it looks like when you check the curl version on this Windows build:

(screenshot from Steve Holme)

I don’t think this is necessarily exactly what curl will look like once this reaches the general Windows 10 installation, and I also expect Microsoft to update and upgrade curl as we go along.

Some observations from this simple screenshot, and if you work for Microsoft you may feel free to see this as some subtle hints on what you could work on improving in future builds:

- They ship 7.55.1, while 7.57.0 was the latest version at the time. That’s just three releases away so I consider that pretty good. Lots of distros and others ship (much) older releases. It’ll be interesting to see how they will keep this up in the future.

- Unsurprisingly, they use a build that uses the WinSSL backend for TLS.

- They did not build it with IDN support.

- They’ve explicitly disabled support for a whole range of protocols that curl supports natively by default (gopher, smb, rtsp etc), but they still have a few rare protocols enabled (like dict).

- curl supports LDAP using the native Windows API, but that’s not used.

- The Release-Date line shows they built curl from unreleased sources (most likely directly from a git clone).

- No HTTP/2 support is provided.

- There’s no automatic decompression support for gzip or brotli content.

- The build supports neither metalink nor PSL (public suffix list).
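If you want to make the same kind of observations about whatever curl build you have in front of you, the `curl --version` output can be picked apart with a few lines of script. The following is only a rough Python sketch, not anything the curl project or Microsoft ships. The function names (`parse_curl_version`, `missing`, `local_curl_version`) are made up for this illustration, and it assumes the usual layout of the output: a first line starting with `curl <version>`, followed by `Release-Date:`, `Protocols:` and `Features:` lines.

```python
import subprocess


def parse_curl_version(text):
    """Split `curl --version` output into version, release date, protocols and features."""
    info = {"version": None, "release_date": None, "protocols": [], "features": []}
    for i, line in enumerate(text.strip().splitlines()):
        line = line.strip()
        if i == 0 and line.startswith("curl "):
            # Assumed first-line layout: "curl <version> (<platform>) <libraries...>"
            parts = line.split()
            info["version"] = parts[1] if len(parts) > 1 else None
        elif line.startswith("Release-Date:"):
            info["release_date"] = line.split(":", 1)[1].strip()
        elif line.startswith("Protocols:"):
            info["protocols"] = line.split(":", 1)[1].split()
        elif line.startswith("Features:"):
            info["features"] = line.split(":", 1)[1].split()
    return info


def missing(info, wanted_protocols=(), wanted_features=()):
    """Return the wanted protocols and features this build lacks (case-insensitive)."""
    protocols = {p.lower() for p in info["protocols"]}
    features = {f.lower() for f in info["features"]}
    return (
        [p for p in wanted_protocols if p.lower() not in protocols],
        [f for f in wanted_features if f.lower() not in features],
    )


def local_curl_version(executable="curl"):
    """Run the given curl executable with --version and parse what it prints."""
    result = subprocess.run(
        [executable, "--version"], capture_output=True, text=True, check=True
    )
    return parse_curl_version(result.stdout)
```

Calling something like `missing(local_curl_version(), ["gopher", "smb", "rtsp"], ["HTTP2", "IDN", "libz", "brotli", "PSL"])` would then list which of those a given build lacks. The feature names are the ones I would expect curl to print; treat them as assumptions and adjust them to what your build actually shows.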

(curl gif from the original Microsoft curl announcement blog post)

Independent

Finally, I’d like to add that like all operating system distributions that ship curl (macOS, Linux distros, the BSDs, AIX, etc) Microsoft builds, packages and ships the curl binary completely independently from the actual curl project.

Sure I’ve been in contact with the good people working on this from their end, but they are working totally independently of us in the curl project. They mostly get our code, build it and ship it.

I of course hope that we will get bug fixes and improvements from their end going forward when they find problems or things to polish.

The future looks as great as ever before!

Update: in March 2018, they mentioned that curl comes in Windows 10 version 1803.
