I attended a TCP/IP Geeks Stockholm meetup yesterday and gave a talk about HTTP/2. Below is the slide set, but as usual it might not be entirely self-explanatory…

Update: the poll is now closed. The responses can be found here.

Now is the time. If you use curl or libcurl from time to time, please consider helping us out by providing your feedback and opinions on a few things:

It’ll take you a couple of minutes and it’ll help us a lot when making decisions going forward.

The poll is hosted by Google and that short link above will take you to:

https://docs.google.com/forms/d/1uQNYfTmRwF9RX5-oq_HV4VyeT1j7cxXpuBIp8uy5nqQ/viewform

I’m right now working on adding proper multiplexing to libcurl’s HTTP/2 code. So far we’ve only done a single stream per connection and while that works fine and is HTTP/2, applications will still want more when switching to HTTP/2 as the multiplexing part is one of the key components and selling features of the new protocol version.

As a starting point, I’m using the “enable HTTP pipelining” switch to tell libcurl it should consider multiplexing. It makes libcurl work as before by default. If you use the multi interface and enable pipelining, libcurl will try to re-use established connections and just add streams over them rather than creating new connections. Yes, this means that A) you need to use the multi interface to get the full HTTP/2 stuff and B) the curl tool won’t be able to take advantage of it since it doesn’t use the multi interface! (An old outstanding idea is to move the tool to use the multi interface and this would be yet another reason why this could be a good idea.)

We still have some decisions to make about how we want libcurl to act by default – especially when we can expect applications to use both HTTP/1.1 and HTTP/2 at the same time. Since we don’t know if the server supports HTTP/2 until after a certain point in the negotiation, we need to decide what to do when we issue N transfers at once to the same server that might speak HTTP/2… Right now, we get the best HTTP/2 behavior by telling libcurl we only want one connection per host but that is probably not ideal for an application that might use a mix of HTTP/1.1 and HTTP/2 servers.

There are some drawbacks with using that pipelining switch to allow multiplexing since users may very well want HTTP/2 multiplexing but not HTTP/1.1 pipelining since the latter is just riddled with interop problems.

Also, re-using the same options for limited connections to host names etc for both HTTP/1.1 and HTTP/2 may not at all be what real-world applications want or need.

libcurl API wise, each HTTP/2 stream is its own easy handle. It makes it simple and keeps the API paradigm very much in the same way it works for all the other protocols. It comes very naturally for the libcurl application author. If you set up three easy handles, all identifying a resource on the same server and you tell libcurl to use HTTP/2, it makes perfect sense that all these three transfers are made using a single connection.

Multiplexed data means that when reading from the socket, there is data arriving that belongs to other streams than just a single one. So we need to feed the received data into the different “data buckets” for the involved streams. It gives us a little internal challenge: we get easy handles with no socket activity to trigger a read, but there is data to take care of in the incoming buffer. I’ve solved this so far with a special trigger that says that there is data to take care of, so that the handle makes a read anyway, which then gets the data from the buffer.
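To make the bucket idea a bit more concrete, here is a toy sketch in Python. It is not libcurl’s code: the `Connection` class, its method names and the `(stream_id, payload)` frame tuples are all made up for illustration, and real HTTP/2 framing is a lot more involved. It only shows the two parts described above: sorting incoming data into per-stream buckets, and flagging streams so that their transfers do a read even though their own socket never signalled anything.

```python
from collections import defaultdict


class Connection:
    """Toy model of one multiplexed connection shared by several transfers."""

    def __init__(self):
        self.buckets = defaultdict(bytearray)  # stream id -> buffered bytes
        self.pending = set()                   # streams flagged for a forced read

    def on_socket_readable(self, frames):
        """Sort every received (stream_id, payload) frame into its bucket."""
        for stream_id, payload in frames:
            self.buckets[stream_id] += payload
            # Flag the stream so its transfer reads even without socket activity.
            self.pending.add(stream_id)

    def read(self, stream_id):
        """Drain the bucket for one stream and clear its trigger."""
        data = bytes(self.buckets.pop(stream_id, b""))
        self.pending.discard(stream_id)
        return data


conn = Connection()
# One socket read delivers interleaved frames for three streams.
conn.on_socket_readable([(1, b"he"), (3, b"wo"), (1, b"llo"), (5, b"!"), (3, b"rld")])
for sid in sorted(conn.pending):
    print(sid, conn.read(sid))
```

In this model a single read on the socket serves all three streams, and the `pending` set plays the role of the special trigger.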

HTTP/2 supports server push. That’s a stream that gets initiated from the server side without the client specifically asking for it. A resource the server deems likely that the client wants since it asked for a related resource, or similar. My idea is to support server push with the application setting up a transfer with an easy handle and associated options, but the URL would only identify the server so that it knows on which connection it would accept a push, and we will introduce a new option to libcurl that would tell it that this is an easy handle that should be used for the next server pushed stream on this connection.

Of course there are a few outstanding issues with this idea. Possibly we should allow an easy handle to get created when a new stream shows up so that we can better deal with a dynamic number of new streams being pushed.

It’d be great to hear from users who have ideas on how to use server push in a real-world application and how you’d imagine it could be used with libcurl.

My work in progress code for this drive can be found in two places.

First, I do the libcurl multiplexing development in the separate http2-multiplex branch in the regular curl repo:

https://github.com/bagder/curl/tree/http2-multiplex

Then, I put all my test setup and test client work in a separate repository just in case you want to keep up and reproduce my testing and experiments:

https://github.com/bagder/curl-http2-dev

All comments, questions, praise or complaints you may have on this are best sent to the curl-library mailing list. If you are planning on writing an HTTP/2 capable application or otherwise have thoughts or ideas about the API for this, please join in and tell me what you think. It is much better to get the discussions going early and work on different design ideas now before anything is set in stone rather than waiting for us to ship something semi-stable, as the closer to an actual release we get, the harder it’ll be to change the API.

As I write this, I’m repeatedly doing 99 parallel HTTP/2 streams with no data corruption… But there’s a lot more to be done before I’ll call it a victory.

The changelog is the name of a weekly podcast on which the hosts discuss open source and stuff.

Last Friday I was invited to participate and I joined hosts Adam and Jerod for an hour long episode about curl. It all started as a response to my post on curl 17 years, so we really got into how things started out and how curl has developed through the years, how much time I’ve spent on it and if I could mention a really great moment in time that stood out over the years?

The day before, they released the little separate teaser we made about the little known --remote-name-all command line option that basically makes curl default to do -O on all given URLs.

The full length episode can be experienced in all its glory here: https://changelog.com/podcast/153

Apigee posted this lovely picture over at twitter. A curl command line on the NASDAQ tower.

The protocol HTTP/2 as defined in draft-17 was approved by the IESG and is being implemented and deployed widely on the Internet today, even before it has turned up as an actual RFC. Back in February, already upwards of 5% or maybe even more of the web traffic was using HTTP/2.

My prediction: We’ll see >10% usage by the end of the year, possibly as much as 20-30% a little depending on how fast some of the major and most popular platforms will switch (Facebook, Instagram, Tumblr, Yahoo and others). In 2016 we might see HTTP/2 serve a majority of all HTTP requests – done by browsers at least.

Counted how? Yeah the second I mention a rate I know you guys will start throwing me hard questions like exactly what do I mean. What is Internet and how would I count this? Let me express it loosely: the share of HTTP requests (by volume of requests, not by bandwidth of data and not just counting browsers). I don’t know how to measure it and we can debate the numbers in December and I guess we can all end up being right depending on what we think is the right way to count!

Who am I to tell? I’m just a person deeply interested in protocols and HTTP/2, so I’ve been involved in the HTTP work group for years and I also work on several HTTP/2 implementations. You can guess as well as I, but this just happens to be my blog!

The HTTP/2 Implementations wiki page currently lists 36 different implementations. Let’s take a closer look at the current situation and prospects in some areas.

Firefox and Chrome have had solid support since a while back. Just use a recent version and you’re good.

Internet Explorer has been shown in a tech preview that spoke HTTP/2 fine. So, run that or wait for it to ship in a public version soon.

There is no news from Apple regarding support in Safari. Give up on them and switch over to a browser that keeps up!

Other browsers? Ask them what they do, or replace them with a browser that supports HTTP/2 already.

My estimate: By the end of 2015 the leading browsers with a market share way over 50% combined will support HTTP/2.

Apache HTTPd is still the most popular web server software on the planet. mod_h2 is a recent module for it that can speak HTTP/2 – still in “alpha” state. Give it time and help out in other ways and it will pay off.

Nginx has told the world they’ll ship HTTP/2 support by the end of 2015.

IIS was showing off HTTP/2 in the Windows 10 tech preview.

H2O is a newcomer on the market with focus on performance and they ship with HTTP/2 support since a while back already.

nghttp2 offers a HTTP/2 => HTTP/1.1 proxy (and lots more) to front your old server with and can then help you deploy HTTP/2 at once.

Apache Traffic Server supports HTTP/2 fine. Will show up in a release soon.

Also, netty, jetty and others are already on board.

HTTPS initiatives like Let’s Encrypt help to make it even easier to deploy and run HTTPS on your own sites, which will smooth the way for HTTP/2 deployments on smaller sites as well. Getting sites onto the TLS train will remain a hurdle and will be perhaps the single biggest obstacle to get even more adoption.

My estimate: By the end of 2015 the leading HTTP server products with a market share of more than 80% of the server market will support HTTP/2.

Squid works on HTTP/2 support.

HAproxy? I haven’t gotten a straight answer from that team, but Willy Tarreau has been actively participating in the HTTP/2 work all the time so I expect them to have work in progress.

While very critical of the protocol, PHK of the Varnish project has said that Varnish will support it if it gets traction.

My estimate: By the end of 2015, the leading proxy software projects will start to have or are already shipping HTTP/2 support.

Google (including Youtube and other sites in the Google family) and Twitter have run HTTP/2 enabled for months already.

Lots of existing services offer SPDY today and I would imagine most of them are considering and pondering how to switch to HTTP/2, as Chrome has already announced that it is going to drop SPDY during 2016 and Firefox will also abandon SPDY at some point.

My estimate: By the end of 2015 lots of the top sites of the world will be serving HTTP/2 or will be working on doing it.

Akamai plans to ship HTTP/2 by the end of the year. Cloudflare have stated that they “will support HTTP/2 once NGINX with it becomes available“.

Amazon has not given any response publicly that I can find for when they will support HTTP/2 on their services.

Not a totally bright situation but I also believe (or hope) that as soon as one or two of the bigger CDN players start to offer HTTP/2 the others might feel a bigger pressure to follow suit.

curl and libcurl have supported HTTP/2 since months back, and the HTTP/2 implementations page lists available implementations for just about all major languages now. Like node-http2 for JavaScript, http2-perl, http2 for Go, Hyper for Python, OkHttp for Java, http-2 for Ruby and more. If you do HTTP today, you should be able to switch over to HTTP/2 relatively easily.

I’m sure I’ve forgotten a few obvious points but I might update this as we go as soon as my dear readers point out my faults and mistakes!

My estimate: HTTP 1.1 will be around for many years to come. A double-digit percentage share of the existing sites on the Internet (and who knows how many that aren’t even accessible from the Internet) is going to stick with it for the foreseeable future. For technical reasons, for philosophical reasons and for good old we’ll-never-touch-it-again reasons.

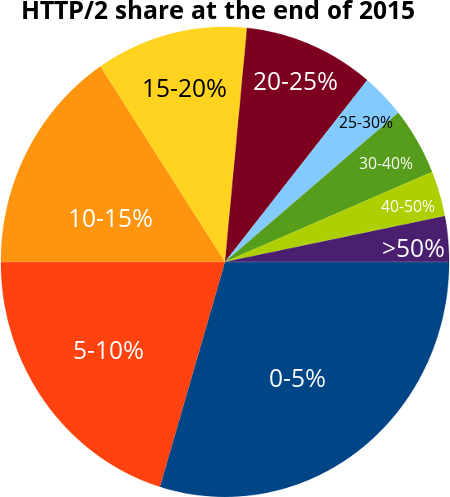

Finally, I asked friends on twitter, G+ and Facebook what they think the HTTP/2 share would be by the end of 2015 with the help of a little poll. This does of course not make it into any sound or statistically safe number but is still just a collection of what a set of random people guessed. A quick poll to get a rough feel. This is how the 64 responses I received were distributed:

Evidently, if you take a median out of these results you can see that the middle point is between 5-10 and 10-15. I’ll make it easy and say that the poll showed a group estimate of 10%. Ten percent of the total HTTP traffic to be HTTP/2 at the end of 2015.

I didn’t vote here but I would’ve checked the 15-20 choice, thus a fair bit over the median but only slightly into the top quarter.

In plain numbers this was the distribution of the guesses:

| Guessed HTTP/2 share | Share of responses (count) |
| --- | --- |
| 0-5% | 29.1% (19) |
| 5-10% | 21.8% (13) |
| 10-15% | 14.5% (10) |
| 15-20% | 10.9% (7) |
| 20-25% | 9.1% (6) |
| 25-30% | 3.6% (2) |
| 30-40% | 3.6% (3) |
| 40-50% | 3.6% (2) |
| more than 50% | 3.6% (2) |
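The median reading can be checked from the response counts in the table. A small Python sketch follows; the `bucket_of` helper is just a name I made up for this, and it uses only the counts in parentheses, not the percentages. With an even number of responses, the median sits between the two middle guesses, so the sketch looks up which bucket each of them falls in.

```python
# Guess counts copied from the table above, in bucket order.
counts = [
    ("0-5%", 19), ("5-10%", 13), ("10-15%", 10), ("15-20%", 7), ("20-25%", 6),
    ("25-30%", 2), ("30-40%", 3), ("40-50%", 2), ("more than 50%", 2),
]


def bucket_of(rank, counts):
    """Return the bucket holding the rank-th lowest guess (1-based)."""
    seen = 0
    for label, n in counts:
        seen += n
        if rank <= seen:
            return label
    raise ValueError("rank exceeds number of responses")


total = sum(n for _, n in counts)
# For an even total, the two middle responses straddle the median.
lower_mid, upper_mid = total // 2, total // 2 + 1
print(total, bucket_of(lower_mid, counts), bucket_of(upper_mid, counts))
```

The two middle responses land in the 5-10% and 10-15% buckets, which is where the 10% group estimate comes from.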

I use a Func KB-460 keyboard with Nordic layout – that basically means it is a qwerty design with the Nordic keys for “åäö” on the right side as shown on the picture above. (yeah yeah Swedish has those letters fairly prominent in the language, don’t mock me now)

The most annoying part with this keyboard has been that the key repeat on the apostrophe key has been sort of broken. If you pressed it and then another key, it would immediately generate another (or more than one) apostrophe. I’ve sort of learned to work around it with some muscle memory and treating the key with care but it hasn’t been ideal.

This problem is apparently only happening on Linux someone told me (I’ve never used it on anything else) and what do you know? Here’s how to fix it on a recent Debian machine that happens to run and use systemd so your mileage will vary if you have something else:

1. Edit the file “/lib/udev/hwdb.d/60-keyboard.hwdb”. It contains keyboard mappings of scan codes to key codes for various keyboards. We will add a special line for a single scan code and for this particular keyboard model only. The line includes the USB vendor and product IDs in uppercase and you can verify that it is correct with lsusb -v and check your own keyboard.

So, add something like this at the end of the file:

    # func KB-460
    keyboard:usb:v195Dp2030*
     KEYBOARD_KEY_70031=reserved

2. Now update the database:

$ udevadm hwdb --update

3. … and finally reload the tweaks:

$ udevadm trigger

4. Now you should have a better working key and life has improved!
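If you want to adapt the match line in step 1 to another keyboard, the only fiddly part is getting the vendor and product IDs into the right shape. lsusb prints them as lowercase `vendor:product`, while the hwdb line wants them in uppercase. Here is a tiny Python sketch of that conversion; the `hwdb_match` helper is a hypothetical name of mine, and the `keyboard:usb` prefix is simply the one used in the entry above, so treat it as an assumption for your own system.

```python
def hwdb_match(usb_id, prefix="keyboard:usb"):
    """Turn an lsusb-style 'vendor:product' ID into an hwdb match line."""
    vendor, product = usb_id.split(":")
    # Parse as hex to validate, then print as four uppercase hex digits.
    return f"{prefix}:v{int(vendor, 16):04X}p{int(product, 16):04X}*"


print(hwdb_match("195d:2030"))
```

For the Func KB-460 this gives back the same match line as in the entry above.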

With a slightly older Debian without systemd, these are the instructions I got. I have not tested them myself but I include them here for the world:

1. Find the relevant input for the device by “cat /proc/bus/input/devices”

2. Make a very simple keymap. Make a file with only a single line like this:

$ cat /lib/udev/keymaps/func

0x70031 reserved

3. Map the key with ‘keymap’:

$ sudo /lib/udev/keymap -i /dev/input/eventX /lib/udev/keymaps/func

where X is the event number you figured out in step 1.

The related kernel issue.

I blogged about curl’s 17th birthday on March 20th 2015. I’ve done similar posts in the past and they normally pass by mostly undetected and hardly discussed. This time, something else happened.

Primarily, the blog post quickly became the single most viewed blog entry I’ve ever written – and I’ve been doing it for many many years. Already in the first day it was up, I counted more than 65,000 views.

The blog post got more comments than any other blog post I’ve ever done. Right now they have probably stopped but there are 60 of them now, almost every one of them saying congratulations and/or thanks.

The posting also got discussed on both hacker news and reddit, totaling more than 260 comments. Most of those in positive spirit.

The initial tweet I made about my blog post is the most retweeted and starred tweet I’ve ever posted. At least 87 retweets and 49 favorites (it might even grow a bit more over time). Others subsequently also tweeted the link hundreds of times. I got numerous replies and friendly call-outs on twitter saying “congrats” and “thanks” in many variations.

Spontaneously (ie not initiated or requested by me but most probably because of a comment on hacker news), I also suddenly started to get donations from the curl web site’s donation web page (to paypal). Within 24 hours from my post, I had received 35 donations from friendly fans who donated a total sum of 445 USD. A quick count revealed that the total number of donations ever through the history of curl’s lifetime was 43 before this day. In one day we had basically gotten as many as we had gotten the first 17 years.

Interesting data from this donation “race”: I got donations varying from 1 USD (yes one dollar) to 50 USD and the average donation was then 12.7 USD.
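The average is easy to double check from the numbers above. A throwaway Python sketch, with variable names that are my own:

```python
donations_in_a_day = 35   # donations received within 24 hours of the post
total_usd = 445           # their combined sum in USD
donations_before = 43     # all donations ever received before that day

average = total_usd / donations_in_a_day
print(f"average donation: {average:.1f} USD")
print(f"compared to all earlier donations: {donations_in_a_day / donations_before:.0%}")
```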

Let me end this summary by thanking everyone who in various ways made the curl birthday extra fun by being nice and friendly and some even donating some of their hard earned money. I am honestly touched by the attention and all the warmth and positiveness. Thank you for proving internet comments can be this good!

Today we celebrate the fact that it is exactly 17 years since the first public release of curl. I have always been the lead developer and maintainer of the project.

When I released that first version in the spring of 1998, we had only a handful of users and a handful of contributors. curl was just a little tool and we were still a few years out before libcurl would become a thing of its own.

The tool we had been working on for a while was still called urlget in the beginning of 1998 but as we just recently added FTP upload capabilities that name turned wrong and I decided cURL would be more suitable. I picked ‘cURL’ because the word contains URL and already then the tool worked primarily with URLs, and I thought that it was fun to partly make it a real English word “curl” but also that you could pronounce it “see URL” as the tool would display the contents of a URL.

Much later, someone (I forget who) came up with the “backronym” Curl URL Request Library which of course is totally awesome.

17 years are 6209 days. During this time we’ve done more than 150 public releases containing more than 2600 bug fixes!
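For those who want to verify the day count, here is a small Python sketch. It assumes the first public release was on March 20th 1998, which is what “exactly 17 years” before the March 20th 2015 birthday works out to.

```python
from datetime import date

first_release = date(1998, 3, 20)   # assumed date of the first public release
birthday = date(2015, 3, 20)        # curl's 17th birthday

days = (birthday - first_release).days
print(days)
```

That is 17 times 365 days plus the four leap days in 2000, 2004, 2008 and 2012.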

We started out GPL licensed, switched to MPL and then landed in MIT. We started out using RCS for version control, switched to CVS and then git. But it has stayed written in good old C the entire time.

The term “Open Source” was coined in 1998 when the Open Source Initiative was started just the month before curl was born, which was preceded by just a few days by the announcement from Netscape that they would free their browser code and make an open browser.

We’ve hosted parts of our project on servers run by the various companies I’ve worked for and we’ve been on and off various free services. Things come and go. Virtually nothing stays the same so we better just move with the rest of the world. These days we’re on github a lot. Who knows how long that will last…

We have grown to support a ridiculous number of protocols and curl can be built to run on virtually every modern operating system and CPU architecture.

The list of helpful souls who have contributed to make curl into what it is now has grown at a steady pace all through the years and it now holds more than 1200 names.

Employments

In 1998, I was employed by a company named Frontec Tekniksystem. I would later leave that company and today there’s nothing left in Sweden using that name as it was sold and most employees later fled away to other places. After Frontec I joined Contactor for many years until I started working for my own company, Haxx (which we started on the side many years before that), during 2009. Today, I am employed by my fourth company during curl’s lifetime: Mozilla. All through this project’s lifetime, I’ve kept my work situation separate and I believe I haven’t allowed it to disturb our project too much. Mozilla is however the first one that actually allows me to spend a part of my time on curl and still get paid for it!

The Netscape announcement which was made 2 months before curl was born later became Mozilla and the Firefox browser. Where I work now…

Future

I’m not one of those who spend time gazing toward the horizon dreaming of future grandness and making up plans on how to go there. I work on stuff right now to work tomorrow. I have no idea what we’ll do and work on a year from now. I know a bunch of things I want to work on next, but I’m not sure I’ll ever get to them or whether they will actually ship or if they perhaps will be replaced by other things in that list before I get to them.

The world, the Internet and transfers are all constantly changing and we’re adapting. No long-term dreams other than sticking to the very simple and single plan: we do file-oriented internet transfers using application layer protocols.

Rough estimates say we may have a billion users already. Chances are, if things don’t change too drastically without us being able to keep up, that we will have even more in the future.

It has to feel good, right?

I will of course point out that I did not take curl to this point on my own, but that aside, the ego-boost this level of success brings is beyond imagination. Thinking about how my code has ended up in so many places, and is driving so many little pieces of modern network technology, is truly mind-boggling. When I specifically sit down or get a reason to think about it at least.

Most of the days however, I tear my hair when fixing bugs, or I try to rephrase my emails to not sound old and bitter (even though I can very well be that) when I once again try to explain things to users who can be extremely unfriendly and whining. I spend late evenings on curl when my wife and kids are asleep. I escape my family and rob them of my company to improve curl even on weekends and vacations. Alone in the dark (mostly) with my text editor and debugger.

There’s no glory and there’s no eternal bright light shining down on me. I have not climbed up onto a level where I have a special status. I’m still the same old me, hacking away on code for the project I like and that I want to be as good as possible. Obviously I love working on curl so much I’ve been doing it for over seventeen years already and I don’t plan on stopping.

Celebrations!

Yeps. I’ll get myself an extra drink tonight and I hope you’ll join me. But only one, we’ll get back to work again afterward. There are bugs to fix, tests to write and features to add. Join in the fun! My backlog is only growing…

I mentioned the talk before, and now the video has been made available. About 25 minutes with me presenting curl.