Here’s a closer look at three new features that we’re shipping in curl and libcurl 7.49.0, to be released on May 18th 2016.

connect to this instead

If you’re one of the users who thought --resolve and doing Host: header tricks with --header weren’t good enough, you’ll appreciate that we’re adding yet another option for you to fiddle with the connection procedure. Another “Swiss army knife style” option for those of you who know what you’re doing.

With --connect-to you provide an internal alias: whenever curl would connect to a certain name + port, it internally connects to another name + port instead.

Instead of connecting to HOST1:PORT1, connect to HOST2:PORT2

It is very similar to --resolve, which is a way to say: when connecting to HOST1:PORT1, use the address ADDR2. --resolve effectively prepopulates the internal DNS cache, so curl avoids the DNS lookup completely and uses the IP address you gave it instead.

--connect-to doesn’t avoid the DNS lookup, but it will make sure that a different host name and destination port pair is used than the one found in the URL. A typical use case for this would be to make sure that your curl request asks a specific server out of several in a pool, where each has a unique name but you normally reach them with a single URL whose host name is otherwise load balanced.

--connect-to can be specified multiple times to add mappings for multiple names, so that even following HTTP redirects to other host names etc can be handled. You don’t even necessarily have to redirect the first used host name.

The libcurl option name for this feature is CURLOPT_CONNECT_TO.

Michael Kaufmann brought this feature.

http2 prior knowledge

In our ongoing quest to provide more and better HTTP/2 support in a world that is slowly but steadily doing more and more transfers over the new version of the protocol, curl now offers --http2-prior-knowledge.

As the name might hint, this is a way to tell curl that you have “prior knowledge” that the URL you specify goes to a host that you know supports HTTP/2. The term prior knowledge is in fact used in the HTTP/2 spec (RFC 7540) for this scenario.

Normally when given an HTTP:// or an HTTPS:// URL, there will be no assumption that it supports HTTP/2, but curl will then try to upgrade it from HTTP/1. The command line tool even tries to upgrade all HTTPS:// URLs by default, and libcurl can be told to do so.

With libcurl, you ask for prior knowledge by setting CURLOPT_HTTP_VERSION to CURL_HTTP_VERSION_2_PRIOR_KNOWLEDGE.

Asking for http2 prior knowledge when the server does in fact not support HTTP/2 will give you an error back.

Diego Bes brought this feature.

TCP Fast Open

TCP Fast Open is documented in RFC 7413 and is basically a way to pass on data to the remote machine earlier in the TCP handshake – already in the SYN and SYN-ACK packets. The point, of course, is to get data across faster and reduce latency.

The --tcp-fastopen option is supported on Linux and OS X only for now.

This is an idea and technique that has been around for a while and it is slowly getting implemented and supported by servers. There have been some reports of problems in the wild when “middle boxes” that fiddle with TCP traffic see these packets, which sometimes results in breakage. So this option is opt-in to avoid the risk that it causes problems for users.

A typical real-world case where you would use this option is when sending an HTTP POST to a site you don’t have a connection already established to. Just note that TFO relies on the client having had contact established with the server before and having a special TFO “cookie” stored and non-expired.

TCP Fast Open is so far only used for clear-text TCP protocols in curl. These days more and more protocols switch over to their TLS counterparts (and there’s room for future improvements to add the initial TLS handshake parts with TFO). A related option to speed up TLS handshakes is --false-start (supported with the NSS or the secure transport backends).

With libcurl, you enable TCP Fast Open with CURLOPT_TCP_FASTOPEN.

Alessandro Ghedini brought this feature.

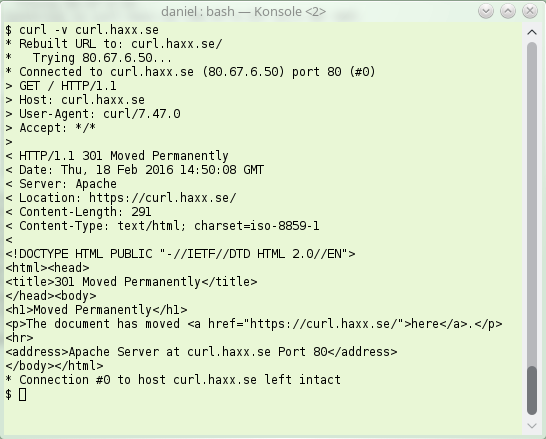
