Things went south early this morning. My wife was about to work from home and called me before 8am asking for help getting online, as the wireless Internet access didn’t work for her.

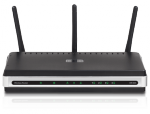

As this has happened on some occasions before, she knew she might need to reboot the wifi router to get things running again. So she did. Only this time, when she inserted the power plug again, not a single LED turned on. None. She yanked it out and re-inserted it. Nothing.

Okay, so she was not able to use the wifi and the router was dead.

At lunch, I took a short walk in the sunshine to my nearest “Kjell & Co” and got myself a new wifi router. I brought it home with me after work and immediately replaced the dead one with the new shiny one. I ran upstairs (most of my network gear is under the staircase on the bottom floor while my main computer and work space are on the upper floor), configured the new router with the static IP and those things that need to be there and…

…weird, I still can’t access the Internet!

I then decided to do the power cycle dance with the ADSL modem as well. I could see how the “WAN” LED blinked and turned stable, and then I could actually send several ping packets (that got responses) before the connection broke again and the WAN LED on the modem switched off again. I retried the power cycle procedure but the LED stayed off.

I called customer support for my ADSL service (Bredbandsbolaget) and they immediately spotted how old my modem is, indicating that it was probably the reason for the failure and set me up to receive a free replacement unit within 2-3 days.

This left me with several problems still nudging my brain:

- Why would two devices standing next to each other, connected with a cat5 cable, suddenly break on the same day when they have both been running flawlessly like this for years? I had perfect network access when I went to bed last night and there were no power outages, lightning strikes or similar.

- Why and how could the customer service so quickly judge that the reason was the age of my modem? I get the sense they just knee-jerk the replacement unit because of the age of mine and there’s a rather big risk that when I plug in the new modem in a few days it will show the same symptoms…

- 2-3 days!! Gaaaah. Thank God I can tether with my phone, but man, 3G may be nice and all but it’s not like the trusty old 12mbit ADSL I tend to get. Not to mention that the RTT is much worse, and that’s a factor for me as I use SSH to remote machines quite a lot.

I guess I will find out when the new hardware arrives. I may have reason to write a follow-up then. I hope not!

Update on September 23rd:

A new ADSL modem arrived just two days after my call and yay, it could sync and I could use the Internet. Unfortunately something was still wrong though, as my telephone didn’t work (I have an IP-telephony service that goes through the ADSL box). It took me until Sunday to call customer service again, and on Tuesday a second replacement modem arrived, which I installed on Thursday and… now even the phone works!

I never figured out why both devices died, but the end result is that my 802.11n wifi works properly with speeds above 6.5MB/sec in my house.

I have my own house. My thinking is that copper-based technologies, such as the up-to-24mbit-but-really-12mbit ADSL I have now (I have some 700 meters or so to the nearest station), have reached something of an end of the road. I had 3 mbit/sec ADSL almost ten years ago: obviously not a lot of improvement is happening in this area. We need to look elsewhere in order to up our connection speeds. I think getting a proper fibre connection to the house will be a good thing for years to come. I don’t expect wireless/radio techniques to be able to compete properly, at least not within the next few years.
