Status: 00:27 in the morning of December 4 my account was restored again. No words or explanations on how it happened – yet.

This morning (December 3rd, 2020) I woke up to find myself logged out from my Twitter account on the devices where I was previously logged in. Due to “suspicious activity” on my account. I don’t know the exact time this happened. I checked my phone at around 07:30 and then it has obviously already happened. So at time time over night.

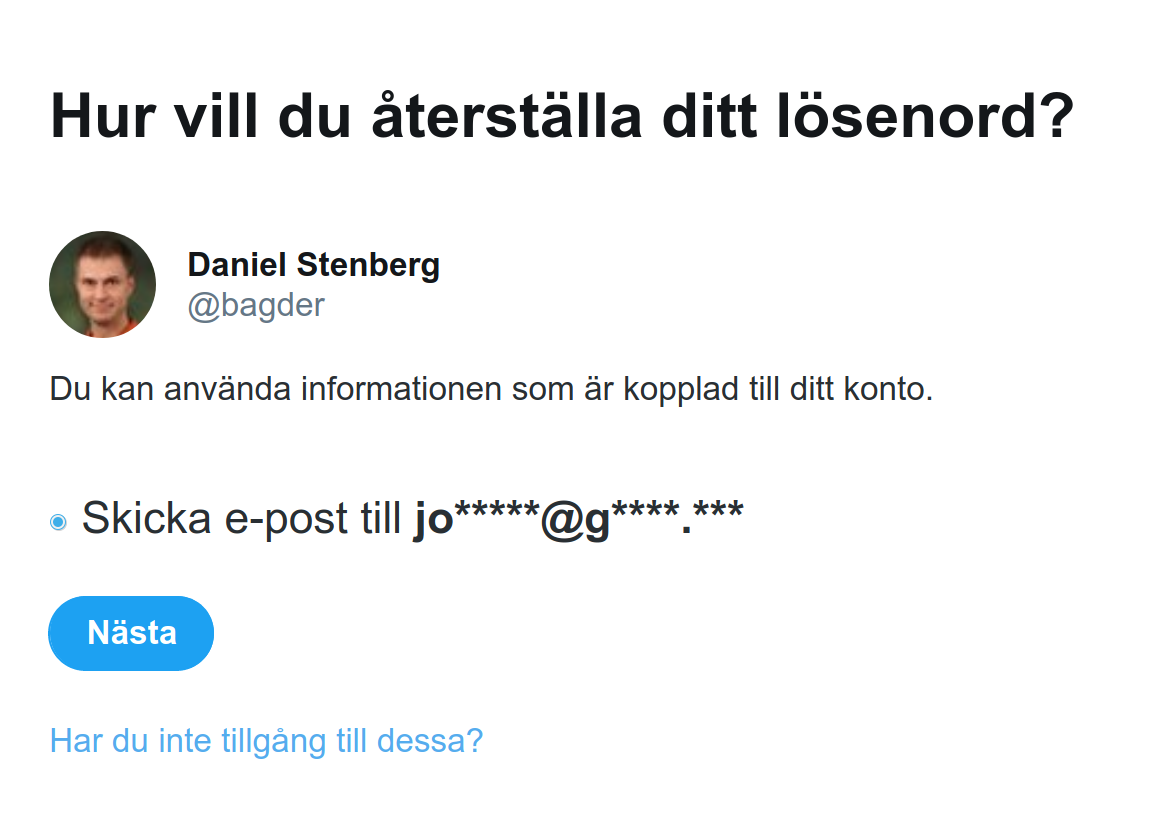

Trying to log back in, I get prompted saying I need to update my password first. Trying that, it wants to send a confirmation email to an email address that isn’t mine! Someone has managed to modify the email address associated with my account.

It has only been two weeks since someone hijacked my account the last time and abused it for scams. When I got the account back, I made very sure I both set a good, long, password and activated 2FA on my account. 2FA with auth-app, not SMS.

The last time I wasn’t really sure about how good my account security was. This time I know I did it by the book. And yet this is what happened.

Communication

I was in touch with someone at Twitter security and provided lots of details of my systems , software, IP address etc while they researched their end about what happened. I was totally transparent and gave them all info I had that could shed some light.

I was contacted by a Sr. Director from Twitter (late Dec 4 my time). We have a communication established and I’ve been promised more details and information at some point next week. Stay tuned.

Was I breached?

Many people have proposed that the attacker must have come through my local machine to pull this off. If someone did, it has been a very polished job as there is no trace at all of that left anywhere on my machine. Also, to reset my password I would imagine the attacker would need to somehow hijack my twitter session, need the 2FA or trigger a password reset and intercept the email. I don’t receive emails on my machine so the attacker would then have had to (also?) manage to get into my email machine and removed that email – and not too many others because I receive a lot of email and I’ve kept on receiving a lot of email during this period.

I’m not ruling it out. I’m just thinking it seems unlikely.

If the attacker would’ve breached my phone and installed something nefarious on that, it would not have removed any reset emails and it seems like a pretty touch challenge to hijack a “live” session from the Twitter client or get the 2FA code from the authenticator app. Not unthinkable either, just unlikely.

Most likely?

As I have no insights into the other end I cannot really say which way I think is the most likely that the perpetrator used for this attack, but I will maintain that I have no traces of a local attack or breach and I know of no malicious browser add-ons or twitter apps on my devices.

Details

Firefox version 83.0 on Debian Linux with Tweetdeck in a tab – a long-lived session started over a week ago (ie no recent 2FA codes used),

Browser extensions: Cisco Webex, Facebook container, multi-account containers, HTTPS Everywhere, test pilot and ublock origin.

I only use one “authorized app” with Twitter and that’s Tweetdeck.

On the Android phone, I run an updated Android with an auto-updated Twitter client. That session also started over a week ago. I used Google Authenticator for 2fa.

While this hijack took place I was asleep at home (I don’t know the exact time of it), on my WiFi, so all my most relevant machines would’ve been seen as originating from the same “NATed” IP address. This info was also relayed to Twitter security.

Restored

The actual restoration happens like this (and it was the exact same the last time): I just suddenly receive an email on how to reset my password for my account.

The email is a standard one without any specifics for this case. Just a template press the big button and it takes you to the Twitter site where I can set a new password for my account. There is nothing in the mail that indicates a human was involved in sending it. There is no text explaining what happened. Oh, right, the mail also include a bunch of standard security advice like “use a strong password”, “don’t share your password with others” and “activate two factor” etc as if I hadn’t done all that already…

It would be prudent of Twitter to explain how this happened, at least roughly and without revealing sensitive details. If it was my fault somehow, or if I just made it easier because of something in my end, I would really like to know so that I can do better in the future.

What was done to it?

No tweets were sent. The name and profile picture remained intact. I’ve not seen any DMs sent or received from while the account was “kidnapped”. Given this, it seems possible that the attacker actually only managed to change the associated account email address.