The RFC 7838 was published already in April 2016. It describes the new HTTP header Alt-Svc, or as the title of the document says HTTP Alternative Services.

HTTP Alternative Services

An alternative service in HTTP lingo is a quite simply another server instance that can provide the same service and act as the same origin as the original one. The alternative service can run on another port, on another host name, on another IP address, or over another HTTP version.

An HTTP server can inform a client about the existence of such alternatives by returning this Alt-Svc header. The header, which has an expiry time, tells the client that there’s an optional alternative to this service that is hosted on that host name, that port number using that protocol. If that client is a browser, it can connect to the alternative in the background and if that works out fine, continue to use that host for the rest of the time that alternative is said to work.

In reality, this header becomes a little similar to the DNS records SRV or URI: it points out a different route to the server than what the A/AAAA records for it say.

The Alt-Svc header came into life as an attempt to help out with HTTP/2 load balancing, since with the introduction of HTTP/2 clients would suddenly use much more persistent and long-living connections instead of the very short ones used for traditional HTTP/1 web browsing which changed the nature of how connections are done. This way, a system that is about to go down can hint the clients on how to continue using the service, elsewhere.

Alt-Svc: h2="backup.example.com:443"; ma=2592000;

HTTP upgrades

Once that header was published, the by then already existing and deployed Google QUIC protocol switched to using the Alt-Svc header to hint clients (read “Chrome users”) that “hey, this service is also available over gQUIC“. (Prior to that, they used their own custom alternative header that basically had the same meaning.)

This is important because QUIC is not TCP. Resources on the web that are pointed out using the traditional HTTPS:// URLs, still imply that you connect to them using TCP on port 443 and you negotiate TLS over that connection. Upgrading from HTTP/1 to HTTP/2 on the same connection was “easy” since they were both still TCP and TLS. All we needed then was to use the ALPN extension and voila: a nice and clean version negotiation.

To upgrade a client and server communication into a post-TCP protocol, the only official way to it is to first connect using the lowest common denominator that the HTTPS URL implies: TLS over TCP, and only once the server tells the client what more there is to try, the client can go on and try out the new toys.

For HTTP/3, this is the official way for HTTP servers to tell users about the availability of an HTTP/3 upgrade option.

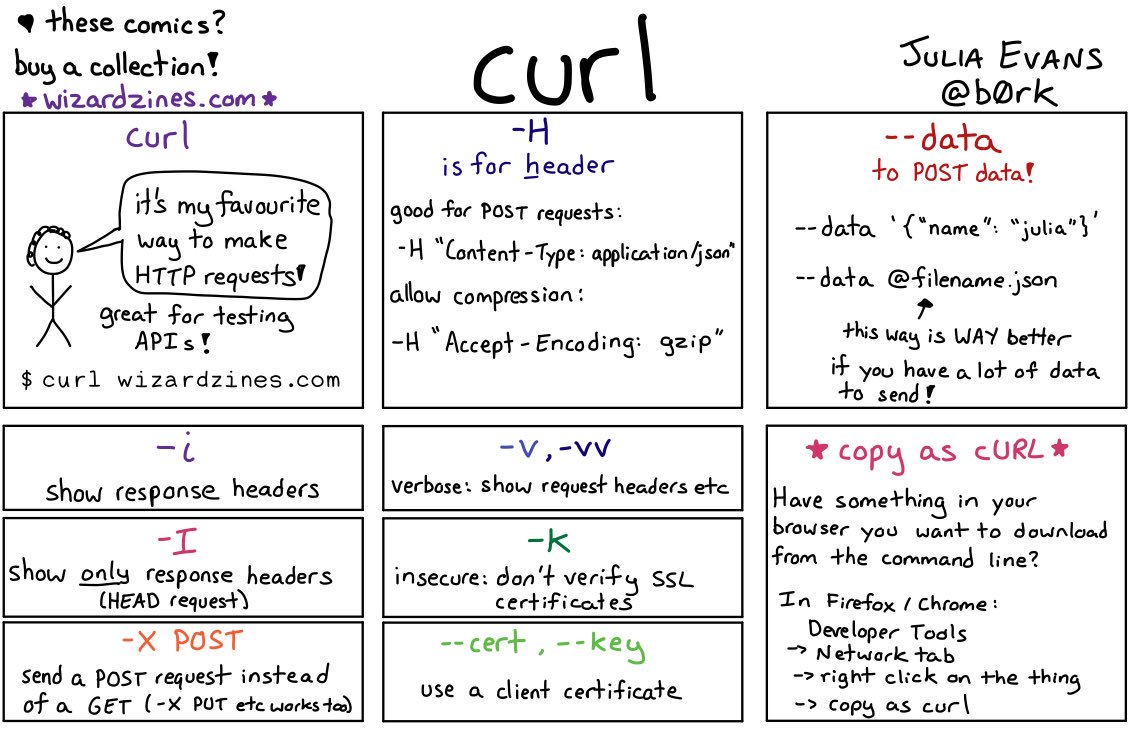

curl

I want curl to support HTTP/3 as soon as possible and then as I’ve mentioned above, understanding Alt-Svc is a key prerequisite to have a working “bootstrap”. curl needs to support Alt-Svc. When we’re implementing support for it, we can just as well support the whole concept and other protocol versions and not just limit it to HTTP/3 purposes.

curl will only consider received Alt-Svc headers when talking HTTPS since only then can it know that it actually speaks with the right host that has the authority enough to point to other places.

Experimental

This is the first feature and code that we merge into curl under a new concept we do for “experimental” code. It is a way for us to mark this code as: we’re not quite sure exactly how everything should work so we allow users in to test and help us smooth out the quirks but as a consequence of this we might actually change how it works, both behavior and API wise, before we make the support official.

We strongly discourage anyone from shipping code marked experimental in production. You need to explicitly enable this in the build to get the feature. (./configure –enable-alt-svc)

But at the same time we urge and encourage interested users to test it out, try how it works and bring back your feedback, criticism, praise, bug reports and help us make it work the way we’d like it to work so that we can make it land as a “normal” feature as soon as possible.

Ship

The experimental alt-svc code has been merged into curl as of commit 98441f3586 (merged March 3rd 2019) and will be present in the curl code starting in the public release 7.64.1 that is planned to ship on March 27, 2019. I don’t have any time schedule for when to remove the experimental tag but ideally it should happen within just a few release cycles.

alt-svc cache

The curl implementation of alt-svc has an in-memory cache of known alternatives. It can also both save that cache to a text file and load that file back into memory. Saving the alt-svc cache to disk allows it to survive curl invokes and to truly work the way it was intended. The cache file stores the expire timestamp per entry so it doesn’t matter if you try to use a stale file.

curl –alt-svc

Caveat: I now talk about how a feature works that I’ve just above said might change before it ships. With the curl tool you ask for alt-svc support by pointing out the alt-svc cache file to use. Or pass a “” (empty name) to make it not load or save any file. It makes curl load an existing cache from that file and at the end, also save the cache to that file.

curl also already since a long time features fancy connection options such as –resolve and –connect-to, which both let a user control where curl connects to, which in many cases work a little like a static poor man’s alt-svc. Learn more about those in my curl another host post.

libcurl options for alt-svc

We start out the alt-svc support for libcurl with two separate options. One sets the file name to the alt-svc cache on disk (CURLOPT_ALTSVC), and the other control various aspects of how libcurl should behave in regards to alt-svc specifics (CURLOPT_ALTSVC_CTRL).

I’m quite sure that we will have reason to slightly adjust these when the HTTP/3 support comes closer to actually merging.