I did this 50 minute talk on May 21 2015 for a Swedish company, subtitled with tongue in cheek “from hobby to world domination”. I think it turned out pretty decent and it covers what the project is, how we work on it and what I do to make it run. Some of the questions are not easy to hear but in general it works out fine. Enjoy!

daniel weekly

My series of weekly videos, for lack of a better name called daniel weekly, reached episode 35 today. I’m celebrating this fact by also adding an RSS-feed for those of you who prefer to listen to me in an audio-only version.

As an avid podcast listener myself, I can certainly see how this will be a better fit to some. Most of these videos are just me talking anyway so losing the visual shouldn’t be much of a problem.

A typical episode

I talk about what I work on in my open source projects, which means a lot of curl stuff and occasional stuff from my work on Firefox for Mozilla. I also tend to mention events I attend and HTTP/networking developments that I find interesting. Lots of HTTP/2 talk for example. I only ever express my own personal opinions.

It is generally an extremely geeky and technical video series.

Every week I mention a (curl) “bug of the week” that allows me to joke or rant about the bug in question or just mention what it is about. In episode 31 I started my “command line options of the week” series in which I explain one or a few curl command line options with some amount of detail. There are over 170 options so the series is bound to continue for a while. I’ve explained ten options so far.

I’ve set a limit for myself and I make an effort to keep the episodes shorter than 20 minutes. I’ve not succeeded every time.

Analytics

The 35 episodes have been viewed over 17,000 times in total. Episode two is the most watched individual one with almost 1,500 views.

Right now, my channel has 190 subscribers.

The top-3 countries that watch my videos: USA, Sweden and UK.

Share of viewers that are female: 3.7%

server push to curl

The next step in my efforts to complete curl’s HTTP/2 implementation, after having made sure downloading and uploading transfers in parallel work, was adding support for HTTP/2 server push.

A quick recap

HTTP/2 Server push is a way for the server to initiate the transfer of a resource. Like when the client asks for resource X, the server can deem that the client most probably also wants to have resource Y and Z and initiate their transfers.

The server then sends a PUSH_PROMISE to the client for the new resource and hands over a set of “request headers” that a GET for that resource could have used, and then it sends the resource in a way that it would have done if it was requested the “regular” way.

The push promise frame gives the client the information it needs to decide whether the resource is wanted or not, and it can then immediately deny the transfer if it considers it unwanted. A browser, for example, might do so if it already has that file in its local cache. If not denied, the stream has an initial window size that allows the server to send a certain amount of data before the client has to give the stream more allowance to continue.
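
The window mechanics can be illustrated with a tiny model. This is only a sketch of the idea and not libcurl code: the struct and function names are made up for the illustration, and the only value taken from the specification is the default initial window size of 65,535 octets in RFC 7540.

```c
#include <assert.h>
#include <stddef.h>

/* Default initial flow-control window in HTTP/2 (RFC 7540): 65,535 octets */
#define H2_DEFAULT_INITIAL_WINDOW 65535

/* Hypothetical model of one stream's send allowance, not libcurl code */
struct stream_window {
  size_t remaining; /* bytes the server may still send on this stream */
};

void window_init(struct stream_window *w)
{
  assert(w);
  w->remaining = H2_DEFAULT_INITIAL_WINDOW;
}

/* The sender wants to send 'want' bytes. Returns how many bytes it is
   allowed to send right now and shrinks the window accordingly. */
size_t window_send(struct stream_window *w, size_t want)
{
  size_t allowed;
  assert(w);
  allowed = want < w->remaining ? want : w->remaining;
  w->remaining -= allowed;
  return allowed;
}

/* The receiver grants more allowance (a WINDOW_UPDATE frame in HTTP/2) */
void window_update(struct stream_window *w, size_t increment)
{
  assert(w);
  w->remaining += increment;
}
```

A server pushing a 100,000 byte resource into a fresh window would get to send the first 65,535 bytes and then has to wait until the client calls the equivalent of window_update().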

It is also worth remembering that server push is a new protocol feature in HTTP/2. As such it has not been widely used yet, and it remains to be seen exactly how it will best be used and what will turn out popular and useful. We have this “immaturity” in mind when designing this support for libcurl.

Enter libcurl

When setting up a transfer over HTTP/2 with libcurl you do it with the multi interface to make it able to work multiplexed. That way you can set up and perform any number of transfers in parallel, and if they happen to use the same host they can be done multiplexed but if they use different hosts they will use separate connections.

To the application, transfers pretty much look the same and it can remain agnostic to whether the transfer is multiplexed or not, it is just another transfer.

With the libcurl API, the application creates an “easy handle” for each transfer and sets options in that handle for the upcoming transfer before it adds the handle to the “multi handle”. libcurl then drives all those individual transfers at the same time.

Server-initiated transfers

Starting in the future version 7.44.0 – planned release date in August, the plan is to introduce the API support for server push. It couldn’t happen sooner because I missed the merge window for 7.43.0 and then 7.44.0 is simply the next opportunity. The wiki link here is however updated and reflects what is currently being implemented.

An application sets a callback to allow server pushed streams. The callback gets called by libcurl when a PUSH_PROMISE is received by the client side, and the callback can then tell libcurl if the new stream should be allowed or not. It could be as simple as this:

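static int server_push_callback(CURL *parent,
                                CURL *easy,
                                size_t num_headers,
                                struct curl_pushheaders *headers,
                                void *userp)
{
  char *headp;
  FILE *out;

  /* these arguments are not needed in this simple example */
  (void)parent;
  (void)num_headers;
  (void)userp;

  /* here's a new stream, save it in a file. A real application would
     pick a unique file name for each new push. */
  out = fopen("push-stream", "wb");
  if(!out)
    /* nowhere to store the data, so refuse the pushed stream */
    return CURL_PUSH_DENY;

  /* write to this file */
  curl_easy_setopt(easy, CURLOPT_WRITEDATA, out);

  headp = curl_pushheader_byname(headers, ":path");
  if(headp)
    fprintf(stderr, "The PATH is %s\n", headp);

  return CURL_PUSH_OK;
}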

The callback would instead return CURL_PUSH_DENY if the stream isn’t desired. If no callback is set, no pushes will be accepted.

An interesting effect of this API is that libcurl now creates and adds easy handles to the multi handle by itself when the callback okays it, so there will be more easy handles to clean up at the end of the operations than the application added. Each pushed transfer needs to get cleaned up by the application, which “inherits” the ownership of the transfer and the easy handle for it.

PUSH_PROMISE headers

The headers passed along in that frame will contain the mandatory “special” request ones (“:method”, “:path”, “:scheme” and “:authority”) but other than those it really isn’t certain which other headers servers will provide and how this will work. To prepare for this fact, we provide two accessor functions for the push callback to access all PUSH_PROMISE headers libcurl received:

- curl_pushheader_byname() lets the callback get the contents of a specific header. I imagine that “:path” for example is one of those that most typical push callbacks will want to take a closer look at.

- curl_pushheader_bynum() allows the function to iterate over all received headers and do whatever it needs to do; it gets the full header by index.

These two functions are also somewhat special and new in the libcurl world since they are only possible to use from within this particular callback and they are invalid and wrong to use in any and all other contexts.

HTTP/2 headers are compressed on the wire using HPACK compression, but when accessed from this callback all headers use the familiar HTTP/1.1 style of “name:value”.
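
One detail to watch out for when splitting such a string yourself is that the special request headers start with a colon, so the separator is the first colon after the first character. Here is a small sketch of that, assuming the strings look like “:path:/index.html” with nothing between the separating colon and the value. The function name split_push_header is made up for this example.

```c
#include <assert.h>
#include <stddef.h>
#include <string.h>

/* Find the value part of a "name:value" header string.
   Pseudo-headers such as ":path" begin with a colon, so the search for the
   separating colon starts at the second character.
   On success, stores the length of the name in *name_len and returns a
   pointer to the value inside 'header'. Returns NULL if there is no
   separator. */
const char *split_push_header(const char *header, size_t *name_len)
{
  const char *sep;
  assert(header && name_len);
  if(!header[0])
    return NULL;
  sep = strchr(header + 1, ':');
  if(!sep)
    return NULL;
  *name_len = (size_t)(sep - header);
  return sep + 1;
}
```

A push callback could apply this to each string it gets from curl_pushheader_bynum() while looping from index 0 up to num_headers.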

Work in progress

As I mentioned above already, this is work in progress and I welcome any and all comments or suggestions on how this API can be improved or tweaked to even better fit your needs. Implementing features such as these usually turns out better when there are users trying them out before they are written in stone.

To try it out, build a libcurl from the http2-push branch:

https://github.com/bagder/curl/commits/http2-push

And while there are docs and an example in that branch already, you may opt to read the wiki version of the docs:

https://github.com/bagder/curl/wiki/HTTP-2-Server-Push

The best way to send your feedback on this is to post to the curl-library mailing list, but if you find obvious bugs or want to provide patches you can also opt to file issues or pull-requests on github.

picturing curl’s future

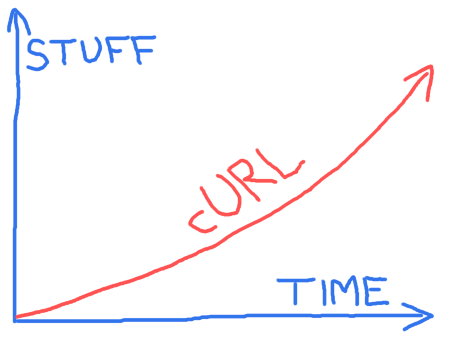

There will be more stuff over time in the cURL project. Exactly which stuff and how long it takes for everything, we don’t know. It depends largely on who works on what and how much time said persons can spend on implementing the stuff they work on…

I suspect we might be able to do things slightly faster over time, which is why the red arrow isn’t just a straight line.

I drew this little picture inspired by discussions with friends after a talk I did about curl and how development works in an open source project such as this. We know we will work on things that will improve the products but we don’t see exactly what very far in advance. I tweeted this picture a few days ago, and it turned out very popular.

2015 curl user poll analysis

My full 30 page document with all details and analyses of the curl user poll 2015 is now available. It shows details of all the questions, most of them with a comparison with last year’s survey. The write-ins are also full of good advice, wisdom and some signs of ignorance or unawareness.

I hope all curl hackers and others generally interested in the project can use my “report” to learn something about our users and our users’ view of the project and our products.

Let’s use this to guide us going forward.

status update: http2 multiplexed uploads

I wrote a previous update about my work on multiplexing in curl. This is a follow-up to describe the status as of today.

I’ve successfully used the http2-upload.c code to upload 600 parallel streams to the test server and they were all sent off fine and the responses received were stored fine. MAX_CONCURRENT_STREAMS on the server was set to 100.

This is using curl git master as of right now (thus scheduled for inclusion in the pending curl 7.43.0 release). I’m not celebrating just yet, but it is looking pretty good. I’ll continue testing.

Commit b0143a2a3 was crucial for this, as I realized we didn’t store and use the read callback in the easy handle but in the connection struct which is completely wrong when many easy handles are using the same connection! I don’t recall the exact reason why I put the data in that struct (I went back and read the commit messages etc) but I think this setup is correct conceptually and code-wise, so if this leads to some side-effects I think we need to just fix it.

Next up: more testing, and then taking on the concept of server push to make libcurl able to support it. It will certainly be a subject for future blog posts…

RFC 7540 is HTTP/2

HTTP/2 is the new protocol for the web, as I trust everyone reading my blog is fully aware by now. (If you’re not, read http2 explained.)

Today RFC 7540 was published, the final outcome of the years of work put into this by the tireless heroes in the HTTPbis working group of the IETF. Closely related to the main RFC is the one detailing HPACK, which is the header compression algorithm used by HTTP/2 and that is now known as RFC 7541.

The IETF part of this journey started pretty much with Mike Belshe’s posting of draft-mbelshe-httpbis-spdy-00 in February 2012. Google’s SPDY effort had been going on for a while when it was taken to the httpbis working group in the IETF, where a few different proposals on how to kick off the HTTP/2 work were debated.

The first “httpbis’ified” version of that document (draft-ietf-httpbis-http2-00) was then published on November 28 2012 and the standardization work began for real. HTTP/2 was of course discussed a lot on the mailing list since the start, at the IETF meetings but also in interim meetings around the world.

In Zurich, in January 2014 there was one that I only attended remotely. We had the design team meeting in London immediately after IETF89 (March 2014) in the Mozilla offices just next to Piccadilly Circus (where I took the photos that are shown in this posting). We had our final in-person meetup with the HTTP team at Google’s offices in NYC in June 2014 where we ironed out most of the remaining issues.

In between those two last meetings I published my first version of http2 explained, my attempt at a lengthy and very detailed description of HTTP/2, including describing problems with HTTP/1.1 and motivations for HTTP/2. I’ve since published eleven updates.

The last draft update of HTTP/2 that contained actual changes of the binary format was draft-14, published in July 2014. After that, the updates were in the language and clarifications on what to do when. There are some functional changes (added in -16 I believe), such as which sorts of frames are accepted when, that change what a state machine should do, but they don’t change how the protocol looks on the wire.

RFC 7540 was published on May 15th, 2015

I’ve truly enjoyed having had the chance to be a part of this. There are a bunch of good people who made this happen and while I am most certainly forgetting key persons, some of the peeps that have truly stood out are: Mark, Julian, Roberto, Roy, Will, Tatsuhiro, Patrick, Martin, Mike, Nicolas, Mike, Jeff, Hasan, Herve and Willy.

curl user poll 2015

Update: the poll is now closed. The responses can be found here.

Now is the time. If you use curl or libcurl from time to time, please consider helping us out with providing your feedback and opinions on a few things:

It’ll take you a couple of minutes and it’ll help us a lot when making decisions going forward.

The poll is hosted by Google and that short link above will take you to:

https://docs.google.com/forms/d/1uQNYfTmRwF9RX5-oq_HV4VyeT1j7cxXpuBIp8uy5nqQ/viewform

HTTP/2 in curl, status update

I’m right now working on adding proper multiplexing to libcurl’s HTTP/2 code. So far we’ve only done a single stream per connection and while that works fine and is HTTP/2, applications will still want more when switching to HTTP/2 as the multiplexing part is one of the key components and selling features of the new protocol version.

Pipelining means multiplexed

As a starting point, I’m using the “enable HTTP pipelining” switch to tell libcurl it should consider multiplexing. It makes libcurl work as before by default. If you use the multi interface and enable pipelining, libcurl will try to re-use established connections and just add streams over them rather than creating new connections. Yes, this means that A) you need to use the multi interface to get the full HTTP/2 stuff and B) the curl tool won’t be able to take advantage of it since it doesn’t use the multi interface! (An old outstanding idea is to move the tool to use the multi interface and this would be yet another reason why this could be a good idea.)

We still have some decisions to make about how we want libcurl to act by default, especially when we can expect applications to use both HTTP/1.1 and HTTP/2 at the same time. Since we don’t know if the server supports HTTP/2 until after a certain point in the negotiation, we need to decide what to do when we issue N transfers at once to the same server that might speak HTTP/2… Right now, we get the best HTTP/2 behavior by telling libcurl we only want one connection per host but that is probably not ideal for an application that might use a mix of HTTP/1.1 and HTTP/2 servers.

Downsides with abusing pipelining

There are some drawbacks with using that pipelining switch to allow multiplexing since users may very well want HTTP/2 multiplexing but not HTTP/1.1 pipelining since the latter is just riddled with interop problems.

Also, re-using the same options for limited connections to host names etc for both HTTP/1.1 and HTTP/2 may not at all be what real-world applications want or need.

One easy handle, one stream

libcurl API wise, each HTTP/2 stream is its own easy handle. It makes it simple and keeps the API paradigm very much in the same way it works for all the other protocols. It comes very naturally for the libcurl application author. If you set up three easy handles, all identifying a resource on the same server and you tell libcurl to use HTTP/2, it makes perfect sense that all these three transfers are made using a single connection.

Multiplexed data means that when reading from the socket, there is data arriving that belongs to other streams than just a single one. So we need to feed the received data into the different “data buckets” for the involved streams. It gives us a little internal challenge: we get easy handles with no socket activity to trigger a read, but there is data to take care of in the incoming buffer. I’ve solved this so far with a special trigger that says there is data to take care of, so that the handle makes a read anyway and that read then gets the data from the buffer.
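
To make the bucket idea a bit more concrete, here is a simplified model of it. This is not how libcurl’s internals actually look; the struct, the fixed bucket size and the function names are all invented for the illustration. Data read off the shared socket is stored in the bucket of the stream it belongs to and a flag is set, which plays the role of the special trigger.

```c
#include <assert.h>
#include <stddef.h>
#include <string.h>

#define BUCKET_SIZE 256

/* Hypothetical per-stream state; libcurl's real structs look different */
struct stream_bucket {
  int stream_id;          /* HTTP/2 stream identifier */
  char data[BUCKET_SIZE]; /* bytes received for this stream, not yet read */
  size_t len;             /* number of bytes stored in data */
  int read_pending;       /* set when data waits without socket activity */
};

/* Store a chunk read from the shared socket in the bucket of the stream it
   belongs to and flag that stream for a read. Returns 0 on success, -1 if
   the stream is unknown or the bucket is full. */
int bucket_feed(struct stream_bucket *b, size_t nstreams,
                int stream_id, const char *chunk, size_t chunk_len)
{
  size_t i;
  assert(b && chunk);
  for(i = 0; i < nstreams; i++) {
    if(b[i].stream_id == stream_id) {
      if(chunk_len > BUCKET_SIZE - b[i].len)
        return -1;
      memcpy(b[i].data + b[i].len, chunk, chunk_len);
      b[i].len += chunk_len;
      b[i].read_pending = 1;
      return 0;
    }
  }
  return -1;
}

/* Drain one stream's bucket into 'out' (at most out_size bytes) and clear
   its trigger once the bucket is empty. Returns the number of bytes copied. */
size_t bucket_read(struct stream_bucket *b, char *out, size_t out_size)
{
  size_t n;
  assert(b && out);
  n = b->len < out_size ? b->len : out_size;
  memcpy(out, b->data, n);
  memmove(b->data, b->data + n, b->len - n);
  b->len -= n;
  if(!b->len)
    b->read_pending = 0;
  return n;
}
```

The transfer loop would then treat any stream with read_pending set as readable, even though its socket shows no activity.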

Server push

HTTP/2 supports server push. That’s a stream that gets initiated from the server side without the client specifically asking for it. A resource the server deems likely that the client wants since it asked for a related resource, or similar. My idea is to support server push with the application setting up a transfer with an easy handle and associated options, but the URL would only identify the server so that it knows on which connection it would accept a push, and we will introduce a new option to libcurl that would tell it that this is an easy handle that should be used for the next server pushed stream on this connection.

Of course there are a few outstanding issues with this idea. Possibly we should allow an easy handle to get created when a new stream shows up so that we can better deal with a dynamic number of new streams being pushed.

It’d be great to hear from users who have ideas on how to use server push in a real-world application and how you’d imagine it could be used with libcurl.

Work in progress code

My work in progress code for this drive can be found in two places.

First, I do the libcurl multiplexing development in the separate http2-multiplex branch in the regular curl repo:

https://github.com/bagder/curl/tree/http2-multiplex

Then, I put all my test setup and test client work in a separate repository just in case you want to keep up and reproduce my testing and experiments:

https://github.com/bagder/curl-http2-dev

Feedback?

All comments, questions, praise or complaints you may have on this are best sent to the curl-library mailing list. If you are planning on writing an HTTP/2 capable application or otherwise have thoughts or ideas about the API for this, please join in and tell me what you think. It is much better to get the discussions going early and work on different design ideas now before anything is set in stone rather than waiting for us to ship something semi-stable, as the closer to an actual release we get, the harder it’ll be to change the API.

Not quite working yet

As I write this, I’m repeatedly doing 99 parallel HTTP/2 streams with no data corruption… But there’s a lot more to be done before I’ll call it a victory.

talking curl on the changelog

The changelog is the name of a weekly podcast on which the hosts discuss open source and stuff.

Last Friday I was invited to participate and I joined hosts Adam and Jerod for an hour long episode about curl. It all started as a response to my post on curl 17 years, so we really got into how things started out and how curl has developed through the years, how much time I’ve spent on it and if I could mention a really great moment in time that stood out over the years?

The day before, they released the little separate teaser we made about the little known --remote-name-all command line option that basically makes curl default to do -O on all given URLs.

The full length episode can be experienced in all its glory here: https://changelog.com/podcast/153