It has been 56 days since curl 7.66.0 was released. Here comes 7.67.0!

This might not be a release with any significant bells or whistles that will make us recall this date in the future when looking back, but it is still another steady step along the way and thanks to the new things introduced, we still bump the minor version number. Enjoy!

As always, download curl from curl.haxx.se.

If you need excellent commercial support for whatever you do with curl, contact us at wolfSSL.

Numbers

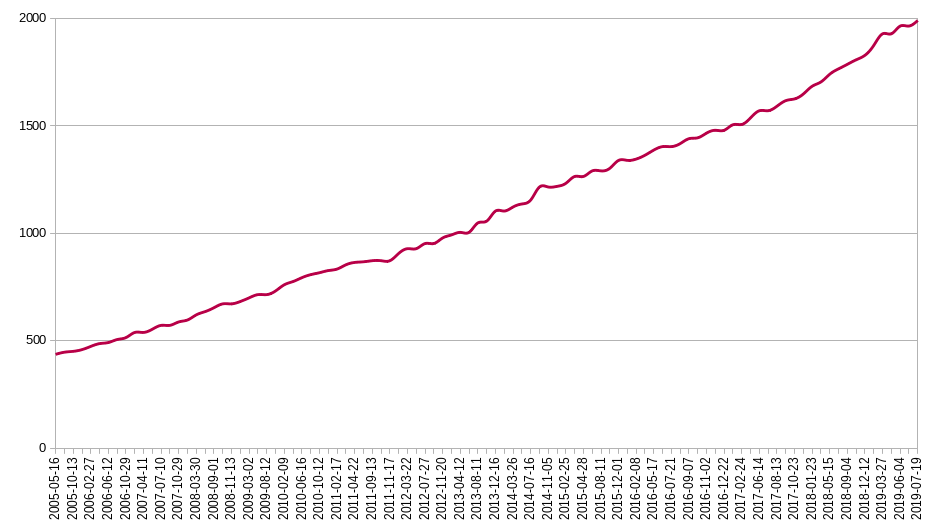

the 186th release

3 changes

56 days (total: 7,901)

125 bug fixes (total: 5,472)

212 commits (total: 24,931)

1 new public libcurl function (total: 81)

0 new curl_easy_setopt() options (total: 269)

1 new curl command line option (total: 226)

68 contributors, 42 new (total: 2,056)

42 authors, 26 new (total: 744)

0 security fixes (total: 92)

0 USD paid in Bug Bounties

The 3 changes

Disable progress meter

Since virtually forever you’ve been able to tell curl to “shut up” with -s. The long version of that is --silent. Silent makes the curl tool disable the progress meter and all other verbose output.

Starting now, you can use --no-progress-meter, which in a more granular way only disables the progress meter and lets the other verbose outputs remain.

CURLMOPT_MAX_CONCURRENT_STREAMS

When doing HTTP/2 using curl and multiple streams over a single connection, you can now also set the number of parallel streams you’d like to use, which will be communicated to the server. The idea is that this option should be usable for HTTP/3 as well going forward, but since HTTP/3 support is still in its early days, it isn’t yet.

CURLU_NO_AUTHORITY

This is a new flag that the URL parser API supports. It informs the parser that even if it doesn’t recognize the URL scheme it should still allow it to not have an authority part (like host name).

Bug-fixes

Here are some interesting bug-fixes done for this release. Check out the changelog for the full list.

Winbuild build error

The winbuild setup to build with MSVC with nmake shipped in 7.66.0 with a flaw that made it fail. We had added the vssh directory but not adjusted these build scripts for that. The fix was of course very simple.

We have since added several winbuild builds to the CI to make sure we catch these kinds of mistakes earlier and better in the future.

FTP: optimized CWD handling

At least two landed bug-fixes make curl avoid issuing superfluous CWD commands (FTP lingo for “cd” or change directory) thereby reducing latency.

HTTP/3

Several fixes improved HTTP/3 handling. It builds better on Windows, the ngtcp2 backend now also behaves correctly on macOS, and the build instructions are clearer.

Mimics socketpair on Windows

Thanks to the new socketpair look-alike function, libcurl now provides a socket for the application to wait for even when doing name resolves in the dedicated resolver thread. This lets the Windows code catch up with the similar change that landed in 7.66.0, and makes it easier for applications to behave correctly during the short time gaps when libcurl resolves a host name and nothing else is happening.

curl with lots of URLs

With the introduction of parallel transfers in 7.66.0, we changed how curl allocated handles and set up transfers ahead of time. This made command lines that for example would use [1-1000000] ranges create a million CURL handles and thus use a lot of memory.

It did in fact break a few existing use cases where people did very large ranges with curl. Starting now, curl will just create enough curl handles ahead of time to allow the maximum amount of parallelism requested and users should yet again be able to specify ranges with many million iterations.

curl -d@ was slow

It was discovered that if you ask curl to post data with -d @filename, that operation was unnecessarily slow for large files. It has now been sped up significantly.

DoH fixes

Several corrections were made after some initial fuzzing of the DoH code: a benign buffer overflow, a memory leak and more.

HTTP/2 fixes

We relaxed the :authority push promise checks, fixed two cases where libcurl could “forget” a stream after it had delivered all data, and fixed dup’ed HTTP/2 handles that could issue dummy PRIORITY frames!

connect with ETIMEDOUT now makes CURLE_OPERATION_TIMEDOUT

When libcurl’s connect attempt fails and errno says ETIMEDOUT, it means that the underlying TCP connect attempt timed out. This will now be reflected back in the libcurl API as CURLE_OPERATION_TIMEDOUT instead of the previously used CURLE_COULDNT_CONNECT.

One of the use cases for this is curl’s --retry option which now considers this situation to be a timeout and thus will consider it fine to retry…

Parsing URL with fragment and question mark

There was a regression in the URL parser that made it mistreat URLs without a query part but with a question mark in the fragment.