When you use curl to communicate with an HTTPS site (or any other protocol that uses TLS), it will by default verify that the server’s certificate is signed by a trusted Certificate Authority (CA). It does this by checking the CA bundle it was built to use, or instructed to use with the --cacert command line option.

Sometimes you end up in a situation where you don’t have the necessary CA cert in your bundle. It could then look something like this:

$ curl https://example.com/

curl: (60) SSL certificate problem: self signed certificate

More details here: https://curl.se/docs/sslcerts.html

Do not disable!

A first gut reaction could be to disable the certificate check. Don’t do that. The disabled check tends to end up in production or get copied by someone else, and then the insecure use spreads to other places and eventually causes a security problem.

Get the CA cert

I’ll show you four different ways to fix this.

1. Update your OS CA store

Operating systems come with a CA bundle of their own and on most of them, curl is set up to use the system CA store. A system update often makes curl work again.

This of course doesn’t help you if you have a self-signed certificate or otherwise use a CA that your operating system doesn’t have in its trust store.

2. Get an updated CA bundle from us

curl can be told to use a separate stand-alone file as CA store, and conveniently enough curl provides an updated one on the curl web site. That one is automatically converted from the one Mozilla provides for Firefox, updated daily. It also provides a little backlog so the ten most recent CA stores are available.

If you agree to trust the same CAs that Firefox trusts, this is a good choice.
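As a rough sketch of this option, the shell functions below download the bundle and do a quick sanity check on it. The function names fetch_ca_bundle and count_certs and the default file name are made up for this illustration. The URL is where the curl web site publishes its current bundle at the time of writing.

```shell
# Hypothetical helper: download the Mozilla-derived CA bundle from the
# curl web site into the file given as $1 (default: cacert.pem).
fetch_ca_bundle() {
  dest=${1:-cacert.pem}
  curl --fail --silent --show-error --output "$dest" https://curl.se/ca/cacert.pem
}

# Hypothetical helper: count the certificates in a PEM bundle, as a
# quick check that the download produced something that looks right.
count_certs() {
  grep -c 'BEGIN CERTIFICATE' "$1"
}
```

You could then run something like `fetch_ca_bundle cacert.pem && curl --cacert cacert.pem https://example.com/`.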

3. Get it with openssl

Now we’re approaching the less good options. It’s way better to get the CA certificates via other means than from the actual site you’re trying to connect to!

This method uses the openssl command line tool. The servername option used below is there to set the SNI field, which often is necessary to tell the server which actual site’s certificate you want.

$ echo quit | openssl s_client -showcerts -servername server -connect server:443 > cacert.pem

A real world example, getting the certs for daniel.haxx.se and then getting the main page with curl using them:

$ echo quit | openssl s_client -showcerts -servername daniel.haxx.se -connect daniel.haxx.se:443 > cacert.pem

$ curl --cacert cacert.pem https://daniel.haxx.se
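The s_client output saved above contains more than certificates: connection details and session information end up in the file too. If you prefer a file with only the PEM blocks, a filter along these lines could work. This is a minimal sketch, and the function names extract_pem_certs and fetch_server_certs are invented for this example.

```shell
# Keep only the PEM certificate blocks from whatever arrives on stdin.
extract_pem_certs() {
  sed -n '/-----BEGIN CERTIFICATE-----/,/-----END CERTIFICATE-----/p'
}

# Hypothetical wrapper: ask the server ($1) for its certificates on
# port 443 and store only the PEM blocks in the output file ($2).
fetch_server_certs() {
  host=$1
  out=$2
  echo quit |
    openssl s_client -showcerts -servername "$host" -connect "$host:443" 2>/dev/null |
    extract_pem_certs > "$out"
}
```

Used like `fetch_server_certs daniel.haxx.se cacert.pem`, this would give the same certificates as the command above, minus the surrounding text.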

4. Get it with Firefox

Suppose you’re already browsing the site fine with Firefox. Then you can inspect the certificate using the browser and export it for use with curl.

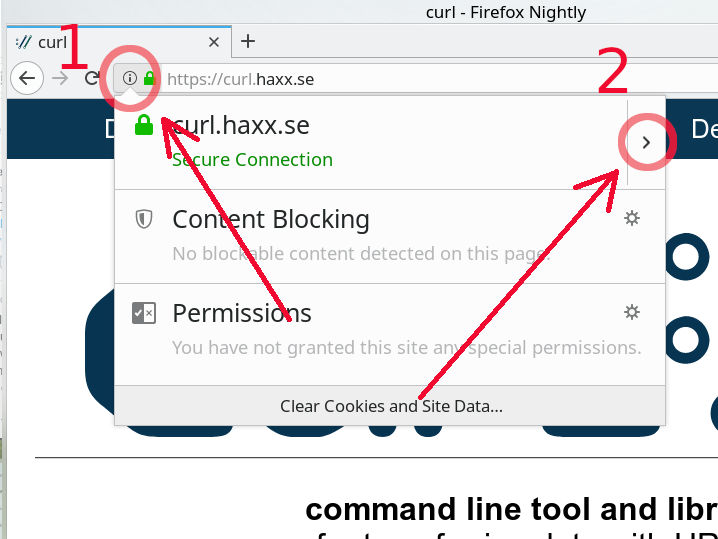

Step 1 – click the i in the circle on the left of the URL in the address bar of your browser.

Step 2 – click the right arrow on the right side in the drop-down window that appeared.

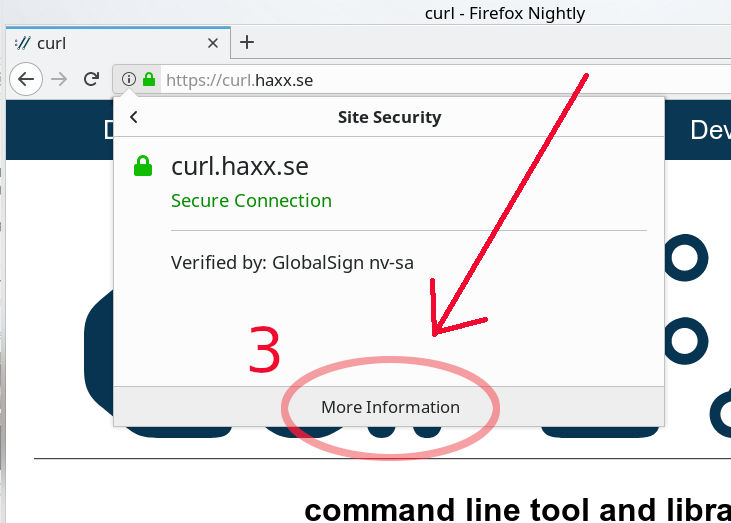

Step 3 – new contents appeared, now click the “More Information” at the bottom, which pops up a new separate window…

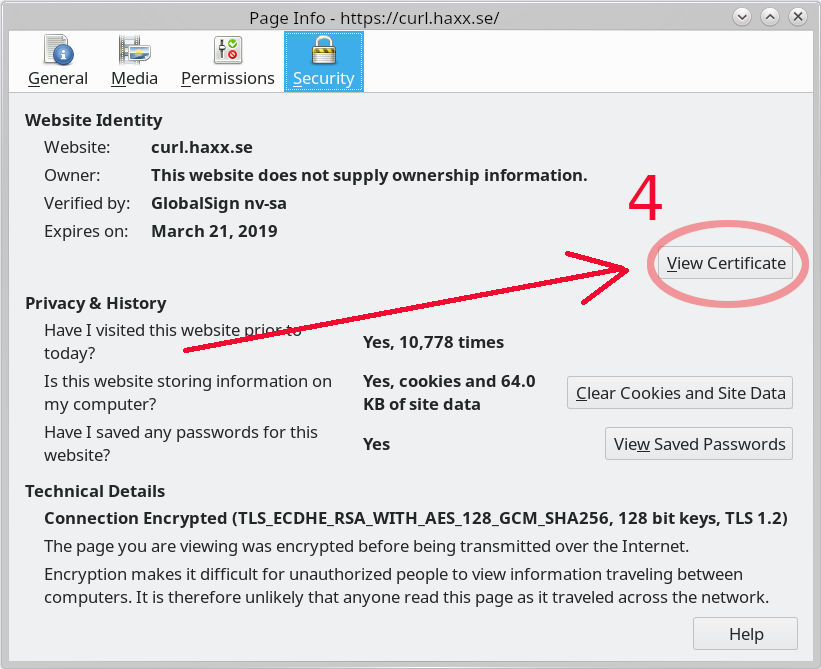

Step 4 – Here you get security information from Firefox about the site you’re visiting. Click the “View Certificate” button on the right. It pops up yet another separate window.

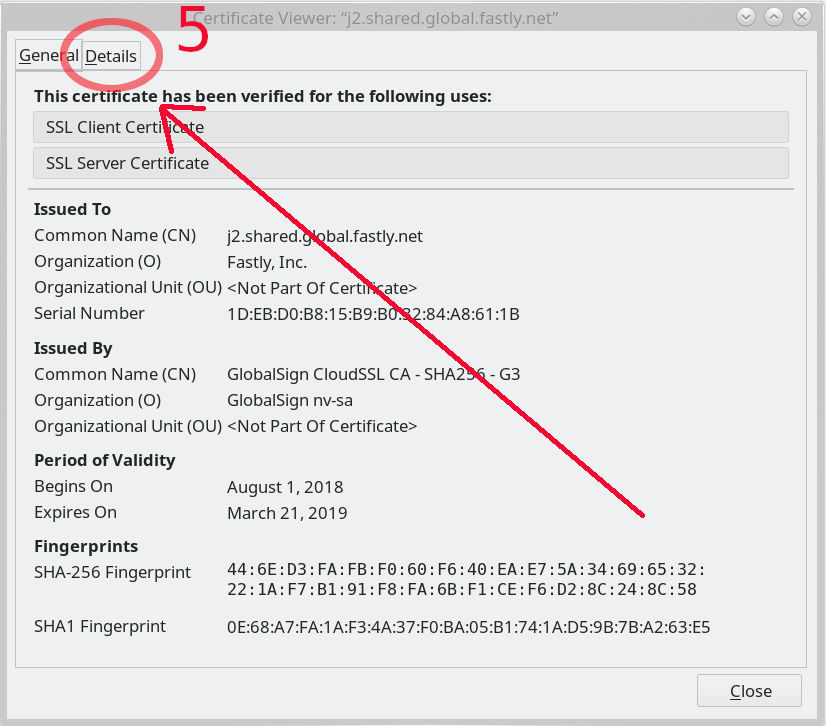

Step 5 – in this window full of certificate information, select the “Details” tab…

Step 6 – when switched to the details tab, the certificate hierarchy is shown at the top and we select the top choice there. This list will of course look different for different sites.

Step 7 – now click the “Export” tab at the bottom left and save the file (that uses a .crt extension) somewhere suitable.

If you for example saved the exported certificate in /tmp, you could then use curl with that saved certificate something like this:

$ curl --cacert /tmp/GlobalSignRootCA-R3.crt https://curl.se

But I’m not using openssl!

This description assumes you’re using a curl that uses a CA bundle in the PEM format, which not all do. In particular, the ones built with NSS, Schannel (native Windows) or Secure Transport (native macOS and iOS) don’t.

If you use one of those, you then need an additional command to import the PEM formatted cert into your particular CA store.

A CA store is many PEM files concatenated

Just concatenate many different PEM files into a single file to create a CA store with multiple certificates.
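A small sketch of such a concatenation follows. The function name build_ca_store and the file names in the usage note are placeholders, not anything curl provides.

```shell
# Hypothetical helper: build_ca_store OUTPUT INPUT...
# Concatenates the given PEM files into a single CA store file.
build_ca_store() {
  out=$1
  shift
  for pem in "$@"; do
    cat "$pem"
    echo   # guard against an input file that lacks a trailing newline
  done > "$out"
}
```

For example, `build_ca_store my-ca-store.pem corporate-root.pem cacert.pem` followed by `curl --cacert my-ca-store.pem https://example.com/`.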
