I talked with Ed Hoover on the between screens podcast a while ago and that episode has now been published. It is a dense 12 minutes as the good Ed edited it massively.

Another wget reference was Bourne

Back in 2013, it came to light that Wget was used to copy the files Private Manning was convicted of having leaked. Around that time, EFF made and distributed stickers saying wget is not a crime.

Weirdly enough, it was hard to find a high resolution version of that image today but I’m showing you a version of it on the right side here.

In the 2016 movie Jason Bourne, Swedish actress Alicia Vikander is seen working on her laptop at around 1:16:30 into the movie and there’s a single visible sticker on that laptop. Yeps, it is for sure the same EFF sticker. There’s even a very brief glimpse of the top of the red EFF dot below the “crime” word.

Also recall the wget occurrence in The Social Network.

curl up in Nuremberg!

I’m very happy to announce that the curl project is about to run our first ever curl meeting and developers conference.

March 18-19, Nuremberg Germany

Everyone interested in curl, libcurl and related matters is invited to participate. We only ask of you to register and pay the small fee. The fee will be used for food and more at the event.

You’ll find the full and detailed description of the event and the specific location in the curl wiki.

The agenda for the weekend is purposely kept loose to allow for flexibility and unconference-style adding things and topics while there. You will thus have the chance to present what you like and affect what others present. Do tell us what you’d like to talk about or hear others talk about! The sign-up for the event isn’t open yet, as we first need to work out some more details.

We have a dedicated mailing list for discussing the meeting, called curl-meet, so please consider yourself invited to join in there as well!

Thanks a lot to SUSE for hosting!

Feel free to help us make a cool logo for the event!

(The 19th birthday of curl is suitably enough the day after, on March 20.)

a single byte write opened a root execution exploit

Thursday, September 22nd 2016. An email popped up in my inbox.

Subject: ares_create_query OOB write

As one of the maintainers of the c-ares project I’m receiving mails for suspected security problems in c-ares and this was such a one. In this case, the email with said subject came from an individual who had reported a ChromeOS exploit to Google.

It turned out that this particular c-ares flaw was one important step in a sequence of necessary procedures that when followed could let the user execute code on ChromeOS from JavaScript – as the root user. I suspect that is pretty much the worst possible exploit of ChromeOS that can be done. I presume the reporter will get a fair amount of bug bounty reward for this. (Update: he got 100,000 USD for it.)

The setup and explanation on how this was accomplished is very complicated and I am deeply impressed by how this was figured out, tracked down and eventually exploited in a repeatable fashion. But bear with me. Here comes a very simplified explanation on how a single byte buffer overwrite with a fixed value could end up aiding running exploit code as root.

The main Google bug for this problem is still not open since they still have pending mitigations to perform, but since the c-ares issue has been fixed I’ve been told that it is fine to talk about this publicly.

c-ares writes a 1 outside its buffer

c-ares has a function called ares_create_query. It was added in 1.10 (released in May 2013) as an updated version of the older function ares_mkquery. This detail is mostly interesting because Google uses an older version than 1.10 of c-ares so in their case the flaw is in the old function. These are the two functions that contain the problem we’re discussing today. It used to be in the ares_mkquery function but was moved over to ares_create_query a few years ago (and the new function got an additional argument). The code was mostly unchanged in the move so the bug was just carried over. This bug was actually already present in the original ares project that I forked and created c-ares from, back in October 2003. It just took this long for someone to figure it out and report it!

I won’t bore you with exactly what these functions do, but we can stick to the simple fact that they take a name string as input, allocate a memory area for the outgoing packet with DNS protocol data and return that newly allocated memory area and its length.

Due to a logic mistake in the function, you could trick it into allocating a too-short buffer by passing in a string with an escaped trailing dot. An input string like “one.two.three\.” would then cause the allocated memory area to be one byte too small and the last byte would be written outside of the allocated memory area. A buffer overflow if you want. The single byte written outside of the memory area is most commonly a 1 due to how the DNS protocol data is laid out in that packet.

This flaw was given the name CVE-2016-5180 and was fixed and announced to the world at the end of September 2016 when c-ares 1.12.0 shipped. The actual commit that fixed it is here.

What to do with a 1?

Ok, so a function can be made to write a single byte with the value 1 outside of its allocated buffer. How do you turn that to your advantage?

The Red Hat security team deemed this problem to be of “Moderate security impact” so they clearly do not think you can do a lot of harm with it. But behold, with the right amount of imagination and luck you certainly can!

Back to ChromeOS we go.

First, we need to know that ChromeOS runs an internal HTTP proxy which is very liberal in what it accepts – this is the software that uses c-ares. This proxy is a key component that the attacker needed to tickle really badly. So by figuring out how you can send the correctly crafted request to the proxy, it would send the right string to c-ares and write a 1 outside its heap buffer.

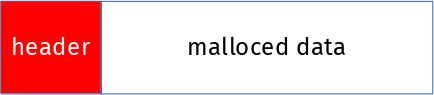

ChromeOS uses dlmalloc for managing the heap memory. Each time the program allocates memory, it will get a pointer back to the requested memory region, and dlmalloc will put a small header of its own just before that memory region for its own purpose. If you ask for N bytes with malloc, dlmalloc will use ( header size + N ) and return the pointer to the N bytes the application asked for. Like this:

With a series of cleverly crafted HTTP requests of various sizes to the proxy, the attacker managed to create a hole of freed memory where he could then reliably make the c-ares allocated memory end up. He knew exactly how the ChromeOS dlmalloc system and its best-fit allocator work, how big the c-ares malloc would be and thus where the overwritten 1 would end up. When the byte 1 is written after the memory, it is written into the header of the next memory chunk handled by dlmalloc:

The specific byte of that following dlmalloc header that it writes to, is used for flags and the lowest bits of size of that allocated chunk of memory.

Writing 1 to that byte clears 2 flags, sets one flag and clears the lowest bits of the chunk size. The important flag it sets is called prev_inuse and is used by dlmalloc to tell if it can merge adjacent areas on free. (So, if the value 1 simply had been a 2 instead, this flaw could not have been exploited this way!)

When the c-ares buffer that had overflowed is then freed again, dlmalloc gets fooled into consolidating that buffer with the subsequent one in memory (since it had toggled that bit) and thus the larger piece of assumed-to-be-free memory is partly still in use. Open for manipulations!

Using that memory buffer mess

This freed memory area whose end part is actually still being used opened up the play-field for more “fun”. With another creative HTTP request, that memory block would be allocated and used to store new data into.

The attacker managed to insert the right data in that further end of the data block, the one that was still used by another part of the program, mostly since the proxy pretty much allowed anything to get crammed into the request. The attacker managed to put his own code to execute in there and after a few more steps he ran whatever he wanted as root. Well, the user would have to get tricked into running a particular JavaScript but still…

I cannot even imagine how long it must have taken to make this exploit and how much work and sweat was spent. The report I read on this was 37 very detailed pages. And it was one of the best things I’ve read in a long while! When this goes public in the future, I hope at least parts of that description will become available for you as well.

A lesson to take away from this?

No matter how limited or harmless a flaw may appear at first glance, it can serve a malicious purpose and serve as one little step in a long chain of events to attack a system. And there are skilled people out there, ready to figure out all the necessary steps.

Update: A detailed write-up about this flaw (pretty much the report I refer to above) by the researcher who found it was posted on Google’s Project Zero blog on December 14:

Chrome OS exploit: one byte overflow and symlinks.

poll on mac 10.12 is broken

When Mac OS X first launched they did so without an existing poll function. They later added poll() in Mac OS X 10.3, but we quickly discovered that it was broken (it returned a non-zero value when asked to wait for nothing) so in the curl project we added a check in configure for that and subsequently avoided using poll() in all OS X versions up to and including Mac OS 10.8 (Darwin 12). The code would instead switch to the alternative solution based on select() for these platforms.

With the release of Mac OS X 10.9 “Mavericks” in October 2013, Apple had fixed their poll() implementation and we’ve built libcurl to use it since with no issues at all. The configure script picks the correct underlying function to use.

Enter macOS 10.12 (yeah, it’s not called OS X anymore) “Sierra”, released in September 2016. Quickly we discovered that poll() once again did not act like it should and we are back to disabling the use of it in preference to the backup solution using select().

The new error looks similar to the old problem: when there’s nothing to wait for and we ask poll() to wait N milliseconds, the 10.12 version of poll() returns immediately without waiting. Causing busy-loops. The problem has been reported to Apple and its Radar number is 28372390. (There has been no news from them on how they plan to act on this.)

poll() is defined by POSIX and The Single Unix Specification, which specifically says:

If none of the defined events have occurred on any selected file descriptor, poll() waits at least timeout milliseconds for an event to occur on any of the selected file descriptors.

We pushed a configure check for this in curl, to be part of the upcoming 7.51.0 release. I’ll also show you a small snippet you can use stand-alone below.

Apple is hardly alone in the broken-poll department. Remember how Windows’ WSApoll is broken?

Here’s a little code snippet that can detect the 10.12 breakage:

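    #include <poll.h>
    #include <stdio.h>
    #include <sys/time.h>

    int main(void)
    {
      struct timeval before, after;
      int rc;
      long us;

      gettimeofday(&before, NULL);
      rc = poll(NULL, 0, 500); /* nothing to wait for: should take 500 ms */
      gettimeofday(&after, NULL);
      (void)rc; /* only the elapsed time matters for this check */

      /* elapsed time in microseconds */
      us = (after.tv_sec - before.tv_sec) * 1000000 +
        (after.tv_usec - before.tv_usec);

      /* allow some slack below the 500 ms that were asked for */
      if(us < 400000) {
        puts("poll() is broken");
        return 1;
      }
      else {
        puts("poll() works");
      }
      return 0;
    }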

Follow-up, January 2017

This poll bug has been confirmed fixed in the macOS 10.12.2 update (released on December 13, 2016), but I’ve found no official mention or statement about this fact.

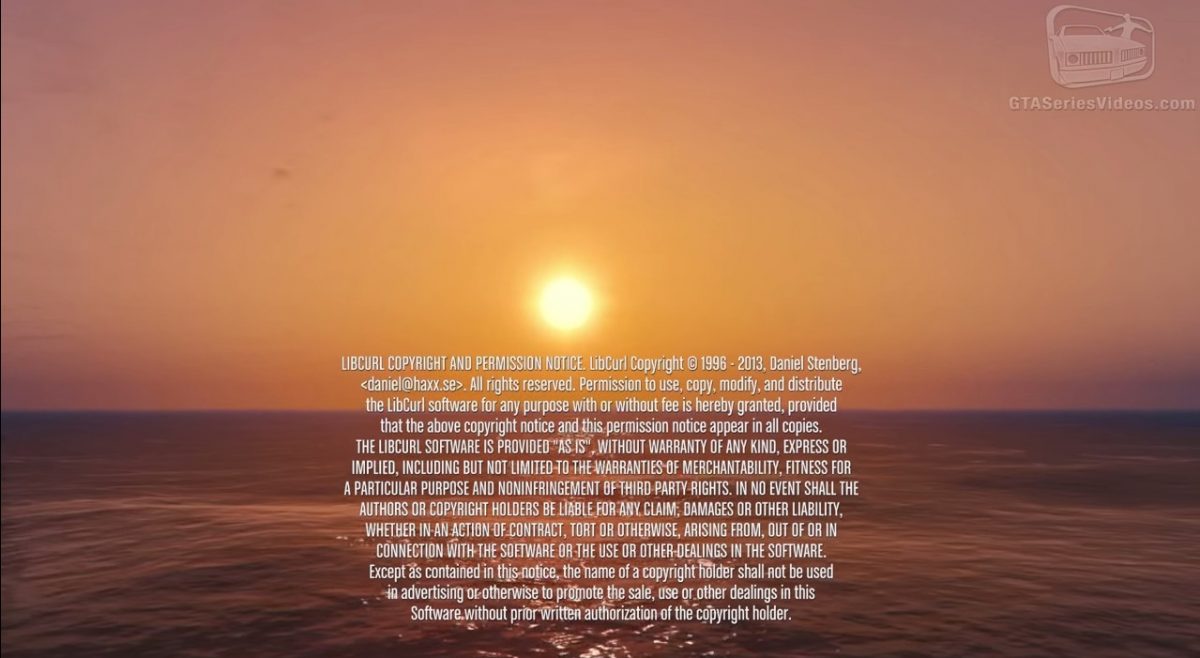

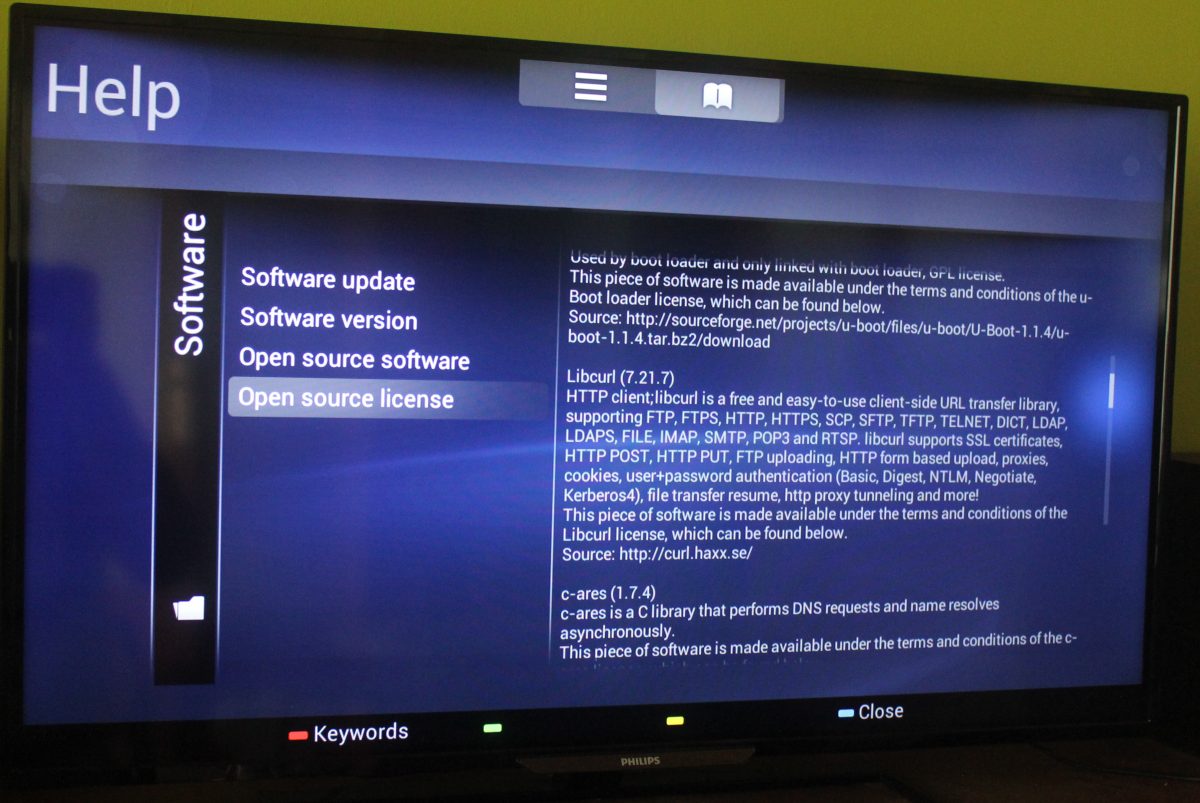

screenshotted curl credits

If you have more or better screenshots, please share!

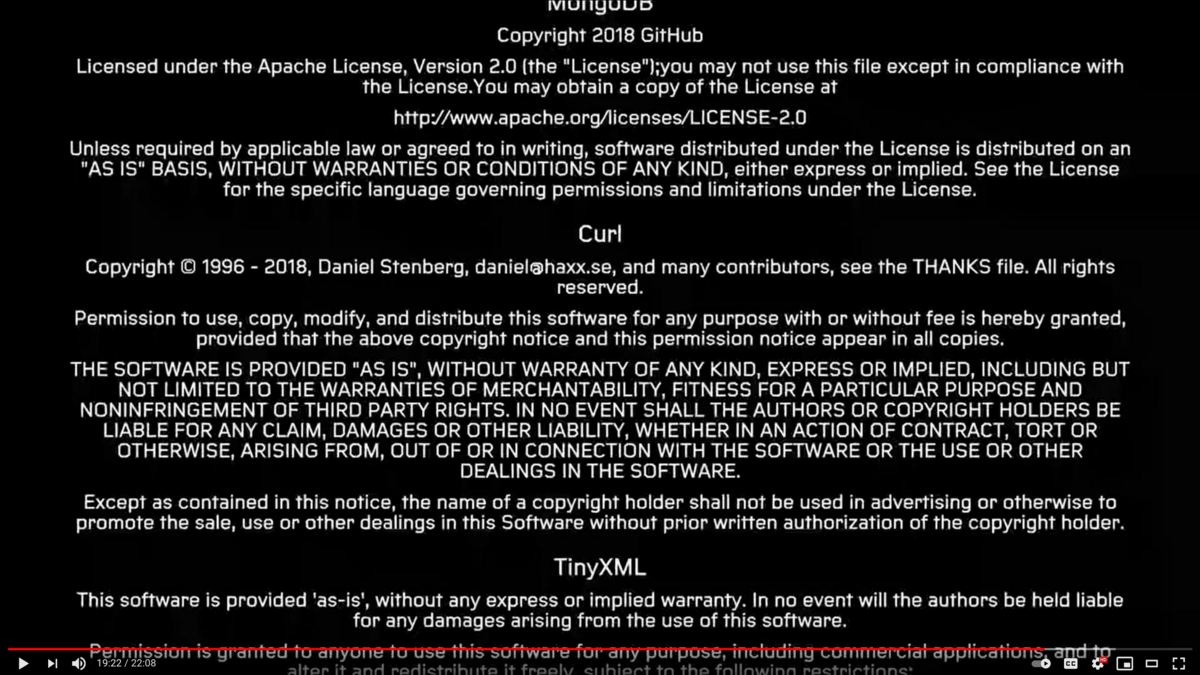

This shot is taken from the ending sequence of the PC version of the game Grand Theft Auto V. 44 minutes in! See the youtube version.

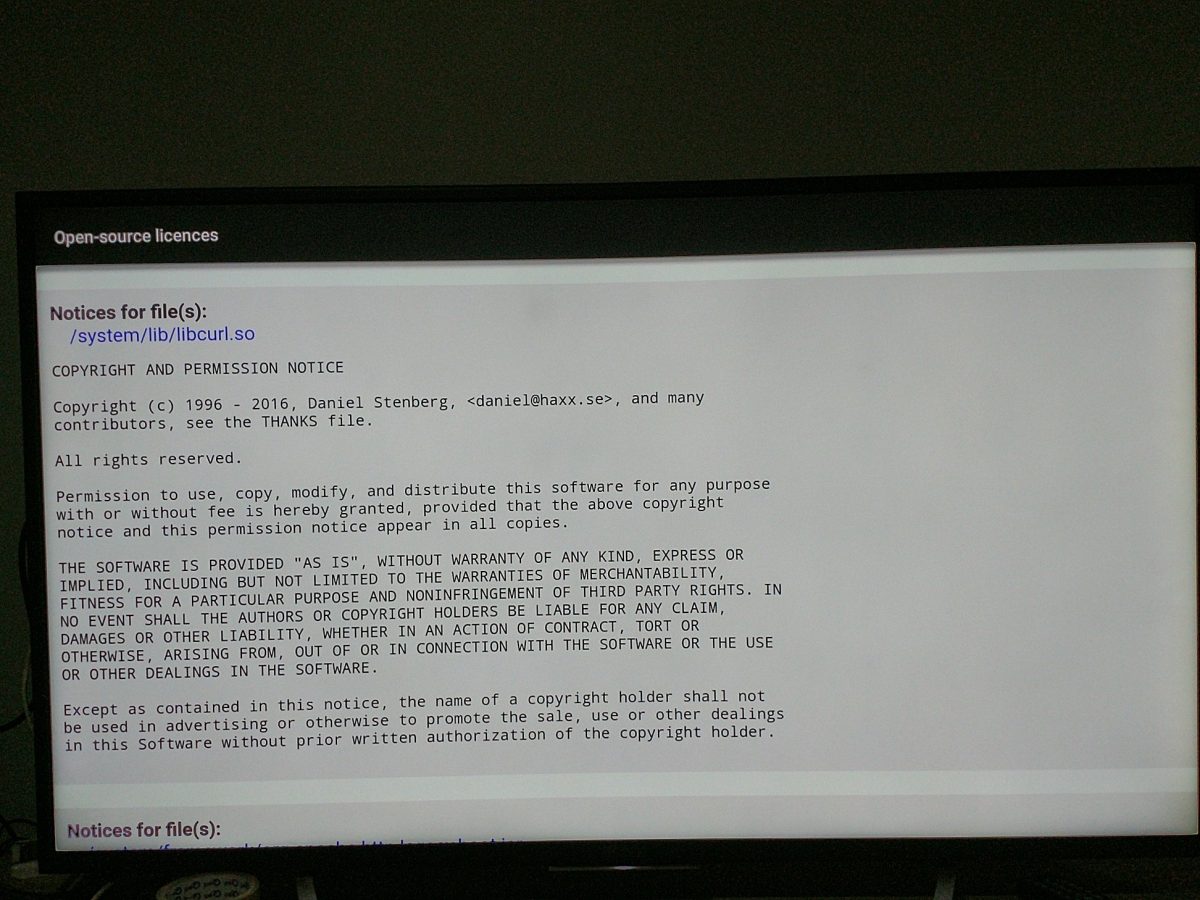

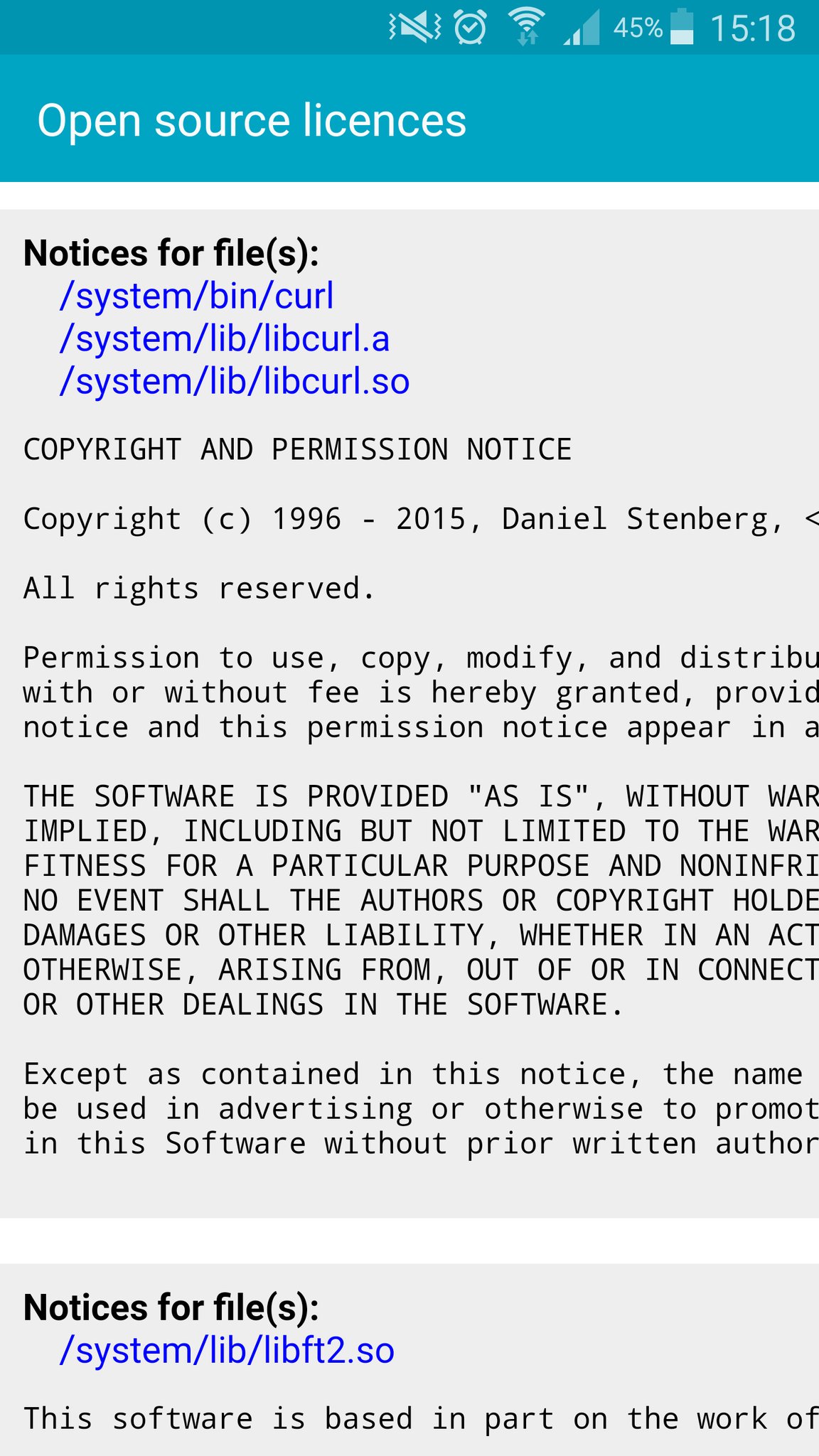

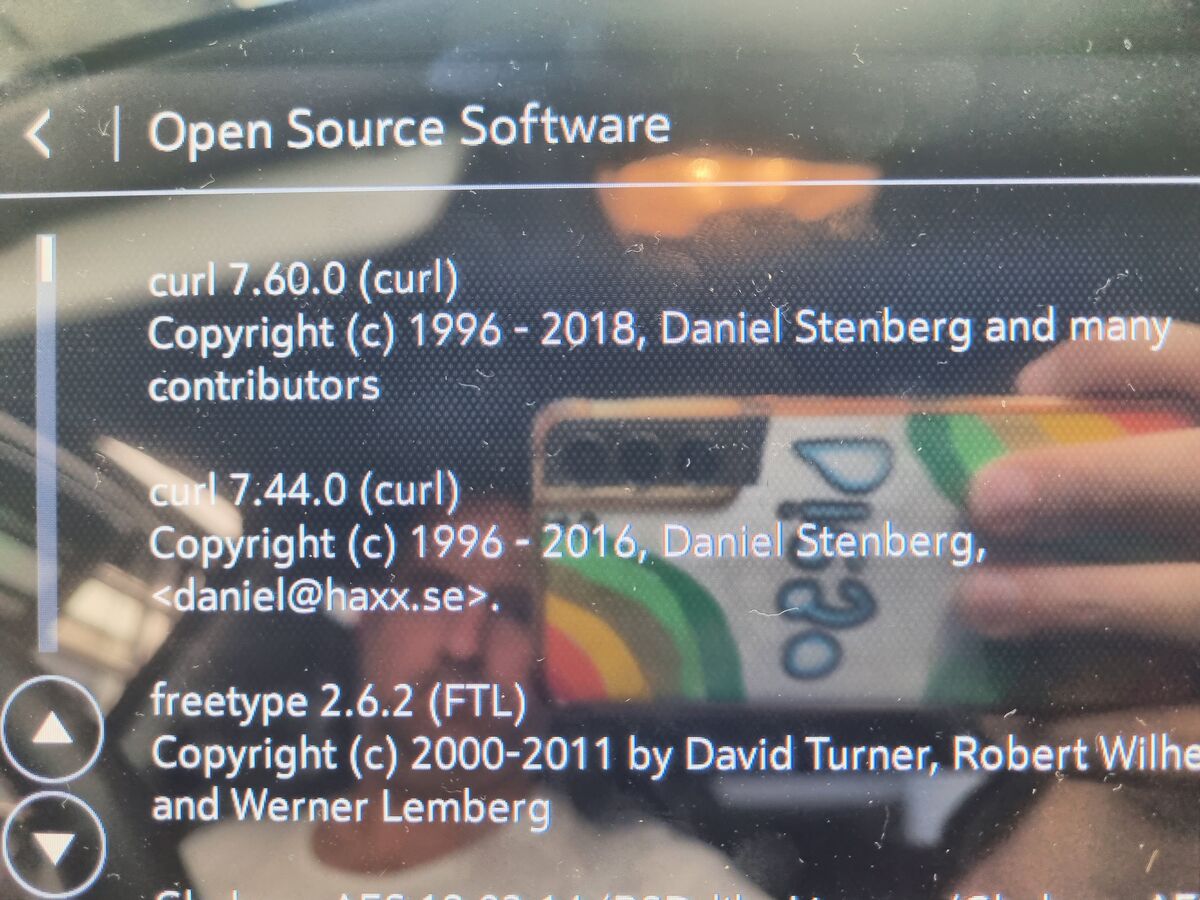

Sky HD is a satellite TV box.

This is a Philips TV. The added use of c-ares I consider a bonus!

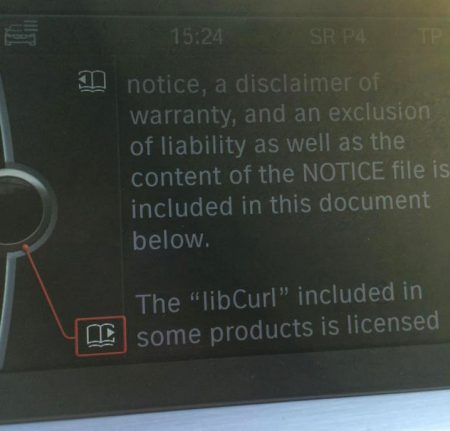

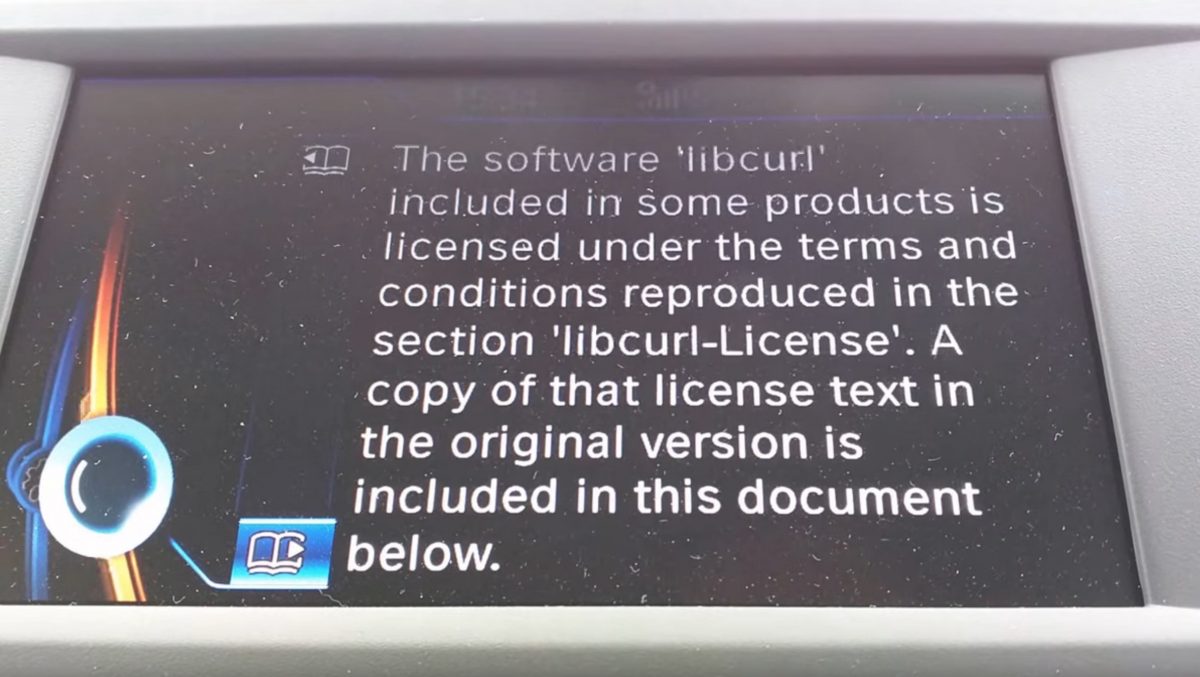

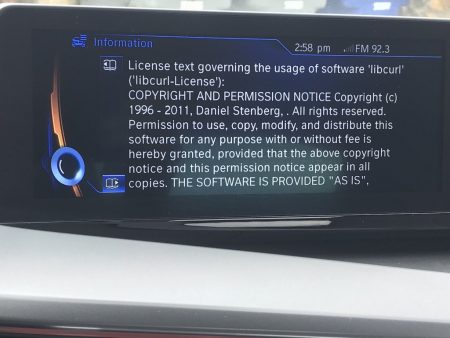

The infotainment display of a BMW car.

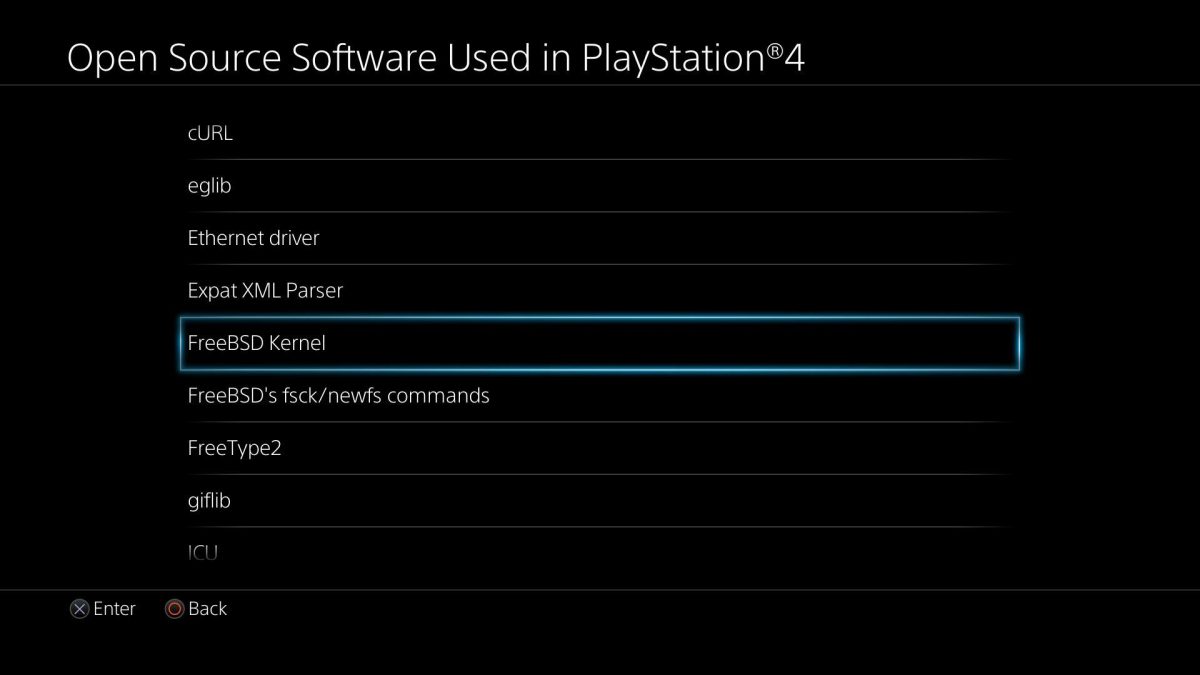

Playstation 4 lists open source products it uses.

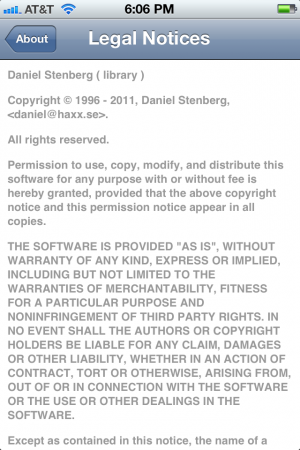

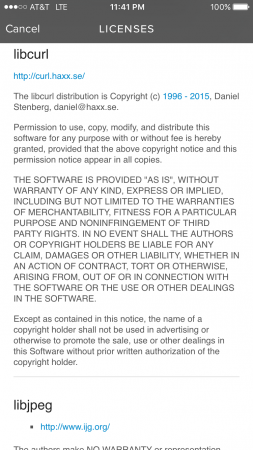

This is a screenshot from an iPhone open source license view. The iOS 10 screen, however, looks like this:

curl in iOS 10 with an older year span than in the much older screenshot?

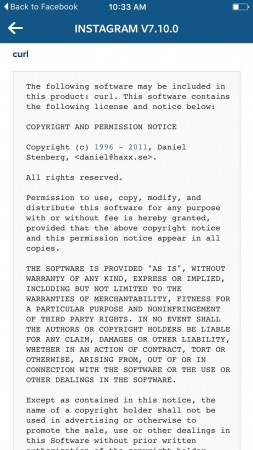

Instagram on an iPhone.

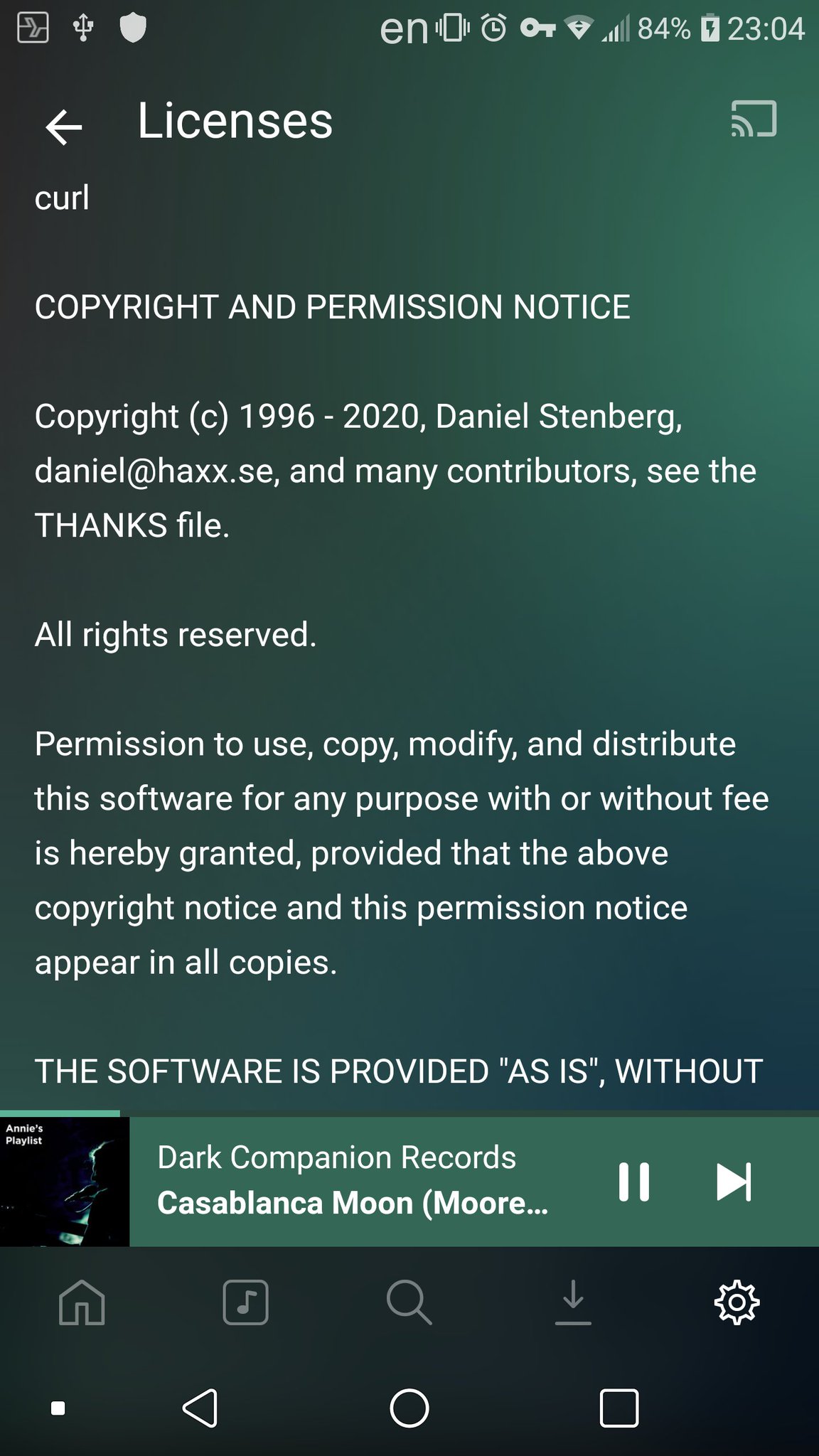

Spotify on an iPhone.

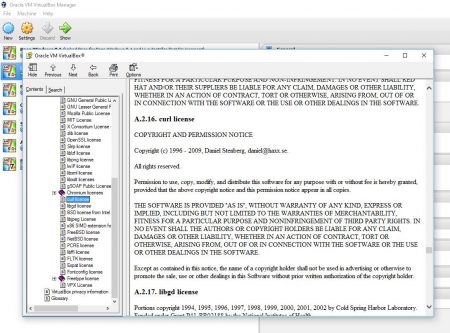

Virtualbox (thanks to Anders Nilsson)

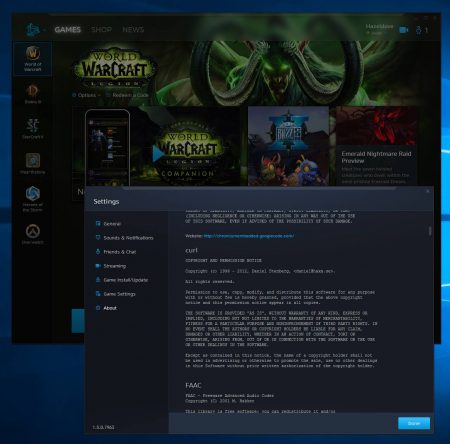

Battle.net (thanks Anders Nilsson)

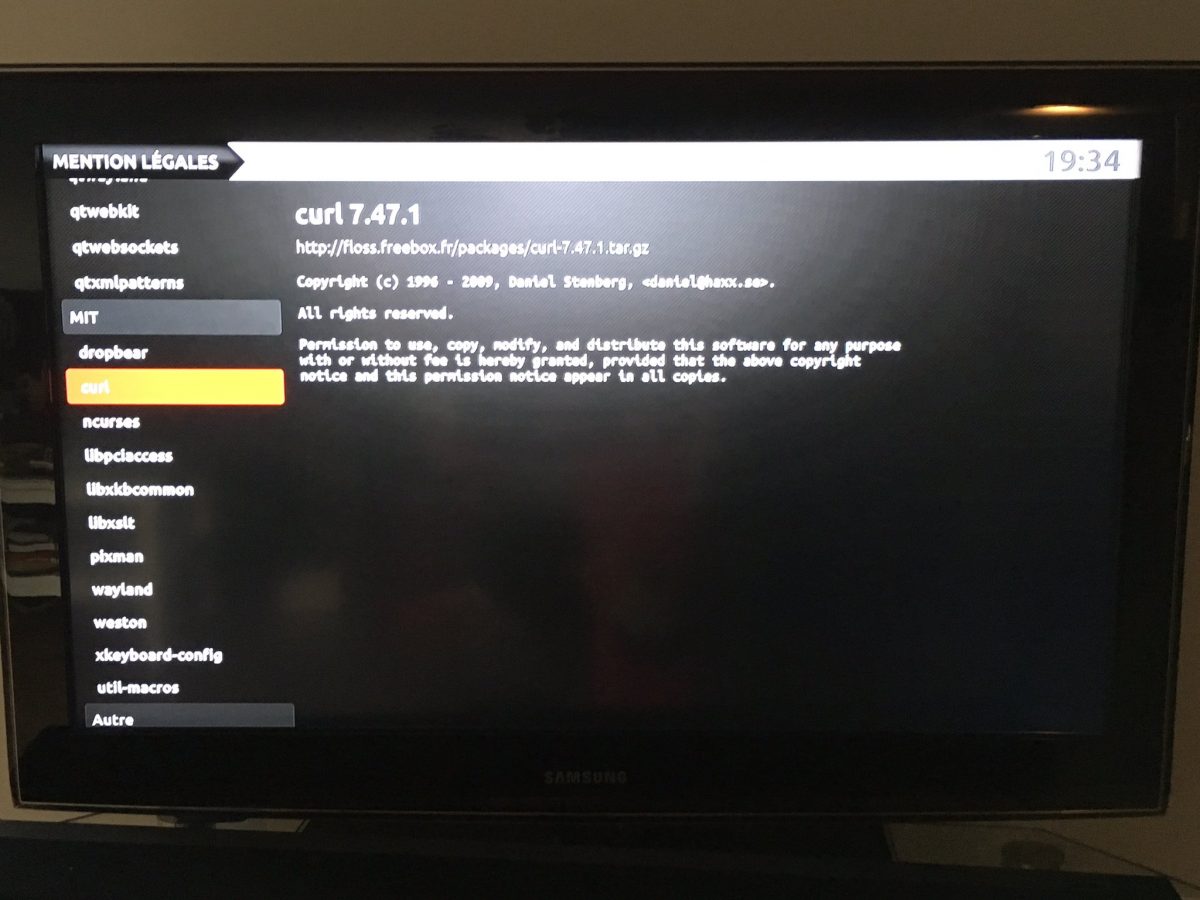

Freebox (thanks Alexis La Goutte)

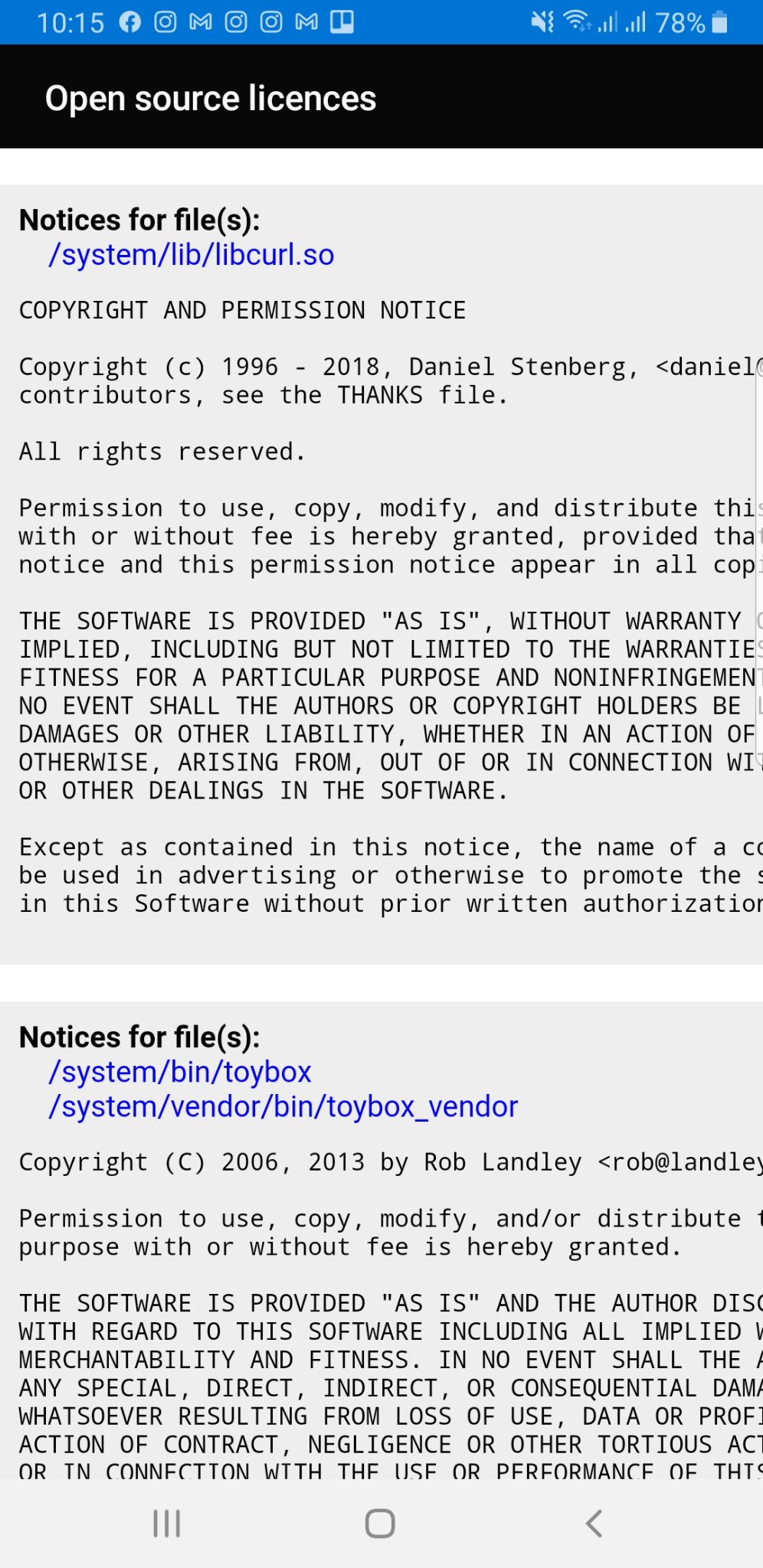

The Youtube app on Android. (Thanks Ray Satiro)

The Youtube app on iOS (Thanks Anthony Bryan)

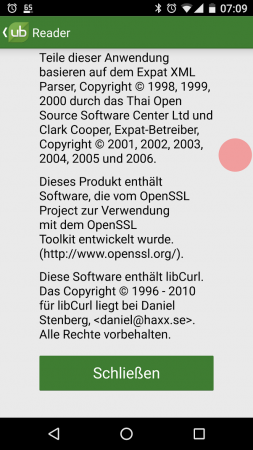

UBReader is an ebook reader app on Android.

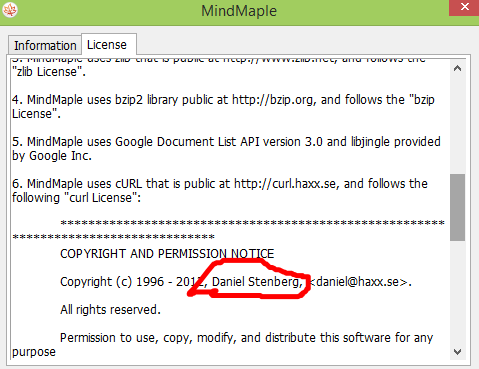

MindMaple is using curl (Thanks to Peter Buyze)

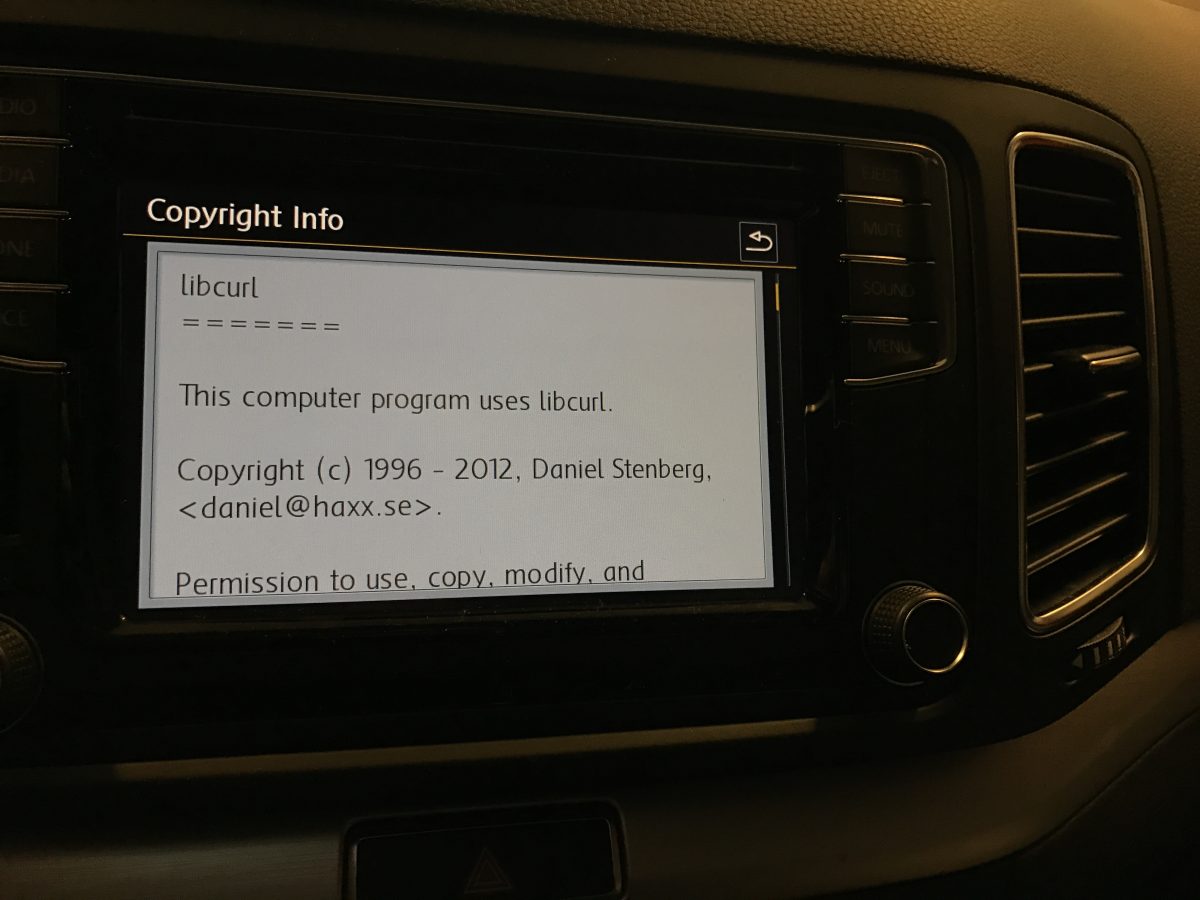

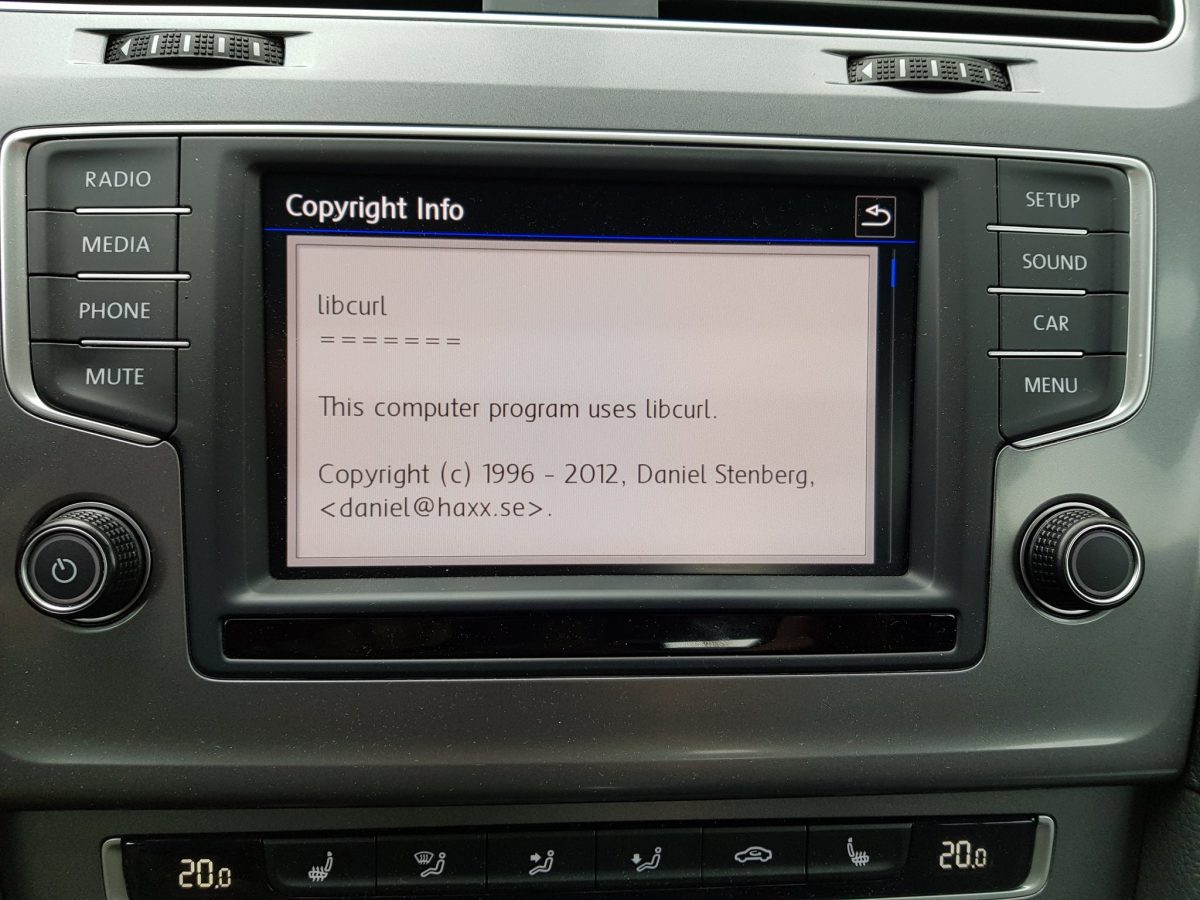

license screen from a VW Sharan car (Thanks to Jonas Lejon)

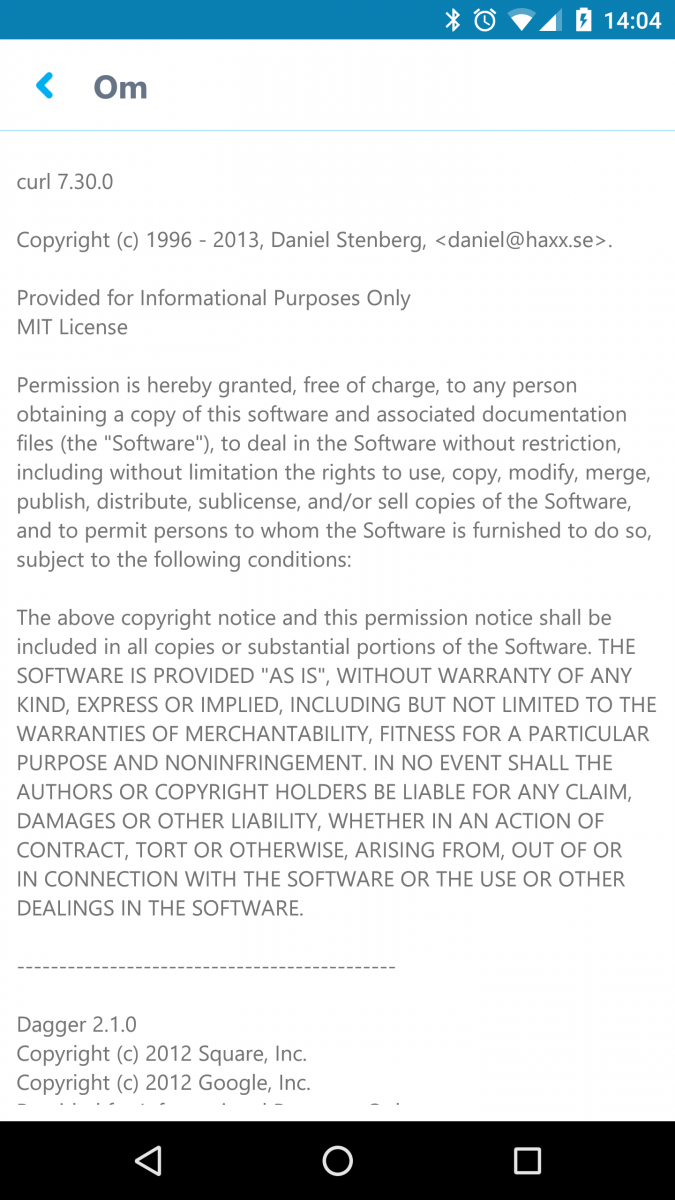

Skype on Android

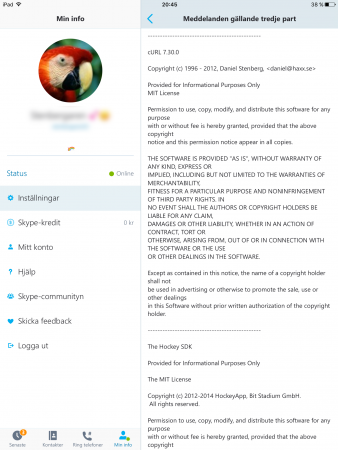

Skype on an iPad

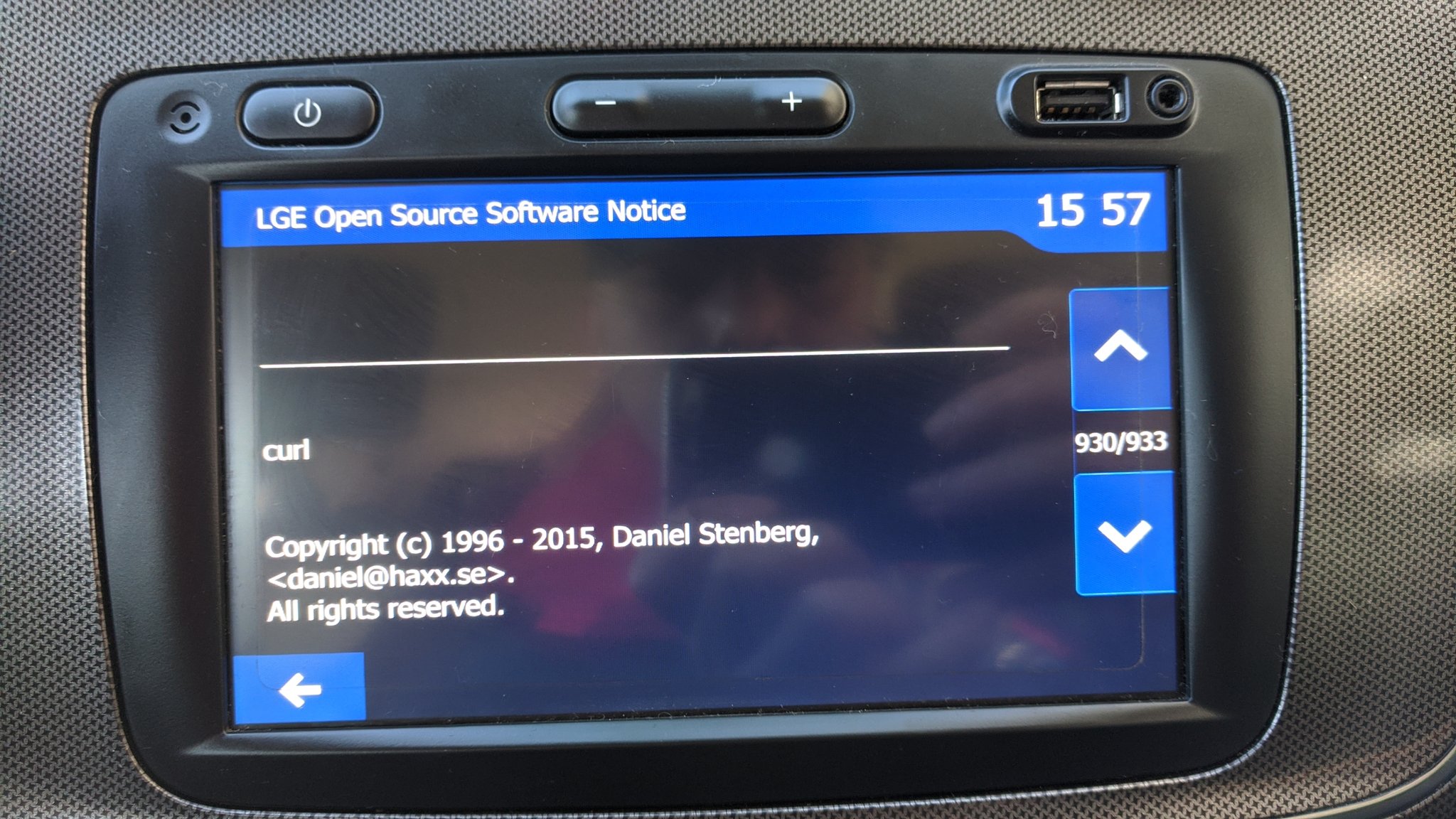

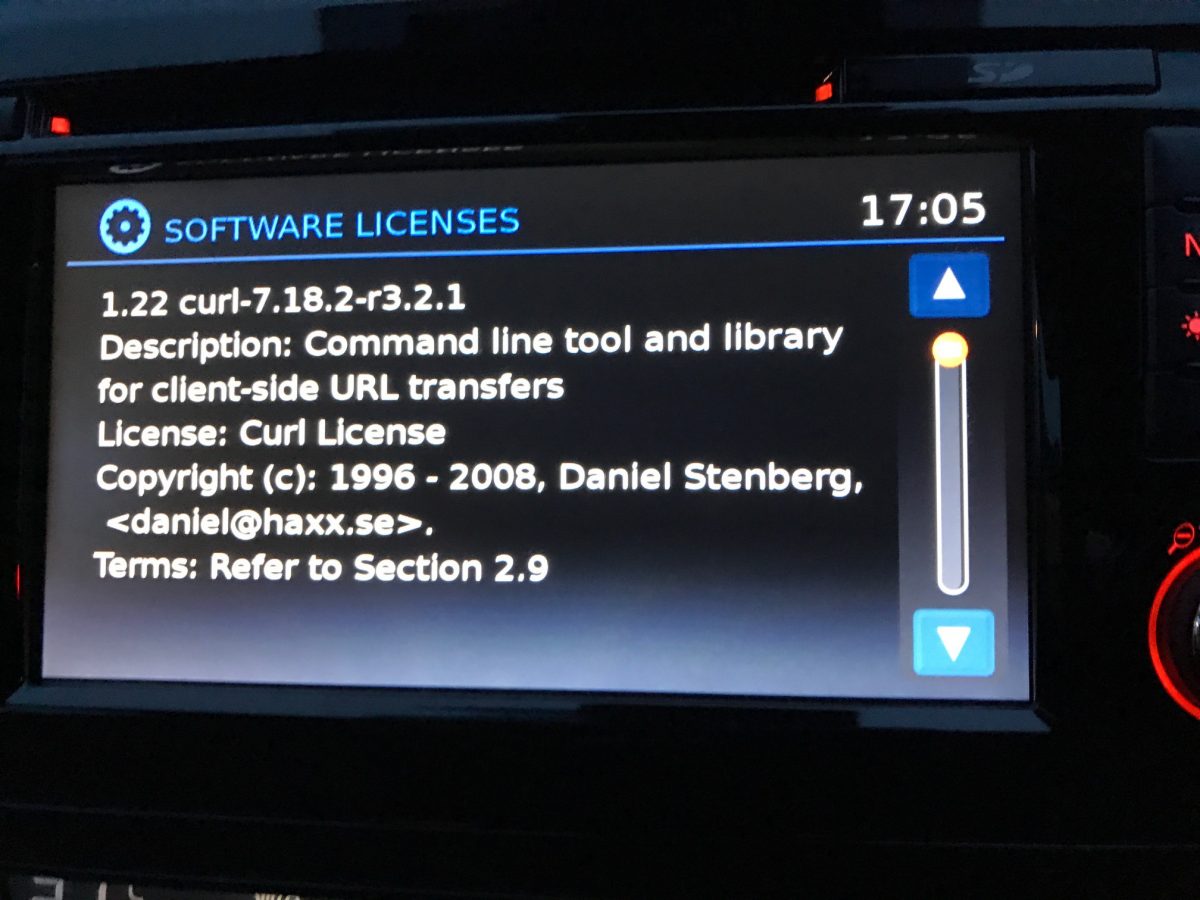

Nissan Qashqai 2016 (thanks to Peteski)

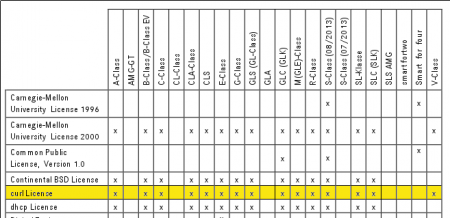

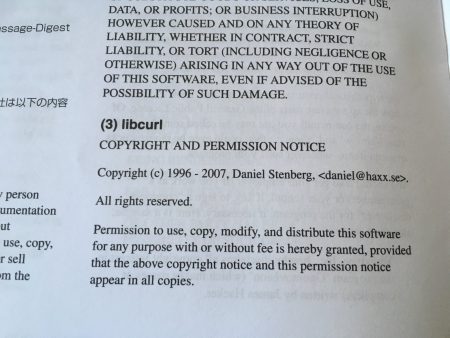

The Mercedes Benz license agreement from 2015 listing which car models that include curl.

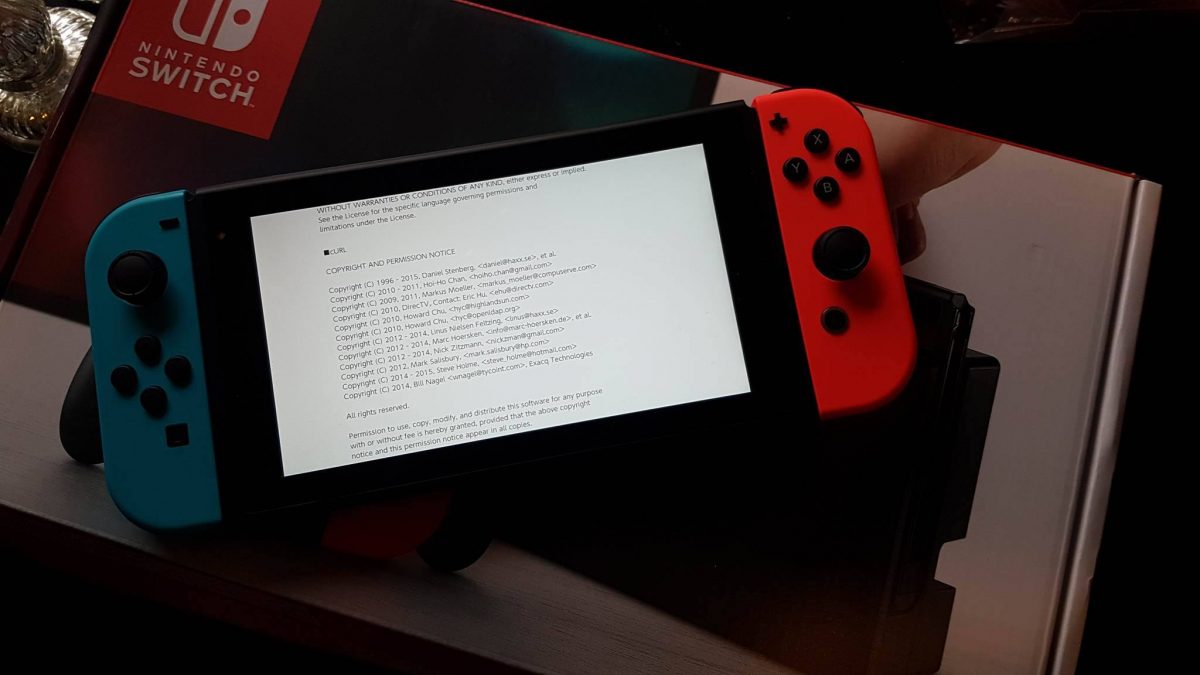

Nintendo Switch uses curl (Thanks to Anders Nilsson)

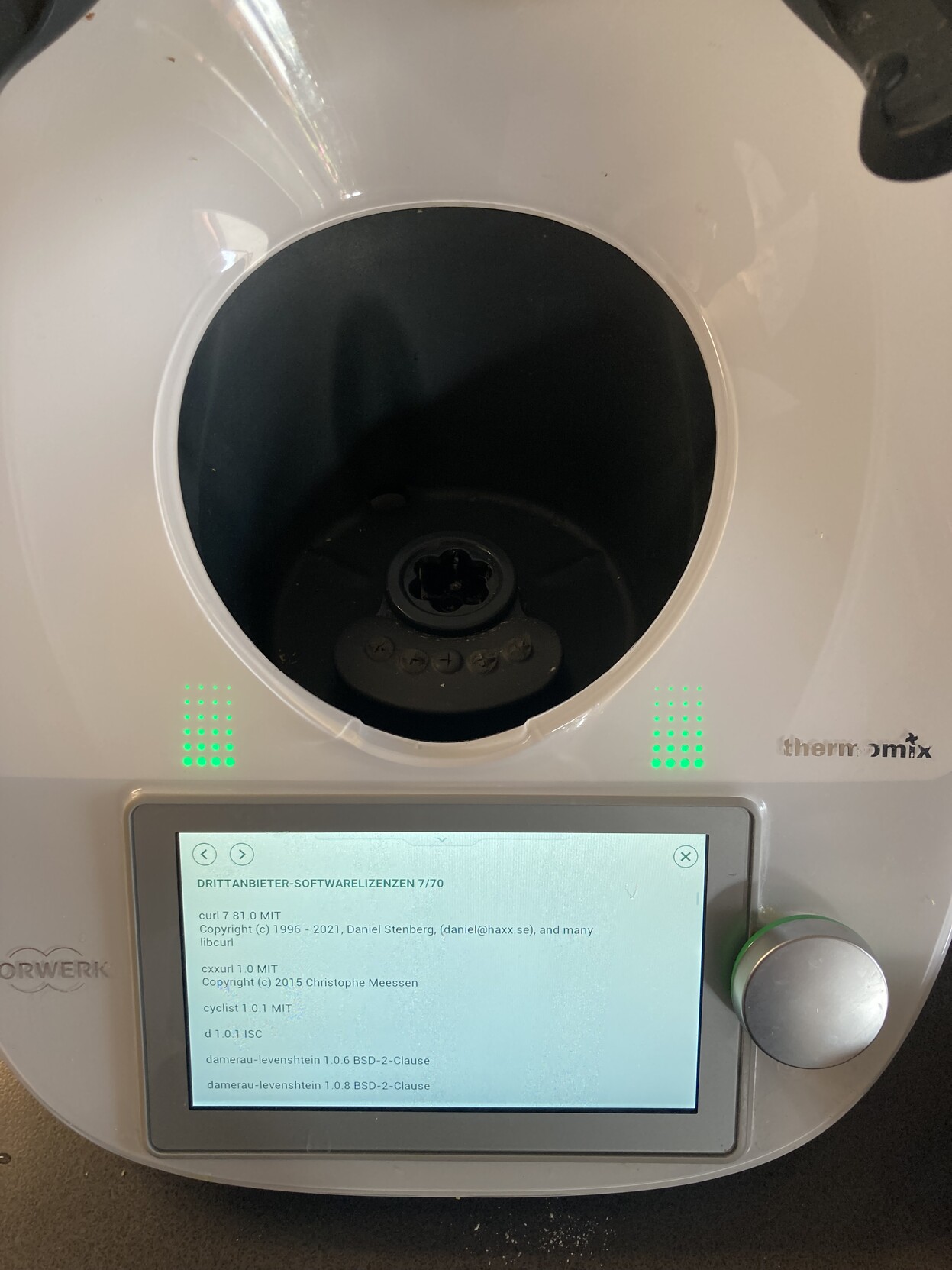

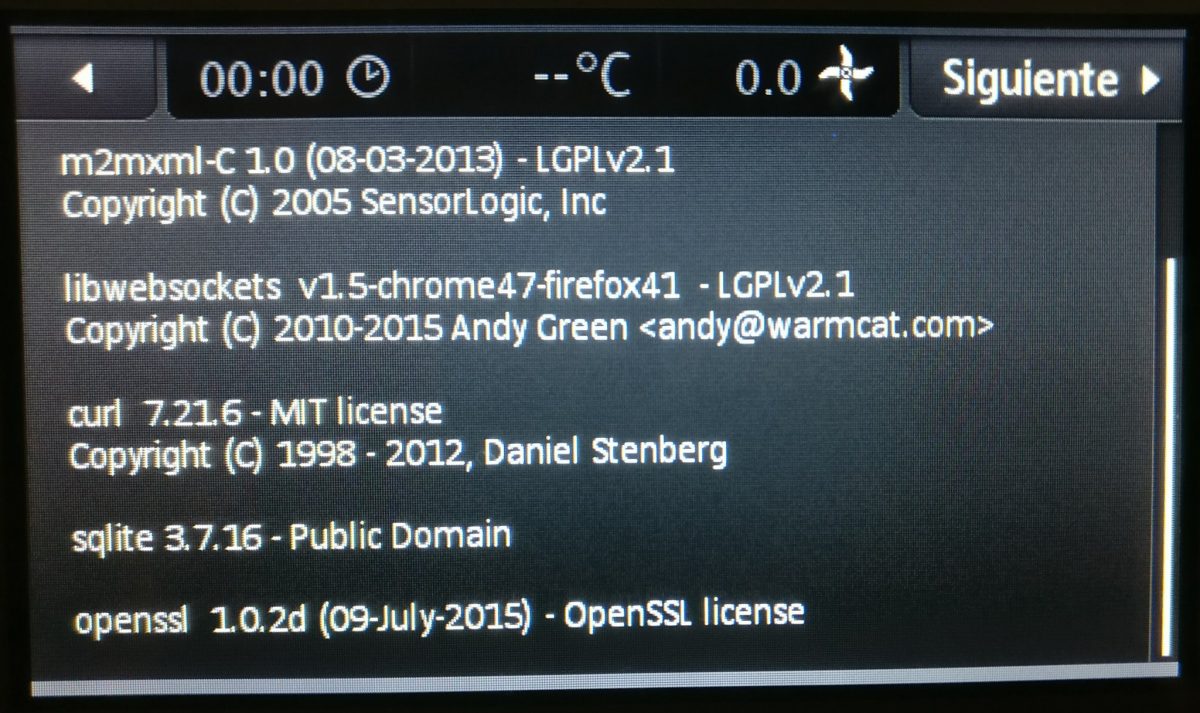

The Thermomix TM 5 kitchen/cooking appliance (Thanks to Sergio Conde)

Cisco Anyconnect (Thanks to Dane Knecht) – notice the age of the curl copyright string in comparison to the main one!

Sony Android TV (Thanks to Sajal Kayan)

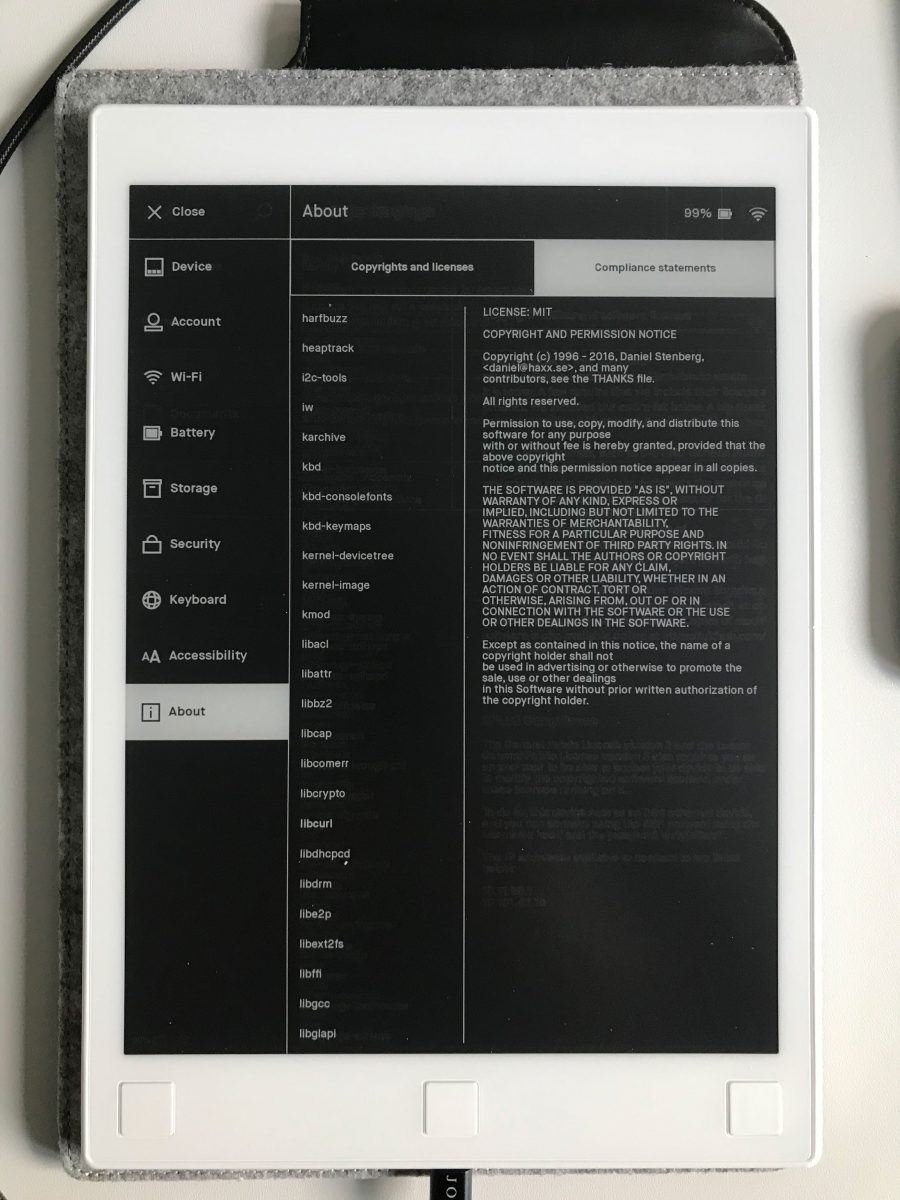

The reMarkable E-paper tablet uses curl. (Thanks to Zakx)

BMW i3, snapshot from this video (Thanks to Terence Eden)

BMW i8. (Thanks to eeeebbbbrrrr)

Amazon Kindle Paperwhite 3 (thanks to M Hasbini)

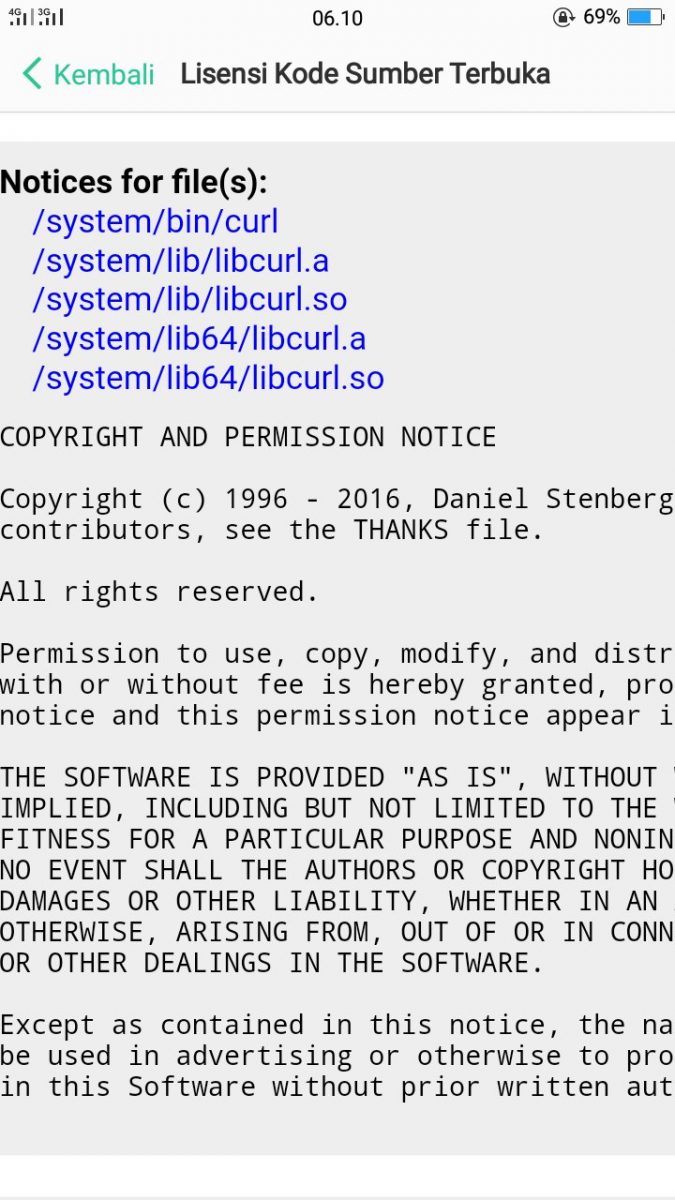

Xiaomi Android uses both curl and libcurl. (Thanks to Björn Stenberg)

Verisure V box microenhet smart lock runs curl (Thanks to Jonas Lejon)

curl in a Subaru (Thanks to Jani Tarvainen)

Another VW (Thanks to Michael Topal)

Oppo Android uses curl (Thanks to Dio Oktarianos Putra)

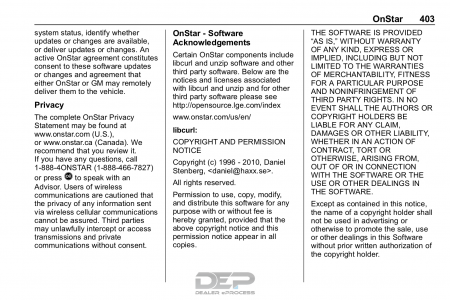

Chevrolet Traverse 2018 uses curl according to its owners manual on page 403. It is mentioned almost identically in other Chevrolet model manuals such as for the Corvette, the 2018 Camaro, the 2018 TRAX, the 2013 VOLT, the 2014 Express and the 2017 BOLT.

The curl license is also in owner manuals for other brands and models such as in the GMC Savana, Cadillac CT6 2016, Opel Zafira, Opel Insignia, Opel Astra, Opel Karl, Opel Cascada, Opel Mokka, Opel Ampera, Vauxhall Astra … (See 100 million cars run curl).

The Onkyo TX-NR609 AV-Receiver uses libcurl as shown by the license in its manual. (Thanks to Marc Hörsken)

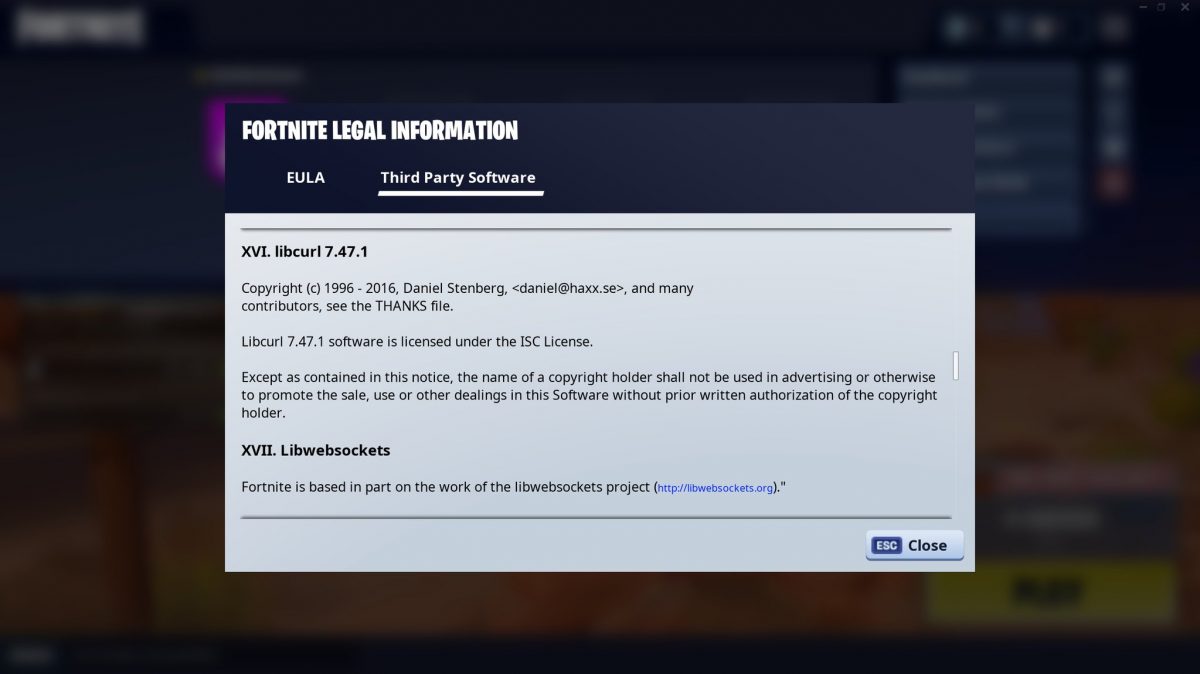

Fortnite uses libcurl. (Thanks to Neil McKeown)

Red Dead Redemption 2 uses libcurl. The ending sequence video. (Thanks to @nadbmal)

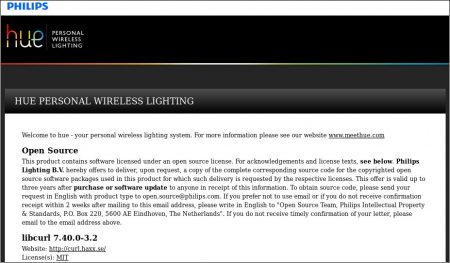

Philips Hue Lights uses libcurl (Thanks to Lorenzo Fontana)

Pioneer makes Blu-Ray players that use libcurl. (Thanks to Maarten Tijhof)

curl is credited in the game Marvel’s Spider-Man for PS4.

Garmin Fenix 5X Plus runs curl (thanks to Jonas Björk)

Crusader Kings II uses curl (thanks to Frank Gevaerts)

DiRT Rally 2.0 (PlayStation 4 version) uses curl (thanks to Roman)

Microsoft Flight Simulator uses libcurl. Thanks to Till von Ahnen.

Google Photos on Android uses curl.

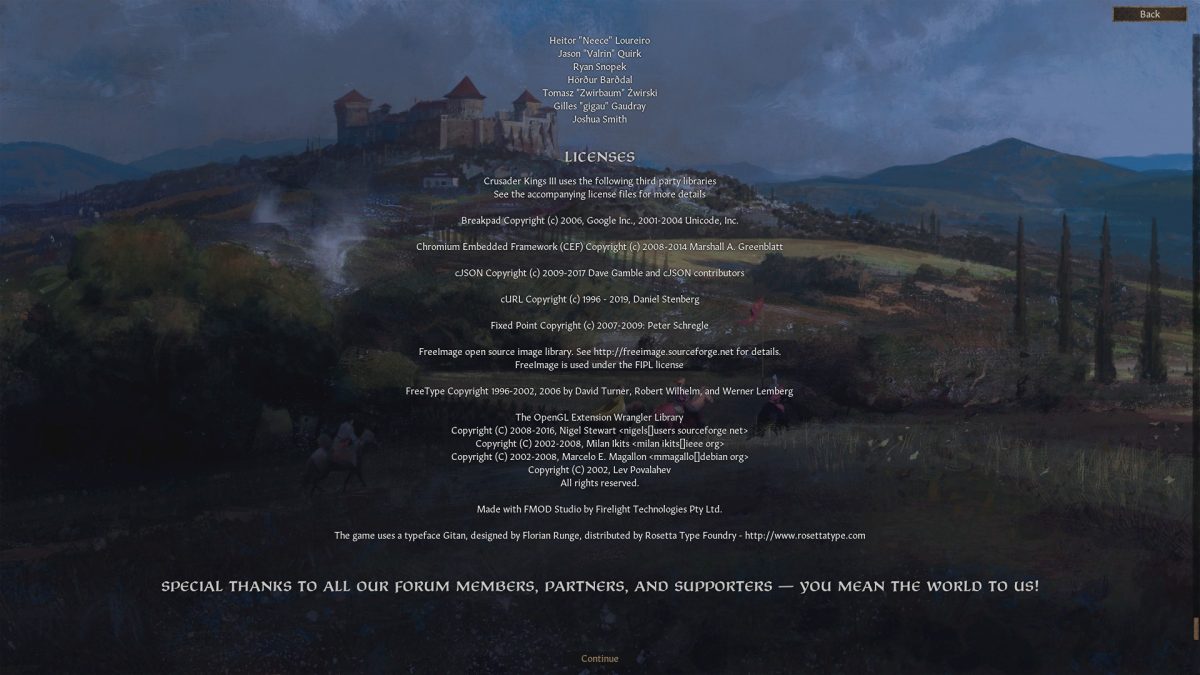

Crusader Kings III uses curl (thanks to Frank Gevaerts)

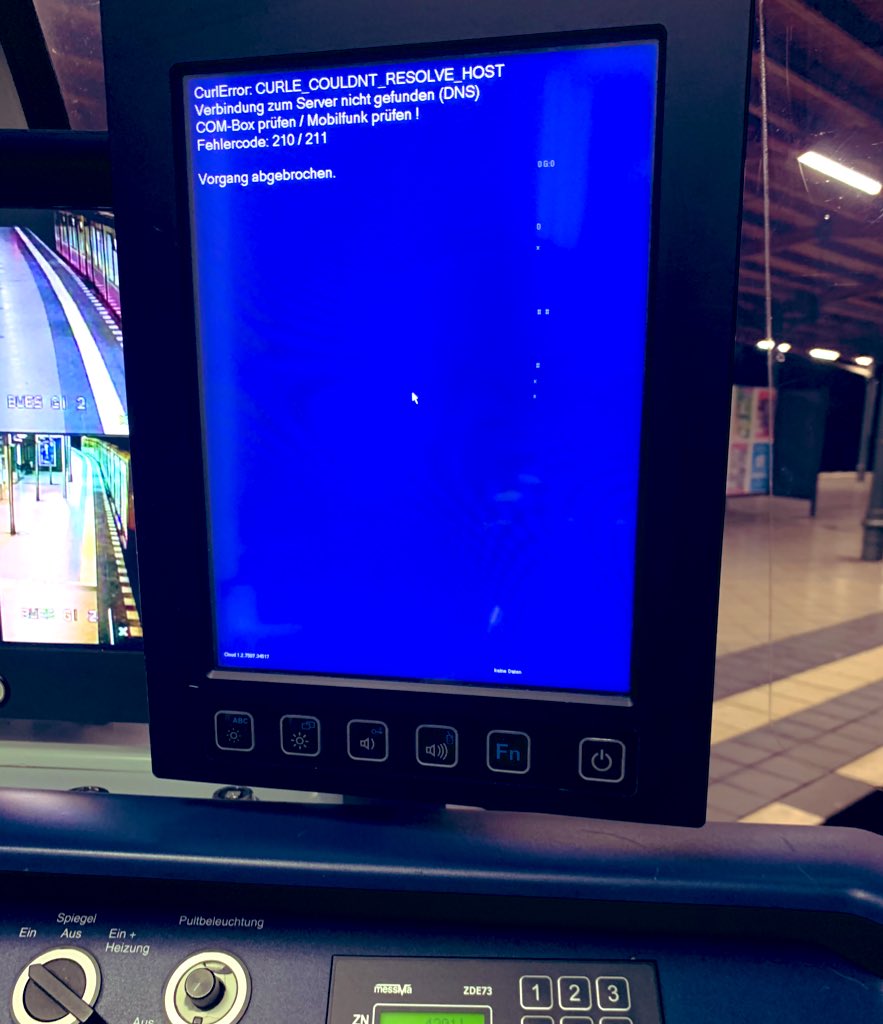

The SBahn train in Berlin uses curl! (Thanks to @_Bine__)

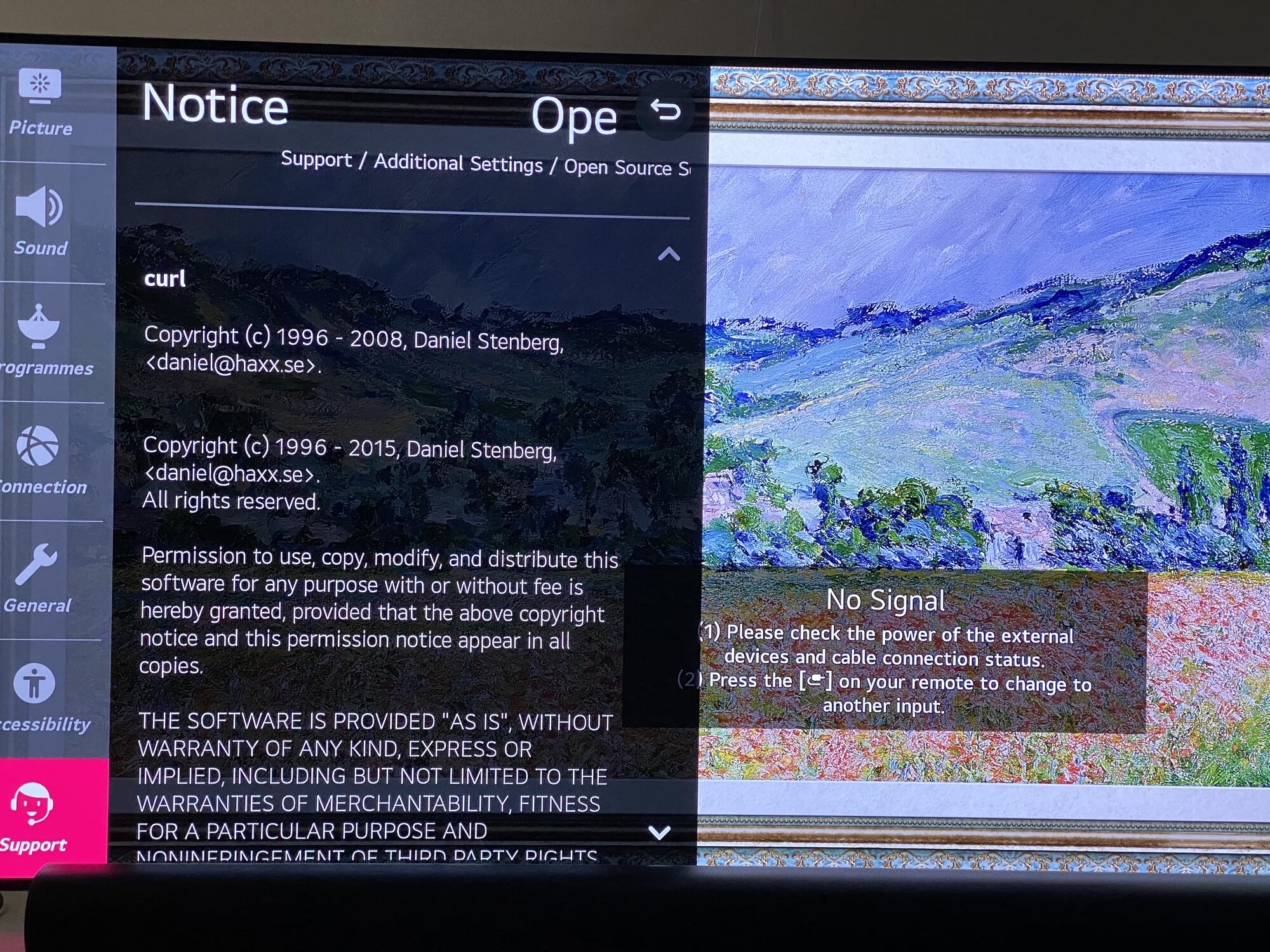

LG uses curl in TVs.

Garmin Forerunner 245 also runs curl (Thanks to Martin)

The bicycle computer Hammerhead Karoo v2 (thanks to Adrián Moreno Peña)

Playstation 5 uses curl (thanks to djs)

The Netflix app on Android uses libcurl (screenshot from January 29, 2021). Set to Swedish, hence the title of the screen.

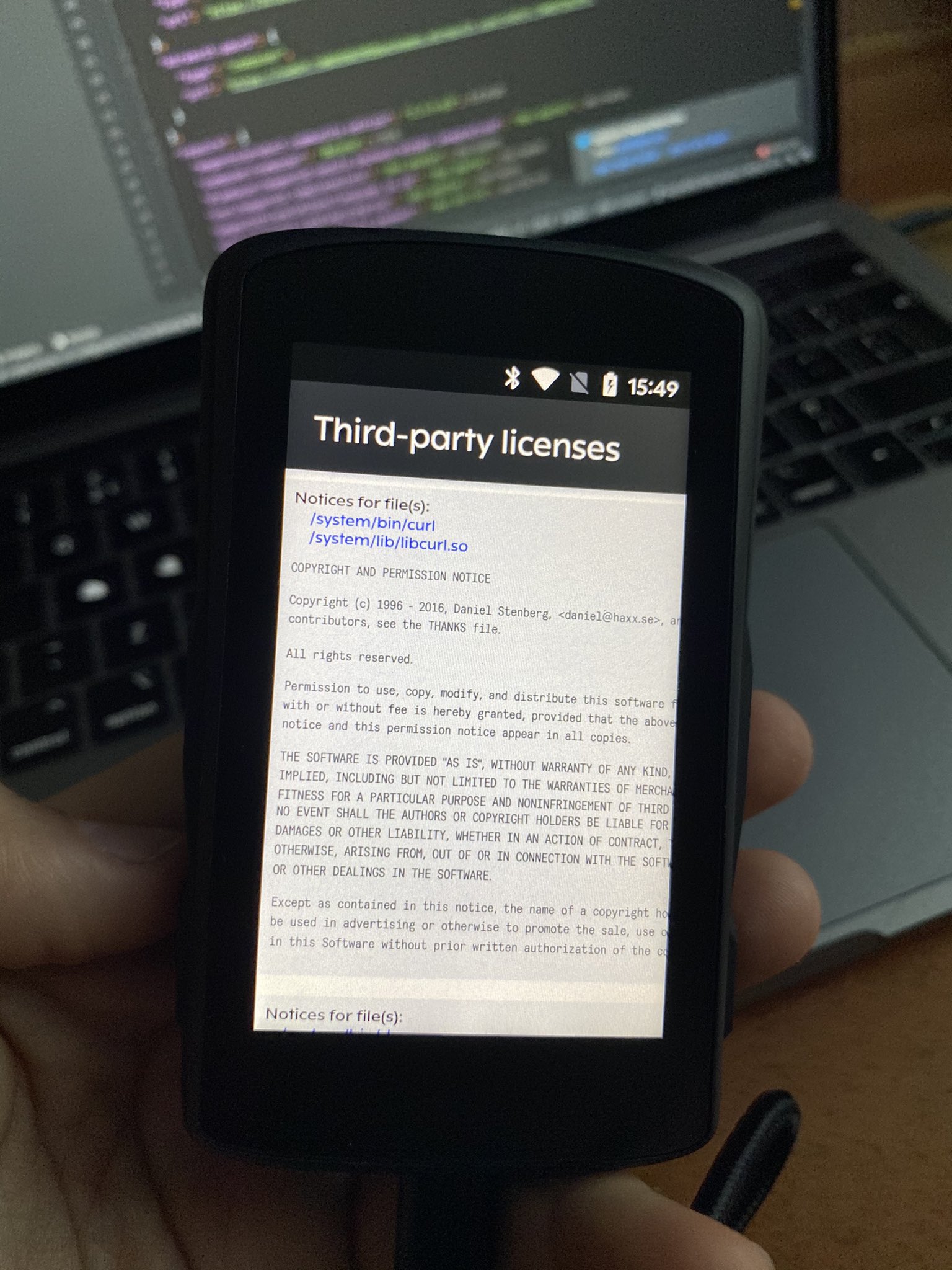

(Google) Android 11 uses libcurl. Screenshot from a Pixel 4a 5g.

Samsung Android uses libcurl in presumably every model…

The ending sequence as seen on YouTube.

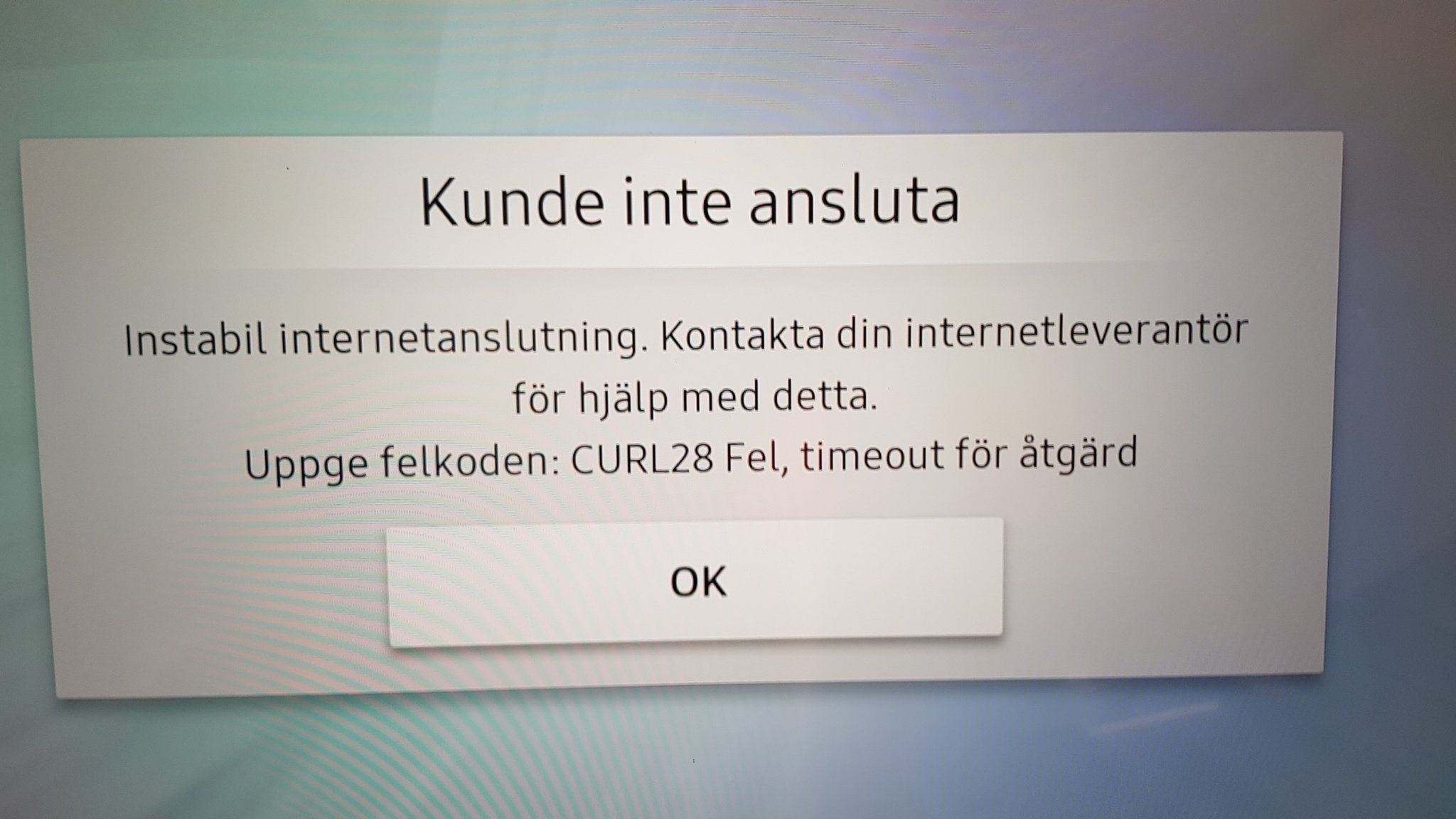

A Samsung TV speaking Swedish showing off a curl error code. Thanks to Thomas Svensson.

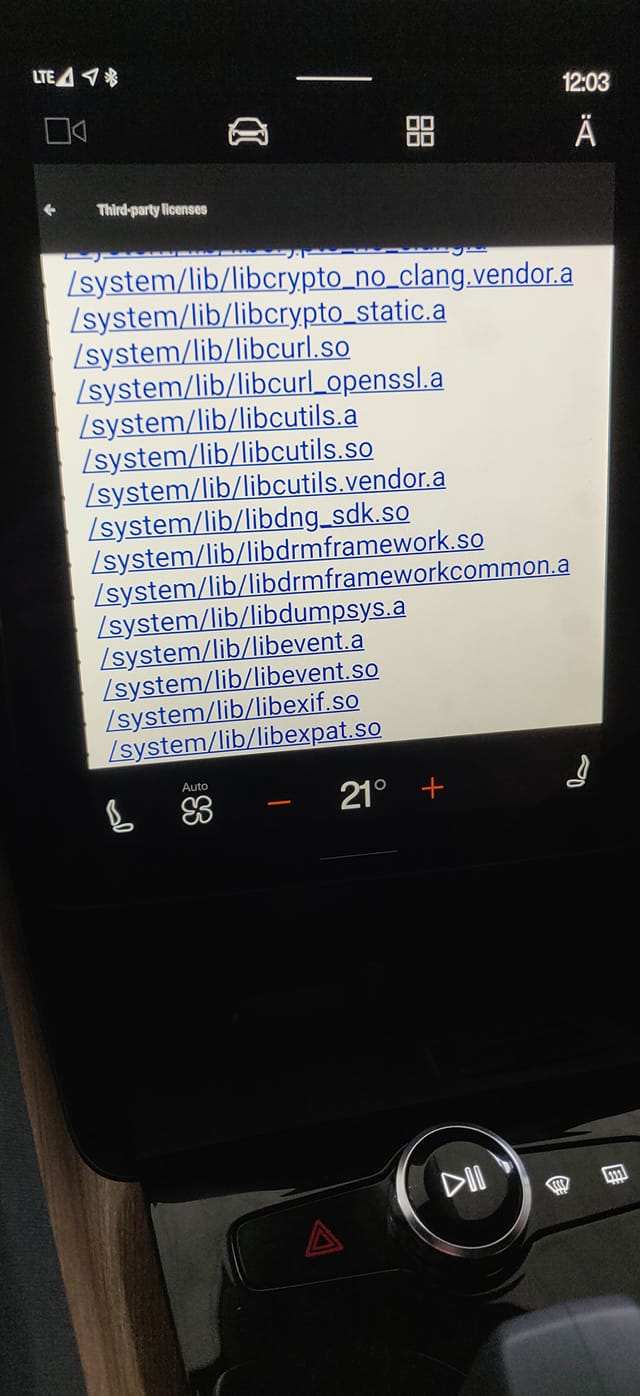

Polestar 2 (thanks to Robert Friberg for the picture)

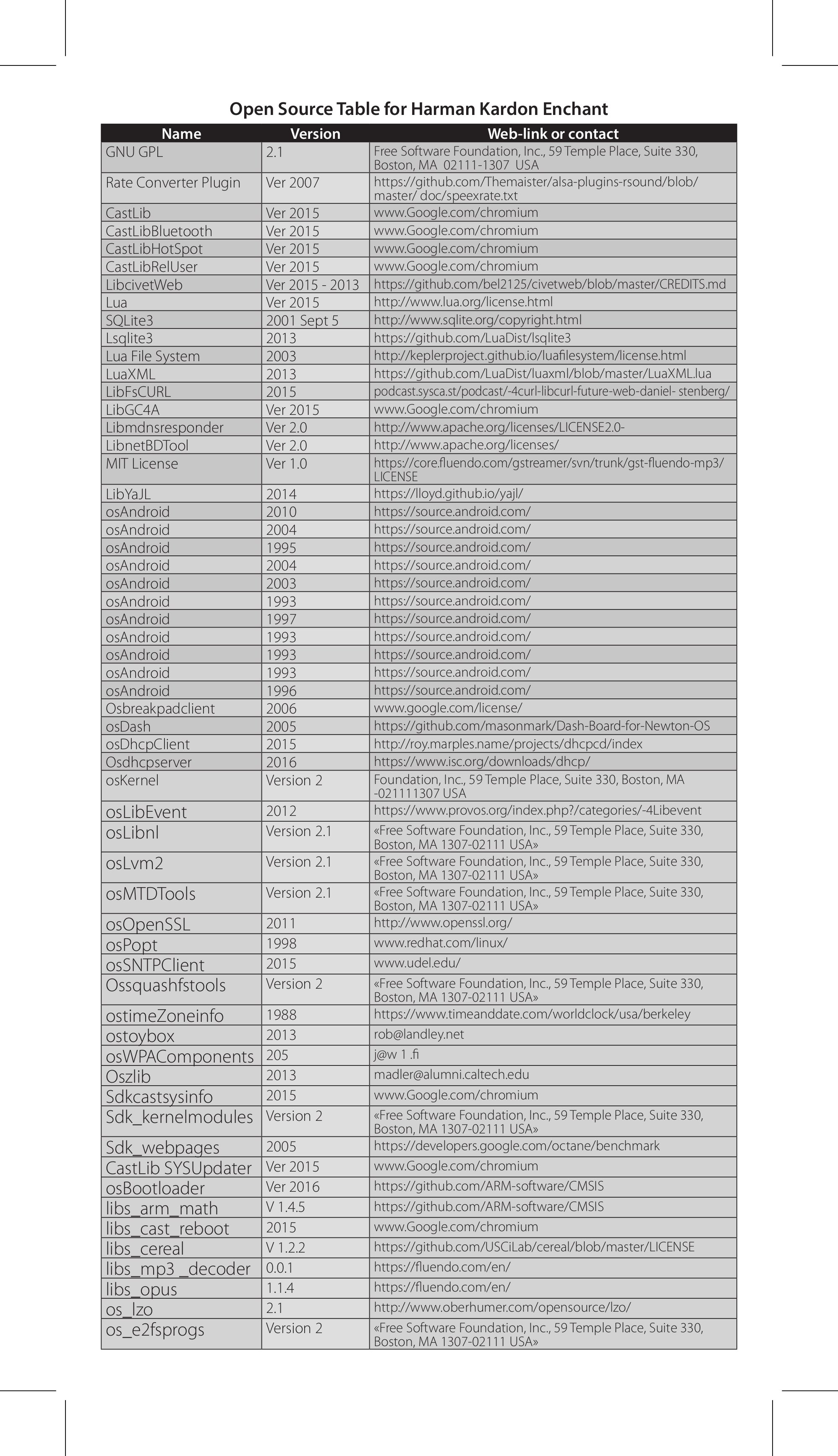

Harman Kardon uses libcurl in their Enchant soundbars (thanks to Fabien Benetou). The name and the link in that list are hilarious though.

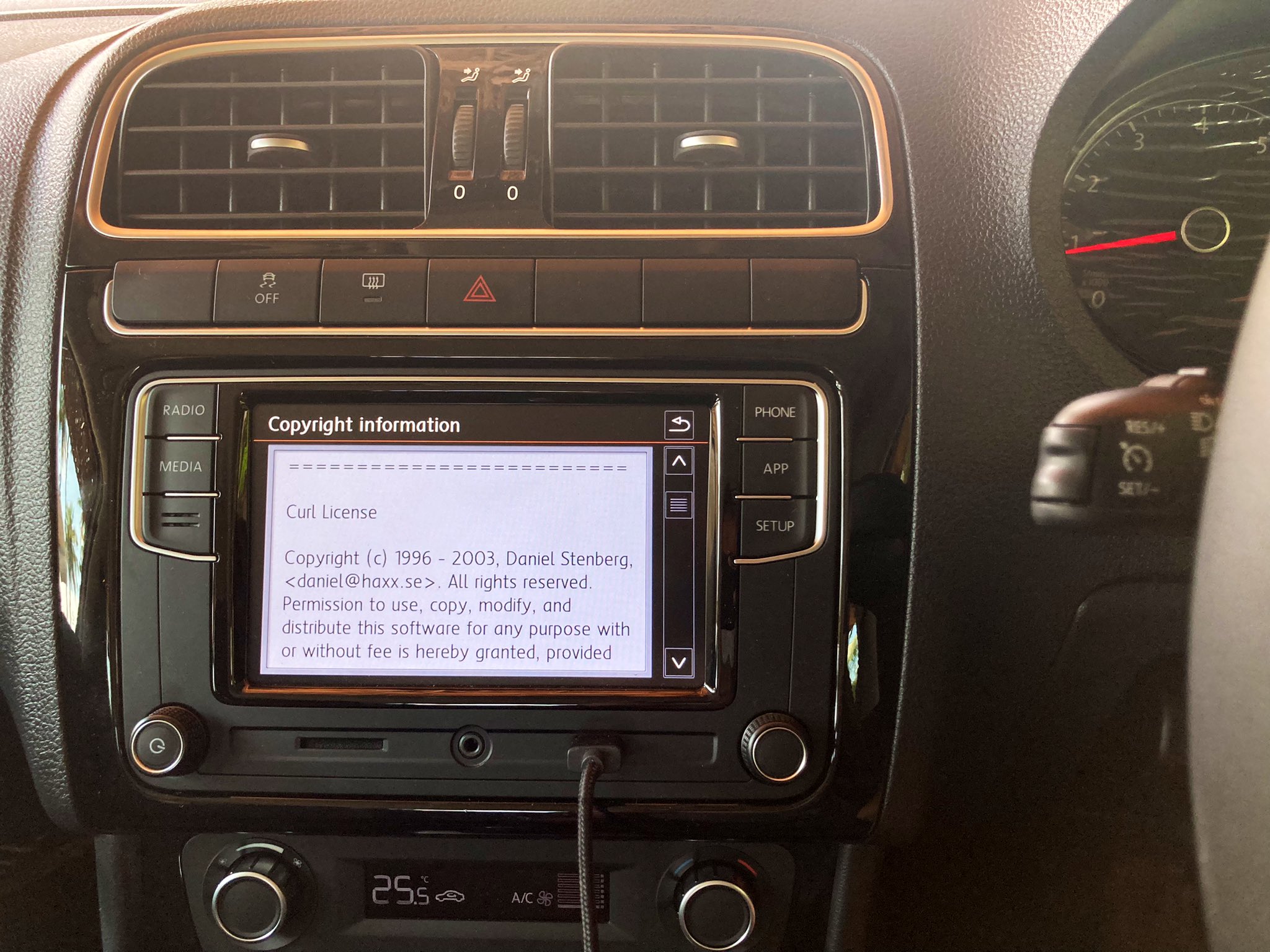

VW Polo running curl (Thanks to Vivek Selvaraj)

a BMW 2021 R1250GS motorcycle (Thanks to @growse)

Baldur’s Gate 3 uses libcurl (Thanks to Akhlis)

An Andersson TV using curl (Thanks to Björn Stenberg)

Ghost of Tsushima – a game. (Thanks to Patrik Svensson)

Sonic Frontier (Thanks to Esoteric Emperor)

The KAON NS1410 (set top box), possibly also called Mirada Inspire3 or Broadcom Nexus. (Thanks to Aksel)

The Panasonic DC-GH5 camera. (Thanks fasterthanlime)

Plexamp, the Android app. (Thanks Fabio Loli)

The Dacia Sandero Stepway car (Thanks Adnane Belmadiaf)

The Garmin Venu Sq watch (Thanks gergo)

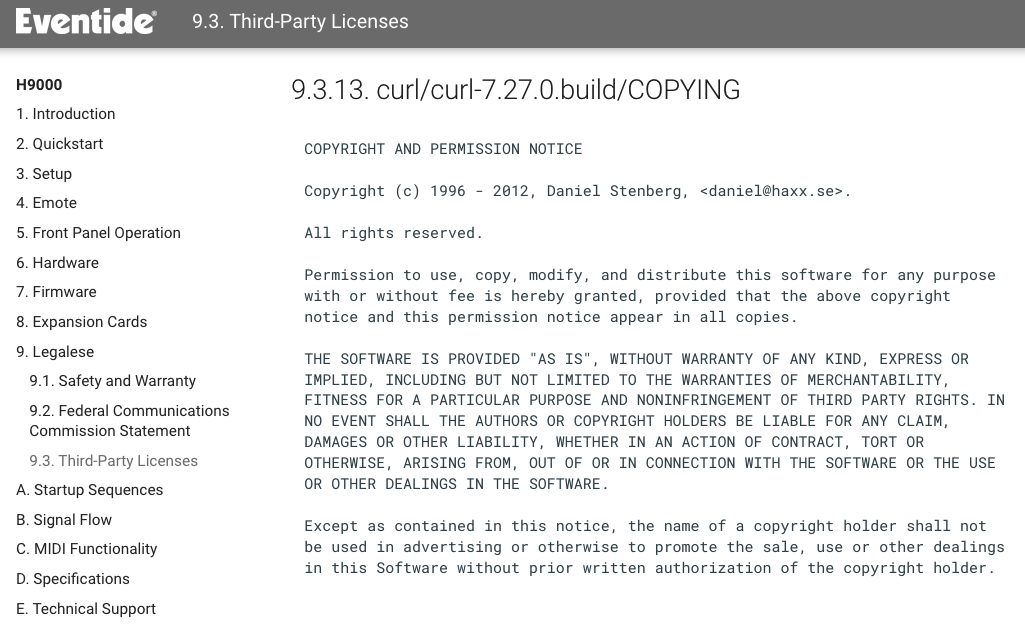

The Eventide H9000 runs curl. A high-end audio processing and effects device. (Thanks to John Baylies)

Diablo IV (Thanks to John Angelmo)

The Siemens EQ900 espresso machine runs curl. Screenshots below from a German version.

Thermomix TM6 by Vorwerk (Thanks to Uli H)

The Grandstream GXP2160 uses curl (thanks to Cameron Katri)

Assassin’s Creed Mirage (Thanks to Patrik Svensson)

Assassin’s Creed Shadows (Thanks to Mauricio Teixeira)

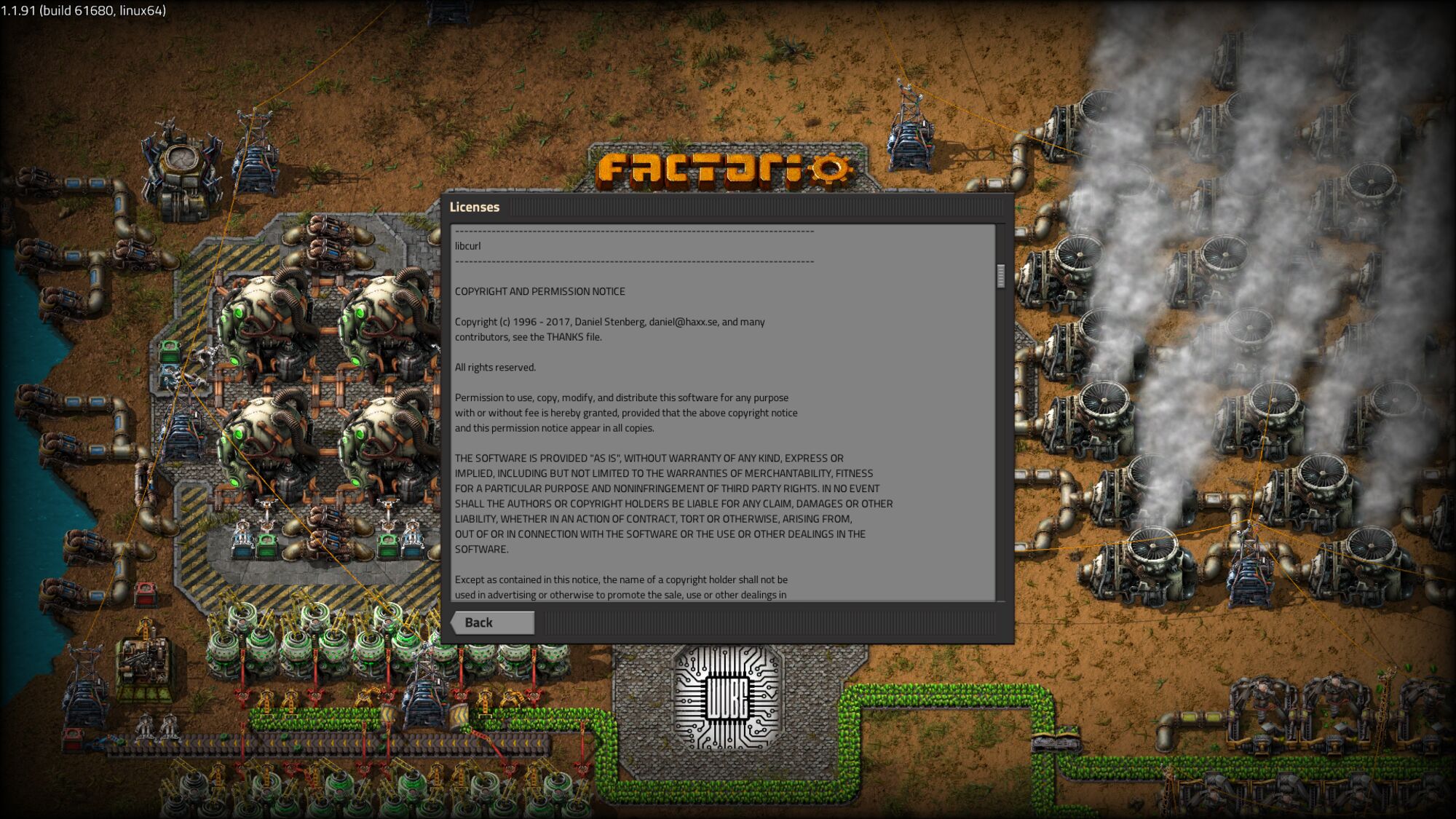

Factorio (Thanks to Der Große Böse Wolff)

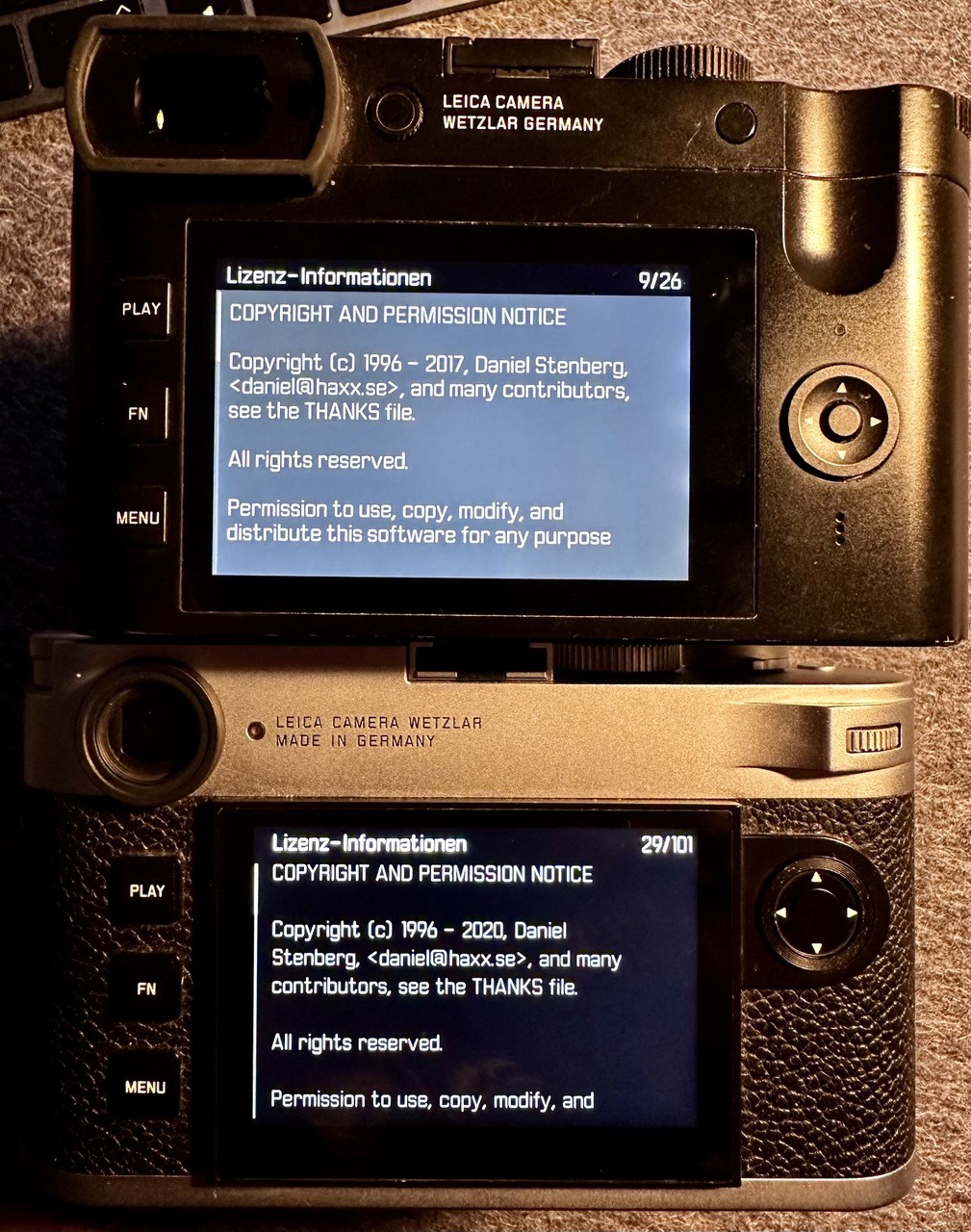

Leica Q2 and Leica M11 use curl (Thanks to PattaFeuFeu)

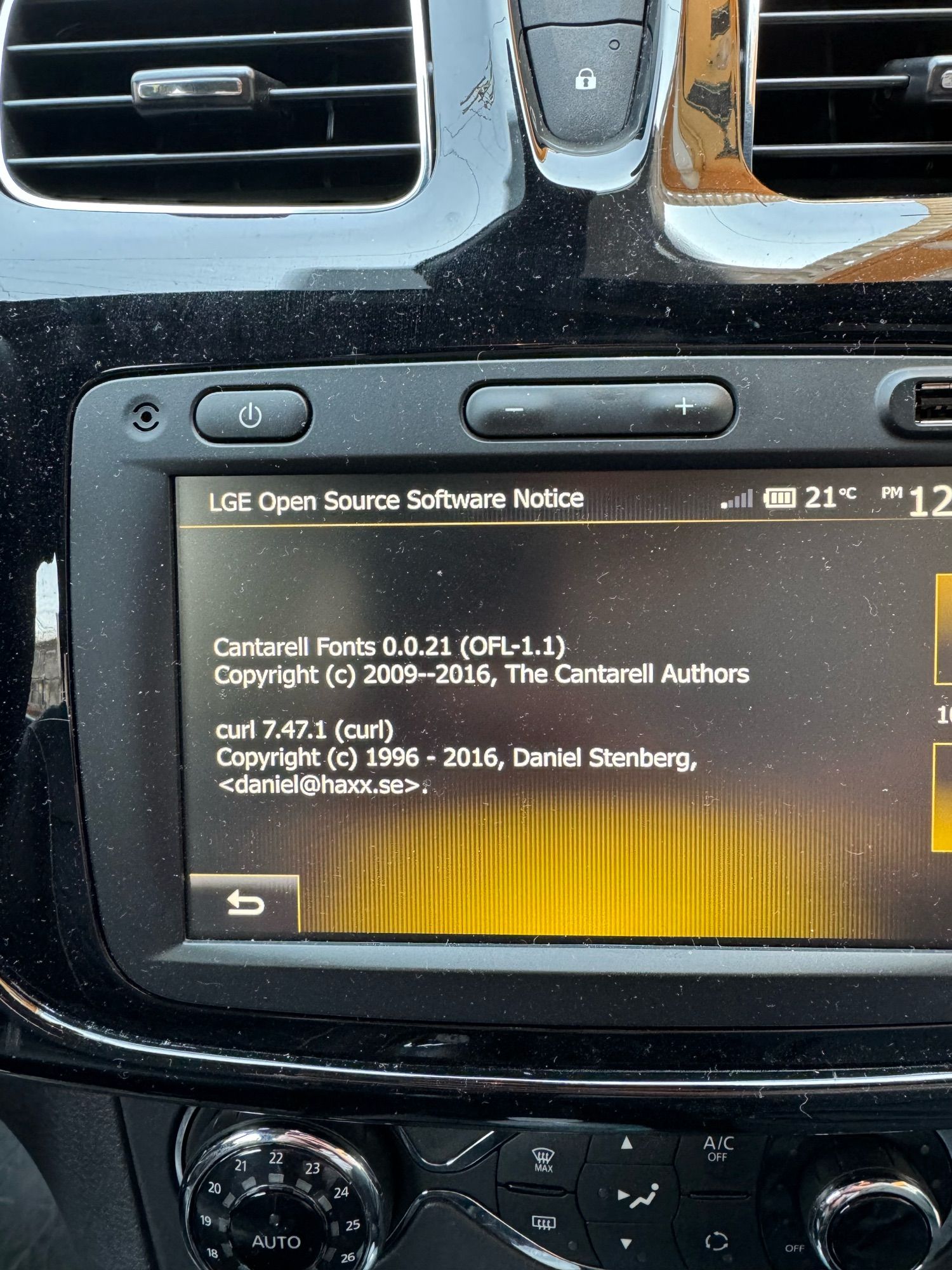

Renault Logan (thanks to Aksel)

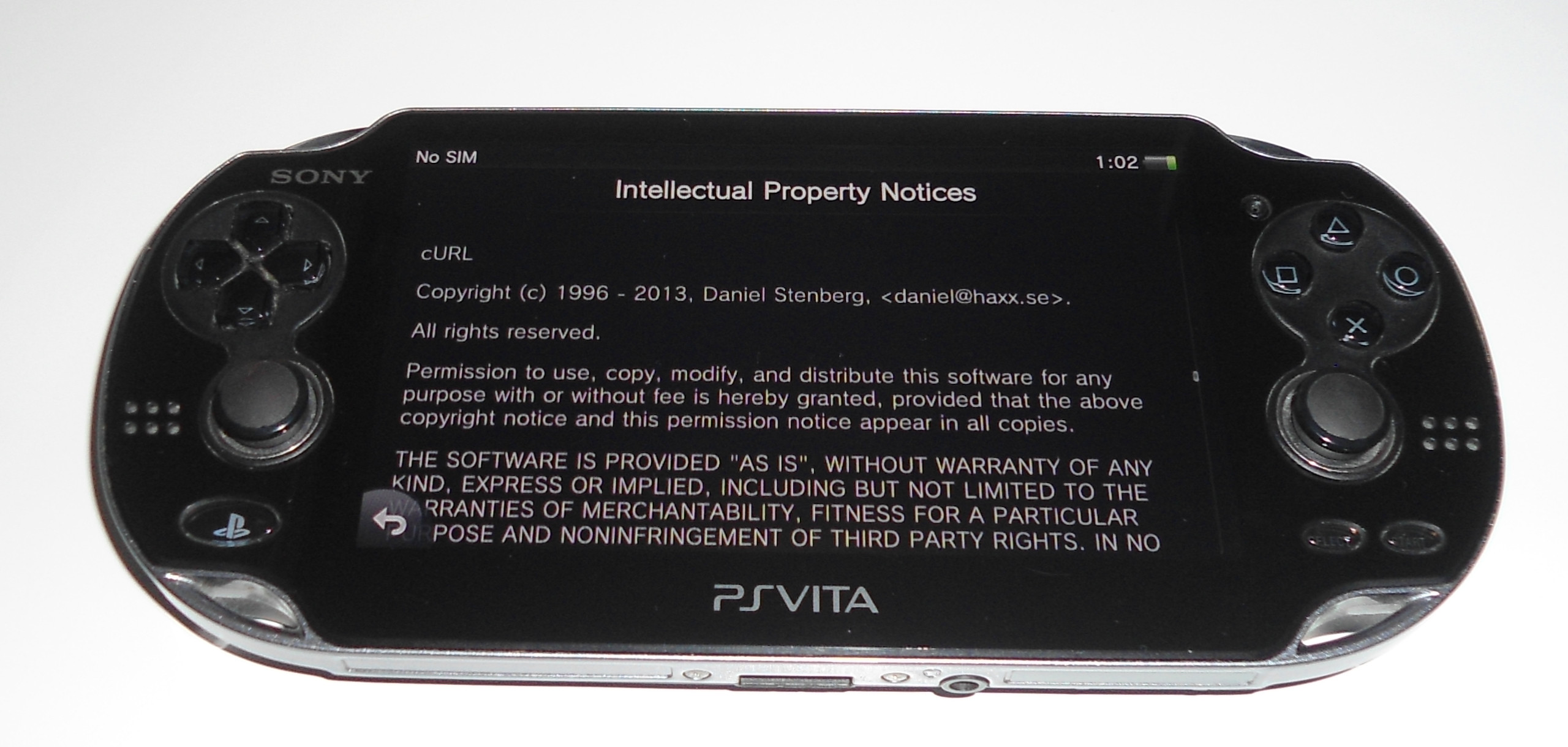

The original model of the PlayStation Vita (PCH-1000, 3G) (thanks to ml)

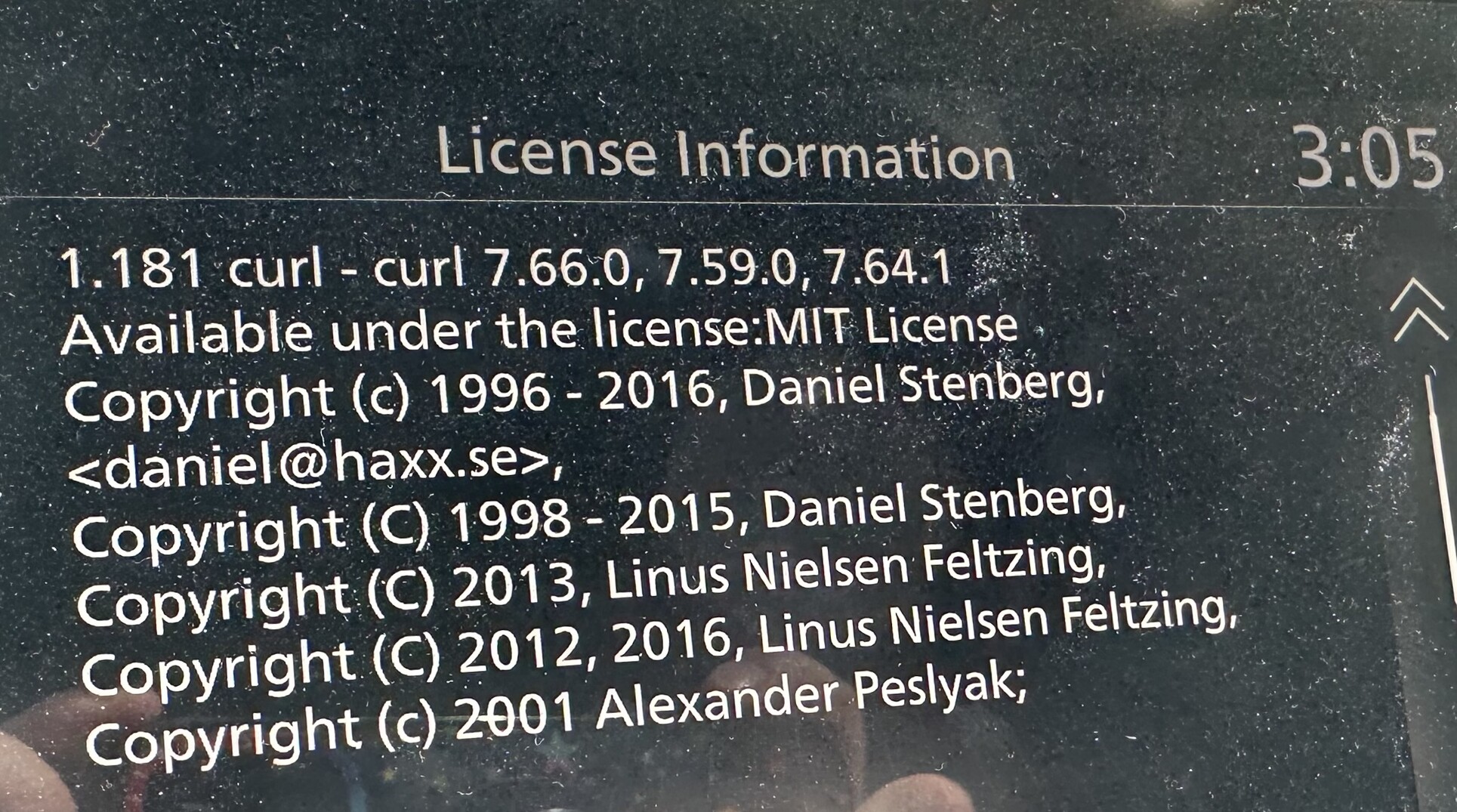

The 2023 Infiniti QX80, Premium Select trim level (an SUV)

Renault Scenic (thanks to Taxo Rubio)

Volvo XC40 Recharge 2024 edition obviously features a libcurl from 2019…

The Roland Fantom 6 Synthesizer Keyboard, runs curl (Thanks Anopka)

Used in the Mini Countryman (the car) (Thanks Alejandro Pablo Revilla)

Nissan Qashqai MY21 (Thanks Michele Adduci)

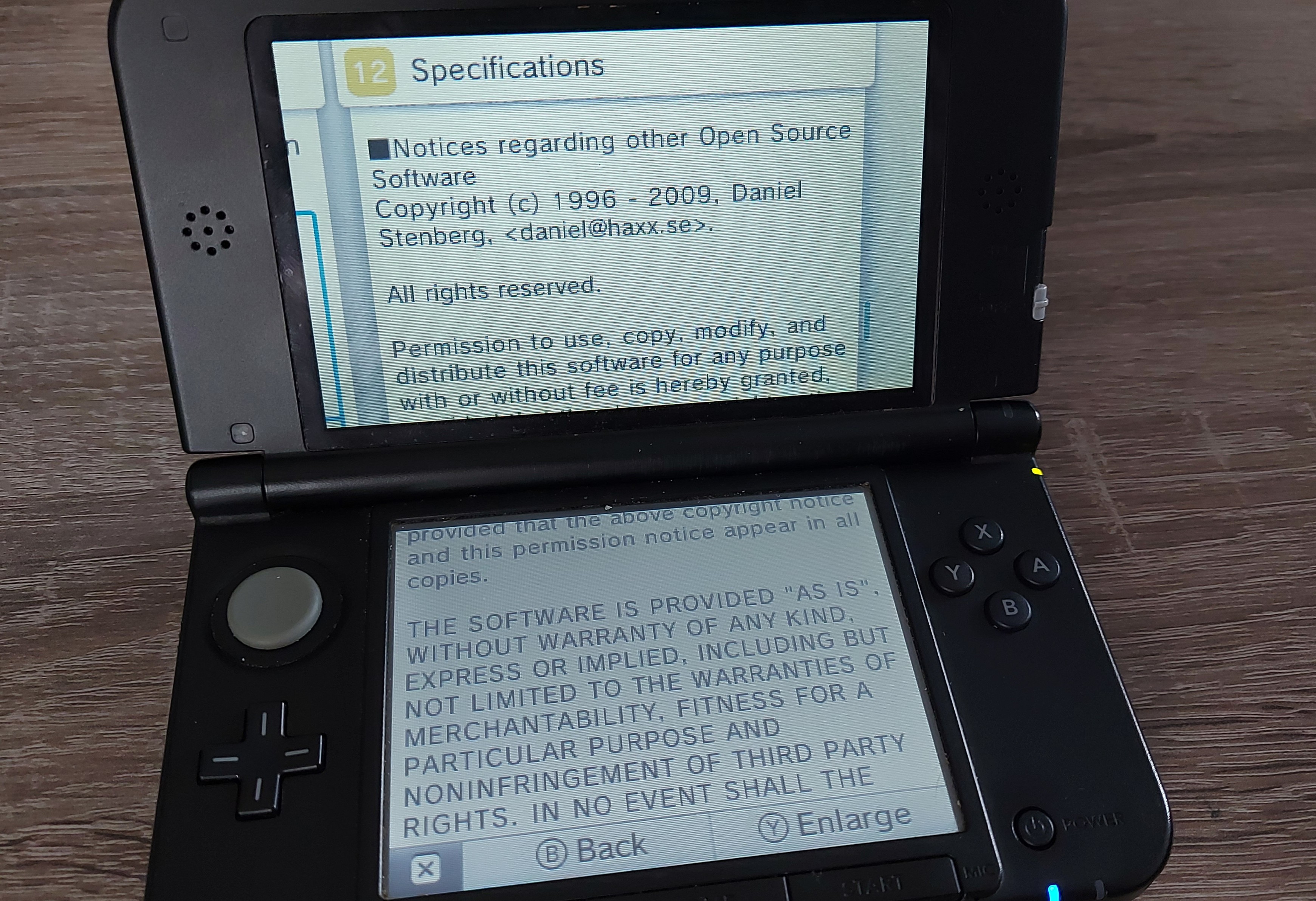

The Nintendo 3DS internet browser probably runs curl. It does not say so, but the license looks like the curl one. (Thanks to Marlon Pohl)

This is a Seat Leon (Thanks to Mormegil)

Chevrolet Tracker LTZ 2024 (Thanks Diego)

Used in Korean iOS apps. Left to right below: KakaoTalk, Naver and TMAP MOBILITY. Thanks to Hong Yongmin.

The BOOX 10.3″ Tab Ultra C Pro features curl. (Thanks Henrik Sandklef)

This SMART Interactive Display runs curl (Thanks Marcel)

The Nintendo Switch 2 runs curl (Thanks to Patrik Svensson)

Sonic Racing: CrossWorlds uses libcurl. (Thanks to Mufasa)

Saints Row: IV (Thanks to DCoder)

Warframe and Soulframe (games) use curl. Thanks.

25,000 curl questions on stackoverflow

Over time, I’ve reluctantly come to terms with the fact that a lot of the questions and answers about curl are not handled on the mailing lists we have set up in the project itself.

A primary such external site with curl related questions is of course stackoverflow – hardly news to programmers of today. The questions tagged with curl are of course only a very tiny fraction of the vast amount of questions and answers that accumulate on that busy site.

The pile of questions tagged with curl on stackoverflow has just surpassed the staggering number of 25,000. Of course, these questions involve persons who ask about particular curl behaviors (and a large portion is about PHP/CURL) but there’s also a significant amount of tags for questions where curl is only used to do something and that other something is actually what the question is about. And ‘libcurl’ is used as a separate tag and is often used independently of the ‘curl’ one. libcurl is tagged on almost 2,000 questions.

But still. 25,000 questions. Wow.

I visit that site every so often and answer some questions but I often end up feeling a great “distance” between me and questions there, and I have a hard time bridging that gap. Also, stackoverflow the site and the format isn’t really suitable for debugging or solving problems within curl so I often end up trying to get the user to move over to file an issue on curl’s github page or discuss the curl problem on a mailing list instead. Forums more suitable for plenty of back-and-forth before the solution or fix is figured out.

Now, any bets for how long it takes until we reach 100K questions?

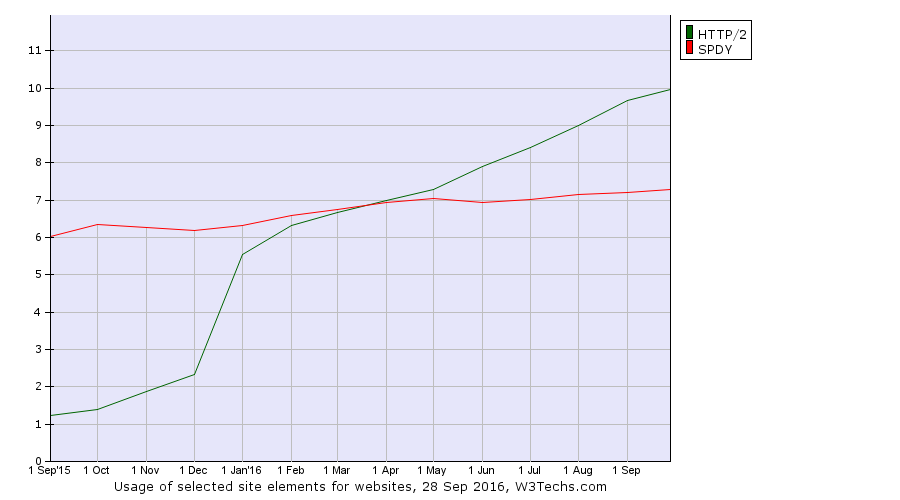

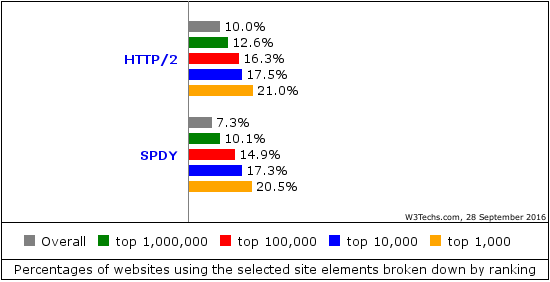

1,000,000 sites run HTTP/2

… out of the top ten million sites that is. So there’s at least that many, quite likely a few more.

This is according to w3techs, who run checks daily. Over the last few months, there have been about 50,000 new sites per month switching it on.

It also shows that the HTTP/2 ratio has increased from a little over 1% deployment a year ago to 10% today.

HTTP/2 is used more the more popular a site is. Among the top 1,000 sites on the web, more than 20% of them use HTTP/2. HTTP/2 also just recently (September 9) overcame SPDY among the top-1000 most popular sites.

On September 7, Amazon announced their CloudFront service having enabled HTTP/2, which could explain an adoption boost over the last few days. New CloudFront users get it enabled by default but existing users actually need to go in and click a checkbox to make it happen.

As the web traffic of the world is severely skewed toward the top ones, we can be sure that a significantly larger share than 10% of the world’s HTTPS traffic is using version 2.

Recent usage stats in Firefox show that HTTP/2 is used in half of all its HTTPS requests!

A sea of curl stickers

To spread the word, to show off the logo, to share the love, to boost the brand, to allow us to fill up our own and our friends’ laptop covers I ordered a set of curl stickers to hand out to friends and fans whenever I meet any going forward. They arrived today, and I thought I’d give you a look. (You can still purchase your own set of curl stickers from unixstickers.com)

The sticker is 74 x 26 mm at its max.

My first 20 years of HTTP

During the autumn of 1996 I took my first swim in the ocean known as HTTP. Twenty years ago now.

I had previously worked with writing an IRC bot in C, and IRC is a pretty simple text based protocol over TCP so I could use some experiences from that when I started to look into HTTP. That IRC bot was my first real application distributed to the world that was using TCP/IP. It was portable to most unixes and Amiga and it was open source.

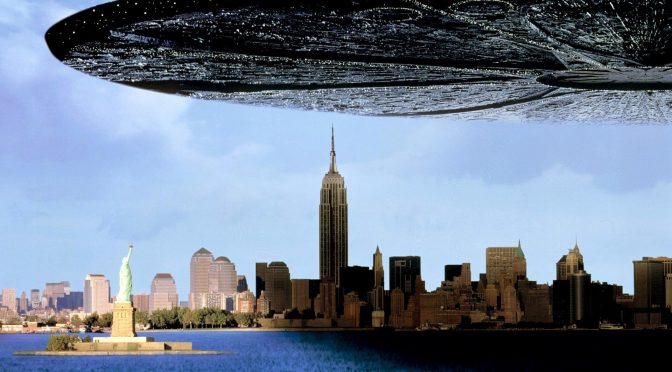

1996 was the year the movie Independence Day premiered and the single hit song that plagued the world more than others that year was called Macarena. AOL, Webcrawler and Netscape were the most popular websites on the Internet. There were fewer than 300,000 web sites on the Internet (compared to some 900 million today).

I decided I should spice up the bot and make it offer a currency exchange rate service so that people who were chatting could ask the bot what 200 SEK is when converted to USD or what 50 AUD might be in DEM. – Right, there was no Euro currency yet back then!

I simply had to fetch the currency rates at a regular interval and keep them in the same server that ran the bot. I just needed a little tool to download the rates over HTTP. How hard can that be? I googled around (this was before Google existed so that was not the search engine I could use!) and found a tool named ‘httpget’ that did pretty much what I wanted. It truly was tiny – a few hundred lines of code.

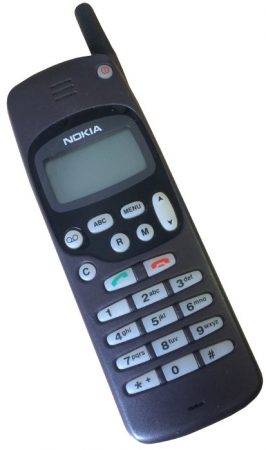

I don’t have an exact date saved or recorded for when this happened, only the general time frame. You know, we had no smart phones, no Google calendar and no digital cameras. I sported my first mobile phone back then, the sexy Nokia 1610 – viewed in the picture on the right here.

The HTTP/1.0 RFC had just recently come out – which was the first ever real spec published for HTTP. RFC 1945 was published in May 1996, but I was blissfully unaware of the youth of the standard and I plunged into my little project. This was the first published HTTP spec and it says:

HTTP has been in use by the World-Wide Web global information initiative since 1990. This specification reflects common usage of the protocol referred to as "HTTP/1.0". This specification describes the features that seem to be consistently implemented in most HTTP/1.0 clients and servers.

Many years after that point in time, I have learned that already at this time when I first searched for an HTTP tool to use, wget already existed. I can’t recall that I found that in my searches, and if I had found it maybe history would’ve made a different turn for me. Or maybe I found it and discarded it for a reason I can’t remember now.

I wasn’t the original author of httpget; Rafael Sagula was. But I started contributing fixes and changes and soon I was the maintainer of it. Unfortunately I’ve lost my emails and source code history from those earliest years so I cannot easily show my first steps. Even the oldest changelogs show that we very soon got help and contributions from users.

The earliest saved code archive I have from those days is from after we had added support for Gopher and FTP and renamed the tool ‘urlget’. urlget-3.5.zip was released on January 20 1998, which thus was more than a year after my involvement in httpget started.

The original httpget/urlget/curl code was stored in CVS and it was licensed under the GPL. I did most of the early development on SunOS and Solaris machines as my first experiments with Linux didn’t start until 97/98 something.

The first web page I know we have saved on archive.org is from December 1998 and by then the project had been renamed to curl already. Roughly two years after the start of the journey.

RFC 2068 was the first HTTP/1.1 spec. It was released already in January 1997, so not that long after the 1.0 spec shipped. In our project however we stuck with doing HTTP 1.0 for a few years longer and it wasn’t until February 2001 we first started doing HTTP/1.1 requests. First shipped in curl 7.7. By then the follow-up spec to HTTP/1.1, RFC 2616, had already been published as well.

The IETF working group called HTTPbis was started in 2007 to once again refresh the HTTP/1.1 spec, but it took me a while until someone pointed this out to me and I realized that I too could join in there and do my part. Up until this point, I had not really considered that little me could actually participate in the protocol doings and bring my views and ideas to the table. At this point, I learned about IETF and how it works.

I posted my first emails on that list in the spring of 2008. The 75th IETF meeting in the summer of 2009 was held in Stockholm, so for me still working on HTTP only as a spare time project it was very fortunate and good timing. I could meet a lot of my HTTP heroes and HTTPbis participants in real life for the first time.

I have participated in the HTTPbis group ever since then, trying to uphold the views and standpoints of a command line tool and HTTP library – which often is not the same as the web browser representatives’ way of looking at things. Since I was employed by Mozilla in 2014, I am of course now also in the “web browser camp” to some extent, but I remain a protocol puritan as curl remains my first “child”.