The Mr Robot TV series features a security expert and hacker lead character, Elliot.

Season 4, episode 8

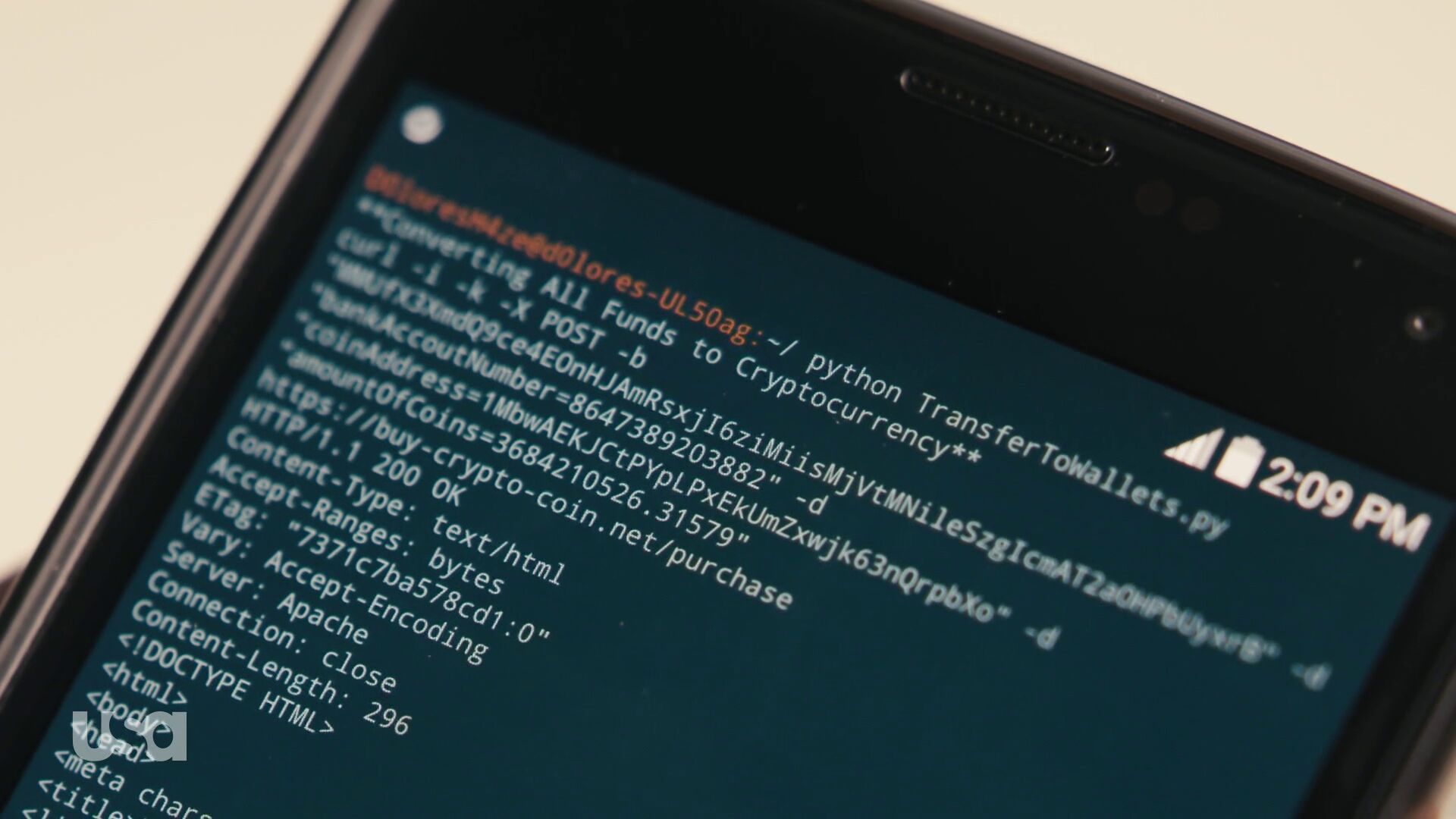

Vasilis Lourdas reported a “curl sighting” in the show, so I took a closer peek. And what do we see some 37 minutes 36 seconds into season 4, episode 8…

(I haven’t followed the show since at some point in season two so I cannot speak for what actually has happened in the plot up to this point. I’m only looking at and talking about what’s on the screenshots here.)

Elliot writes Python. In this Python program, we can see two curl invocations, both unfortunately a bit blurry on the right side so it’s hard to see them exactly (the blur is really there in the source and I couldn’t catch a single frame without it). Fortunately, I think we get some additional clues later on in episode 10, see below.

He invokes curl with -i to see the response headers coming back, but then he makes some questionable choices. The -k option is the short version of --insecure. It truly makes an HTTPS connection insecure since it completely switches off the CA cert verification. We all know no serious hacker would do that in real-world use.
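To make concrete what switching off verification means, here is a minimal sketch using Python’s standard ssl module rather than curl itself. The helper name make_context is made up for this illustration, and it only roughly mirrors what -k does; it is not how curl implements the option.

```python
import ssl

def make_context(insecure: bool = False) -> ssl.SSLContext:
    """Return a TLS client context; insecure=True roughly mirrors curl -k."""
    # The default context verifies the certificate chain and the host name.
    ctx = ssl.create_default_context()
    if insecure:
        # Order matters: host name checking has to be switched off before
        # certificate verification can be disabled.
        ctx.check_hostname = False
        ctx.verify_mode = ssl.CERT_NONE
    return ctx

strict = make_context()
sloppy = make_context(insecure=True)
print(strict.verify_mode, strict.check_hostname)
print(sloppy.verify_mode, sloppy.check_hostname)
```

With the insecure variant, any server that can complete the handshake is accepted, which is exactly why it is a questionable default for a script.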

Perhaps the biggest problem for me, however, is the -X POST that follows. In itself it doesn’t have to be bad, but taking the second shot from episode 10 into account, we see that he really does combine it with -d. Since -d already makes curl send a POST, the -X is totally superfluous or perhaps even wrong. The show technician who wrote this copied a bad example…
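Since the show’s script calls curl from Python, here is a rough sketch of how such an argument list could be put together without -X. The function name build_curl_args, the example.com URL and the field values are all placeholders of mine, not anything read off the screen.

```python
import shlex

def build_curl_args(url, fields, cookie_file=None):
    """Build a curl argument list for a form POST, without -X."""
    args = ["curl", "-i"]
    if cookie_file is not None:
        # No "=" in the value, so curl reads cookies from this file.
        args += ["-b", cookie_file]
    for name, value in fields.items():
        # Each -d adds one name=value pair to the request body; using -d
        # at all is what makes curl pick POST as the method.
        args += ["-d", f"{name}={value}"]
    args.append(url)
    return args

args = build_curl_args(
    "https://example.com/purchase",  # placeholder URL
    {"amountOfCoins": "1", "coinAddress": "placeholder"},
)
print(shlex.join(args))
```

The resulting list could be handed to subprocess.run as is; building a list instead of a single string also avoids shell quoting surprises.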

The -b that follows is fun. It sets a specific cookie to be sent in the outgoing HTTP request. The random look of this cookie makes it smell like a session cookie of some sort, which of course you’d rarely hard-code in a script like this and expect to be of use at a later point. (Details unfold later.)

Season 4, episode 10

Lucas Pardue followed up with this second sighting of curl from episode 10, at about 23:24. It appears that this might be the Python program from episode 8, now made to run on, or at least with, a mobile phone. I would guess this is a session logged in somewhere else.

In this shot we can see the command line again from episode 8.

We learn something more here. The -b option didn’t specify a cookie – because there’s no = anywhere in the argument following. It actually specified a file name. Not sure that makes anything more sensible, because it seems weird to purposely use such a long and random-looking filename to store cookies in…
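The rule curl applies to the -b argument is simple enough to sketch in a few lines. The function name classify_cookie_arg and the sample strings are made up for illustration; this mimics the documented behavior and is not curl’s actual code.

```python
def classify_cookie_arg(arg: str) -> str:
    """Mimic how curl interprets the argument given to -b."""
    # With an "=" the argument is literal cookie data ("name=value");
    # without one, curl treats it as a file name to read cookies from.
    return "cookie data" if "=" in arg else "file name"

print(classify_cookie_arg("session=abc123"))
print(classify_cookie_arg("a1b2c3d4e5f6"))
```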

Here we also see that in this POST request it passes on a bank account number, a “coin address” and amountOfCoins=3684210526.31579 to this URL: https://buy-crypto-coin.net/purchase, and it gets 200 OK back from an HTTP/1.1 server.

I tried it

curl -i -k -X POST -d bankAccountNumber=8647389203882 -d coinAddress=1MbwAEKJCtPYpLPxEkUmZxwjk63nQrpbXo -d amountOfCoins=3684210526.31579 https://buy-crypto-coin.net/purchase

I don’t have the cookie file so it can’t be repeated completely. What did I learn?

First: OpenSSL 1.1.1 doesn’t even want to establish a TLS connection against this host and says dh key too small. So in order to continue this game I switched to a curl built with a TLS library that didn’t complain about this silly server.

Next: I learned that the server responding on this address (because there truly is an HTTPS server there) doesn’t have this host name in its certificate, so -k is truly required to make curl speak to this host!

Then finally it didn’t actually do anything fun that I could notice. How boring. It just responded with a 301 and Location: http://www.buy-crypto-coin.net. Notice how it redirects away from HTTPS.
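A redirect that drops from HTTPS to plain HTTP is easy to spot programmatically. Here is a small sketch using Python’s standard urllib.parse; the helper name is_https_downgrade is my own invention, and it only compares URL schemes.

```python
from urllib.parse import urljoin, urlsplit

def is_https_downgrade(request_url: str, location: str) -> bool:
    """Return True if a redirect moves from https:// to http://."""
    # Location may be relative, so resolve it against the request URL.
    target = urljoin(request_url, location)
    return (urlsplit(request_url).scheme == "https"
            and urlsplit(target).scheme == "http")

print(is_https_downgrade("https://buy-crypto-coin.net/purchase",
                         "http://www.buy-crypto-coin.net"))
```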

What’s on that site? A rather good-looking fake cryptocurrency market site. The links at the bottom all go to various NBC Universal and USA Network URLs, which I presume are the companies behind the TV series. I saved a screenshot below just in case it changes.