libcurl is thread-safe

That’s the primary message that we push and that’s important to remember. You can write a multi-threaded application that does concurrent Internet transfers with libcurl in as many threads as you like and they fly just fine.

But

But there are nuances and details, of course, and the devil is always in those. The main obstacle that now and again causes problems for users is the curl_global_init() function. But how come?

curl_global_init

Back in the day, libcurl developers realized that many of the third party libraries we work with, TLS libraries in particular, feature init functions that need to be called first, before any other function in those libraries is called. Those init functions are typically marked as not thread-safe, so we have to call them knowing that no other thread calls them at the same time. This was the case for GnuTLS (before version 3.3.0) and for OpenSSL (before version 1.1.0), among others.

In order for libcurl to adhere to those restrictions, which weren’t our own inventions, we added a function to libcurl called curl_global_init() that then in itself inherited those non-thread-safe characteristics. We documented the function as not thread-safe.

Time passed, and as we now had a global initialization function that was also marked not thread-safe, it became an attractive place to add more functionality for the library: other global initializations that then weren’t thread-safe either, as there wasn’t any point in making them so when the entire function wasn’t thread-safe to begin with.

The problems

Having a global init function that is not thread-safe has caused problems for users, mostly in plugin-like use cases where you use libcurl but can’t know if you’re the only user of it in the process.
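A minimal sketch of the usual workaround, assuming POSIX threads: funnel every call through pthread_once() so the init runs exactly once no matter how many threads race for it. The names plugin_ensure_init(), plugin_demo() and stand_in_global_init() are hypothetical, and the stand-in only counts calls where a real program would call curl_global_init().

```c
#include <pthread.h>

static pthread_once_t plugin_once = PTHREAD_ONCE_INIT;
static int plugin_init_calls; /* how many times the stand-in init ran */

/* Hypothetical stand-in for a non-thread-safe global init function.
   A real plugin would call curl_global_init() here instead. */
static void stand_in_global_init(void)
{
  plugin_init_calls++;
}

/* Every thread calls this before touching the library. */
void plugin_ensure_init(void)
{
  pthread_once(&plugin_once, stand_in_global_init);
}

static void *plugin_worker(void *arg)
{
  (void)arg;
  plugin_ensure_init();
  return NULL;
}

/* Starts up to 16 racing threads and returns how often the init ran. */
int plugin_demo(int nthreads)
{
  pthread_t tid[16];
  int started = 0;
  int i;

  if(nthreads > 16)
    nthreads = 16;
  for(i = 0; i < nthreads; i++) {
    if(pthread_create(&tid[started], NULL, plugin_worker, NULL) == 0)
      started++;
  }
  for(i = 0; i < started; i++)
    pthread_join(tid[i], NULL);
  return plugin_init_calls;
}
```

Note the limitation, which is exactly the plugin problem: this only serializes callers that go through plugin_ensure_init(). Another module in the same process that calls the init function directly is not covered.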

We’ve then mostly been longing for better days and blamed the third party libraries that forced us into this corner.

Third parties shaped up, we didn’t

One day in recent times, when we looked at which third party libraries a typical libcurl user uses on a modern system, we saw that they’ve all fixed their init functions! OpenSSL and GnuTLS, which once were part of the original reasons for this function, have fixed their issues. They no longer have thread-unsafe init functions.

But libcurl still does! 🙁

While we were initially pushed into this unfortunate corner because of limitations in third party libraries, we have since added our own initializations to that function, and those aren’t thread-safe. Now that the third party libraries have done the right thing over time, we find ourselves no longer able to put the blame on others. Now we need to clean up our own backyard!

Fix it!

In libcurl 7.69.0 we’ve started this journey with two distinct changes. The goal is to make the function thread-safe under the condition that libcurl is built with only thread-safe dependencies, and we should make configure etc check if that’s the case.

1: EINTR handling

Since libcurl 7.30.0, we’ve provided a flag in the curl_global_init() function to let libcurl users ask for EINTR to actually abort internal loops. Starting now, that flag has no meaning and this is now default behavior. No need to store this state globally anywhere.

2: Working IPv6

At least in the past, it has been common for systems to be IPv6-capable at build time yet unable to actually create IPv6 sockets, and therefore unable to use IPv6. This was previously checked for once, in the global init, and IPv6 was then disabled for everyone. Without a global state, we’ve been forced to move this check, and it is now instead done for every created multi handle. A minuscule performance hit for thread safety.
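A minimal sketch of such a run-time probe, assuming a POSIX sockets API: try to create an IPv6 socket and see if it works. The function name demo_ipv6_works() is made up for this example, and libcurl’s own check may differ in detail.

```c
#include <sys/types.h>
#include <sys/socket.h>
#include <unistd.h>

/* Returns 1 if an IPv6 socket can be created right now, 0 otherwise. */
int demo_ipv6_works(void)
{
  int s = socket(AF_INET6, SOCK_DGRAM, 0);
  if(s < 0)
    return 0; /* built with IPv6 support, but it is unusable at run time */
  close(s);
  return 1;
}
```

The probe costs one socket() and one close() call, which is why repeating it per handle instead of once per process is cheap.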

Left to do until completely thread-safe

The transition isn’t completed. The low hanging fruit has been picked; here are some remaining issues to solve:

When is it thread-safe?

Since curl can be built with a number of different third party libraries, including old versions of them, we need to make the configure script know which versions of which libraries are safe so that it can tell. But how are libcurl application authors supposed to know? Can we figure out a way to tell them?

curl_version*

Both curl_version() and curl_version_info() store information in static buffers and return pointers to that memory. The buffers are currently set up in the global init, so the functions work safely from multiple threads today, but we probably need to create new, alternative versions of them that instead allocate heap memory to return the info in. Or possibly store the info in memory associated with a handle.

Update: Patrick Monnerat made me realize that a possibly even better way to fix them is to make sure they always generate the same output, so that repeated or concurrent invocations are fine.
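As a sketch of the first idea, returning the info in memory the caller owns rather than in a shared static buffer, a reentrant variant could look roughly like this. The function demo_version_r() and the "demolib/1.0" string are invented for the example and are not part of libcurl.

```c
#include <stdio.h>
#include <string.h>

/* Writes a version string into the caller's buffer.
   Returns 0 on success, -1 if the buffer is too small.
   No shared state is touched, so concurrent calls do not interfere. */
int demo_version_r(char *buf, size_t len)
{
  int n = snprintf(buf, len, "%s/%d.%d", "demolib", 1, 0);
  return (n >= 0 && (size_t)n < len) ? 0 : -1;
}
```

Since every call produces identical bytes, this variant also happens to satisfy the "same output every time" property from the update.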

Reference counter

There’s a counter counting calls to curl_global_init() so that the corresponding number of calls to curl_global_cleanup() is required before things are actually cleaned up.

This is a hard nut to crack without a global context and without mutex locks. I haven’t yet figured out how to solve this. If you have ideas, I’m listening!
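To show what the counter has to guarantee, here is a sketch that takes the easy way out and uses a statically initialized pthread mutex, which is exactly the kind of dependency that can’t be assumed on every platform. All names (demo_global_init() and friends) are hypothetical, and the setup and teardown work is replaced by counters.

```c
#include <pthread.h>

static pthread_mutex_t demo_lock = PTHREAD_MUTEX_INITIALIZER;
static unsigned int demo_refs;  /* init calls not yet matched by cleanup */
static int demo_setup_runs;     /* times the one-time setup ran */
static int demo_teardown_runs;  /* times the final teardown ran */

int demo_global_init(void)
{
  pthread_mutex_lock(&demo_lock);
  if(demo_refs++ == 0)
    demo_setup_runs++; /* the real one-time setup would go here */
  pthread_mutex_unlock(&demo_lock);
  return 0;
}

void demo_global_cleanup(void)
{
  pthread_mutex_lock(&demo_lock);
  if(demo_refs > 0 && --demo_refs == 0)
    demo_teardown_runs++; /* the real teardown would go here */
  pthread_mutex_unlock(&demo_lock);
}
```

The lock matters for more than the counter itself: a second caller must not return from init before the first caller’s setup has finished, and a plain atomic counter alone does not give that.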

When?

There’s no fixed time schedule for when these remaining nits are supposed to be fixed, but I hope to work on them going forward and I will appreciate all the help I can get. If things just progress, I would imagine we can end 2020 with a libcurl with these flaws fixed!

Oh, and we also really need to make sure that we don’t simultaneously come up with new thread-unsafe functionality for the init function.

Credits

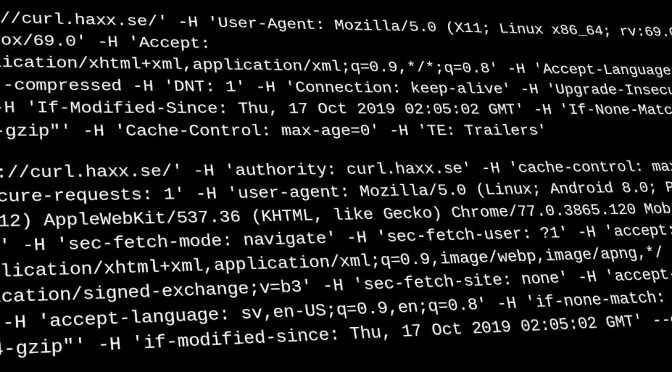

Top image by Andreas Lischka from Pixabay